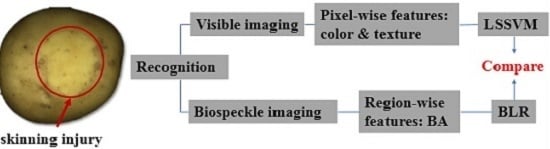

CCD-Based Skinning Injury Recognition on Potato Tubers (Solanum tuberosum L.): A Comparison between Visible and Biospeckle Imaging

Abstract

1. Introduction

2. Materials and Methods

2.1. Sample Preparation

2.2. Image Acquisition Systems

2.2.1. Visible Imaging

2.2.2. Biospeckle Imaging

2.3. Data Processing Methods

2.3.1. Visible Imaging

2.3.2. Biospeckle Imaging

2.4. Statistical Analysis

3. Results

3.1. Experiment Results Obtained from Visible Imaging

3.2. Experiment Results Obtained from Biospeckle Imaging

3.3. Comparison of Classification Results Based on Visible and Biospeckle Imaging

4. Discussions

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| CCD | Charge-Coupled Device |
| ROI | Region of Interest |
| BA | Biospeckle Activity |
| DT-CWT | Dual-tree Complex Wavelet Transform |
| GLCM | Gray Level Co-occurrence Matrix |
| THSP | Time History of the Speckle Pattern |
| IM | Inertia Moment |
| LS-SVM | Least Squares Support Vector Machine |
| BLR | Binary Logistic Regression |
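Two of the abbreviations above, THSP and IM, name a computation that is compact enough to sketch. The following is a minimal illustration, assuming the co-occurrence-matrix definition of the inertia moment that is commonly used in the biospeckle literature, with each matrix row normalized to sum to one. The function name `inertia_moment`, the `levels` parameter, and the row normalization are assumptions for illustration; the authors' exact variant is not stated here.

```python
def inertia_moment(thsp, levels=256):
    """Inertia moment (IM) of a THSP.

    thsp: list of rows, one row per pixel, each row holding that pixel's
          integer gray level in successive frames (hypothetical layout).
    """
    # Count how often gray level i is followed by gray level j one frame later.
    counts = [[0] * levels for _ in range(levels)]
    for row in thsp:
        for a, b in zip(row, row[1:]):
            counts[a][b] += 1

    im = 0.0
    for i, row_counts in enumerate(counts):
        row_total = sum(row_counts)
        if row_total == 0:
            continue  # gray level i never occurs as a starting value
        for j, n in enumerate(row_counts):
            if n:
                # Row-normalized entry weighted by squared distance from the diagonal.
                im += (n / row_total) * (i - j) ** 2
    return im
```

A pixel whose intensity never changes contributes only to the diagonal of the matrix, so its IM is zero; the more the intensity jumps between frames, the larger the IM.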


| Category | | Number of Features | Description |
|---|---|---|---|
| Color | RGB | 6 | Mean values and standard deviations of three color channels of RGB |
| Texture | GLCM | 8 | Mean values and standard deviations of ASM (Angular Second Moment), ENT (Entropy), INE (Inertia) and COR (Correlation) |
| Texture | Gabor | 108 | Twelve filters with 3 scales and 4 orientations, with one image divided into 3 × 3 image blocks |
| Texture | DT-CWT | 12 | Real and imaginary images at approximately ±15°, ±45° and ±75°, respectively |
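The color and GLCM rows of the feature table can be illustrated with a short sketch. It uses only the Python standard library and is not the authors' implementation. It assumes that the "mean values and standard deviations" of the GLCM statistics are taken over four directions (0°, 45°, 90°, 135°) at a pixel distance of 1, which the table does not state; all function names are hypothetical.

```python
import math
from statistics import mean, pstdev

def rgb_features(pixels):
    """6 color features: mean and standard deviation of R, G and B."""
    feats = []
    for channel in range(3):
        values = [p[channel] for p in pixels]
        feats += [mean(values), pstdev(values)]
    return feats

def glcm(image, levels, dx, dy):
    """Normalized co-occurrence matrix for one offset (image at least 2 x 2)."""
    counts = [[0] * levels for _ in range(levels)]
    total = 0
    for r, row in enumerate(image):
        for c, value in enumerate(row):
            r2, c2 = r + dy, c + dx
            if 0 <= r2 < len(image) and 0 <= c2 < len(row):
                counts[value][image[r2][c2]] += 1
                total += 1
    return [[n / total for n in row] for row in counts]

def glcm_stats(p):
    """ASM, entropy, inertia and correlation of a normalized GLCM."""
    n = len(p)
    cells = [(i, j, p[i][j]) for i in range(n) for j in range(n) if p[i][j] > 0]
    asm = sum(v * v for _, _, v in cells)
    ent = -sum(v * math.log(v) for _, _, v in cells)
    ine = sum(v * (i - j) ** 2 for i, j, v in cells)
    mu_i = sum(i * v for i, _, v in cells)
    mu_j = sum(j * v for _, j, v in cells)
    var_i = sum(v * (i - mu_i) ** 2 for i, _, v in cells)
    var_j = sum(v * (j - mu_j) ** 2 for _, j, v in cells)
    if var_i > 0 and var_j > 0:
        cov = sum(v * (i - mu_i) * (j - mu_j) for i, j, v in cells)
        cor = cov / math.sqrt(var_i * var_j)
    else:
        cor = 0.0  # correlation is undefined for a constant image; use 0 here
    return asm, ent, ine, cor

# Assumed directions: 0, 45, 90 and 135 degrees at distance 1, as (dx, dy).
OFFSETS = [(1, 0), (1, -1), (0, -1), (-1, -1)]

def glcm_features(image, levels):
    """8 texture features: mean and std of each statistic over the directions."""
    per_direction = [glcm_stats(glcm(image, levels, dx, dy)) for dx, dy in OFFSETS]
    feats = []
    for stat in zip(*per_direction):
        feats += [mean(stat), pstdev(stat)]
    return feats
```

The remaining counts in the table follow from the descriptions: 3 scales × 4 orientations × 9 image blocks gives the 108 Gabor features, and 6 orientations × 2 (real and imaginary) gives the 12 DT-CWT features.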

| | 1 h | 12 h | 1 d | 3 d | 7 d |
|---|---|---|---|---|---|
| Con * | −13.064 ± 5.429 d | 18.832 ± 3.965 c | 30.943 ± 4.464 a,b | 37.436 ± 4.882 a | 28.983 ± 3.249 b |

| | 0 | 1 h | 1 d | 3 d | 5 d | 7 d |
|---|---|---|---|---|---|---|
| Mean value * | 2421.08 ± 115.323 b,c | 3903.28 ± 390.157 a | 2771.88 ± 82.416 b | 2647.68 ± 114.337 b | 2753.90 ± 129.702 b | 2196.21 ± 192.280 c |

| | 0 | 1 h | 1 d | 3 d | 5 d | 7 d |
|---|---|---|---|---|---|---|
| 10 s | 1 | 1 | 1 | 1 | 1 | 1 |
| 20 s | 0.968 * | 0.931 * | 0.926 * | 0.956 * | 0.963 * | 0.960 * |
| 30 s | 0.966 * | 0.915 * | 0.897 * | 0.957 * | 0.956 * | 0.938 * |
| 40 s | 0.965 * | 0.928 * | 0.921 * | 0.949 * | 0.959 * | 0.948 * |

| Statistics | Visible Imaging, 1 h | Visible Imaging, 1 d | Biospeckle Imaging, 1 h | Biospeckle Imaging, 1 d |
|---|---|---|---|---|
| Mean Value | 75% | 88.33% | 88.1% | 53.8% |
| ANOVA between 1 h and 1 d: F-Value | 8.930 | | 19.044 | |
| ANOVA between 1 h and 1 d: Level of Significance p | 0.024 | | 0.005 | |
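The F-values in the table come from an ANOVA between the 1 h and 1 d groups. As a reminder of what that statistic measures, here is a minimal sketch of the one-way ANOVA F computation in plain Python. The function name `one_way_anova_f` is hypothetical, and the numbers in the usage line are toy inputs, not the study's data.

```python
from statistics import mean

def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    all_values = [x for g in groups for x in g]
    grand_mean = mean(all_values)

    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of the observations around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)

    df_between = len(groups) - 1
    df_within = len(all_values) - len(groups)
    f_value = (ss_between / df_between) / (ss_within / df_within)
    return f_value, df_between, df_within

# Toy example only: two groups of three made-up values.
f_value, df_b, df_w = one_way_anova_f([1, 2, 3], [2, 3, 4])
```

Turning F into a significance level requires the F distribution with the two degrees of freedom returned above; if SciPy is available, `scipy.stats.f_oneway` computes both F and p in one call.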

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).


Gao, Y.; Geng, J.; Rao, X.; Ying, Y. CCD-Based Skinning Injury Recognition on Potato Tubers (Solanum tuberosum L.): A Comparison between Visible and Biospeckle Imaging. Sensors 2016, 16, 1734. https://doi.org/10.3390/s16101734
