Development and Evaluation of Interactive Flipped e-Learning (iFEEL) for Pharmacy Students during the COVID-19 Pandemic

Abstract

1. Introduction

2. Methods

2.1. Study Design

2.2. Software and Applications

2.3. Procedure

2.4. Questionnaires to Evaluate Students’ and Faculty’s Perceptions

2.5. Validity and Reliability of the Surveys

2.6. Ethical Considerations

2.7. Statistical Analysis

3. Results

4. Discussion

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Teaching Technique | Average Attendance (%) * | Mean Pre-Test Score (%) | Mean Post-Test Score (%) | Mean Comprehensive Exam Score (%) |

|---|---|---|---|---|

| Live Paper-based learning method (PICkLE), n = 149 | NA | NA | 90.2 ± 8.26 | NA |

| Remote Paper-based learning method (PICKLE), n = 9 | 97.8 ± 5.0 | 16.4 ± 17.1 | 93.5 ± 8.4 | 83.8 ± 18.7 |

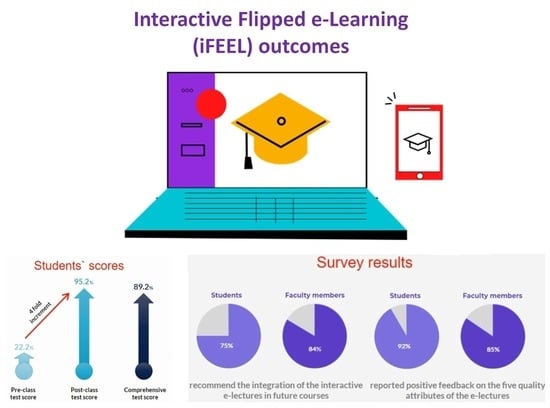

| Remote Interactive-electronic learning method (iFEEL), n = 9 | 95.6 ± 9.9 | 22.2 ± 16.3 | 95.2 ± 7.7 | 89.2 ± 9.2 |

| Statistical test | Independent t-test | Independent t-test | ANOVA followed by LSD | Independent t-test |

| p-value | 0.667 | 0.474 | iFEEl vs. live PICkLE, (0.08), iFEEl vs. remote PICkLE (0.658) | 0.449 |
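Several of the p-values in the table above can be approximately recovered from the reported means, standard deviations and group sizes alone. The sketch below uses only the Python standard library and rests on several assumptions. It assumes that the independent t-tests were Student's pooled-variance tests. It also assumes that the LSD comparisons used the within-group mean square of a one-way ANOVA across all three groups. The helper names (`t_two_tailed_p`, `independent_t`, `lsd_pairwise`) are hypothetical. Because the inputs are rounded summary values, the results can differ slightly from those computed on the raw scores.

```python
import math


def t_two_tailed_p(t: float, df: float, steps: int = 2000) -> float:
    """Two-tailed p-value for Student's t, via Simpson integration of the t density."""
    t = abs(t)
    if t == 0:
        return 1.0
    # Log of the normalising constant of the t density with `df` degrees of freedom
    log_c = (math.lgamma((df + 1) / 2) - math.lgamma(df / 2)
             - 0.5 * math.log(df * math.pi))

    def pdf(x: float) -> float:
        return math.exp(log_c - (df + 1) / 2 * math.log1p(x * x / df))

    # Simpson's rule on [0, |t|]; `steps` must be even
    h = t / steps
    area = pdf(0.0) + pdf(t)
    for i in range(1, steps):
        area += (4 if i % 2 else 2) * pdf(i * h)
    area *= h / 3  # area = P(0 < T < |t|)
    return max(0.0, 1.0 - 2.0 * area)


def independent_t(m1, s1, n1, m2, s2, n2):
    """Assumed Student's pooled-variance t-test from mean, SD and n of two groups."""
    df = n1 + n2 - 2
    pooled_var = ((n1 - 1) * s1 ** 2 + (n2 - 1) * s2 ** 2) / df
    t = (m2 - m1) / math.sqrt(pooled_var * (1 / n1 + 1 / n2))
    return t, t_two_tailed_p(t, df)


def lsd_pairwise(groups):
    """Fisher's LSD p-values for every pair, using the one-way ANOVA within-group mean square.

    groups maps a label to (mean, sd, n).
    """
    k = len(groups)
    df_within = sum(n for _, _, n in groups.values()) - k
    ms_within = sum((n - 1) * sd ** 2 for _, sd, n in groups.values()) / df_within
    labels = list(groups)
    p_values = {}
    for i, a in enumerate(labels):
        for b in labels[i + 1:]:
            (ma, _, na), (mb, _, nb) = groups[a], groups[b]
            t = (ma - mb) / math.sqrt(ms_within * (1 / na + 1 / nb))
            p_values[(a, b)] = t_two_tailed_p(t, df_within)
    return p_values


# Comprehensive exam: remote PICkLE vs. iFEEL (n = 9 per group)
_, p_exam = independent_t(83.8, 18.7, 9, 89.2, 9.2, 9)
print(f"comprehensive exam p = {p_exam:.3f}")

# Post-test: three groups, then LSD pairwise comparisons
post_test = {
    "live PICkLE": (90.2, 8.26, 149),
    "remote PICkLE": (93.5, 8.4, 9),
    "iFEEL": (95.2, 7.7, 9),
}
for pair, p in lsd_pairwise(post_test).items():
    print(pair, f"p = {p:.3f}")
```

Under these assumptions, the comprehensive-exam comparison gives p ≈ 0.45. The post-test LSD comparisons give p ≈ 0.08 (iFEEL vs. live PICkLE) and p ≈ 0.66 (iFEEL vs. remote PICkLE), which are close to the tabulated values. Numerical integration is used here only to keep the example free of third-party dependencies.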

| Question ** | Respondents (Students: 40 responses; Faculty: 32 responses) | I Strongly Support (%) * | I Support (%) * | Neutral (%) * | I Object (%) * | I Strongly Object (%) * | Mean | Standard Deviation |
|---|---|---|---|---|---|---|---|---|
| The lecture material was clear and easy to understand | Students | 72.5% | 22.5% | 5% | 0 | 0 | 4.68 | 0.57 |
| | Faculty | 31.3% | 56.3% | 12.5% | 0 | 0 | 4.19 | 0.64 |
| The audio quality and illustrations were clear | Students | 65.0% | 27.5% | 7.5% | 0 | 0 | 4.58 | 0.64 |
| | Faculty | 37.5% | 56.3% | 6.3% | 0 | 0 | 4.31 | 0.59 |
| The lecture animation helped me to understand the lecture material | Students | 65.0% | 25.0% | 10.0% | 0 | 0 | 4.55 | 0.68 |
| | Faculty | 25.0% | 53.1% | 18.8% | 0 | 0 | 4.00 | 0.76 |
| The video quality was good | Students | 72.5% | 22.5% | 5% | 0 | 0 | 4.68 | 0.57 |
| | Faculty | 34.4% | 50.0% | 12.5% | 0 | 0 | 4.16 | 0.77 |
| The embedded questions were interactive and helped me to understand the lecture | Students | 72.5% | 17.5% | 10% | 0 | 0 | 4.63 | 0.67 |
| | Faculty | 31.3% | 50.0% | 15.6% | 0 | 0 | 4.09 | 0.78 |

| Question | Learning Method | I Strongly Support (%) * | I Support (%) * | Neutral (%) * | I Object (%) * | I Strongly Object (%) * | Mean | Standard Deviation |
|---|---|---|---|---|---|---|---|---|
| Do you support incorporating these learning models in future course offerings? | Live PICkLE (n = 84) | 77.4% | 16.7% | 4.8% | 1.2% | 0 | 4.70 | 0.62 |
| | Remote PICkLE (n = 8) | 62.5% | 0 | 12.5% | 25% | 0 | 4.00 | 1.41 |
| | iFEEL (n = 8) | 75% | 12.5% | 0 | 12.5% | 0 | 4.50 | 1.07 |
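The Mean and Standard Deviation columns in these Likert tables are consistent with a specific scoring scheme. Under that scheme, the five response categories are scored 5 (most favourable) down to 1 (least favourable), and the standard deviation is the sample SD (n − 1 denominator). The sketch below recovers both statistics from one row of percentages under exactly those assumptions. The scoring scheme and the helper name `likert_summary` are assumptions for illustration. They do not describe the authors' analysis software.

```python
from statistics import mean, stdev


def likert_summary(percentages, n):
    """Mean and sample SD of a five-point Likert item reported as percentages.

    percentages: five values ordered from the most favourable category (assumed score 5)
                 to the least favourable (assumed score 1).
    n: number of respondents for that row.
    """
    # Convert each percentage back to a whole number of respondents (round half up)
    counts = [int(p * n / 100 + 0.5) for p in percentages]
    if sum(counts) != n:
        # Rounded percentages that do not account for every respondent are rejected
        raise ValueError(f"percentages imply {sum(counts)} respondents, expected {n}")
    scores = []
    for score, count in zip(range(5, 0, -1), counts):
        scores.extend([score] * count)
    return mean(scores), stdev(scores)  # stdev uses the n - 1 (sample) denominator


# Remote PICkLE row of the "future course offerings" item (n = 8)
m, sd = likert_summary([62.5, 0, 12.5, 25, 0], 8)
print(f"mean = {m:.2f}, SD = {sd:.2f}")
```

Running it prints `mean = 4.00, SD = 1.41`, which matches the Remote PICkLE row. The iFEEL row (`[75, 12.5, 0, 12.5, 0]`, n = 8) likewise gives 4.50 and 1.07. The function raises an error for rows whose rounded percentages do not add up to the stated number of respondents, rather than silently summarising them.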

| Question | Learning Method | Excellent (%) * | Good (%) * | Acceptable (%) * | Weak (%) * | Very Weak (%) * | Mean | Standard Deviation |
|---|---|---|---|---|---|---|---|---|
| How well do you remember the basic information you studied in the course? | Live PICkLE (n = 85) | 12.9% | 57.6% | 24.7% | 2.4% | 2.4% | 3.76 | 0.80 |
| How would you rate your understanding of the lecture material? | Remote PICkLE (n = 8) | 62.5% | 37.5% | 0 | 0 | 0 | 4.63 | 0.52 |
| | iFEEL (n = 8) | 75% | 12.5% | 12.5% | 0 | 0 | 4.63 | 0.74 |

| Question | Learning Method | Very Useful (%) * | Useful (%) * | Neutral (%) * | Not Useful (%) * | Absolutely Useless (%) * | Mean | Standard Deviation |
|---|---|---|---|---|---|---|---|---|
| How useful was studying this course in groups? | Live PICkLE (n = 85) | 57.6% | 30.6% | 8.2% | 1.2% | 2.4% | 4.40 | 0.88 |
| How useful was this model in developing your personal skills (teamwork, the ability to negotiate and persuade, and decision-making)? | Remote PICkLE (n = 8) | 50% | 37.5% | 12.5% | 0 | 0 | 4.38 | 0.74 |
| How useful were the “e-lectures” in motivating you to review the scientific material before attending the lecture? | iFEEL (n = 8) | 75% | 12.5% | 12.5% | 0 | 0 | 4.63 | 0.74 |
| How useful were the “e-lectures” in motivating you to focus during the lecture? | iFEEL (n = 8) | 75% | 12.5% | 12.5% | 0 | 0 | 4.63 | 0.74 |

| Question | Remote PICkLE * | iFEEL * | Both Are the Same * | None of Them * |
|---|---|---|---|---|
| Which method do you prefer for teaching this course in terms of clarification and retention of the information? (n = 8) | 12.5% | 75% | 12.5% | 0 |
| Which method do you prefer for teaching this course in terms of visualizing the instruments and how they work? (n = 8) | 0 | 87.5% | 12.5% | 0 |

| Characteristic | Category | Percentage (%) * |
|---|---|---|
| Gender | Male | 62.5 |
| | Female | 37.5 |
| Academic rank | Professor | 3.1 |
| | Associate Professor | 15.6 |
| | Assistant Professor | 68.8 |
| | Lecturer | 12.5 |
| | Teaching Assistant | 0 |
| Academic degree | Ph.D. | 87.5 |
| | M.Sc. | 12.5 |
| | Pharm.D. | 0 |
| | Bachelor | 0 |
| | M.D. | 0 |

| Question | Very Helpful (%) * | Helpful (%) * | Neutral (%) * | Not Helpful (%) * | Not Completely Helpful (%) * | Mean | Standard Deviation |
|---|---|---|---|---|---|---|---|
| What is the expected effect of using this model in motivating the student to peruse the scientific material before attending the lecture? (n = 32) | 25.0 | 65.1 | 3.1 | 6.3 | 0 | 4.09 | 0.73 |
| What is the expected effect of using this model in motivating the student to focus during the lecture? (n = 32) | 40.6 | 46.9 | 9.4 | 3.1 | 0 | 4.25 | 0.76 |

| Question | Very Useful (%) * | Useful (%) * | Neutral (%) * | Not Useful (%) * | Not Completely Useful (%) * | Mean | Standard Deviation |
|---|---|---|---|---|---|---|---|
| How useful do you consider the “visual instructional videos and animated illustrations” in understanding the material (especially material that needs practical skill or visual illustration)? (n = 32) | 53.1 | 40.6 | 6.3 | 0 | 0 | 4.47 | 0.62 |

| Question | I Strongly Support (%) * | I Support (%) * | Neutral (%) * | I Object (%) * | I Strongly Object (%) * | Mean | Standard Deviation |
|---|---|---|---|---|---|---|---|
| Do you support the integration of this type of interactive e-lectures in the courses you teach? (n = 32) | 37.5 | 46.9 | 9.4 | 3.1 | 3.1 | 4.13 | 0.94 |

| Item | Initial Codes | Number of References | Main Themes |
|---|---|---|---|
| Advantages | | 2 | Self-directed learning |
| | | 2 | Effective comprehension |
| | | 2 | Enhancing interpersonal skills |
| Disadvantages | | 2 | Time-related issues |
| | | 1 | Difficulty in comprehension |
| | | 1 | Lack of hands-on training for practical aspects |

| Item | Initial Codes | Number of References | Main Themes |
|---|---|---|---|
| Advantages | | 3 | Appropriate format/structure |
| | | 1 | Flexible ways of learning |
| Disadvantages | | 1 | Lack of hands-on training for practical aspects |
| | | 1 | Lack of dedication |

| Item | Initial Codes | Number of References | Main Themes |
|---|---|---|---|
| Advantages | | 2 | Modern teaching/learning strategy |
| | | 12 | Appropriate format/structure |
| | | 9 | Engaging and interactive |
| | | 3 | Flexibility |
| | | 3 | Student-centered |
| | | 2 | Time effective |
| Disadvantages | | 5 | Time-related issues |
| | | 4 | Technical issues |
| | | 4 | Subject/topic issues |
| | Monitoring system is required to check compliance | 4 | Compliance issues |
| | | 5 | Poor comprehension and interaction |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Shahba, A.A.; Alashban, Z.; Sales, I.; Sherif, A.Y.; Yusuf, O. Development and Evaluation of Interactive Flipped e-Learning (iFEEL) for Pharmacy Students during the COVID-19 Pandemic. Int. J. Environ. Res. Public Health 2022, 19, 3902. https://doi.org/10.3390/ijerph19073902
