1. Introduction

Liabilities of insurance companies depend on the fair value of the outstanding claims, which typically involve guarantees (also called embedded options). The market consistent value of these guarantees is defined under the risk-neutral measure $\mathbb{Q}$, i.e., they are computed with pricing formulas that agree with the current implied volatility surfaces. To hedge against the risks involved in these claims, insurers often acquire (complex) option portfolios that themselves require market consistent risk-neutral valuation. Furthermore, on 1 January 2016 the so-called Solvency II directive came into effect, which introduced the Solvency Capital Requirement (SCR). The SCR is defined as the minimum amount of capital that an insurer should hold such that it is able to pay its claims over a one-year horizon with a 99.5% probability. The regulator demands that the insurer’s available capital be greater than, or equal to, the SCR. Because the claims typically depend on future market consistent valuation, computing the SCR, and, more generally, performing proper Asset Liability Management (ALM), is a challenging task.

To compute these future market consistent values of the embedded options, insurers require the probability distribution of the values of these embedded options. Typically, this is done by simulating a large number of random future states of the market, after which the different states are valued under the market consistent risk-neutral measure. From the simulated embedded option values, the desired statistics can then be extracted. The future states of the market can be computed by means of risk-neutral models ($\mathbb{Q}$ in $\mathbb{Q}$), or real-world models ($\mathbb{P}$ in $\mathbb{Q}$). Risk-neutral simulations are, for example, used to calculate the Credit Value Adjustment (CVA) (see, e.g., Pykhtin (2012)), which is a traded quantity and should therefore be computed using no-arbitrage arguments. For quantities that are not traded (or hedged), the $\mathbb{Q}$ in $\mathbb{Q}$ approach appears to be incorrect (see, e.g., Stein (2016)), and the future state of the market should be modelled using the real-world measure ($\mathbb{P}$ in $\mathbb{Q}$). Real-world models are calibrated to the observed historical time-series and are typically used to compute non-traded quantities such as Value-at-Risk (VaR).

The risk-neutral measure at $t = 0$ is connected to the observed implied volatility surface and is therefore well-defined. However, the definition of the risk-neutral measure at a future time $t > 0$ is debatable. Despite some relevant research on predicting implied volatility surfaces (see, e.g., Cont et al. (2002), Mixon (2002) and Audrino and Colangelo (2010)), it is common practice to use option pricing models that are only calibrated at time $t = 0$, thereby assuming that the risk-neutral measure is independent of the state of the market (see, for example, Bauer et al. (2010) and Devineau and Loisel (2009)). This is, however, not in line with historical observations, which show that the implied volatility surface does depend on the state of the market. Another drawback of this approach is that the resulting SCR is pro-cyclical, i.e., the SCR is relatively high when the market is in crisis and relatively low when the market is stable. The undesired effect of pro-cyclicality is that it can aggravate a downturn (Bikker and Hu 2015).

In this paper, we investigate the impact of relaxing the assumption that the risk-neutral measure is independent of the state of the market, and we develop the so-called VIX Heston model, which depends on the current and also on simulated implied volatilities. This approach, which we have named here the approach, takes into account the measure information at time and simulates risk-neutral model parameters (thus, future implied volatility surfaces are obtained by means of simulation) based on historically observed relations with some relevant market variables such as the VIX index.

As is well known, the VIX index is a volatility measure for the S&P-500 index, which is calculated by the Chicago Board Options Exchange (CBOE) (see CBOE (2015)), and it is therefore directly linked to the implied volatility surface. Consequently, extracting information from the VIX index is frequently studied (see, e.g., Duan and Yeh (2012)), and our approach in this paper is based on the methodology presented in Singor et al. (2017), where the development of the Heston model parameters for the S&P-500 index options and of the VIX index has been analysed.

The contribution of our present research is two-fold. First, we discuss the justification of using a risk-neutral model with time-dependent parameters. By means of a hedge test, we show that hedging strategies that take into account the changes in the implied volatility surface significantly outperform those strategies that do not, both in simulation and with empirical tests. This leads to the conclusion that the time-dependent risk-neutral measure can be used for the evaluation of future embedded option prices. Secondly, we show the impact of our new approach. For that, we use real data from 2007 to 2016 and compute the SCR on a monthly basis with a constant measure and also with the VIX Heston model where this assumption is relaxed. We conclude that the VIX Heston model predicts out-of-sample implied volatility surfaces accurately and computes more conservative and stable SCRs. The impact of using the new approach on the SCR depends on the initial state of the market and may vary from to in our experiments. Moreover, we see that the SCR that is computed with the VIX Heston model is significantly less pro-cyclical, for example, it is lower in the wake of the 2008 credit crisis, as it incorporates the likely normalisation.

The outline of this paper is as follows. In Section 2, we give the definition of the SCR. In Section 3, we explain the dynamic VIX Heston model. In Section 4, we present the hedge tests with the corresponding results, followed by Section 5, where we present the numerical VIX Heston results and the impact of using these dynamics on the SCR. Section 6 concludes.

2. Solvency Capital Requirement

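Let us denote the policy’s net income in the interval $[0, t]$ by $I(t)$, which is defined as the cash flows up to time $t$ that are generated under the real-world market. The mathematical definition is given by

$$I(t) = \int_0^t e^{\mu (t-s)}\,\mathrm{d}C(s), \qquad (1)$$

where $\mu$ is the expected return and “$\mathrm{d}C(s)$” denotes the generated cash flow over the interval $[s, s + \mathrm{d}s]$. Similarly, we define the policy’s liabilities by $L(t)$, and they are given by the discounted expected cash flows under the risk-neutral measure in the interval $[t, T]$:

$$L(t) = \mathbb{E}^{\mathbb{Q}}\left[\int_t^T e^{-r(s-t)}\,\mathrm{d}C(s) \,\middle|\, \mathcal{F}_t\right], \qquad (2)$$

with risk-free rate $r$, where $\mathcal{F}_t$ denotes the information available at time $t$. Note that a positive/negative cash flow corresponds to an income/liability for the insurer. We define

$$V(t) = I(t) + L(t), \qquad (3)$$

which can be thought of as the policy’s net value at time $t$. The Solvency Capital Requirement is now defined as the 99.5% Value-at-Risk (i.e., the 99.5% quantile) of the one-year loss distribution under the real-world measure, i.e.,

$$\mathrm{SCR} = \mathrm{VaR}^{\mathbb{P}}_{99.5\%}\left(V(0) - \tilde{V}(1)\right). \qquad (4)$$

Here, $\tilde{V}(1)$ is defined as the discounted value of $V(1)$.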

Guaranteed Minimum Accumulation Benefit

This section is dedicated to deriving the fund dynamics and the SCR of a frequently used guarantee in the insurance industry, namely the Guaranteed Minimum Accumulation Benefit (GMAB) variable annuity rider.

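We assume that the fund only contains stocks, but the derivation is similar when different assets are combined. We denote the stock and fund value by $S(t)$ and $F(t)$, respectively, and define the initial premium by $G$. We assume that the payout at maturity $T$ is at least equal to the initial premium, in other words,

$$\text{payout at } T = \max\{F(T), G\}.$$

The dynamics of the fund are very similar to the stock dynamics, except for a fee $\alpha$, which is deducted from the fund as a payment to the insurer. This fee can be thought of as a dividend yield. Following Milevsky and Salisbury (2001), we obtain

$$\frac{\mathrm{d}F(t)}{F(t)} = \frac{\mathrm{d}S(t)}{S(t)} - \alpha\,\mathrm{d}t.$$

The specific dynamics depend on the assumptions regarding the stock price $S(t)$. Here, we assume the Black–Scholes model (geometric Brownian motion, GBM) with expected return $\mu$ and volatility $\sigma$ under the real-world measure $\mathbb{P}$, which yields

$$\mathrm{d}F(t) = (\mu - \alpha)F(t)\,\mathrm{d}t + \sigma F(t)\,\mathrm{d}W^{\mathbb{P}}(t).$$

Moreover, for the valuation, a risk-neutral Heston model is implemented, leading to

$$\begin{aligned}
\mathrm{d}F(t) &= (r - \alpha)F(t)\,\mathrm{d}t + \sqrt{v(t)}\,F(t)\,\mathrm{d}W_S^{\mathbb{Q}}(t),\\
\mathrm{d}v(t) &= \kappa\big(\bar{v} - v(t)\big)\,\mathrm{d}t + \gamma\sqrt{v(t)}\,\mathrm{d}W_v^{\mathbb{Q}}(t), \qquad \mathrm{d}W_S^{\mathbb{Q}}(t)\,\mathrm{d}W_v^{\mathbb{Q}}(t) = \rho\,\mathrm{d}t,
\end{aligned}$$

with the Heston parameters as introduced in Section 3.1.

The income is generated by the accumulated fees, hence

$$I(t) = \int_0^t e^{\mu(t-s)}\,\alpha F(s)\,\mathrm{d}s.$$

The liabilities, on the other hand, depend on the final value of the fund, as follows:

If $F(T) \ge G$, the policyholder receives $F(T)$ and the insurer has no liabilities.

If $F(T) < G$, the policyholder receives $G$ and the liabilities of the insurer are equal to $G - F(T)$.

Moreover, the insurer continues to claim future fees; hence, according to Equation (2), we can write the liabilities as

$$L(t) = -P(t) + \mathbb{E}^{\mathbb{Q}}\left[\int_t^T e^{-r(s-t)}\,\alpha F(s)\,\mathrm{d}s \,\middle|\, \mathcal{F}_t\right],$$

where $P(t)$ denotes the value of a European put option on the fund at time $t$ with strike price $G$ and dividend yield $\alpha$. We can substitute these definitions into Equation (4) to obtain

$$\mathrm{SCR} = \mathrm{VaR}^{\mathbb{P}}_{99.5\%}\left(A(0) - P(0) - \tilde{A}(1) + \tilde{P}(1)\right),$$

with

$$A(t) = I(t) + \mathbb{E}^{\mathbb{Q}}\left[\int_t^T e^{-r(s-t)}\,\alpha F(s)\,\mathrm{d}s \,\middle|\, \mathcal{F}_t\right],$$

which can be thought of as the sum of the realized and expected fees, and where the tilde denotes a discounted value. In this case, the SCR depends on the real-world distribution of $F(1)$, which determines $\tilde{A}(1)$ and also influences the risk-neutral valuation of $\tilde{P}(1)$.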
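The expected-fee part of $A(t)$ can be written in closed form, which is why it follows directly from the simulated fund value. A short derivation, assuming that under $\mathbb{Q}$ the fund $F$ drifts at $r - \alpha$ (the fee rate $\alpha$ acting as a dividend yield, $r$ the risk-free rate), so that $\mathbb{E}^{\mathbb{Q}}[F(s) \mid \mathcal{F}_t] = F(t)e^{(r-\alpha)(s-t)}$ for $s \ge t$, and interchanging expectation and integral:

$$
\mathbb{E}^{\mathbb{Q}}\left[\int_t^T e^{-r(s-t)}\,\alpha F(s)\,\mathrm{d}s \,\middle|\, \mathcal{F}_t\right]
= \alpha \int_t^T e^{-r(s-t)}\,F(t)\,e^{(r-\alpha)(s-t)}\,\mathrm{d}s
= \alpha F(t)\int_t^T e^{-\alpha(s-t)}\,\mathrm{d}s
= F(t)\left(1 - e^{-\alpha(T-t)}\right).
$$

Under this assumption the result does not depend on the volatility specification (GBM or Heston), so only the put value $P(t)$ requires a genuine risk-neutral valuation at the future time point.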

Variable annuity riders require a risk-neutral valuation at the (future) time $t = 1$, as the liabilities are, by definition, conditional expectations under the risk-neutral measure. In the case of a GMAB rider, an analytic expression is available, but in general no such expression exists. Hence, the evaluation of the conditional expectation typically requires an approximation; in that case, the Least-Squares Monte Carlo algorithm is often used to approximate the conditional expectations at $t = 1$. The experiments presented in this paper were also performed for the Guaranteed Minimum Withdrawal Benefit (GMWB) variable annuity rider. As the results and conclusions were very similar to those for the GMAB, in this paper, we restrict ourselves to the GMAB variable annuity.

The distributions of $\tilde{A}(1)$ and $\tilde{P}(1)$ in Equation (12) can be obtained by means of a Monte Carlo simulation:

First, the net policy value $V(0) = A(0) - P(0)$ is determined, according to Equation (3).

Thereafter, the fund value $F(t)$, along with other explanatory variables, is simulated under the real-world measure up to time $t = 1$.

Subsequently, the values of $A(1)$ and $P(1)$ are evaluated for each trajectory. The value of $A(1)$ can be obtained directly from the trajectory of $F$; however, $P(1)$ requires a risk-neutral valuation, for which the risk-neutral measure at time $t = 1$ is required.

Finally, the simulated values are combined to construct the loss distribution. The SCR corresponds to the 99.5% quantile of this distribution.

Some more detail about the Monte Carlo simulation is provided in Appendix A.
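The Monte Carlo steps above can be sketched in a few lines. This is a simplified, hypothetical stand-in rather than the paper’s implementation: it assumes a monthly real-world GBM grid, illustrative parameter values, the closed-form expected fees $F(t)(1 - e^{-\alpha(T-t)})$, discounting of $V(1)$ at the risk-free rate, and, instead of the Heston model, a Black–Scholes put with a constant volatility `sigma_q` for $P(1)$. The names `gmab_scr` and `bs_put` are illustrative.

```python
import numpy as np
from scipy.stats import norm


def bs_put(F, G, r, q, sigma, tau):
    """Black-Scholes put on the fund (strike G, dividend yield q); a stand-in for Heston."""
    d1 = (np.log(F / G) + (r - q + 0.5 * sigma**2) * tau) / (sigma * np.sqrt(tau))
    d2 = d1 - sigma * np.sqrt(tau)
    return G * np.exp(-r * tau) * norm.cdf(-d2) - F * np.exp(-q * tau) * norm.cdf(-d1)


def gmab_scr(G=100.0, T=10.0, alpha=0.015, mu=0.06, sigma_p=0.2, r=0.02,
             sigma_q=0.2, n_paths=100_000, n_steps=12, seed=1):
    """Hypothetical one-year SCR of a GMAB rider: 99.5% quantile of V(0) - exp(-r) V(1)."""
    rng = np.random.default_rng(seed)
    dt = 1.0 / n_steps

    # Step 1: net policy value at t = 0 (no realized fees yet, F(0) = G).
    V0 = G * (1.0 - np.exp(-alpha * T)) - bs_put(G, G, r, alpha, sigma_q, T)

    # Step 2: real-world GBM for the fund; the fee alpha acts as a dividend yield.
    Z = rng.standard_normal((n_paths, n_steps))
    log_inc = (mu - alpha - 0.5 * sigma_p**2) * dt + sigma_p * np.sqrt(dt) * Z
    F = G * np.exp(np.cumsum(log_inc, axis=1))          # F at dt, 2dt, ..., 1

    # Step 3a: realized fees alpha*F(s) ds, accumulated to t = 1 at rate mu (left-point rule).
    F_left = np.hstack([np.full((n_paths, 1), G), F[:, :-1]])
    s = np.arange(n_steps) * dt
    I1 = (alpha * F_left * np.exp(mu * (1.0 - s)) * dt).sum(axis=1)

    # Step 3b: fee component A(1) and put value P(1) on each trajectory.
    F1 = F[:, -1]
    A1 = I1 + F1 * (1.0 - np.exp(-alpha * (T - 1.0)))
    P1 = bs_put(F1, G, r, alpha, sigma_q, T - 1.0)

    # Step 4: loss distribution and its 99.5% quantile.
    loss = V0 - np.exp(-r) * (A1 - P1)
    return float(np.quantile(loss, 0.995))
```

Calling `gmab_scr()` returns a single number whose Monte Carlo error can be gauged by rerunning with different `seed` values. A dynamic risk-neutral measure would enter through `sigma_q`, which would then become path dependent (e.g., driven by a simulated VIX) instead of a constant.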

3. Dynamic Stochastic Volatility Model

When valuing options, one typically wishes to calibrate a risk-neutral model according to the market’s expectations, which are quantified by the implied volatility surface. This implied volatility surface can be used to extract European option prices for a wide range of maturities and strikes. The market expectation (and thus the volatility surface) is, however, unknown at $t > 0$, and therefore practitioners typically use the implied volatility surface at $t = 0$ to calibrate the risk-neutral model parameters. In this standard approach, these parameters are assumed to be constant over time, i.e., the risk-neutral measure is independent of the real-world measure. Note that this approach is also used to compute risk measures from the Basel accords, such as credit value adjustment (CVA), capital valuation adjustment (KVA) and potential future exposure (PFE) (see Kenyon et al. (2015); Jain et al. (2016); Ruiz (2014)). However, CVA is typically hedged, so for its computation using only the market expectation at $t = 0$ is sufficient.

In our approach, we relax the assumption of independence: the calibrated risk-neutral model parameters are related to the simulated real-world scenarios.

3.1. Heston Model

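We assume the Heston model as a benchmark. The Heston stochastic volatility model (Heston 1993) assumes that the variance of the stock price process follows a CIR process, i.e., under the $\mathbb{Q}$ measure,

$$\begin{aligned}
\mathrm{d}S(t) &= rS(t)\,\mathrm{d}t + \sqrt{v(t)}\,S(t)\,\mathrm{d}W_S(t),\\
\mathrm{d}v(t) &= \kappa\big(\bar{v} - v(t)\big)\,\mathrm{d}t + \gamma\sqrt{v(t)}\,\mathrm{d}W_v(t), \qquad \mathrm{d}W_S(t)\,\mathrm{d}W_v(t) = \rho\,\mathrm{d}t,
\end{aligned}$$

where $r$ denotes the risk-free rate, $\kappa$ the speed of mean-reversion, $\bar{v}$ the long-term variance, $\gamma$ the volatility of variance and $\rho$ the correlation between asset price and variance; $v_0$ denotes the initial variance at the calibration date. The risk-free rate is assumed to be constant throughout this research. To calibrate these parameters according to the market’s expectations, one wishes to minimize the distance between the model’s and market’s implied volatilities. For the Heston model, consider the following search space for the parameters $\Theta = (v_0, \kappa, \bar{v}, \gamma, \rho)$:

$$\Omega = \mathcal{D}_{v_0} \times \mathcal{D}_{\kappa} \times \mathcal{D}_{\bar{v}} \times \mathcal{D}_{\gamma} \times \mathcal{D}_{\rho},$$

where $\mathcal{D}_p$ denotes the search domain for parameter $p$. Using this search space, one is able to find the calibrated parameters at time $t$ by minimizing the sum of squared errors:

$$\hat{\Theta}(t) = \underset{\Theta \in \Omega}{\arg\min} \sum_{i=1}^{N} \Big(\sigma^{\mathrm{mkt}}_i(t) - \sigma^{\mathrm{Heston}}_i(t; \Theta)\Big)^2, \qquad (15)$$

where $\sigma^{\mathrm{mkt}}_i(t)$ and $\sigma^{\mathrm{Heston}}_i(t; \Theta)$ denote the market and model implied volatilities of the $i$-th of $N$ quoted options (strike $K_i$, maturity $T_i$) at time $t$.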

The set of parameters minimizing this expression is considered risk-neutral and reflects the market’s expectations.
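To make the sum-of-squared-errors calibration above concrete, the sketch below prices European calls in the Heston model by Fourier (Gil-Pelaez) inversion, using the numerically stable form of the characteristic function popularised by Albrecher et al. (“the little Heston trap”), converts prices to Black–Scholes implied volatilities and sums the squared implied-volatility errors. The pricing method, the truncation of the integral at `u_max`, the quote format and all function names are assumptions; the paper does not state how its calibration was implemented.

```python
import numpy as np
from scipy.integrate import quad
from scipy.optimize import brentq
from scipy.stats import norm


def heston_cf(u, S0, r, T, v0, kappa, vbar, gamma, rho):
    """Characteristic function E[exp(i u ln S_T)] under Q (stable 'little trap' form)."""
    iu = 1j * u
    b = kappa - rho * gamma * iu
    d = np.sqrt(b**2 + gamma**2 * (iu + u**2))
    g = (b - d) / (b + d)
    e = np.exp(-d * T)
    C = kappa * vbar / gamma**2 * ((b - d) * T - 2.0 * np.log((1.0 - g * e) / (1.0 - g)))
    D = v0 / gamma**2 * (b - d) * (1.0 - e) / (1.0 - g * e)
    return np.exp(iu * (np.log(S0) + r * T) + C + D)


def heston_call(S0, K, r, T, v0, kappa, vbar, gamma, rho, u_max=200.0):
    """European call via Gil-Pelaez inversion: C = S0 P1 - K exp(-rT) P2."""
    args = (S0, r, T, v0, kappa, vbar, gamma, rho)
    lnK, fwd = np.log(K), S0 * np.exp(r * T)

    def p1_integrand(u):  # share-measure probability that S_T > K
        return (np.exp(-1j * u * lnK) * heston_cf(u - 1j, *args) / (1j * u * fwd)).real

    def p2_integrand(u):  # risk-neutral probability that S_T > K
        return (np.exp(-1j * u * lnK) * heston_cf(u, *args) / (1j * u)).real

    P1 = 0.5 + quad(p1_integrand, 0.0, u_max, limit=200)[0] / np.pi
    P2 = 0.5 + quad(p2_integrand, 0.0, u_max, limit=200)[0] / np.pi
    return S0 * P1 - K * np.exp(-r * T) * P2


def bs_call(S0, K, r, T, sigma):
    d1 = (np.log(S0 / K) + (r + 0.5 * sigma**2) * T) / (sigma * np.sqrt(T))
    return S0 * norm.cdf(d1) - K * np.exp(-r * T) * norm.cdf(d1 - sigma * np.sqrt(T))


def implied_vol(price, S0, K, r, T):
    """Black-Scholes implied volatility by root finding on [1e-4, 5]."""
    return brentq(lambda s: bs_call(S0, K, r, T, s) - price, 1e-4, 5.0)


def calibration_sse(params, S0, r, quotes):
    """Sum of squared implied-vol errors; quotes = [(K, T, market_iv), ...]."""
    v0, kappa, vbar, gamma, rho = params
    err = 0.0
    for K, T, iv_mkt in quotes:
        price = heston_call(S0, K, r, T, v0, kappa, vbar, gamma, rho)
        err += (implied_vol(price, S0, K, r, T) - iv_mkt) ** 2
    return err
```

A calibration at a given date could then call `scipy.optimize.minimize(calibration_sse, x0, args=(S0, r, quotes), bounds=...)`, with the bounds playing the role of the search domains $\mathcal{D}_p$. Because each evaluation requires numerical integration, repeating this for every simulated trajectory is expensive, which is the computational concern raised below.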

When the market is subject to changes, its expectations will change accordingly. Hence, the implied volatility surface will evolve dynamically over time. Consequently, the Heston parameters may change over time.

Figure 1 shows the monthly evolution of the Heston parameters from January 2006 to February 2017.

During this calibration procedure, we assumed the speed of mean reversion to be constant; an unrestricted speed of mean reversion led to unstable results and did not significantly improve the accuracy. The other parameters, however, do not appear constant over time. This may give rise to issues in risk-management applications, where one simulates many real-world paths to assess the sensitivity of a portfolio, balance sheet, etc., to the market. In many cases, a risk-neutral valuation is required, which is nested inside the real-world simulation, for example, when the portfolio or balance sheet contains options. Consequently, the future implied volatility surface for each trajectory needs to be known, such that the Heston parameters can be calibrated accordingly. Modelling the implied volatility surface over time is a challenging task (see, e.g., Cont et al. (2002); Mixon (2002); Audrino and Colangelo (2010)), as it quantifies the market’s expectations, which depend on many factors. Moreover, even if the implied volatility surface is modelled, one would still need to perform a costly calibration procedure. Performing this calibration for each of the simulated trajectories would require a significant computational effort. As the Heston model is a parameterization of the implied volatility surface, an attractive alternative is to simulate the Heston parameters directly.

Figure 1 clearly shows that the parameters are time-dependent, which is in contrast with the assumptions of the plain Heston model. In this research, we first search for relations between the risk-neutral parameters and observed real-world market indices, such as the VIX index. When a relation is found, the future risk-neutral parameters can be extracted from a real-world simulation. In this way, by performing a real-world simulation, we can directly forecast the set of Heston parameters within each simulated trajectory. The risk-neutral measure is then conditioned on the simulated state of the market, without the need for a simulated implied volatility surface.

3.2. VIX Heston Model

We have already described the difficulties of modelling the implied volatility surface, or equivalently, the option prices. A different approach is therefore required to calibrate the Heston parameters in simulated markets. The simulated parameter sets should accurately reflect the expectations of the simulated market, for example, by linking the dynamics of the Heston parameters to the dynamics of the market. In Singor et al. (2017), an approach is considered that is based on the assumption of a linear relationship between the VIX index and the Heston parameters. After analysis, it was concluded that:

The initial volatility and the volatility of the volatility are highly correlated to the VIX index, with correlation coefficients of 0.99 and 0.76, respectively.

The long term volatility appears to be correlated to the VIX index trend line (estimated by a Kalman filter) with a correlation coefficient of 0.74.

To this end, the following restrictions are imposed on the Heston model parameters:

where both the speed of mean reversion and the correlation coefficient are assumed to be constant over time. The constant-correlation assumption is justified by the fact that the calibrated correlation displays a mean-reverting pattern and can therefore be approximated by its long-term mean. The constant speed-of-mean-reversion assumption is justified by observations in Gauthier and Rivaille (2009), who argued that increasing the speed of mean reversion has an effect on the implied volatility surface similar to that of decreasing the volatility of variance. Thus, allowing the speed of mean reversion to change over time unnecessarily overcomplicates the model. Moreover, numerical experiments show that an unrestricted speed of mean reversion sometimes leads to unstable results.

The purpose of the restrictions is to accurately reflect the market’s expectations. To this end, we wish to minimize the distance between the observed and predicted implied volatility surfaces. Therefore, we calibrate the constraint parameters with a procedure similar to Equation (15). By changing the parameter set from

to

and summing over all points in time one obtains

with,

By including the VIX index in the real-world simulation, one is able to efficiently evaluate the set of Heston parameters in line with the simulated state of the market. For more information regarding the derivation and properties of the VIX Heston model, we refer the reader to Singor et al. (2017).
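The relations listed above suggest a simple way to read Heston parameters off a simulated VIX path. A minimal sketch, assuming a local-level Kalman filter for the VIX trend line and linear maps from VIX/100 to the initial volatility $\sqrt{v_0}$ and the volatility of variance, and from the trend to the long-term volatility $\sqrt{\bar{v}}$. The functional form, the coefficients in `coef`, the noise variances `q` and `h` and the function names are all hypothetical; the exact restrictions and their calibrated coefficients may differ.

```python
import numpy as np


def local_level_trend(y, q, h, p0=1e6):
    """Kalman filter for y_t = m_t + eps_t (var h), m_t = m_{t-1} + eta_t (var q)."""
    y = np.asarray(y, dtype=float)
    m, p = y[0], p0                 # diffuse start at the first observation
    trend = np.empty_like(y)
    for t, obs in enumerate(y):
        p = p + q                   # predict: random-walk level
        k = p / (p + h)             # Kalman gain
        m = m + k * (obs - m)       # update with the new VIX observation
        p = (1.0 - k) * p
        trend[t] = m
    return trend


def vix_heston_params(vix, coef, kappa, rho, q=0.1, h=4.0):
    """Hypothetical linear VIX -> Heston map; kappa and rho are kept constant."""
    vix = np.asarray(vix, dtype=float)
    trend = local_level_trend(vix, q, h)
    sqrt_v0 = coef["a0"] + coef["b0"] * vix / 100.0      # initial volatility
    gamma = coef["ag"] + coef["bg"] * vix / 100.0        # volatility of variance
    sqrt_vbar = coef["ab"] + coef["bb"] * trend / 100.0  # long-term volatility
    return {"v0": sqrt_v0**2, "gamma": gamma, "vbar": sqrt_vbar**2,
            "kappa": kappa, "rho": rho}
```

Applied to each simulated VIX trajectory, this yields one parameter set per date and path without any recalibration; the coefficients themselves would be fitted once, by summing the implied-volatility errors over all historical dates.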

It is, however, important to stress the different assumptions in the real-world and risk-neutral markets. Risk-neutral valuations are performed under the Heston model, which assumes constant parameters. However, in real-world simulations, we assume the Heston parameters to change over time, according to the simulated state of the market, similar to Figure 1. One could argue that this approach is invalid, since we are violating the assumptions of the risk-neutral market. We therefore discuss a justification of this approach by means of a hedge test. Moreover, to assess the impact of time-dependent Heston parameters, we implement the VIX Heston model as proposed in Singor et al. (2017) in a risk-management application: the Solvency Capital Requirement.

4. Hedge Test

Before implementing the dynamic risk-neutral measure (the VIX Heston model) in a risk-management application, we first test its applicability from a theoretical point of view. For example, in theory, one should be able to hedge against future positions using today’s implied volatility surface. This no longer applies when one assumes a risk-neutral measure that changes over time. To this end, we perform an experimental hedge test that determines which approach is more accurate in terms of future option prices, a dynamic or constant risk-neutral measure.

The plain Heston model assumes

and

to be constant, hence, from a theoretical point of view, it would be redundant to hedge against changes of these parameters. However, due to the dynamic behaviour of the implied volatility surface,

and

will change over time (see

Figure 1). Thus, from an empirical point of view, the option price dynamics are subject to changes of these parameters. To support this claim, we compare three different hedging strategies. The first strategy is the

classical Delta-Vega hedge, which does not take any changes of

and

into account. The replicating portfolio aims at hedging an option “A”, with value

, by holding a certain amount of stocks and a different option with value

(which is called option “B” from this point onwards), i.e.,

where

denotes the risk-free asset (for example, a bank account or a government bond), which grows with constant risk-free rate

r. Note that Option B depends on the same underlying market factors as Option A. Following Bakshi et al. (1997), we impose the so-called minimized variance constraints,

where

refers to the covariation between the two processes and

is the Brownian motion which is independent of

, driving random changes in the volatility. By imposing these constraints, one obtains a portfolio that has no covariation with the underlying asset and its volatility. In other words, changes in the asset’s value and changes in the asset’s volatility will have neither direct nor indirect (through correlations) effect on the portfolio. These constraints give us the following hedge ratios,

Under the assumptions of the Heston model, the portfolio dynamics are given by,

Note that the random components have disappeared from the portfolio. Thus, the portfolio should be insensitive to changes in the market, if it respects the assumptions of the Heston model.
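Working through the two constraints under the Heston dynamics, the correlation terms cancel and the classical hedge reduces to the familiar Delta-Vega ratios: the position in Option B is the ratio of the variance sensitivities of A and B, and the stock position removes what remains of the stock-price sensitivity. A minimal sketch, assuming generic pricing functions `price_a(S, v)` and `price_b(S, v)` (a hypothetical interface; any Heston pricer could be plugged in), central finite differences for the Greeks and a replicating (long) convention for the positions:

```python
from typing import Callable, Tuple

# Hypothetical pricer interface: option value as a function of stock price S and variance v.
Pricer = Callable[[float, float], float]


def delta_vega_ratios(price_a: Pricer, price_b: Pricer, S: float, v: float,
                      h_s: float = 1e-2, h_v: float = 1e-5) -> Tuple[float, float]:
    """Return (n_stock, n_b) such that n_stock * S + n_b * B matches A's exposure to S and v."""

    def d_ds(f: Pricer) -> float:   # central difference in the stock price
        return (f(S + h_s, v) - f(S - h_s, v)) / (2.0 * h_s)

    def d_dv(f: Pricer) -> float:   # central difference in the variance
        return (f(S, v + h_v) - f(S, v - h_v)) / (2.0 * h_v)

    n_b = d_dv(price_a) / d_dv(price_b)            # removes the exposure to the variance
    n_stock = d_ds(price_a) - n_b * d_ds(price_b)  # removes the remaining stock exposure
    return n_stock, n_b
```

In a daily-rebalanced hedge, these ratios would be recomputed at each date with the parameters calibrated on that date, while the pricers themselves keep those parameters fixed.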

Secondly, we assume a model which is similar to the classical Delta-Vega hedge, but with adjusted hedge ratios. We call this strategy the

adjusted Delta-Vega hedge. Assuming a dynamic model (see Appendix B for more details), we can apply Itô’s lemma to obtain

with

. For notational purposes, we rewrite this expression as

where the coefficients are defined as

The hedge ratios now take the correlated components of

and

into account, due to the minimized variance constraints of Equation (20). Using Equation (24), the hedge ratios can be derived, giving

where

and

are defined as in Equation (25) for Option B. The additional stochastic variables

and

follow mean reverting processes (see Equation (A1) in Appendix B), such that the portfolio dynamics are found to be,

The portfolio still depends on the randomness associated with and . However, the randomness associated with and has disappeared, including the random components of and that are correlated to and .

We also consider a strategy that aims at completely hedging against any changes of

and

, by introducing two additional options,

Again, all options depend on the same underlying market factors, but they have different contract details. In this case, we require to be protected against any changes of

,

,

and

, hence we impose

Substituting these constraints leads to a system of equations, which is solved by

By imposing Equation (29), one removes all randomness associated with

,

,

and

. Hence, the portfolio dynamics only depend on deterministic changes under the assumed market dynamics. From this point onwards, we refer to this strategy as the

full hedge strategy.
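The four constraints of the full hedge form a linear system for the positions in the stock and in Options B, C and D. A minimal sketch, assuming the sensitivities of each option with respect to the stock price, the variance and the two additional parameters that are allowed to vary have already been computed (for example, by finite differences) and are passed in as 4-vectors; the function and argument names are hypothetical:

```python
import numpy as np


def full_hedge_holdings(sens_a, sens_b, sens_c, sens_d):
    """Positions (stock, B, C, D) whose combined sensitivities match those of Option A.

    Each sens_* is a 4-vector of partial derivatives with respect to
    (stock price, variance, parameter 1, parameter 2).
    """
    stock = np.array([1.0, 0.0, 0.0, 0.0])   # the stock only responds to its own price
    M = np.column_stack([stock, sens_b, sens_c, sens_d])
    return np.linalg.solve(M, np.asarray(sens_a, dtype=float))
```

`np.linalg.solve` raises `LinAlgError` when the sensitivity matrix is singular, which happens if the hedge options have linearly dependent sensitivities; this is why Options B, C and D need sufficiently different contract details (strikes, maturities).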

4.1. Hedge Test Experiments

In this section, we discuss the results of the hedge test which was explained above. This test experiment indicates which approach is more accurate when evaluating future option prices, a measure which assumes and to be constant or a measure which assumes and to change over time. We thus consider the classical approach, with constant and , and two approaches that assume and to change over time. The different hedging strategies are tested in a simulated market as well as in an empirical market.

The empirical hedge test is based on daily implied volatility surfaces of the S&P-500 European put and call options from January 2006 to February 2014.

4.1.1. Simulated Market

First, we evaluate the hedging strategies in a controlled environment. In this test, we assume

and

to follow the Heston model, with the key difference that

and

are time-dependent mean reverting processes (as in Equation (A1) in Appendix B). This set-up will be informative, as it is in line with historical observations. The processes of

and

are discretized by the Quadratic Exponential (QE) scheme, as proposed in Andersen (2008). Furthermore, the processes of

and

are discretized by the Milstein scheme, while also taking the correlations with

into account. The details of this simulation scheme can be found in Appendix B.
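The QE scheme of Andersen (2008) samples the next variance from a distribution that matches the exact conditional mean and variance of the CIR process: a scaled squared Gaussian when the variance is comfortably away from zero, and a mixture of a point mass at zero and an exponential tail otherwise. A minimal sketch of one variance step, assuming constant speed of mean reversion `kappa`, long-term variance `vbar` and volatility of variance `gamma` over the step and Andersen’s switching threshold `psi_c = 1.5`; the stock step and the Milstein updates of the parameter processes are omitted, and the function name is hypothetical:

```python
import numpy as np


def qe_variance_step(v, dt, kappa, vbar, gamma, rng, psi_c=1.5):
    """One Andersen (2008) QE step for dv = kappa (vbar - v) dt + gamma sqrt(v) dW."""
    v = np.atleast_1d(np.asarray(v, dtype=float))
    e = np.exp(-kappa * dt)
    m = vbar + (v - vbar) * e                                   # exact conditional mean
    s2 = (v * gamma**2 * e * (1.0 - e) / kappa
          + vbar * gamma**2 * (1.0 - e) ** 2 / (2.0 * kappa))   # exact conditional variance
    psi = s2 / m**2
    v_next = np.empty_like(v)

    # Quadratic branch (psi <= psi_c): v_next = a (b + Z)^2 with Z ~ N(0, 1).
    is_quad = psi <= psi_c
    inv = 1.0 / psi[is_quad]
    b2 = 2.0 * inv - 1.0 + np.sqrt(2.0 * inv) * np.sqrt(2.0 * inv - 1.0)
    a = m[is_quad] / (1.0 + b2)
    z = rng.standard_normal(is_quad.sum())
    v_next[is_quad] = a * (np.sqrt(b2) + z) ** 2

    # Exponential branch (psi > psi_c): point mass p at zero plus an exponential tail.
    p = (psi[~is_quad] - 1.0) / (psi[~is_quad] + 1.0)
    beta = (1.0 - p) / m[~is_quad]
    u = rng.uniform(size=(~is_quad).sum())
    # rng.uniform draws from [0, 1), so 1 - u > 0; the tail value is only used where u > p.
    tail = np.log((1.0 - p) / (1.0 - u)) / beta
    v_next[~is_quad] = np.where(u <= p, 0.0, tail)
    return v_next
```

Both branches reproduce the conditional mean $m$ by construction and never produce negative variances, which is the main advantage over an Euler step for the CIR process.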

The performance of the classical Heston Delta-Vega, the adjusted Heston Delta-Vega and the full hedge is compared under the dynamics of this market. The strategies all aim at hedging a short position in a European call option with maturity

years and strike

. Moreover, at the start date of the option,

, we assume

The adjusted Delta-Vega and full hedge involve additional options. These options depend on the same stock as Option A, but the contract details are different, i.e.,

Moreover, we assume the following parameters in the dynamics of the

and

processes,

In reality, these parameters cannot be freely chosen, as they are implied by the market. By analysing the historical behaviour of and , one is able to estimate these SDE parameters. In this case, however, we assume to know these parameters and use them when determining the hedge strategy.

Ideally, the portfolio value should be equal to zero for each point in time, because the initial portfolio value is equal to zero. Every deviation from zero is thought of as a hedge error. Over the entire life of the option we desire the mean and standard deviation of this error to be as close as possible to zero. To this end, we introduce the following error measures for simulation

j,

The overall hedging performance of the

M simulations can be judged by

In the current set-up, these error measures are random variables, as they are determined by means of Monte Carlo simulations. Therefore, we analyse the stability of the error measures across the simulated trajectories by the standard error,

with error measure

E, its mean

and the simulated trajectories

.
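A minimal sketch of how the per-simulation error measures, their averages over the $M$ simulations and the corresponding standard errors might be computed, assuming the per-simulation measures are the time average and the sample standard deviation of the hedge error along each path; the paper’s exact definitions may differ, and the function name is hypothetical:

```python
import numpy as np


def hedge_error_summary(errors):
    """errors[j, k]: hedge error (portfolio value) of simulation j at rebalancing time k.

    Returns {measure: (average over simulations, standard error of that average)}.
    """
    errors = np.asarray(errors, dtype=float)
    n_sims = errors.shape[0]
    per_sim = {
        "mean": errors.mean(axis=1),          # mean hedge error along path j
        "std": errors.std(axis=1, ddof=1),    # its standard deviation along path j
    }
    return {name: (float(e.mean()), float(e.std(ddof=1) / np.sqrt(n_sims)))
            for name, e in per_sim.items()}
```

Since the initial portfolio value is zero, a well-performing strategy should give averages close to zero for both measures, with standard errors small enough that the ranking of the strategies is not a Monte Carlo artefact.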

The performance of the strategies under these assumptions based on

simulations is given in Table 1.

These results show that, in this experiment, it is beneficial to take parameter correlations into account when hedging, in terms of both the mean error and the standard deviation. While still not perfect, the adjusted Heston Delta-Vega hedge is better able to remain risk-neutral on average, and it deviates less from this average. The full hedge performs even better, with a mean approximately equal to zero and a standard deviation equal to or lower than that of any of the other strategies, despite the dynamic behaviour of the market.

The purpose of these hedging strategies is to replicate the value of an option. In the case of the classical Delta-Vega hedge, only and are allowed to change. By respecting the assumptions of the Heston model, we are not fully able to replicate the future option values. On the other hand, when assuming the adjusted Heston model, and are allowed to change as well. Hedging strategies that consider this dynamic behaviour produce more accurate future option price estimates in the current set-up. This indicates the importance of taking dynamic parameters into account when determining future option prices, even when the assumptions of the underlying model are violated. However, note that the comparison is not completely fair, since we specifically assume a Heston market with time-dependent and . It can therefore be expected that a strategy taking these assumptions into account outperforms one that does not. Therefore, to better assess the true performance of these strategies, we also perform an empirical test.

4.1.2. Empirical Market

When hedging in practice, the underlying assumptions are not always respected and the

true parameters are, of course, unknown. To quantify the effect of these difficulties, we test the hedging strategies on historical data in this section. All hedging ratios depend on the dynamic risk-neutral parameters, hence

,

,

and

vary over time, according to the changes in the historically observed implied volatility surfaces. Moreover, the adjusted Heston Delta-Vega hedge depends on the correlation and volatility of

and

, which we define as follows

It can be quite challenging to determine the correlation between the Brownian motions driving the parameters, hence we assume it to be equal for each time-interval. Moreover, the volatility estimator only depends on past observations; the sum indices vary from to with year, where indicates the starting date of the option.

In this test, we hedge an at-the-money European call option with one-year maturity on the S&P-500 index. All hedging strategies are rebalanced daily, based on the parameters as seen on that date. Transaction costs are excluded from this test, as we are interested in the performance of the hedging strategies with respect to changes in and . Including transaction costs would increase the costs of the full hedge strategy, as it involves more financial assets. This would bias the results, and it is therefore best to exclude transaction costs from the present test.

The test is repeated on a monthly basis from July 2006 to February 2013 and the performance is assessed by the mean error and mean squared error during the life of the option,

The time intervals of the hedging portfolios overlap in this set-up, since the test is repeated on a monthly basis and the option maturity is one year. However, all strategies depend on different initial conditions and therefore perform differently, despite the overlapping time-intervals. The results of this test are graphically presented in

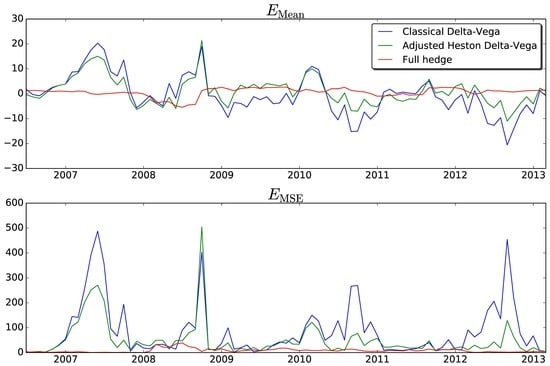

Figure 2.

The hedging performances are similar to the simulation results:

The classical Delta-Vega hedge does not take changes of the parameters into account and appears to be the most unstable method. This strategy has the most frequent and highest error “peaks” and is therefore the least reliable.

The adjusted Delta-Vega hedge is still not perfect but appears to be more stable than the classical Delta-Vega hedge, the error “peaks” happen less frequently and are less pronounced. The error can be minimized by optimizing the correlation and volatility of and , but the optimization can only be performed afterwards, which is not the objective of this test.

The full hedge is the most stable of the three strategies. It does not have any error “peaks” and outperforms the other two strategies in most cases. Moreover, this strategy does not depend on additional parameters that may introduce an error if chosen poorly, as the adjusted Heston Delta-Vega hedge does.

We can conclude that respecting the assumptions of the underlying model (in this case, the Heston model) does not necessarily lead to more accurate future option prices. By taking changes of the and parameters into account, we are able to replicate option values more accurately both in a controlled (simulation) and uncontrolled (empirical) environment.

6. Conclusions

The research in this paper was motivated by the open question of how to value future guarantees that are issued by insurance companies. The future value of these guarantees is essential for regulatory and Asset Liability Management purposes. The complexity of the valuation lies in the fact that, first, these guarantees involve optionalities and thus need to be valued using the risk-neutral measure; and, second, whereas this measure is well-defined at $t = 0$, the risk-neutral measure at a future time $t > 0$ is debatable.

For a large part, the liabilities evolve according to real-world models and, therefore, the future values of these guarantees need to be computed conditionally on the real-world scenarios. In this paper, we demonstrate the benefits of option valuation under a new, so-called measure in Asset Liability Management. This is done by modelling the Heston model parameters, which form the parameterization of the implied volatility surface, conditional on the real-world scenarios.

Basically, we advocate the use of dynamic risk-neutral parameters in cases where we need to evaluate asset prices under the real-world measure before an option value is required at a future time point. This means that the development of the real-world asset paths in the future is taken into account in the option valuation.

A hedge test was implemented for an academic test case, where the dynamic strategy outperformed the strategy with static parameters. Importantly, the results from this hedge test case were confirmed by a hedge test based on 12 years of empirical, historical data. More detailed conclusions were given after each of the test experiments was presented.

The results obtained by the strategy for the Solvency Capital Requirement of the variable annuities exhibited differences of as much as 50%, compared to the conventional risk-neutral pricing of these annuities. Moreover, we saw that the SCR was significantly less pro-cyclical under the new approach, which is a highly desirable feature.