Acceleration Feature Extraction of Human Body Based on Wearable Devices

Abstract

1. Introduction

- (1) Video-based body monitoring. Video-based behavior monitoring recognizes human activities from the image sequence captured by a camera [7,8,9]. However, cameras are usually fixed in place and are better suited to indoor use, so the approach is limited for activities that move between indoor and outdoor settings or across different locations. For example, cameras are difficult to deploy and are easily blocked by objects. The recognition and data processing methods depend heavily on the external environment and are highly intrusive to the privacy of the people being observed. In addition, a higher recognition rate places higher demands on the data source.

- (2) Sensor-based body monitoring [10,11]. With the development of micromachine technology, sensors can measure a growing range of quantities at low cost. Wearable devices can be attached to the human body and move with the wearer, providing continuous monitoring without interfering with normal activities. Because of this advantage, many researchers prefer sensors as the tool for acquiring human body data. Users also favor wearable devices because they are compact, light, and able to monitor human behavior data continuously.

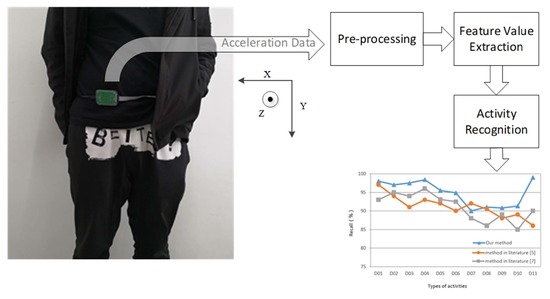

2. Methods

2.1. Datasets

2.2. Pre-Processing

2.3. KGA Algorithm

2.3.1. Initial Stage

2.3.2. Optimization Stage

- (a) Selection operation. The best individuals are selected from the current population, or new individuals are generated, to be passed on to the next generation, and each individual is evaluated primarily by its fitness. Selection uses the roulette method, which assigns each individual a selection probability determined by its fitness.

- (b) Crossover operation. Two individuals are selected at random from the population, and their genes are exchanged and combined at a chosen position. The crossover at that position is governed by a random number between 0 and 1.

- (c) Mutation operation. One individual is selected at random from the population, and its gene at a chosen position is mutated.
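The three operations above can be sketched in a few lines of Python. This is only an illustration under stated assumptions, not the paper's own formulation: the selection probability is taken to be fitness divided by total fitness (fitness values must be positive), crossover is taken to be an arithmetic blend weighted by the random number `b`, and mutation is taken to be a uniform redraw within hypothetical bounds `lower` and `upper`. All function names are hypothetical.

```python
import random


def roulette_probabilities(fitness):
    """Selection probability of each individual (assumed: fitness / total fitness)."""
    total = sum(fitness)
    return [f / total for f in fitness]


def roulette_select(population, fitness, rng):
    """Pick one individual by spinning the roulette wheel once."""
    r = rng.random()
    cumulative = 0.0
    for individual, p in zip(population, roulette_probabilities(fitness)):
        cumulative += p
        if r < cumulative:
            return individual
    return population[-1]  # guard against floating-point round-off


def arithmetic_crossover(parent_a, parent_b, position, rng):
    """Blend the genes of two parents at one position with a random weight b in [0, 1)."""
    b = rng.random()
    child_a, child_b = list(parent_a), list(parent_b)
    child_a[position] = parent_a[position] * (1 - b) + parent_b[position] * b
    child_b[position] = parent_b[position] * (1 - b) + parent_a[position] * b
    return child_a, child_b


def mutate(individual, position, lower, upper, rng):
    """Assumed mutation rule: redraw the gene at one position uniformly from [lower, upper]."""
    mutated = list(individual)
    mutated[position] = rng.uniform(lower, upper)
    return mutated


# Small demonstration with made-up individuals and fitness values.
rng = random.Random(0)
population = [[0.1, 0.5], [0.4, 0.2], [0.9, 0.7]]
fitness = [1.0, 3.0, 6.0]
parent_a = roulette_select(population, fitness, rng)
parent_b = roulette_select(population, fitness, rng)
child_a, child_b = arithmetic_crossover(parent_a, parent_b, position=1, rng=rng)
child_a = mutate(child_a, position=0, lower=0.0, upper=1.0, rng=rng)
```

With this blend, the two children's genes at the crossed position always sum to the same value as the parents' genes, so crossover redistributes a gene between the pair rather than creating new magnitude; only mutation introduces new values.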

2.4. Feature Extraction

2.4.1. Standard Deviation

2.4.2. Interval of Peaks

2.4.3. Difference between Adjacent Peaks and Troughs

3. Results and Discussion

3.1. Analysis of Filtering Algorithms

3.2. Analysis of Running Activity

3.3. Analysis of Going up and down Stairs

3.4. Analysis of Sit-Up Activity

3.5. Analysis of Jumping Activity

3.6. Comparison of Feature Values of Different Motions

3.7. Comparison of Different Methods

4. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Conflicts of Interest

References

- Hussain, S.; Kang, B.H.; Lee, S. A Wearable Device-Based Personalized Big Data Analysis Model. In Ubiquitous Computing and Ambient Intelligence. Personalisation and User Adapted Services; Springer: Berlin/Heidelberg, Germany, 2014; pp. 236–242.

- Hsu, Y.L.; Yang, S.C.; Chang, H.C.; Lai, H.-C. Human Daily and Sport Activity Recognition Using a Wearable Inertial Sensor Network. IEEE Access 2018, 6, 1–14.

- Wilson, C.; Hargreaves, T.; Hauxwell-Baldwin, R. Smart homes and their users: A systematic analysis and key challenges. Pers. Ubiquitous Comput. 2015, 19, 463–476.

- Song, F.; Zhou, Y.; Wang, Y.; Zhao, T.; You, I.; Zhang, H. Smart Collaborative Distribution for Privacy Enhancement in Moving Target Defense. Inf. Sci. 2019, 479, 593–606.

- Chen, Y.; Shen, C. Performance Analysis of Smartphone-Sensor Behavior for Human Activity Recognition. IEEE Access 2017, 5, 3095–3110.

- Song, F.; Zhu, M.; Zhou, Y.; You, I.; Zhang, H. Smart Collaborative Tracking for Ubiquitous Power IoT in Edge-Cloud Interplay Domain. IEEE Internet Things J. 2020, 7, 6046–6055.

- Janidarmian, M.; Fekr, A.R.; Radecka, K.; Zilic, Z. A Comprehensive Analysis on Wearable Acceleration Sensors in Human Activity Recognition. Sensors 2017, 17, 529.

- Song, F.; Zhou, Y.; Chang, L.; Zhang, H. Modeling Space-Terrestrial Integrated Networks with Smart Collaborative Theory. IEEE Netw. 2019, 33, 51–57.

- Wang, A.; Chen, G.; Yang, J.; Chang, C.-Y.; Zhao, S. A Comparative Study on Human Activity Recognition Using Inertial Sensors in a Smartphone. IEEE Sens. J. 2016, 16, 4566–4578.

- Lara, O.D.; Labrador, M.A. A Survey on Human Activity Recognition using Wearable Sensors. IEEE Commun. Surv. Tutor. 2013, 15, 1192–1209.

- Su, X.; Caceres, H.; Tong, H.; He, Q. Online Travel Mode Identification Using Smartphones with Battery Saving Considerations. IEEE Trans. Intell. Transp. Syst. 2016, 17, 2921–2934.

- Ling, B.; Intille, S.S. Activity Recognition from User-Annotated Acceleration. Data Proc. Pervasive 2004, 3001, 1–17.

- Priyanka, K.; Tripathi, N.K.; Peerapong, K. A Real-Time Health Monitoring System for Remote Cardiac Patients Using Smartphone and Wearable Sensors. Int. J. Telemed. Appl. 2015, 2015, 1–11.

- Chao, C.; Shen, H. A feature evaluation method for template matching in daily activity recognition. In Proceedings of the 2013 IEEE International Conference on Signal Processing, Communication and Computing (ICSPCC 2013), KunMing, China, 5–8 August 2013; pp. 1–4.

- Maekawa, T.; Kishino, Y.; Yasushi, S. Activity recognition with hand-worn magnetic sensors. Pers. Ubiquitous Comput. 2013, 17, 1085–1094.

- Cheng, J.; Amft, O.; Bahle, G.; Lukowicz, P. Designing Sensitive Wearable Capacitive Sensors for Activity Recognition. IEEE Sens. J. 2013, 13, 3935–3947.

- Barshan, B.; Yüksek, M.C. Recognizing Daily and Sports Activities in Two Open Source Machine Learning Environments Using Body-Worn Sensor Units. Comput. J. 2013, 57, 1649–1667.

- Zhang, M.; Sawchuk, A.A. A feature selection-based framework for human activity recognition using wearable multimodal sensors. In Proceedings of the 13th International Conference on Ubiquitous Computing (UbiComp 2011), Beijing, China, 17–21 September 2011; pp. 92–98.

- Dinov, I.D. Decision Tree Divide and Conquer Classification. In Data Science and Predictive Analytics; Springer: Berlin/Heidelberg, Germany, 2018; pp. 307–343.

- Aziz, O.; Russell, C.M.; Park, E.J.; Robinovitch, S.N. The effect of window size and lead time on pre-impact falls detection accuracy using support vector machine analysis of waist mounted inertial sensor data. In Proceedings of the 2014 36th Annual International Conference of the IEEE Engineering in Medicine and Biology Society, Chicago, IL, USA, 26–30 August 2014; pp. 30–33.

- Diep, N.N.; Pham, C.; Phuong, T.M. A classifier based approach to real-time falls detection using low-cost wearable sensors. In Proceedings of the 2013 International Conference on Soft Computing and Pattern Recognition (SoCPaR), Hanoi, Vietnam, 15–18 December 2015; pp. 14–20.

- Jian, H.; Chen, H. A portable falls detection and alerting system based on k-NN algorithm and remote medicine. China Commun. 2015, 12, 23–31.

- Song, F.; Ai, Z.; Zhou, Y.; You, I.; Choo, R.; Zhang, H. Smart Collaborative Automation for Receive Buffer Control in Multipath Industrial Networks. IEEE Trans. Ind. Inform. 2020, 16, 1385–1394.

- Foody, G.M. RVM-based multi-class classification of remotely sensed data. Int. J. Remote Sens. 2008, 29, 1817–1823.

- Anguita, D.; Ghio, A.; Oneto, L.; Parra, X.; Reyes-Ortiz, J.L. Human Activity Recognition on Smartphones Using a Multiclass Hardware-Friendly Support Vector Machine Ambient Assisted Living and Home Care. In Ambient Assisted Living and Home Care; Springer: Berlin/Heidelberg, Germany, 2012; pp. 216–223.

- Martín, H.; Bernardos, A.M.; Iglesias, J.; Casar, J.R. Activity logging using lightweight classification techniques in mobile devices. Pers. Ubiquitous Comput. 2013, 17, 675–695.

- Song, F.; Ai, Z.; Zhang, H.; You, I.; Li, S. Smart Collaborative Balancing for Dependable Network Components in Cyber-Physical Systems. IEEE Trans. Ind. Inform. 2020.

- Nukala, B.T.; Shibuya, N.; Rodriguez, A.I.; Nguyen, T.Q.; Tsay, J.; Zupancic, S.; Lie, D.Y.C. A real-time robust falls detection system using a wireless gait analysis sensor and an Artificial Neural Network. In Proceedings of the 2014 IEEE Healthcare Innovation Conference (HIC), Seattle, WA, USA, 8–10 October 2014; pp. 219–222.

- Angela, S.; López, J.; Vargas-Bonilla, J. SisFall: A Fall and Movement Dataset. Sensors 2017, 17, 198.

- Digital Signal Processing Formula Variable Program (four)-Butterworth Filter (On). Available online: https://blog.csdn.net/shengzhadon/article/details/46784509 (accessed on 7 July 2015).

- Kalman, R.E. A New Approach to Linear Filtering and Prediction Problems. J. Fluids Eng. 1960, 82, 35–45.

- Xianglin, W.; Jianwei, L.; Yangang, W.; Chaogang, T.; Yongyang, H. Wireless edge caching based on content similarity in dynamic environments. J. Syst. Archit. 2021, 102000.

- Bingqian, H.; Wei, W.; Bin, Z.; Lianxin, G.; Yanbei, S. Improved deep convolutional neural network for human action recognition. Appl. Res. Comput. 2019, 36, 3111–3607.

| Number | Type | Number of Experiments | Duration (s) |
|---|---|---|---|
| D01 | Walk slowly | 1 | 100 |
| D02 | Walk quickly | 1 | 100 |
| D03 | Run slowly | 1 | 100 |
| D04 | Run quickly | 1 | 100 |
| D05 | Go up and down the stairs slowly | 5 | 25 |
| D06 | Go up and down the stairs quickly | 5 | 25 |
| D07 | Sit→get up slowly in a half-high chair | 5 | 12 |
| D08 | Sit→get up quickly in a half-high chair | 5 | 12 |
| D09 | Sit→get up slowly in a low chair | 5 | 12 |
| D10 | Sit→get up quickly in a low chair | 5 | 12 |
| D11 | Jump up | 5 | 12 |

| Parameters | Values |
|---|---|
| Sampling frequency | 25 |
| Cut-off frequency | 5 |
| Order | 4 |
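The parameters in the table above can be turned into filter coefficients. The following is a minimal sketch, assuming they describe a low-pass Butterworth filter (the reference list cites a Butterworth implementation) and that both frequencies share one unit such as Hz; the excerpt states neither. The function names are hypothetical, and the design uses the standard bilinear-transform biquad with pre-warping, which may differ from the implementation the authors used.

```python
import cmath
import math


def butterworth_lowpass_sections(order, cutoff, fs):
    """Return biquad coefficients (b0, b1, b2, a1, a2) for an even-order low-pass Butterworth filter."""
    if order % 2 != 0:
        raise ValueError("this sketch handles even orders only")
    w0 = 2.0 * math.pi * cutoff / fs
    cos_w0, sin_w0 = math.cos(w0), math.sin(w0)
    sections = []
    for k in range(order // 2):
        # Quality factor of the k-th conjugate pole pair of the analog prototype.
        q = 1.0 / (2.0 * math.sin(math.pi * (2 * k + 1) / (2 * order)))
        alpha = sin_w0 / (2.0 * q)
        a0 = 1.0 + alpha
        b0 = (1.0 - cos_w0) / (2.0 * a0)
        b1 = (1.0 - cos_w0) / a0
        sections.append((b0, b1, b0, -2.0 * cos_w0 / a0, (1.0 - alpha) / a0))
    return sections


def apply_sections(sections, samples):
    """Filter the samples causally through each biquad (direct form II transposed)."""
    out = list(samples)
    for b0, b1, b2, a1, a2 in sections:
        z1 = z2 = 0.0
        for i, x in enumerate(out):
            y = b0 * x + z1
            z1 = b1 * x - a1 * y + z2
            z2 = b2 * x - a2 * y
            out[i] = y
    return out


def gain_at(sections, freq, fs):
    """Magnitude of the cascade's frequency response at one frequency."""
    z_inv = cmath.exp(-2j * math.pi * freq / fs)
    h = 1.0 + 0j
    for b0, b1, b2, a1, a2 in sections:
        h *= (b0 + b1 * z_inv + b2 * z_inv * z_inv) / (1.0 + a1 * z_inv + a2 * z_inv * z_inv)
    return abs(h)


# Values from the table: order 4, cut-off 5, sampling frequency 25.
sections = butterworth_lowpass_sections(order=4, cutoff=5.0, fs=25.0)
smoothed = apply_sections(sections, [1.0] * 200)
```

A fourth-order design yields two biquad sections. The single causal pass shown here delays the signal; if peak timing matters for later features, running the filter forward and then backward over the recording would cancel the phase shift at the cost of doubling the effective order.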

| Motion Type | Sacc | SD | DAPT | IoP |
|---|---|---|---|---|
| D03 | 1.37 | 0.15 | 0.51 | 0.39 |
| D04 | 1.39 | 0.25 | 0.52 | 0.38 |
| D05 | 1.97 | 0.92 | 0.29 | 0.79 |
| D06 | 1.41 | 0.23 | 0.59 | 0.48 |
| D07 | 1.1 | 0.014 | 0.11 | 1.02 |
| D08 | 1.43 | 0.17 | 0.58 | 0.62 |
| D11 | 1.82 | 0.38 | 1.01 | 0.72 |
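Three of the columns above correspond to the features named in Section 2.4: standard deviation (SD), interval of peaks (IoP), and difference between adjacent peaks and troughs (DAPT). The sketch below shows one way such values could be computed from a one-dimensional acceleration signal. The definitions are assumptions, since the excerpt does not give the formulas: SD is the population standard deviation, peaks and troughs are strict local extrema, IoP is the mean time between consecutive peaks, and DAPT is the mean absolute difference between each neighbouring peak and trough. All function names are hypothetical.

```python
import math


def standard_deviation(signal):
    """Population standard deviation of the samples (assumed definition of SD)."""
    mean = sum(signal) / len(signal)
    return math.sqrt(sum((x - mean) ** 2 for x in signal) / len(signal))


def local_extrema(signal):
    """Return (index, kind) pairs for strict local peaks ('p') and troughs ('t'), in time order."""
    extrema = []
    for i in range(1, len(signal) - 1):
        if signal[i - 1] < signal[i] > signal[i + 1]:
            extrema.append((i, "p"))
        elif signal[i - 1] > signal[i] < signal[i + 1]:
            extrema.append((i, "t"))
    return extrema


def mean_interval_of_peaks(signal, fs):
    """Mean time in seconds between consecutive peaks, or None with fewer than two peaks."""
    peaks = [i for i, kind in local_extrema(signal) if kind == "p"]
    if len(peaks) < 2:
        return None
    gaps = [(later - earlier) / fs for earlier, later in zip(peaks, peaks[1:])]
    return sum(gaps) / len(gaps)


def mean_peak_trough_difference(signal):
    """Mean absolute difference between neighbouring extrema of opposite kind, or None."""
    extrema = local_extrema(signal)
    diffs = [
        abs(signal[j] - signal[i])
        for (i, kind_i), (j, kind_j) in zip(extrema, extrema[1:])
        if kind_i != kind_j
    ]
    return sum(diffs) / len(diffs) if diffs else None


# Synthetic triangular wave with a period of 4 samples, sampled at 25 per second.
signal = [0.0, 1.0, 2.0, 1.0] * 5
sd = standard_deviation(signal)
iop = mean_interval_of_peaks(signal, fs=25.0)
dapt = mean_peak_trough_difference(signal)
```

On real recordings, strict local extrema pick up every small ripple, so the signal would normally be filtered first, and a minimum peak height or spacing may be needed before the intervals are meaningful.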

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Huang, Z.; Niu, Q.; You, I.; Pau, G. Acceleration Feature Extraction of Human Body Based on Wearable Devices. Energies 2021, 14, 924. https://doi.org/10.3390/en14040924
