Several tailor-made experiments were conducted to compare the performance and power consumption of the FSR with those of the RR. Each experiment is designed to explore the relationship among operating frequency, performance and power consumption, so that the interaction and interdependency among these three factors can be interpreted and explained.

5.1. Lab Setup

The lab setup consists of two NetFPGA boards and two desktop computers, running CentOS 5.5, that host the boards. Both experimental NetFPGA boards are Version 2.1 Revision 3. The NetFPGA board is a research platform with open source hardware and software resources. Developers can start with publicly available NetFPGA packages to design and implement their own mechanisms or algorithms as new custom modules.

As shown in Figure 9, one NetFPGA board is configured as a packet generator (PG) [26], while the other is configured as the RR or the FSR in turn for power consumption and performance measurements. Network traffic with different bit rates and packet sizes is generated by the PG and sent from its four Ethernet ports to the corresponding ports on the RR or the FSR. The traffic is then routed through the four Ethernet ports on the RR or the FSR at different operating frequencies and sent back to the corresponding ports on the PG.

Power consumption measurements were taken using a smart PCI bus extender, a data acquisition unit (DAQ) and associated software. As mentioned in [27,28], the PCI bus extender can precisely measure the real-time power consumption of a PCI-based board. The DAQ is optimized for accuracy at fast sampling rates. A software script on a computer automatically collects the power consumption data read from the DAQ. For fixed traffic experiments, power consumption is calculated as the average of three million samples taken at 764,000 samples per second; thus, each power consumption measurement takes about 3.927 s (i.e., 3,000,000/764,000).
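The measurement window and the averaging step can be sketched in a few lines of Python. This is only an illustration under the figures stated above; the function names are hypothetical and do not come from the measurement script used in the experiments.

```python
# Figures stated in the text; everything else here is illustrative.
SAMPLE_RATE_HZ = 764_000
SAMPLES_PER_MEASUREMENT = 3_000_000


def measurement_duration_s(n_samples=SAMPLES_PER_MEASUREMENT,
                           rate_hz=SAMPLE_RATE_HZ):
    """Time needed to collect n_samples at the given sampling rate."""
    return n_samples / rate_hz


def average_power_w(samples_w):
    """Arithmetic mean of a sequence of power samples (in watts)."""
    samples = list(samples_w)
    if not samples:
        raise ValueError("at least one sample is required")
    return sum(samples) / len(samples)
```

With the stated figures, `measurement_duration_s()` evaluates to roughly 3.93 s per measurement.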

5.2. Evaluation Metrics

Power consumption: Power consumption in a network device is the sum of two components: quiescent power and dynamic power. Quiescent power is the static power drawn by the device when it is powered up and configured with user logic, but there is no traffic load. Dynamic power is the power consumed while processing traffic load in the core or I/O of the device, and it is, therefore, frequency dependent. The total power consumption of a network device can be modelled as a linear function of the traffic characteristics as given in Equation (1) [29]:

$$P_{total} = P_{q} + P_{d} = P_{q} + A\,C\,V^{2} f \qquad (1)$$

where $P_{total}$ is the total power, $P_{q}$ is the quiescent power, $P_{d}$ is the dynamic power, $A$ is the activity factor, such as the fraction of the circuit that is switching, $C$ is the load capacitance, $f$ is the core operating frequency and $V$ is the supply voltage. For a given NetFPGA board, $P_{q}$, $C$ and $V$ are fixed values, and $A$ is affected by the number of active ports, the instantaneous traffic bit rate and the forwarding packet size.
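A minimal sketch of the power model follows, assuming Equation (1) takes the standard CMOS form in which dynamic power is the product of activity factor, load capacitance, squared supply voltage and frequency. The numeric values in the usage note are placeholders chosen for illustration, not measurements from the NetFPGA board.

```python
def total_power_w(p_quiescent_w, activity, capacitance_f, voltage_v,
                  frequency_hz):
    """Total power as quiescent power plus dynamic power.

    Assumed form: P_total = P_q + A * C * V^2 * f.
    """
    dynamic_w = activity * capacitance_f * voltage_v ** 2 * frequency_hz
    return p_quiescent_w + dynamic_w
```

For example, with placeholder values `total_power_w(5.0, 0.5, 2e-8, 1.0, 125e6)`, the dynamic term is 1.25 W; halving the frequency to 62.5 MHz halves that term while the quiescent term is unchanged, which is the behaviour that frequency scaling exploits.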

Equation (1) is the guideline for the theoretical analysis. In operation, the NetFPGA 1G board is a PCI-based board, and all of its power supplies are derived from the 3.3-V and 5-V power rails on the PCI bus. The embedded FPGA chips draw power from the 3.3-V rail of the PCI bus, and the PHY components are supplied by the 5-V rail. The 3.3-V and 5-V pins of the PCI bus extender are used to measure the overall current drawn by the 3.3-V and 5-V powered components on the NetFPGA board mounted on the PCI bus extender. Hence, the total power consumption can be calculated with Equation (2):

$$P_{total} = 3.3 \times I_{3.3} + 5 \times I_{5} \qquad (2)$$

where $P_{total}$ is the total power, $I_{3.3}$ is the total current drawn by the board components from the 3.3-V rail of the PCI bus and $I_{5}$ is the total current of the 5-V powered components.
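The rail-based calculation can be sketched as below, assuming Equation (2) is the sum of each rail voltage multiplied by the current drawn from that rail. The function name and the 5-V current in the usage note are hypothetical.

```python
RAIL_3V3_V = 3.3  # PCI 3.3-V rail (FPGA chips)
RAIL_5V_V = 5.0   # PCI 5-V rail (PHY components)


def board_power_w(i_3v3_a, i_5v_a):
    """Total board power from the currents measured on the two PCI rails."""
    return RAIL_3V3_V * i_3v3_a + RAIL_5V_V * i_5v_a
```

For instance, a 3.3-V current of 0.78 A combined with an assumed 5-V current of 0.5 A gives `board_power_w(0.78, 0.5)`, about 5.07 W.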

Performance: The peak measured throughput is the peak measured rate of successful data delivery over a real implemented system. In the performance evaluation, it is the most important metric to be measured because it directly determines the preset threshold in the frequency control policies. Each operating frequency has an associated traffic throughput threshold beyond which the router will begin to lose a significant number of packets. The peak measured throughput must be measured as accurately as possible. Otherwise, the difference between the input traffic load and inaccurate preset threshold could degrade the performance of the FSR.

The round trip time (RTT) is also an important network performance measure in the performance evaluation. It is the time taken for a packet to travel from a specific source to a specific destination and back to the source. Measuring the RTT helps network operators and end users understand the network performance and take measures to improve the QoS if needed.

To ensure the required QoS, a router should forward data packets quickly and accurately with little or no packet loss. Packet loss is another important metric adopted in the performance evaluation. In the NetFPGA user data path pipeline, packet loss occurs in the four MAC receive queues and the four MAC output queues, but not in any MAC transmit queue. This is because the transmit queues simply take packets from the corresponding output queues and send them out through the Ethernet ports. Thus, the total packet loss can be calculated with the following equation:

$$L_{total} = L_{rx} + L_{oq}$$

where $L_{total}$ is the total packet loss, $L_{rx}$ is the total packet loss in the four receive queues and $L_{oq}$ is the total packet loss in the four output queues.
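As a small illustration of the packet loss accounting, the sketch below sums per-queue drop counters. It assumes one drop counter per receive queue and per output queue; the function name and counter layout are hypothetical rather than taken from the NetFPGA register map.

```python
def total_packet_loss(rx_queue_drops, output_queue_drops):
    """Sum the drops of the four receive queues and four output queues."""
    if len(rx_queue_drops) != 4 or len(output_queue_drops) != 4:
        raise ValueError("expected four receive and four output counters")
    return sum(rx_queue_drops) + sum(output_queue_drops)
```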

Transition time is closely associated with packet loss. It is the time required for the router to switch from one frequency to another. Experimental results show that, under constant heavy traffic load, a longer transition time could result in greater packet loss, especially during scaling from a lower frequency to a higher one. Thus, transition time is another important metric in the performance evaluation.

5.3. Fixed Traffic Experiments

As indicated in Equation (1), the total power consumption of a router is affected by the operating frequency, the number of active ports, the traffic bit rate and the packet size. To evaluate the impact of each of these factors on the performance and power consumption of the RR and the FSR, a series of carefully designed experiments is conducted.

We start by measuring the power consumption of the RR and the FSR in the idle state, in which the board is configured as the RR or the FSR but does not route any traffic. To evaluate the impact of the operating frequency and the number of active ports on the power consumption, the RR bit file and the FSR bit file are, in turn, downloaded into the core logic FPGA processor, which is then configured as an RR or an FSR for comparison. The four Ethernet ports are activated one by one, and the power consumption of the RR and the FSR is measured at their allowed operating frequencies. The measurement results show that the power consumption of a router increases linearly with the number of active ports, with approximately 1 W of additional power consumption for each activated port.

As shown in Table 3 and Table 4, the results indicate that at the same operating frequency of 125 MHz or 62.5 MHz, the FSR consumes more power than the RR. This is because an additional AFIFO module and several frequency division modules are integrated into the FSR, and each additional module incurs additional power consumption. Moreover, for the RR, the two SRAMs work synchronously with the core logic FPGA processor for writing and reading data at 125 MHz or 62.5 MHz. For the FSR, the core logic can be scaled among five different frequencies of 125 MHz, 62.5 MHz, 31.3 MHz, 15.6 MHz and 7.8 MHz, but the two SRAMs work asynchronously with the core logic and run at 125 MHz constantly. This means that the power consumption difference between the RR and the FSR is even larger at 62.5 MHz than at 125 MHz, because at 62.5 MHz, the two SRAMs run at 62.5 MHz in the RR and at 125 MHz in the FSR, although the core logic FPGA processor runs at the same 62.5 MHz.

The traffic characteristics (traffic bit rate and packet size) could also affect the power consumption. As indicated in [14], packet sizes of 64 bytes, 576 bytes and 1500 bytes are typical in real network links, and the packet size profile peaks at 64 bytes and 1500 bytes. The minimum size of a standard Ethernet packet is 64 bytes. The maximum transmission unit (MTU) for Ethernet is 1500 bytes. The MTU is an important factor for network throughput and should be as large as possible, because a larger MTU introduces less overhead for payload transmission. In multi-network environments, if a maximum-sized packet travels from a network with a larger MTU to a network with a smaller MTU, the packet has to be fragmented into smaller packets. The default IP maximum datagram size is 576 bytes. It is also the default conservative packet size that all IP routers support.

To evaluate the impact of the traffic characteristics on the power consumption, a series of experiments were performed using different operating frequencies (125 MHz, 62.5 MHz, 31.3 MHz, 15.6 MHz and 7.8 MHz), different traffic bit rates (from 100 Mb/s to 1 Gb/s for each link) and different packet sizes (64 bytes, 576 bytes and 1500 bytes). Packet streams are sent from the four ports on the packet generator, routed through the four ports on the router and back to the corresponding ports on the packet generator.

Figure 10 shows an example of the total power consumption of the RR and the FSR under the same 1500-byte packet stream with different operating frequencies and different aggregated input traffic bit rates. Experimental results indicate that power consumption increases with the traffic bit rate and the operating frequency for both the RR and the FSR. According to Equation (1), a higher traffic bit rate means a higher activity factor A, which represents the average number of switching events of the transistors in the chip. Increasing the operating frequency of a router also increases its power consumption.

One key point to be noted is that the power differences between the RR running at 125 MHz and 62.5 MHz come from the power consumption difference in both the core logic FPGA processor and the two SRAMs, whereas the power consumption differences in the FSR only come from the core logic FPGA processor because the two SRAMs are always running at 125 MHz.

Figure 10 also shows that, at the same operating frequency of 125 MHz or 62.5 MHz, the FSR consumes more power than the RR because of the additional AFIFO module and frequency division modules. Although the FSR consumes around 6% more power than the RR at the same operating frequency, experiments with real traces in the next subsection indicate that the FSR can work at lower frequencies when the traffic is low, resulting in overall power savings of 46%. Under light traffic load, DFS-enabled routers can save a significant amount of power compared with routers that always run at the maximum operating frequency.

To evaluate the impact of the operating frequency and the traffic characteristics on the performance, the link capacity is one of the most important metrics and is measured first. Link capacity is also known as the peak measured throughput.

Figure 11 presents the link capacity of the RR and the FSR under different operating frequencies and different typical packet sizes. Experimental results show that a higher operating frequency and a larger packet size lead to higher link capacity. A higher frequency provides more clock cycles per second in which to process data and thus a higher routing capacity. Because less overhead is incurred by packet header processing, a larger packet size typically means higher routing capacity for routers, lower power consumption, or both. Therefore, it is advisable to use larger packet sizes whenever possible.
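The effect of packet size on per-packet processing load can be illustrated with a short calculation. The sketch below is a simplification that ignores the Ethernet preamble and inter-frame gap, and the function name is hypothetical; it only shows how many packets per second must be processed to sustain a given bit rate.

```python
def packets_per_second(bit_rate_bps, packet_size_bytes):
    """Packets per second needed to carry bit_rate_bps at a fixed size."""
    if packet_size_bytes <= 0:
        raise ValueError("packet size must be positive")
    return bit_rate_bps / (8 * packet_size_bytes)


# Per-link load at 1 Gb/s for the three typical packet sizes.
for size in (64, 576, 1500):
    print(size, round(packets_per_second(1e9, size)))
```

Under this simplification, a 1 Gb/s stream of 64-byte packets requires about 23 times as many header lookups per second as the same bit rate carried in 1500-byte packets, which is consistent with larger packets yielding higher link capacity at a given operating frequency.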

The operating frequency and the traffic characteristics could also affect the RTT. The RTT is measured using the NetFPGA packet generator [26], which gives more accurate results because timestamping in the PG is performed in hardware. Software RTT measurements, such as ping, by contrast involve notifying the kernel when a packet arrives. Transferring the packet into the kernel introduces a variable delay, which limits the accuracy of the results. In addition, the RTT measurements are performed by passively measuring the RTT using the widely deployed TCP timestamp options carried in TCP headers. Thus, the hardware RTT measurements need neither to launch out-of-band Internet Control Message Protocol (ICMP) echo requests (pings) nor to embed timing information in application traffic.

In the RTT measurements, the experimental setup is somewhat different from the lab setup in Figure 9. All four ports are used to measure the RTT: two ports of the PG are connected directly to one another, and the RTT measured there provides a baseline reference, $RTT_0$; the other two ports of the PG are connected to the RR or the FSR under test, and the RTT measured there is $RTT_1$. The RTT is calculated as the difference between $RTT_1$ and $RTT_0$.

Figure 12 reports the RTT of the RR and the FSR under different operating frequencies and different packet sizes. Experimental results indicate that a higher operating frequency and a smaller packet size give rise to a shorter round trip time. A larger packet has a longer RTT than a smaller one because it takes longer to buffer and process. For example, for the RR at an operating frequency of 125 MHz, a 64-byte packet has an RTT of 2 µs, while a 1500-byte packet has an RTT of 16 µs.

The traffic characteristics and the operating frequency also affect the packet loss.

Figure 13 presents the packet loss of the RR and the FSR under the same 1500-byte packet stream with different operating frequencies and different aggregated input traffic bit rates. Experimental results indicate that, for both the RR and the FSR, the packet loss is proportional to the traffic bit rate. Besides, a higher operating frequency could result in lower packet loss.

5.4. Experiments with Real Traces

To evaluate the proposed FSR, real traces with an application mix of Transmission Control Protocol (TCP) and User Datagram Protocol (UDP) traffic (including the Internet’s most popular applications, Hypertext Transfer Protocol (HTTP), File Transfer Protocol (FTP), email, Domain Name System (DNS), Simple Network Management Protocol (SNMP), streaming media, etc.) were captured from a border router in the Dublin City University campus network.

Figure 14 shows a clear link traffic volume diurnal pattern. In this diurnal pattern, the maximum traffic bit rate is approaching 649 Mb/s between 16:00 and 17:00, and the average traffic bit rate is about 280 Mb/s.

To quantify the benefits brought by the DFS technique, the captured traffic in Figure 14 was routed through the RR and the FSR in turn for power consumption and performance comparison. With the same traffic pattern, Figure 15 presents the corresponding operating frequencies of the RR and the FSR (packet loss-aware policy) for the day. As shown in Figure 15, the upper red line at 125 MHz indicates that the RR works constantly at 125 MHz. The lower blue curve shows the FSR switching frequency from time to time, indicating that the FSR is capable of working at appropriate frequencies in response to the instantaneous traffic load.

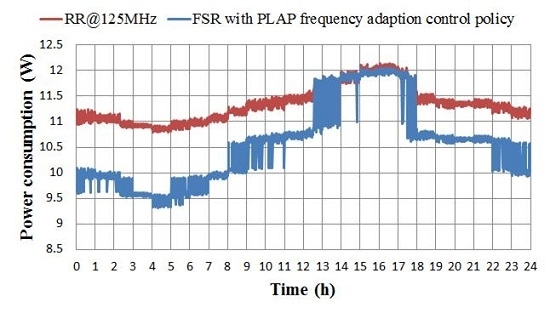

Figure 16 depicts the corresponding power consumption of the RR and the FSR for the day. Since the RR is running at the fixed frequency of 125 MHz, the power consumption of the RR (the upper red curve) fluctuates only in response to the traffic characteristics. However, the power consumption of the FSR (the lower blue curve) fluctuates sharply due to the fact that, in the FSR, both traffic characteristics and operating frequencies affect the power consumption. The difference between these two curves reveals the power savings from the DFS technique, which indicates that the FSR dynamically scales the operating frequency of the core FPGA processor among five different frequencies in response to the instantaneous traffic load, rather than leaving network routers running at the highest frequency all of the time.

If a fixed operating frequency is used throughout the day, a high frequency will ensure negligible packet loss, even during the peak period from 15:00 to 17:00 in Figure 14, at the expense of high energy consumption. Adapting the frequency in accordance with one of the control policies described in Section 4 results in considerable energy savings. The resulting graph of operating frequency vs. time is shown in Figure 15 for the PLAP policy. Broadly similar results were obtained for the other two policies. The aggregate duration in each frequency mode for the different control policies is shown in Table 5. The second highest available operating frequency (62.5 MHz) was selected for an aggregate of just under ten hours during the day and the highest frequency (125 MHz) for about four and a half hours. Lower frequencies were sufficient for the remaining nine and a half hours of the day. This results in significant power savings, which are shown in Table 6.

5.5. Combined with Other Green Techniques

Dynamic frequency scaling provides an active performance scaling feature to achieve energy proportional routing, which is the appropriate energy saving approach for network routers given that their connections have to be always on to keep a heartbeat to deal with routing protocols and unpredictable traffic (e.g., core routers). However, the energy efficiency improvements from dynamic frequency scaling are usually less than those from the Ethernet port shut-down. There are challenges to applying Ethernet port shut-down in core routers because, compared to active performance scaling, it takes more time and power to transition between the on and off state. Predicting the off period and adapting to the appropriate state is still difficult. The end routers, such as home routers, on the other hand, usually follow human behaviour, and Ethernet ports can be shut down when people sleep to achieve more power savings.

As indicated in our previous work [25,30], more power can be saved by re-routing traffic to other ports when traffic on a port is low and then turning off that port. Disabling each port can save around 1 W, and turning off the ports at both ends of the connection can result in an overall savings of around 2 W. For the NetFPGA router, turning off all four Ethernet ports can save around 4 W, whereas scaling down the frequency from 125 MHz to 7.8 MHz with all four Ethernet ports active can save a maximum of only around 2.2 W. Thus, traffic re-routing through network-wide energy-aware traffic engineering coupled with disabling Ethernet ports is a more effective way to reduce power consumption.

An Ethernet port shut-down module is integrated into the FSR to disable an Ethernet port when no traffic has gone through the port for a period of time. With this module, the router can save significant power compared to leaving Ethernet ports fully on all of the time. In the Ethernet port shut-down module, several byte counter modules are introduced to count the number of bytes passing through each Ethernet FIFO queue. If there is no traffic to handle on an Ethernet port for a period of time, the port is disabled until new packets come in. This module provides a way to control the Ethernet ports locally. The Ethernet ports can also be controlled by a network level power management technique. Energy-efficient traffic engineering is a promising network level technique to manage the routing path and to disable as many Ethernet ports as possible without significant packet delay and loss. If a network level technique is involved, network-wide coordination, such as the green abstract layer (GAL) [31], is required. The GAL provides advanced power management capabilities to decouple distributed high level algorithms from heterogeneous hardware.
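The local port shut-down behaviour can be sketched in software as an idle timer driven by a byte counter. This is only an illustration of the idea: the actual module is implemented in hardware, and the class name, the timeout parameter and the assumption that the byte counter still observes arrivals while the port is disabled are all hypothetical.

```python
class PortIdleMonitor:
    """Disable a port after idle_timeout_s without traffic (illustrative)."""

    def __init__(self, idle_timeout_s):
        self.idle_timeout_s = idle_timeout_s
        self.enabled = True
        self.idle_s = 0.0

    def tick(self, bytes_seen, elapsed_s):
        """Update the port state from the bytes counted in the last interval."""
        if bytes_seen > 0:
            # New packets arrived: reset the idle timer and re-enable the port.
            self.idle_s = 0.0
            self.enabled = True
        else:
            self.idle_s += elapsed_s
            if self.idle_s >= self.idle_timeout_s:
                self.enabled = False
        return self.enabled
```

A monitor created with `PortIdleMonitor(1.0)` keeps the port enabled until a full second passes with a zero byte count, and re-enables it on the next interval that carries traffic.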

For Ethernet port shut-down, the control policy combines a network level power management technique with a local level approach. Ethernet port shut-down should be controlled at a higher level by a network-wide global power management policy with energy-aware traffic engineering capability, so that traffic destined for a port can be diverted to other active ports to enable the safe shut down of a port. Otherwise, a sudden surge of traffic to a disabled port can result in significant packet loss, as the disabled port will be unable to respond quickly enough when the new packets arrive. With energy-efficient traffic engineering, the disabled port is able to be woken up in advance, allowing traffic to traverse the port again. The energy-aware traffic engineering is performed at a scheduler to decide when to safely enable or disable every Ethernet port.

Figure 17 presents a simple example of energy-aware traffic engineering. Router A sends 300 Mb/s of aggregate traffic to Router E. To prevent network congestion from traffic bursts, traditional routing with load balancing may split the 300-Mb/s traffic into 100 Mb/s for each of the three links. Energy-efficient traffic engineering, in contrast, reroutes and aggregates the 300-Mb/s traffic from three separate links (100 Mb/s each) onto one single link (300 Mb/s), so that the Ethernet ports on the links without traffic can be disabled for a period of time to achieve more power savings.
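The contrast between load balancing and traffic aggregation in this example can be sketched as follows. The greedy fill shown here is an assumption made for illustration, not the scheduler used in the paper, and the 1000 Mb/s link capacity is assumed from the 1G ports of the NetFPGA board.

```python
def load_balance(total_mbps, n_links):
    """Split the demand evenly across all links."""
    return [total_mbps / n_links] * n_links


def consolidate(total_mbps, n_links, link_capacity_mbps):
    """Greedily fill links one by one so that unused links carry no traffic."""
    loads = []
    remaining = total_mbps
    for _ in range(n_links):
        load = min(remaining, link_capacity_mbps)
        loads.append(load)
        remaining -= load
    if remaining > 0:
        raise ValueError("demand exceeds the total link capacity")
    return loads


balanced = load_balance(300, 3)           # every link stays active
aggregated = consolidate(300, 3, 1000)    # two links carry no traffic
idle_links = sum(1 for load in aggregated if load == 0)
```

Here `aggregated` is `[300, 0, 0]`, so the ports on the two idle links become candidates for shut-down.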

If the router is in the idle state and no traffic is being processed, the FSR can disable all four Ethernet ports and switch to the lowest frequency of 7.8 MHz, with a power consumption of 5.118 W, whereas the RR keeps all four Ethernet ports active at 125 MHz, with a power consumption of 10.725 W. Thus, in the idle state, the FSR can save up to 52.28% of the power consumption compared to the RR.

Table 6 summarizes the average power consumption for the experiments with real traces. The RR has the highest power consumption of 11.750 W. The FSR with STP consumes the least power of 10.565 W and saves 10.09% of power. The differences of power consumption among the three different control policies in the FSR are small because the differences only come from the different numbers of transitions. However, if dynamic frequency scaling (DFS), traffic re-routing through network-wide green traffic engineering (GTE) and Ethernet port shut-down (EPS) are all implemented, 46.04% of power can be saved in this specific experiment.
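The percentage savings quoted for the idle state and for the real traces follow from a simple relative difference, sketched below with the power figures stated in the text. The function name is illustrative.

```python
def saving_percent(baseline_w, reduced_w):
    """Relative power saving of reduced_w against baseline_w, in percent."""
    if baseline_w <= 0:
        raise ValueError("baseline power must be positive")
    return 100.0 * (baseline_w - reduced_w) / baseline_w


idle_saving = saving_percent(10.725, 5.118)   # RR idle vs. FSR idle
stp_saving = saving_percent(11.750, 10.565)   # RR vs. FSR with STP
```

Rounded to two decimal places, these evaluate to 52.28% and 10.09%, matching the reported values.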

5.6. Transition Time

When toggling the operating frequency of the NetFPGA between 125 MHz and 62.5 MHz on the RR, the frequency switching causes a board reset, and all of the buffered packets are lost. However, in the FSR, three additional frequencies, 31.3 MHz, 15.6 MHz and 7.8 MHz, are introduced for more finely-tuned frequency scaling. The board reset problem is also eliminated without significant extra packet processing delay and loss. Since the RR does not provide DFS capability and all of the buffered packets are lost during the board reset, the FSR outperforms the RR from the transition time point of view.

As mentioned in the lab setup, the average power consumption is calculated from three million samples collected at 764 thousand samples per second. The NetFPGA board draws power from both the 3.3-V and 5-V power rails of the PCI bus on the host PC through a PCI bus extender. The real-time 3.3-V current and 5-V current can be read through the PCI bus extender. To calculate the transition time, the samples of the 3.3-V current are the focus. This is because scaling the operating frequency mainly affects the 3.3-V current, since the core FPGA draws power from the 3.3-V rail. The PHY components draw power from the 5-V rail, which is not affected by the frequency scaling.

Figure 18 is an example of scaling the operating frequency from 7.8 MHz to 15.6 MHz. As shown in Figure 18, when the router is operating at 7.8 MHz with a very low aggregated traffic load of 108 Mb/s, the 3.3-V current reading is around 0.78 A. Once the traffic load is increased from 108 Mb/s to 228 Mb/s at time 5.62 ms, the 3.3-V current immediately increases to around 0.82 A. The maximum throughput that the router can handle when operating at 7.8 MHz is 117 Mb/s. Thus, the router switches to 15.6 MHz. The frequency transition time, calculated from the captured current waveform of the 3.3-V pin of the PCI bus extender, is around 0.3–0.4 ms.
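The threshold comparison behind this switch can be sketched as a lookup that picks the lowest frequency whose throughput threshold covers the current load. This is a simplified illustration and not one of the control policies of Section 4. Only the 117 Mb/s threshold at 7.8 MHz comes from the text; the other threshold values are placeholders obtained by doubling and are not measured results.

```python
def select_frequency_mhz(load_mbps, thresholds_mbps):
    """Return the lowest frequency whose threshold is at least load_mbps."""
    for freq in sorted(thresholds_mbps):
        if load_mbps <= thresholds_mbps[freq]:
            return freq
    # Load exceeds every threshold: fall back to the highest frequency.
    return max(thresholds_mbps)


# 117 Mb/s is stated in the text; all other values are hypothetical.
EXAMPLE_THRESHOLDS_MBPS = {
    7.8: 117,
    15.6: 234,
    31.3: 468,
    62.5: 936,
    125.0: 1872,
}
```

With these placeholder thresholds, a load of 108 Mb/s maps to 7.8 MHz and a load of 228 Mb/s maps to 15.6 MHz, as in the example of Figure 18.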

The time stamp is recorded for each sample of the 3.3-V current data collected. After the operating frequency is scaled up and down between two different frequencies, the collected 3.3-V current data are exported to a file. Frequency switching results in a significant current change, so locating the starting and ending time stamps of that change determines the duration of the frequency switch. The transition time of frequency switching can be calculated with the following equation:

$$T_{t} = \frac{T_{e} - T_{s}}{764{,}000}$$

where $T_{t}$ is the transition time, $T_{s}$ is the starting time stamp for the significant increase or decrease of current, $T_{e}$ is the ending time stamp, and 764,000 denotes the sampling rate. In this equation, $T_{s}$ and $T_{e}$ can be tracked and obtained from the captured 3.3-V current data. Thus, $T_{t}$ can be calculated, and the results are shown in Table 7. The frequencies in the column of the table represent the operating frequencies the router switches from. The frequencies in the row represent the frequencies the router switches to. The transition times in the two directions differ because the readings of the 3.3-V current samples vary slightly as a result of noise. However, all of the transition times in the FSR are of the same order of magnitude, ranging from around 0.3 ms to around 0.4 ms, as expected. With control policies implemented in hardware, the FSR reduces the transition time by more than 80% compared to the transition time in the RR (2 ms).
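One way to locate the start and end of the current change in the exported samples is sketched below. It assumes the time stamps are sample indices, so that dividing their difference by the sampling rate gives seconds; the tolerance-based detection and all names are illustrative rather than the procedure actually used, and the sample values are synthetic.

```python
SAMPLE_RATE_HZ = 764_000  # sampling rate stated in the text


def find_transition(samples_a, before_a, after_a, tol_a):
    """Return (start, end) sample indices of the current change.

    start: first sample deviating from before_a by more than tol_a.
    end:   first later sample within tol_a of the settled level after_a.
    """
    start = next(i for i, s in enumerate(samples_a)
                 if abs(s - before_a) > tol_a)
    end = next(i for i in range(start, len(samples_a))
               if abs(samples_a[i] - after_a) <= tol_a)
    return start, end


def transition_time_s(start_index, end_index, rate_hz=SAMPLE_RATE_HZ):
    """Convert a span of sample indices into seconds."""
    return (end_index - start_index) / rate_hz


# Synthetic current trace (amperes), for illustration only.
trace = [0.80] * 5 + [0.82, 0.85, 0.88] + [0.90] * 5
start, end = find_transition(trace, before_a=0.80, after_a=0.90, tol_a=0.01)
```

On this synthetic trace the change spans sample indices 5 to 8. At 764,000 samples per second, a span of about 267 samples corresponds to roughly 0.35 ms, which is in the range reported for the FSR.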

Software is slow compared to dedicated hardware. Dedicated hardware makes an enormous difference in the speed of time-sensitive operations, providing a significant utilisation monitoring advantage over software. The frequency transition time in a software implementation of the control policy consists of the delay in software reading the buffer usage, frequency selection according to the control protocol and setting the appropriate frequency register, which involves communication between hardware and software through reading and writing registers. For example, in theory, the transition time in the ALR is reduced to 1 ms through a newly defined handshake mechanism. However, the control policy in the ALR is implemented by software-based utilisation monitoring and buffer thresholds, and the actual transition time ranges from 10 ms to 100 ms due to software constraints [1]. The transition time in the work [20], built on the NetFPGA reference router, is reported to be slightly longer (3.4 ms) than that in the reference router (2 ms) [20,32]. In the work [21], the transition time of adapting the link rates of an Ethernet port is reported to be approximately 2 s. However, the transition time of frequency scaling is not measured in that work.

The power savings from most green approaches are achieved at the cost of transition time overhead. The transition time is the time between the request for a new state and the actual state transition. Transition from one state to another can lead to performance degradation, especially in the case of transition from a lower performance state to a higher one. Longer transition times can result in higher network delay and even severe packet loss during the transition. A hardware implementation eliminates the delay in communication between hardware and software. The control policy of the FSR is implemented directly in hardware. This involves building hardware modules and adding corresponding software instructions to interface with the hardware modules. Results reveal that the transition time in the FSR is only around 0.3–0.4 ms, which is the hardware frequency transition time without the delay caused by communication between hardware and software.