Thermal Airborne Optical Sectioning

Abstract

1. Introduction

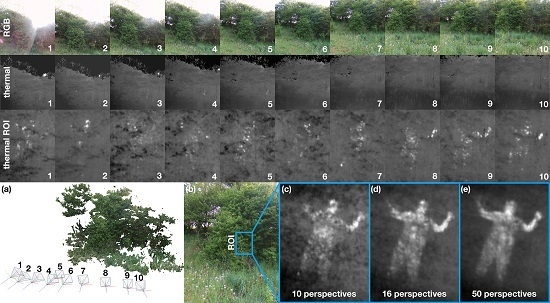

2. Results

3. Discussion

4. Materials and Methods

5. Conclusions

Supplementary Materials

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
| --- | --- |
| AOS | airborne optical sectioning |
| TAOS | thermal airborne optical sectioning |
| SA | synthetic aperture |
| RGB | red, green, blue |
| ROI | region of interest |
| fps | frames per second |
| MP | megapixel |
| FOV | field of view |
| GPU | graphics processing unit |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Kurmi, I.; Schedl, D.C.; Bimber, O. Thermal Airborne Optical Sectioning. Remote Sens. 2019, 11, 1668. https://doi.org/10.3390/rs11141668
