Web-Net: A Novel Nest Networks with Ultra-Hierarchical Sampling for Building Extraction from Aerial Imageries

Abstract

1. Introduction

1.1. Building Extraction with Machine Learning

1.2. Building Extraction with Deep Learning

1.3. The Motivation and Our Contribution

2. Related Work

2.1. Fully Convolution Methods

2.2. Encoder-Decoder Architectures

2.3. Nested Connected Architectures

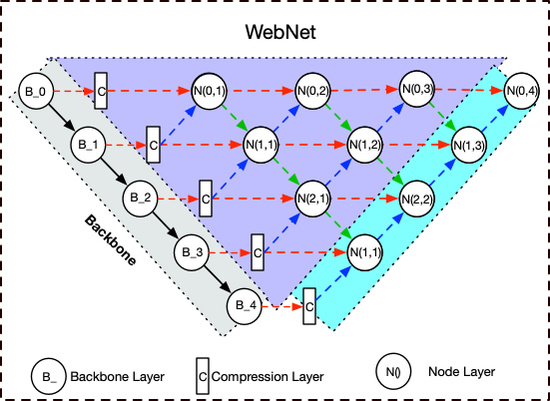

3. Proposed Method

3.1. Overview of the Proposed Networks

3.2. Node Layer and Ultra-Hierarchical Sampling Block

3.3. Dense Hierarchical Pathways and Deep Supervision

4. Experiments and Discussions

4.1. Training Details

4.1.1. Datasets

4.1.2. Metrics
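
The result tables in this article report two metrics, IoU (%) and Acc. (%). As a minimal sketch, assuming the usual definitions for binary building masks (IoU = TP / (TP + FP + FN) and pixel accuracy = (TP + TN) / all pixels), they could be computed as follows. The function names and the list-of-rows mask format are illustrative assumptions and are not taken from the authors' implementation.

```python
from typing import Sequence, Tuple

# A binary mask as rows of 0 (background) / 1 (building); format is an assumption.
Mask = Sequence[Sequence[int]]


def confusion_counts(pred: Mask, truth: Mask) -> Tuple[int, int, int, int]:
    """Return (TP, FP, FN, TN) for two binary masks of identical shape."""
    if len(pred) != len(truth):
        raise ValueError("masks must have the same number of rows")
    tp = fp = fn = tn = 0
    for pred_row, truth_row in zip(pred, truth):
        if len(pred_row) != len(truth_row):
            raise ValueError("mask rows must have the same length")
        for p, t in zip(pred_row, truth_row):
            if p and t:
                tp += 1  # building predicted, building present
            elif p:
                fp += 1  # building predicted, background present
            elif t:
                fn += 1  # background predicted, building present
            else:
                tn += 1  # background predicted, background present
    return tp, fp, fn, tn


def iou(pred: Mask, truth: Mask) -> float:
    """Intersection over union of the building class."""
    tp, fp, fn, _ = confusion_counts(pred, truth)
    union = tp + fp + fn
    # Convention (an assumption): two empty masks count as a perfect match.
    return tp / union if union else 1.0


def pixel_accuracy(pred: Mask, truth: Mask) -> float:
    """Fraction of pixels whose predicted label matches the ground truth."""
    tp, fp, fn, tn = confusion_counts(pred, truth)
    total = tp + fp + fn + tn
    if total == 0:
        raise ValueError("masks must contain at least one pixel")
    return (tp + tn) / total


if __name__ == "__main__":
    pred = [[1, 1, 0], [0, 1, 0]]
    truth = [[1, 0, 0], [0, 1, 1]]
    print(f"IoU  = {100 * iou(pred, truth):.2f}%")
    print(f"Acc. = {100 * pixel_accuracy(pred, truth):.2f}%")
```

Because background pixels dominate most aerial tiles, pixel accuracy tends to sit much closer to 100% than IoU does, which matches the narrow Acc. range and wider IoU range in the tables.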

4.1.3. Implementation Details

4.2. Ablation Evaluation

4.2.1. Backbone Encoder Evaluation

4.2.2. Ultra-Hierarchical Samplings Evaluation

4.2.3. Pruning and Deep Supervision Evaluation

4.3. Best Performance and Comparisons with Related Networks

4.3.1. Best Performance Model

4.3.2. Comparison Experiments on the Inria Aerial Dataset

4.3.3. Comparison Experiments on the WHU Dataset

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Xu, Y.; Wu, L.; Xie, Z.; Chen, Z. Building Extraction in Very High Resolution Remote Sensing Imagery Using Deep Learning and Guided Filters. Remote Sens. 2018, 10, 144.

2. Gao, L.; Shi, W.; Miao, Z.; Lv, Z. Method based on edge constraint and fast marching for road centerline extraction from very high-resolution remote sensing images. Remote Sens. 2018, 10, 900.

3. Audebert, N.; Le Saux, B.; Lefèvre, S. Segment-before-detect: Vehicle detection and classification through semantic segmentation of aerial images. Remote Sens. 2017, 9, 368.

4. Tuermer, S.; Kurz, F.; Reinartz, P.; Stilla, U. Airborne vehicle detection in dense urban areas using HoG features and disparity maps. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2013, 6, 2327–2337.

5. Cote, M.; Saeedi, P. Automatic rooftop extraction in nadir aerial imagery of suburban regions using corners and variational level set evolution. IEEE Trans. Geosci. Remote Sens. 2013, 51, 313–328.

6. Yang, Y.; Newsam, S. Comparing SIFT descriptors and Gabor texture features for classification of remote sensed imagery. In Proceedings of the 2008 15th IEEE International Conference on Image Processing, San Diego, CA, USA, 12–15 October 2008; pp. 1852–1855.

7. Lowe, D.G. Object recognition from local scale-invariant features. In Proceedings of the 7th IEEE International Conference on Computer Vision, Kerkyra, Greece, 20–27 September 1999; pp. 1150–1157.

8. Mountrakis, G.; Im, J.; Ogole, C. Support vector machines in remote sensing: A review. ISPRS J. Photogramm. 2011, 66, 247–259.

9. Maloof, M.A.; Langley, P.; Binford, T.O.; Nevatia, R.; Sage, S. Improved rooftop detection in aerial images with machine learning. Mach. Learn. 2003, 53, 157–191.

10. Senaras, C.; Ozay, M.; Vural, F.T.Y. Building detection with decision fusion. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2013, 6, 1295–1304.

11. Long, J.; Shelhamer, E.; Darrell, T. Fully convolutional networks for semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Boston, MA, USA, 8–10 June 2015; pp. 3431–3440.

12. Simonyan, K.; Zisserman, A. Very deep convolutional networks for large-scale image recognition. arXiv 2014, arXiv:1409.1556.

13. He, K.; Zhang, X.; Ren, S.; Sun, J. Deep residual learning for image recognition. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Las Vegas, NV, USA, 27–30 June 2016; pp. 770–778.

14. Szegedy, C.; Ioffe, S.; Vanhoucke, V.; Alemi, A.A. Inception-v4, inception-resnet and the impact of residual connections on learning. In Proceedings of the Thirty-First AAAI Conference on Artificial Intelligence, San Francisco, CA, USA, 4–9 February 2017.

15. Xie, S.; Girshick, R.; Dollár, P.; Tu, Z.; He, K. Aggregated residual transformations for deep neural networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 1492–1500.

16. Chollet, F. Xception: Deep learning with depthwise separable convolutions. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 1251–1258.

17. Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely connected convolutional networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 4700–4708.

18. Ronneberger, O.; Fischer, P.; Brox, T. U-net: Convolutional networks for biomedical image segmentation. In Proceedings of the International Conference on Medical Image Computing and Computer-Assisted Intervention, Munich, Germany, 5–9 October 2015; pp. 234–241.

19. Badrinarayanan, V.; Kendall, A.; Cipolla, R. Segnet: A deep convolutional encoder-decoder architecture for image segmentation. IEEE Trans. Pattern Anal. Mach. Intell. 2017, 39, 2481–2495.

20. Chen, L.-C.; Papandreou, G.; Kokkinos, I.; Murphy, K.; Yuille, A.L. Deeplab: Semantic image segmentation with deep convolutional nets, atrous convolution, and fully connected crfs. IEEE Trans. Pattern Anal. Mach. Intell. 2018, 40, 834–848.

21. Zhao, H.; Shi, J.; Qi, X.; Wang, X.; Jia, J. Pyramid scene parsing network. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 2881–2890.

22. Chen, L.-C.; Zhu, Y.; Papandreou, G.; Schroff, F.; Adam, H. Encoder-decoder with atrous separable convolution for semantic image segmentation. In Proceedings of the European Conference on Computer Vision (ECCV), Munich, Germany, 8–14 September 2018; pp. 801–818.

23. Huang, Z.; Cheng, G.; Wang, H.; Li, H.; Shi, L.; Pan, C. Building extraction from multi-source remote sensing images via deep deconvolution neural networks. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 1835–1838.

24. Zhong, Z.; Li, J.; Cui, W.; Jiang, H. Fully convolutional networks for building and road extraction: Preliminary results. In Proceedings of the 2016 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Beijing, China, 10–15 July 2016; pp. 1591–1594.

25. Bittner, K.; Cui, S.; Reinartz, P. Building Extraction from Remote Sensing Data Using fully Convolutional networks. In Proceedings of the International Archives of the Photogrammetry, Remote Sensing & Spatial Information Sciences, Hannover, Germany, 6–9 June 2017.

26. Alshehhi, R.; Marpu, P.R.; Woon, W.L.; Dalla Mura, M. Simultaneous extraction of roads and buildings in remote sensing imagery with convolutional neural networks. ISPRS J. Photogramm. 2017, 130, 139–149.

27. Wu, G.; Shao, X.; Guo, Z.; Chen, Q.; Yuan, W.; Shi, X.; Xu, Y.; Shibasaki, R. Automatic building segmentation of aerial imagery using multi-constraint fully convolutional networks. Remote Sens. 2018, 10, 407.

28. Chen, K.; Fu, K.; Yan, M.; Gao, X.; Sun, X.; Wei, X. Semantic segmentation of aerial images with shuffling convolutional neural networks. IEEE Geosci. Remote Sens. Lett. 2018, 15, 173–177.

29. Yang, H.; Wu, P.; Yao, X.; Wu, Y.; Wang, B.; Xu, Y. Building Extraction in Very High Resolution Imagery by Dense-Attention Networks. Remote Sens. 2018, 10, 1768.

30. Mou, L.; Zhu, X.X. RiFCN: Recurrent network in fully convolutional network for semantic segmentation of high resolution remote sensing images. arXiv 2018, arXiv:1805.02091.

31. Mou, L.; Ghamisi, P.; Zhu, X.X. Deep recurrent neural networks for hyperspectral image classification. IEEE Trans. Geosci. Remote Sens. 2017, 55, 3639–3655.

32. Audebert, N.; Le Saux, B.; Lefèvre, S. Beyond RGB: Very high resolution urban remote sensing with multimodal deep networks. ISPRS J. Photogramm. 2018, 140, 20–32.

33. Pan, B.; Shi, Z.; Xu, X. MugNet: Deep learning for hyperspectral image classification using limited samples. ISPRS J. Photogramm. 2018, 145, 108–119.

34. Bittner, K.; Adam, F.; Cui, S.; Körner, M.; Reinartz, P. Building Footprint Extraction From VHR Remote Sensing Images Combined with Normalized DSMs Using Fused Fully Convolutional Networks. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2018, 11, 2615–2629.

35. Shrestha, S.; Vanneschi, L. Improved Fully Convolutional Network with Conditional Random Fields for Building Extraction. Remote Sens. 2018, 10, 1135.

36. Wang, Y.; Liang, B.; Ding, M.; Li, J. Dense Semantic Labelling with Atrous Spatial Pyramid Pooling and Decoder for High-Resolution Remote Sensing Imagery. Remote Sens. 2019, 11, 20.

37. Maggiori, E.; Tarabalka, Y.; Charpiat, G.; Alliez, P. Can semantic labelling methods generalize to any city? the inria aerial image labelling benchmark. In Proceedings of the 2017 IEEE International Geoscience and Remote Sensing Symposium (IGARSS), Fort Worth, TX, USA, 23–28 July 2017; pp. 3226–3229.

38. Fourure, D.; Emonet, R.; Fromont, E.; Muselet, D.; Tremeau, A.; Wolf, C. Residual conv-deconv grid network for semantic segmentation. arXiv 2017, arXiv:1707.07958.

39. Pohlen, T.; Hermans, A.; Mathias, M.; Leibe, B. Full-resolution residual networks for semantic segmentation in street scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Honolulu, HI, USA, 22–25 July 2017; pp. 4151–4160.

40. Zhou, Z.; Siddiquee, M.M.R.; Tajbakhsh, N.; Liang, J. Unet++: A nested u-net architecture for medical image segmentation. In Deep Learning in Medical Image Analysis and Multimodal Learning for Clinical Decision Support; Springer: Berlin/Heidelberg, Germany, 2018; pp. 3–11.

41. Wang, L.; Lee, C.-Y.; Tu, Z.; Lazebnik, S. Training deeper convolutional networks with deep supervision. arXiv 2015, arXiv:1505.02496.

42. Hu, J.; Shen, L.; Sun, G. Squeeze-and-excitation networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 7132–7141.

43. Wang, P.; Chen, P.; Yuan, Y.; Liu, D.; Huang, Z.; Hou, X.; Cottrell, G. Understanding convolution for semantic segmentation. In Proceedings of the 2018 IEEE Winter Conference on Applications of Computer Vision (WACV), Lake Tahoe, CA, USA, 12–15 March 2018; pp. 1451–1460.

44. Ji, S.; Wei, S.; Lu, M. Fully Convolutional Networks for Multisource Building Extraction From an Open Aerial and Satellite Imagery Data Set. IEEE Trans. Geosci. Remote Sens. 2018, 574, 574–586.

45. Paszke, A.; Gross, S.; Chintala, S.; Chanan, G.; Yang, E.; DeVito, Z.; Lin, Z.; Desmaison, A.; Antiga, L.; Lerer, A. Automatic differentiation in pytorch. In Proceedings of the NIPS 2017 Autodiff Workshop: The Future of Gradient-based Machine Learning Software and Techniques, Long Beach, CA, USA, 9 December 2017.

46. Deng, J.; Dong, W.; Socher, R.; Li, L.-J.; Li, K.; Li, F.-F. Imagenet: A large-scale hierarchical image database. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Miami, FL, USA, 20–25 June 2009.

47. Kingma, D.P.; Ba, J. Adam: A method for stochastic optimization. arXiv 2014, arXiv:1412.6980.

48. Pleiss, G.; Chen, D.; Huang, G.; Li, T.; van der Maaten, L.; Weinberger, K.Q. Memory-efficient implementation of densenets. arXiv 2017, arXiv:1707.06990.

49. Goodfellow, I.; Pouget-Abadie, J.; Mirza, M.; Xu, B.; Warde-Farley, D.; Ozair, S.; Courville, A.; Bengio, Y. Generative adversarial nets. In Proceedings of the Advances in Neural Information Processing Systems, Montréal, Canada, 8–13 December 2014; pp. 2672–2680.

50. Chen, Q.; Wang, L.; Wu, Y.F.; Wu, G.M.; Guo, Z.L.; Waslander, S.L. Aerial imagery for roof segmentation: A large-scale dataset towards automatic mapping of buildings. ISPRS J. Photogramm. 2019, 147, 42–55.

51. He, K.; Gkioxari, G.; Dollár, P.; Girshick, R. Mask R-CNN. In Proceedings of the IEEE International Conference on Computer Vision, Honolulu, HI, USA, 22–25 July 2017; pp. 2961–2969.

52. Bischke, B.; Helber, P.; Folz, J.; Borth, D.; Dengel, A. Multi-task learning for segmentation of building footprints with deep neural networks. arXiv 2017, arXiv:1709.05932.

53. Lu, K.; Sun, Y.; Ong, S.-H. Dual-Resolution U-Net: Building Extraction from Aerial Images. In Proceedings of the 2018 24th International Conference on Pattern Recognition (ICPR), Beijing, China, 20–24 August 2018; pp. 489–494.

54. Khalel, A.; El-Saban, M. Automatic pixelwise object labelling for aerial imagery using stacked u-nets. arXiv 2018, arXiv:1803.04953.

55. Li, X.; Yao, X.; Fang, Y. Building-A-Nets: Robust Building Extraction From High-Resolution Remote Sensing Images With Adversarial Networks. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2018, 11, 3680–3687.

| Backbones | IoU (%) | Acc. (%) | TT ¹ (min) | MS ² (GB) |
|---|---|---|---|---|
| VGG-16 | 75.10 | 96.10 | 50 | 6.86 |
| Res-50 | 75.33 | 96.06 | 62 | 5.88 |
| Res-101 | 75.58 | 96.17 | 70 | 7.07 |
| ResNext-50 | 76.23 | 96.25 | 52 | 5.91 |
| ResNext-101 | 76.39 | 96.30 | 87 | 7.68 |
| Dense-121 | 75.93 | 96.20 | - | - |
| Dense-161 | 76.58 | 96.38 | - | - |
| Xception | 75.58 | 96.14 | 58 | 7.72 |

| Models | IoU (%) | Acc. (%) |
|---|---|---|
| Max-Bilinear | 75.96 | 96.19 |
| Avg-Bilinear | 75.82 | 96.16 |
| Max-Deconv | 76.20 | 96.25 |
| Avg-Deconv | 76.23 | 96.25 |
| UHS | 76.50 | 96.33 |

| Models | IoU (%) | Acc. (%) | Time (s) |
|---|---|---|---|
| Web-Net- | 67.90 | 94.73 | 15.4 |
| Web-Net- | 74.02 | 95.94 | 18.7 |
| Web-Net- | 75.20 | 96.14 | 23.2 |
| Web-Net- | 76.50 | 96.33 | 28.8 |

| Web-Net | | | | | | |
|---|---|---|---|---|---|---|
| Unet++ (ResNext50 × 32 × 4d) | ✓ | ✓ | ✓ | ✓ | ✓ | ✓ |
| Web-Net (ResNext50 × 32 × 4d) | ✓ | ✓ | ✓ | ✓ | ✓ | |
| Pretrained | ✓ | ✓ | ✓ | ✓ | | |
| DS ¹ | ✓ | ✓ | ✓ | | | |
| Web-Net (ResNext101 × 64 × 4d) | ✓ | ✓ | | | | |
| Batch = 16 | ✓ | | | | | |
| Acc. (%) | 95.79 | 96.33 | 96.65 | 96.72 | 96.86 | 96.97 |
| IoU (%) | 73.32 | 76.50 | 78.37 | 78.69 | 79.52 | 80.10 |

| Methods | Acc. (%) | IoU (%) | Time (s) |
|---|---|---|---|
| FCN [50] | 92.79 | 53.82 | - |
| Mask R-CNN [51] | 92.49 | 59.53 | - |
| MLP [50] | 94.42 | 64.67 | 20.4 |
| SegNet (Single-Loss) [52] | 95.17 | 70.14 | 26.0 |
| SegNet (Multi-Task Loss) [52] | 95.73 | 73.00 | - |
| Unet++ (ResNext-50) [40] | 95.79 | 73.32 | 26.5 |
| RiFCN [30] | 95.82 | 74.00 | - |
| Dual-Resolution U-Nets [53] | - | 74.22 | - |
| 2-levels U-Nets [54] | 96.05 | 74.55 | 208.8 |
| Building-A-Net (Dense 52 layers) [55] | 96.01 | 74.75 | - |
| Proposed (ResNext-50) | 96.33 | 76.50 | 28.8 |
| Building-A-Net (Dense 152 layers pretrained) [55] | 96.71 | 78.73 | 150.5 |
| Proposed (ResNext-101 Pretrained) | 96.97 | 80.10 | 56.5 |

| Methods | Metric | Austin | Chicago | Kitsap County | Western Tyrol | Vienna | Overall |
|---|---|---|---|---|---|---|---|
| SegNet (Single-Loss) [52] | IoU | 74.81 | 52.83 | 68.06 | 65.68 | 72.90 | 70.14 |
| | Acc. | 92.52 | 98.65 | 97.28 | 91.36 | 96.04 | 95.17 |
| SegNet (Multi-Task Loss) [52] | IoU | 76.76 | 67.06 | 73.30 | 66.91 | 76.68 | 73.00 |
| | Acc. | 93.21 | 99.25 | 97.84 | 91.71 | 96.61 | 95.73 |
| Unet++ (ResNext-50) [40] | IoU | 74.69 | 67.17 | 64.10 | 75.06 | 78.04 | 73.32 |
| | Acc. | 96.28 | 91.88 | 99.21 | 97.99 | 93.61 | 95.79 |
| RiFCN [30] | IoU | 76.84 | 67.45 | 63.95 | 73.19 | 79.18 | 74.00 |
| | Acc. | 96.50 | 91.76 | 99.14 | 97.75 | 93.95 | 95.82 |
| Proposed (ResNext-101 Pretrained) | IoU | 82.49 | 73.90 | 70.71 | 83.72 | 83.49 | 80.10 |
| | Acc. | 97.47 | 93.90 | 99.35 | 98.73 | 95.35 | 96.97 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhang, Y.; Gong, W.; Sun, J.; Li, W. Web-Net: A Novel Nest Networks with Ultra-Hierarchical Sampling for Building Extraction from Aerial Imageries. Remote Sens. 2019, 11, 1897. https://doi.org/10.3390/rs11161897