Precise Crop Classification Using Spectral-Spatial-Location Fusion Based on Conditional Random Fields for UAV-Borne Hyperspectral Remote Sensing Imagery

Abstract

1. Introduction

2. Method

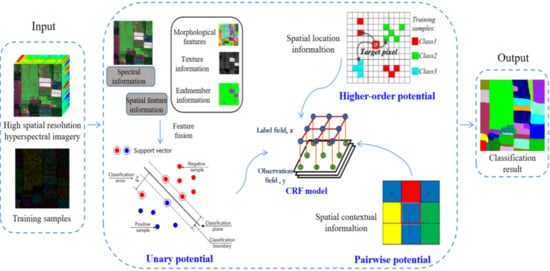

2.1. The SSLF-CRF Model

2.1.1. Unary Potential

2.1.2. Pairwise Potential

2.1.3. Higher-Order Potential

2.2. Algorithm Flowchart

- (1) Given the characteristics of the high-spatial-resolution hyperspectral data acquired by the UAV, the original image is first subjected to a minimum noise fraction (MNF) rotation. The noise covariance matrix is used to decorrelate and rescale the noise in the data, so that the noise in the transformed data has unit variance and no band-to-band correlation. The resulting components are ordered by signal-to-noise ratio, which allows noisy and irrelevant bands to be discarded.

- (2) The unary potential function models the spectral and spatial feature information. Spectral information is the most basic cue for distinguishing land-cover classes, and spatial feature information adds further detail for classification. Representative features are selected from mathematical morphology, spatial texture, and mixed pixel decomposition, and are then combined with the spectral information of each pixel to form a spectral-spatial fusion feature vector. An SVM classifier models the relationship between the labels and the spectral-spatial fusion feature vector, and the probability of each label is estimated independently for each pixel from its feature vector.

- (3) The spatial smoothing term, modeled by the pairwise potential function, captures the spatial contextual information between each pixel and its neighborhood through the label field and the observation field. In line with spatial correlation theory, it encourages neighboring pixels in homogeneous regions to share the same class label and penalizes class discontinuities within local regions.

- (4) Because pixels of the same land-cover class within a local region tend to have similar spectral properties, the higher-order potential function explicitly models spatial location information by considering the training samples that are spatially closest to the target pixel. It exploits the non-local similarity of land-cover classes to provide auxiliary information for classes that are difficult to distinguish. Unlike the spectral, spatial feature, and spatial contextual information, the spatial location information uses the regularity of the image to model the non-local similarity of land-cover types through their locations.
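Step (1) can be illustrated with a minimal NumPy sketch of an MNF rotation, implemented as noise whitening followed by principal component analysis. It assumes that noise can be estimated from differences between horizontally adjacent pixels (a common shift-difference estimator; which noise estimator the authors used is not stated here), and the function names `estimate_noise_cov` and `mnf_rotation` are hypothetical.

```python
import numpy as np


def estimate_noise_cov(cube):
    """Noise covariance from horizontal shift differences.

    Assumption: the signal is locally smooth while the noise is uncorrelated
    between neighboring pixels, so adjacent-pixel differences are mostly noise.
    """
    diff = cube[:, 1:, :] - cube[:, :-1, :]
    diff = diff.reshape(-1, cube.shape[2])
    # The difference of two independent noise samples has twice the noise variance.
    return np.cov(diff, rowvar=False) / 2.0


def mnf_rotation(cube, n_components=None):
    """Return MNF components (rows, cols, k), their eigenvalues, and the transform."""
    rows, cols, bands = cube.shape
    X = cube.reshape(-1, bands).astype(float)
    X = X - X.mean(axis=0)

    # 1) Noise whitening: rotate and rescale so the noise has unit variance
    #    and no band-to-band correlation.
    n_vals, n_vecs = np.linalg.eigh(estimate_noise_cov(cube))
    whiten = n_vecs / np.sqrt(np.maximum(n_vals, 1e-12))

    # 2) PCA of the noise-whitened data. Sorting by decreasing variance in the
    #    whitened space orders the components by decreasing signal-to-noise ratio.
    s_vals, s_vecs = np.linalg.eigh(np.cov(X @ whiten, rowvar=False))
    order = np.argsort(s_vals)[::-1]
    transform = whiten @ s_vecs[:, order]
    eigvals = s_vals[order]

    k = bands if n_components is None else n_components
    comps = (X @ transform[:, :k]).reshape(rows, cols, k)
    return comps, eigvals[:k], transform[:, :k]
```

Keeping only the first `k` components discards the low-SNR end of the spectrum, which is the noise separation described in step (1).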
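Steps (2) and (3) together define a pairwise CRF energy. The sketch below is not the SSLF-CRF implementation: it assumes that the unary potential is the negative log of per-pixel class probabilities (such as SVM probability estimates), assumes a contrast-sensitive Potts model on the 4-neighborhood for the pairwise potential, and uses iterated conditional modes (ICM) as a simple stand-in for the inference algorithm. The parameters `lam` and `beta` and the function names are hypothetical.

```python
import numpy as np


def unary_from_probs(probs, eps=1e-10):
    """Unary potential: negative log of per-pixel class probabilities,
    shape (rows, cols, n_classes)."""
    return -np.log(np.clip(probs, eps, 1.0))


def icm_potts(probs, features, lam=1.0, beta=None, n_iter=5):
    """Approximate MAP labeling with iterated conditional modes (ICM).

    Pairwise term (assumption): lam * exp(-beta * ||x_i - x_j||^2) * [y_i != y_j]
    on the 4-neighborhood, where x are the per-pixel feature vectors.
    """
    rows, cols, _ = probs.shape
    unary = unary_from_probs(probs)
    labels = unary.argmin(axis=2)  # start from the unary-only (pixel-wise) decision
    offsets = [(-1, 0), (1, 0), (0, -1), (0, 1)]

    if beta is None:
        # Common heuristic: beta = 1 / (2 * mean squared neighbor difference).
        d = np.sum((features[:, 1:] - features[:, :-1]) ** 2, axis=2)
        beta = 1.0 / (2.0 * d.mean() + 1e-12)

    for _ in range(n_iter):
        changed = 0
        for r in range(rows):
            for c in range(cols):
                cost = unary[r, c].copy()
                for dr, dc in offsets:
                    rr, cc = r + dr, c + dc
                    if 0 <= rr < rows and 0 <= cc < cols:
                        diff = features[r, c] - features[rr, cc]
                        w = lam * np.exp(-beta * np.dot(diff, diff))
                        # Penalize every label except the neighbor's current label.
                        cost += w
                        cost[labels[rr, cc]] -= w
                best = int(cost.argmin())
                if best != labels[r, c]:
                    labels[r, c] = best
                    changed += 1
        if changed == 0:
            break
    return labels
```

Because the Potts weight shrinks where neighboring feature vectors differ strongly, smoothing acts mainly inside homogeneous fields: an isolated low-confidence pixel is relabeled to match its neighbors, while boundaries between spectrally distinct fields are largely preserved.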
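Step (4) can be sketched as a per-pixel cost derived from the training samples nearest to each pixel in image coordinates. This is a hypothetical formulation for illustration only, not the paper's higher-order potential: it counts the labels of the `k` spatially nearest training samples and converts the smoothed label frequencies into negative log costs. The brute-force distance matrix is only suitable for small images; at full scene size a spatial index such as SciPy's `cKDTree` would be the usual choice.

```python
import numpy as np


def location_potential(shape, train_rc, train_labels, n_classes, k=5, eps=1e-3):
    """Hypothetical spatial-location cost from the k nearest training samples.

    shape: (rows, cols) of the image.
    train_rc: (M, 2) row/column coordinates of the training samples.
    train_labels: (M,) integer class labels of the training samples.
    Returns costs of shape (rows, cols, n_classes).
    """
    rows, cols = shape
    rr, cc = np.mgrid[0:rows, 0:cols]
    pix = np.stack([rr.ravel(), cc.ravel()], axis=1).astype(float)  # (N, 2)

    # Squared Euclidean distance from every pixel to every training sample.
    d2 = ((pix[:, None, :] - train_rc[None, :, :].astype(float)) ** 2).sum(axis=2)
    k = min(k, len(train_labels))
    nearest = np.argsort(d2, axis=1)[:, :k]  # (N, k) indices of nearest samples

    # Count how many of the k nearest samples carry each label.
    votes = np.zeros((pix.shape[0], n_classes))
    for j in range(k):
        votes[np.arange(pix.shape[0]), train_labels[nearest[:, j]]] += 1.0

    # Smooth so that unseen labels get a large but finite cost.
    frac = (votes + eps) / (k + n_classes * eps)
    return -np.log(frac).reshape(rows, cols, n_classes)
```

The resulting array can be added, with a weight, to the per-pixel unary costs before inference, so that classes which are hard to separate spectrally are nudged toward the labels of nearby training samples.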

3. Experimental Results

3.1. Study Areas

3.2. Data Collection

3.3. Preprocessing of the UAV Images

- (1) The first step was laboratory calibration of the sensor, which converts the output signal of each sensor element into an accurate radiance value.

- (2) The second step was geometric correction. The UAV carries the sensors together with a position and orientation system (POS) that combines differential GPS and an inertial measurement unit (IMU) to provide the position and attitude parameters of the sensor. For geometric correction, the POS data and the hyperspectral image acquisition must share a unified time reference so that they can be matched. The correspondence between image pixels and ground coordinates can then be established by coordinate system transformation. Finally, the corrected pixels were resampled to reconstruct the corrected image.

- (3) The third step was radiometric correction. We used a three-level calibration blanket with reflectances of 11%, 32%, and 56%. Before the UAV took off, the calibration blanket was placed in a suitable position in the study area to ensure that the study area and the calibration blanket appeared in the same image. Three sets of ROIs were selected in the three reflectance regions of the calibration blanket using ENVI, and the average radiance of each ROI set was calculated. Finally, a linear regression between the known reflectances of the calibration blanket and the measured radiance values was performed in IDL, according to Equation (25).

- (4) The fourth step was test sample production. GPS information on linear features was collected where the data were acquired, and ground markers were recorded synchronously with reference to the corresponding features to generate the ground-truth data.
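The linear regression in step (3) corresponds to the empirical line method. The sketch below assumes a per-band model of the form reflectance = gain × radiance + offset, fitted by least squares to the mean ROI radiances of the three calibration panels; Equation (25) itself is not reproduced here, and the function names are hypothetical.

```python
import numpy as np


def fit_empirical_line(radiance, reflectance):
    """Per-band least-squares fit of reflectance = gain * radiance + offset.

    radiance: (n_panels, bands) mean ROI radiance of each calibration panel.
    reflectance: (n_panels,) nominal panel reflectance, e.g. [0.11, 0.32, 0.56],
        or (n_panels, bands) if per-band panel spectra are available.
    """
    radiance = np.asarray(radiance, dtype=float)
    refl = np.asarray(reflectance, dtype=float)
    if refl.ndim == 1:
        # Assumption: flat panel reflectance, repeated for every band.
        refl = np.repeat(refl[:, None], radiance.shape[1], axis=1)

    bands = radiance.shape[1]
    gains = np.empty(bands)
    offsets = np.empty(bands)
    for b in range(bands):
        # np.polyfit with deg=1 returns [slope, intercept].
        gains[b], offsets[b] = np.polyfit(radiance[:, b], refl[:, b], deg=1)
    return gains, offsets


def apply_empirical_line(cube, gains, offsets):
    """Convert a radiance cube (rows, cols, bands) to reflectance."""
    return cube * gains + offsets
```

With three panels spanning dark, medium, and bright reflectance, each band's line is constrained across most of the dynamic range of the scene, which is the practical reason for using a multi-level blanket rather than a single panel.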

3.4. Experimental Description

3.5. Experiment 1: Hanchuan Dataset

3.6. Experiment 2: Honghu Dataset

3.7. Sensitivity Analysis for the Training Sample Size

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Parameter | Value | Parameter | Value |
|---|---|---|---|
| Wavelength range | 400–1000 nm | Field of view | 33°, 22°, 16° |
| Number of spectral channels | 270 | Storage | 480 GB |
| Lens focal length | 8 mm, 12 mm, 17 mm | Camera type | CMOS |
| Operating temperature | 0–50 °C | Weight | <0.6 kg (without lens) |

| Class | Training Samples | Test Samples |
|---|---|---|
| Red roof | 65 | 6525 |
| Gray roof | 48 | 4766 |
| Tree | 117 | 11664 |
| Road | 72 | 7138 |
| Strawberry | 226 | 22402 |
| Pea | 110 | 10937 |
| Soy | 13 | 1322 |
| Shadow | 763 | 75609 |
| Iron sheet | 10 | 1006 |

| Class (accuracy, %) | SVM | MS | SVRFMC | DPSCRF | MSVC | SSF-CRF | SSLF-CRF |
|---|---|---|---|---|---|---|---|
| Red roof | 49.72 | 48.89 | 64.75 | 49.96 | 67.43 | 82.16 | 84.08 |
| Tree | 67.30 | 73.95 | 92.47 | 80.38 | 84.33 | 96.12 | 97.12 |
| Road | 65.07 | 66.77 | 74.91 | 62.58 | 75.39 | 76.42 | 82.57 |
| Strawberry | 94.55 | 95.37 | 97.54 | 96.89 | 95.74 | 98.00 | 97.96 |
| Pea | 64.12 | 65.49 | 79.55 | 67.51 | 78.37 | 91.66 | 92.90 |
| Soy | 35.78 | 29.95 | 47.81 | 13.92 | 78.67 | 89.26 | 86.46 |
| Shadow | 97.19 | 97.41 | 98.84 | 97.53 | 97.83 | 98.07 | 98.04 |
| Gray roof | 53.90 | 53.67 | 74.21 | 64.06 | 72.05 | 76.88 | 82.27 |
| Iron sheet | 42.25 | 43.54 | 22.07 | 37.57 | 43.84 | 71.97 | 69.88 |
| OA | 85.51 | 86.41 | 91.98 | 87.40 | 90.91 | 94.60 | 95.29 |
| Kappa | 0.7757 | 0.7890 | 0.8760 | 0.8043 | 0.8607 | 0.9177 | 0.9286 |
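The overall accuracy (OA) and Kappa coefficient reported in the accuracy tables are standard confusion-matrix statistics. A minimal sketch of how they can be computed follows; treating the per-class accuracy as producer's accuracy (correctly classified pixels divided by the reference pixels of that class) is an assumption, and the function names are hypothetical.

```python
import numpy as np


def confusion_matrix(y_true, y_pred, n_classes):
    """Rows: reference (ground-truth) class; columns: predicted class."""
    cm = np.zeros((n_classes, n_classes), dtype=np.int64)
    np.add.at(cm, (y_true, y_pred), 1)
    return cm


def accuracy_metrics(cm):
    """Return OA, Cohen's Kappa, and per-class (producer's) accuracy."""
    cm = cm.astype(float)
    total = cm.sum()
    oa = np.trace(cm) / total
    # Expected chance agreement from the reference and predicted marginals.
    pe = (cm.sum(axis=1) @ cm.sum(axis=0)) / total ** 2
    kappa = (oa - pe) / (1.0 - pe)
    # Assumption: per-class accuracy = correct pixels / reference pixels of the class.
    per_class = np.diag(cm) / cm.sum(axis=1)
    return oa, kappa, per_class
```

Because OA weights every test pixel equally, classes with many test samples (such as Shadow in the Hanchuan dataset) dominate it, whereas Kappa discounts the agreement expected by chance from the class marginals.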

| Class | Training Samples | Test Samples | Class | Training Samples | Test Samples |
|---|---|---|---|---|---|
| Red roof | 22 | 2182 | Brassica chinensis | 72 | 7181 |
| Bare soil | 118 | 11692 | Small Brassica chinensis | 159 | 15769 |
| Cotton | 14 | 1410 | Lactuca sativa | 52 | 5220 |
| Rape | 379 | 37541 | Celtuce | 10 | 993 |
| Chinese cabbage | 107 | 10688 | Film-covered lettuce | 72 | 7191 |
| Pakchoi | 40 | 4015 | Romaine lettuce | 30 | 2981 |
| Cabbage | 103 | 10204 | Carrot | 27 | 2766 |
| Tuber mustard | 114 | 11327 | White radish | 40 | 4042 |
| Brassica parachinensis | 63 | 6240 | Sprouting garlic | 20 | 2046 |

| Class (accuracy, %) | SVM | MS | SVRFMC | DPSCRF | MSVC | SSF-CRF | SSLF-CRF |
|---|---|---|---|---|---|---|---|
| Red roof | 77.59 | 93.77 | 99.40 | 86.16 | 89.18 | 98.49 | 98.53 |
| Bare soil | 93.86 | 94.97 | 98.12 | 96.07 | 94.02 | 99.66 | 99.82 |
| Cotton | 83.55 | 95.89 | 98.58 | 97.09 | 91.77 | 99.01 | 99.08 |
| Rape | 96.19 | 98.90 | 99.80 | 98.11 | 98.73 | 99.91 | 99.91 |
| Chinese cabbage | 88.00 | 94.60 | 99.00 | 93.86 | 93.04 | 99.44 | 99.46 |
| Pakchoi | 1.79 | 14.92 | 13.87 | 3.76 | 10.76 | 87.50 | 87.90 |
| Cabbage | 94.13 | 97.28 | 99.30 | 97.29 | 96.32 | 99.57 | 99.64 |
| Tuber mustard | 63.15 | 77.96 | 90.17 | 80.52 | 70.80 | 98.54 | 98.75 |
| Brassica parachinensis | 62.36 | 72.72 | 93.69 | 83.51 | 67.32 | 97.63 | 97.63 |
| Brassica chinensis | 39.02 | 66.02 | 75.20 | 34.38 | 65.76 | 98.45 | 99.09 |
| Small Brassica chinensis | 77.68 | 82.67 | 92.68 | 84.31 | 83.46 | 94.98 | 95.03 |
| Lactuca sativa | 71.63 | 76.38 | 85.75 | 74.75 | 80.65 | 97.18 | 97.28 |
| Celtuce | 42.30 | 68.98 | 87.51 | 46.02 | 71.40 | 78.15 | 78.45 |
| Film-covered lettuce | 88.65 | 96.37 | 98.69 | 97.68 | 95.61 | 99.74 | 99.75 |
| Romaine lettuce | 31.23 | 36.30 | 27.31 | 8.45 | 43.17 | 95.64 | 96.04 |
| Carrot | 34.89 | 48.48 | 82.43 | 58.68 | 60.48 | 95.41 | 96.28 |
| White radish | 51.31 | 72.64 | 89.46 | 59.35 | 78.33 | 92.45 | 92.75 |
| Sprouting garlic | 39.20 | 61.29 | 82.94 | 21.80 | 71.16 | 97.21 | 97.17 |
| OA | 76.97 | 84.77 | 91.08 | 81.97 | 84.32 | 97.95 | 98.07 |
| Kappa | 0.7367 | 0.8262 | 0.8985 | 0.7936 | 0.8217 | 0.9768 | 0.9782 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wei, L.; Yu, M.; Liang, Y.; Yuan, Z.; Huang, C.; Li, R.; Yu, Y. Precise Crop Classification Using Spectral-Spatial-Location Fusion Based on Conditional Random Fields for UAV-Borne Hyperspectral Remote Sensing Imagery. Remote Sens. 2019, 11, 2011. https://doi.org/10.3390/rs11172011