A Review on IoT Deep Learning UAV Systems for Autonomous Obstacle Detection and Collision Avoidance

Abstract

1. Introduction

- The most relevant DL techniques for autonomous collision avoidance are reviewed, as well as their application to UAV systems.

- The latest DL datasets that can be used for testing collision avoidance techniques on DL-UAV systems are described.

- The hardware and communications architecture of the most recent DL-UAVs are thoroughly detailed in order to allow future researchers to design their own systems.

- The main open challenges and current technical limitations are enumerated.

2. Related Work

3. Deep Learning in the Context of Autonomous Collision Avoidance

3.1. On the Application of DL to UAVs

3.2. Datasets

- The KITTI online benchmark [75] is a widely used outdoor dataset that contains stereo grayscale and color video, 3D LIDAR, inertial, and GPS navigation data for depth estimation, depth completion, or odometry estimation.

- IDSIA forest trail dataset [76] includes footage of hikes recorded by three cameras to predict view orientation (left/center/right) throughout a large variety of trails.

- Udacity [62] provides over 70,000 images of car driving distributed over six experiments, which include timestamped images from three cameras (left, central, right) and the data from different sensors (e.g., an IMU, GPS, gear, brake, throttle, steering angles, and speed).

- UAV crash dataset [49] focuses on representing the different ways in which a UAV can crash. It contains 11,500 trajectories of collisions in 20 diverse indoor environments collected over 40 flying hours.

- Oxford RobotCar Dataset [77] provides data from six cameras, LIDAR, GPS, and inertial navigation captured from 100 repetitions of a consistent route over a period of over a year. This dataset captures many different combinations of weather, traffic, and pedestrians, along with longer-term changes such as construction and roadworks.

- SYNTHIA [78] is a synthetic dataset in the context of driving scenarios that consists of a collection of photo-realistic frames rendered from a virtual city, including diverse scenes, a variety of dynamic objects like cars, pedestrians, and cyclists, and different lighting and weather conditions. The sensors involved in the dataset include eight RGB cameras that form a binocular 360° camera and eight depth sensors.

- AirSim [60] is a simulation environment that allows for simulating UAV trajectories in different scenarios and conditions, producing raw images, depth maps, and the data that represent the UAV trajectories.
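As an illustration of how a three-camera recording such as the IDSIA forest trail dataset can yield left/center/right labels without manual annotation, the following minimal sketch labels every frame according to the camera that captured it. It assumes that each camera contributes exactly one class; the camera names, the `LabeledFrame` type, and the helper functions are hypothetical and are not taken from the dataset tooling.

```python
from dataclasses import dataclass

# Hypothetical camera identifiers mapped to the orientation class they provide.
CAMERA_TO_LABEL = {"left_cam": "left", "center_cam": "center", "right_cam": "right"}


@dataclass(frozen=True)
class LabeledFrame:
    path: str   # location of the image file
    label: str  # "left", "center" or "right"


def label_frames(frames_by_camera):
    """Attach an orientation label to every frame based on its source camera."""
    labeled = []
    for camera, paths in frames_by_camera.items():
        label = CAMERA_TO_LABEL[camera]
        labeled.extend(LabeledFrame(path, label) for path in paths)
    return labeled


def class_counts(labeled):
    """Count frames per class, which gives a quick check of class balance."""
    counts = {}
    for frame in labeled:
        counts[frame.label] = counts.get(frame.label, 0) + 1
    return counts
```

Counting the frames per class is a cheap first check before training, since an unbalanced split between the three orientations would bias the classifier.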

4. DL-UAV Hardware and Communications Architecture

4.1. Typical Deep Learning UAV System Architecture

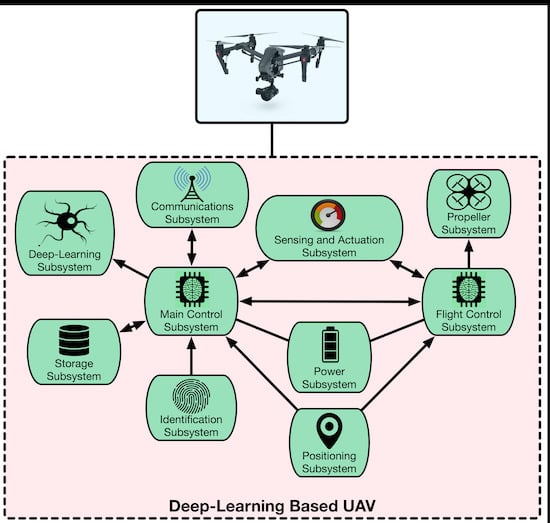

- The layer at the bottom is the UAV layer, which includes the aerial vehicles and their subsystems:

  - Propeller subsystem: it controls the UAV propellers through the motors that drive them.

  - Main control subsystem and flight control subsystem: it is responsible for coordinating the actions of the other subsystems and for collecting the data sent by them. In some cases, it delegates the maneuvering tasks to a flight controller that receives data from different sensors and then acts on the propeller subsystem.

  - Sensing and Actuation subsystem: it makes use of sensors to estimate diverse physical parameters of the environment and then actuates according to the instructions given by the on-board controllers.

  - Positioning subsystem: it is able to position the UAV outdoors (e.g., through GPS) or indoors (e.g., by using reference markers).

  - Communications subsystem: it allows for exchanging information wirelessly with remote stations.

  - Power subsystem: it provides energy and voltage regulation to the diverse electronic components of the UAV.

  - Storage subsystem: it stores the data collected by the different subsystems. For instance, it is often used for storing the video stream recorded by an on-board camera.

  - Identification subsystem: it is able to remotely identify other UAVs, objects, and obstacles. The most common identification subsystems are based on image/video processing, but wireless communications systems can also be used.

  - Deep Learning subsystem: it implements deep learning techniques to process the collected data and then determine the appropriate response from the UAV in terms of maneuvering.

- The data collected by each UAV are sent either to a remote control ground station or to a UAV pilot who uses a manual controller. The former case is related to autonomous guiding and collision-avoidance systems, and often involves the use of high-performance computers able to collect, process, and respond in real time to the information gathered from the UAV. Such high-performance computers run different data collection and processing services, algorithms that make use of deep learning techniques, and a subsystem that is responsible for sending control commands to the UAVs.

- At the top of the architecture is the remote service layer, which is essentially a cloud composed of multiple remote servers that store data and carry out the most computationally intensive data processing tasks that do not require real-time responses (e.g., certain data analysis). The cloud usually also provides some kind of front-end so that remote users can manage the DL-UAV system in a user-friendly way.
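The collect, process, and respond cycle attributed to the ground station in the list above can be sketched as follows. This is a minimal illustration under stated assumptions rather than the implementation of any reviewed system: the `Telemetry` and `Command` message types, the distance-based `predict_collision_risk` function (a stand-in for a trained DL model), and all thresholds are hypothetical.

```python
from dataclasses import dataclass


@dataclass
class Telemetry:
    uav_id: str
    obstacle_distance_m: float  # hypothetical reading reported by the UAV


@dataclass
class Command:
    uav_id: str
    action: str  # "continue" or "avoid"


def predict_collision_risk(telemetry, safe_distance_m=2.0):
    """Stand-in for a DL model: risk grows from 0 to 1 as the obstacle gets closer."""
    if telemetry.obstacle_distance_m >= safe_distance_m:
        return 0.0
    return 1.0 - max(telemetry.obstacle_distance_m, 0.0) / safe_distance_m


class GroundStation:
    def __init__(self, risk_threshold=0.5):
        self.risk_threshold = risk_threshold
        self.log = []  # data collection service: keeps every received message

    def handle(self, telemetry):
        """Collect the message, run the model, and answer with a control command."""
        self.log.append(telemetry)
        risk = predict_collision_risk(telemetry)
        action = "avoid" if risk > self.risk_threshold else "continue"
        return Command(telemetry.uav_id, action)
```

For example, `GroundStation().handle(Telemetry("uav-1", 0.5))` yields an `avoid` command, because the assumed risk for an obstacle at 0.5 m is 0.75, which exceeds the 0.5 threshold.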

4.2. Advanced UAV Architectures

- Fog Computing: It is based on the deployment of medium-performance gateways (known as fog gateways) that are placed locally (close to a UAV ground station). Single-Board Computers (SBCs), like Raspberry Pi [81], Beagle Bone [82], or Orange Pi PC [83], are commonly used to implement such fog gateways. Despite their modest hardware, fog gateways are able to provide fast responses to the UAV, which avoids the need to communicate with the cloud. It is also worth noting that fog gateways can collaborate among themselves and collect data from sensors deployed in the field, which can help to decide on the maneuver commands to be sent to the UAVs in order to detect obstacles and prevent collisions.

- Cloudlets: They are based on high-performance computers (i.e., with powerful CPUs and GPUs) that carry out computationally intensive tasks that require real-time or quasi-real-time responses [84]. Like fog gateways, cloudlets are deployed close to the ground station (they may even be part of the ground station hardware), so that the response lag is clearly lower than that of a remote Internet cloud.
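The trade-off between fog gateways, cloudlets, and a remote cloud can be made concrete with a simple response-time model: network round trip plus compute time. The sketch below is only illustrative; the `ComputeNode` type, the round-trip delays, and the throughput figures are hypothetical values chosen to show the effect, not measurements from the reviewed systems.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ComputeNode:
    name: str
    round_trip_ms: float  # assumed network delay between ground station and node
    gflops: float         # assumed sustained compute throughput


def response_time_ms(node, task_gflop):
    """Estimated response time: network round trip plus time to run the task."""
    return node.round_trip_ms + 1000.0 * task_gflop / node.gflops


def pick_node(nodes, task_gflop, deadline_ms):
    """Return the fastest node that meets the deadline, or None if none does."""
    if not nodes:
        return None
    best = min(nodes, key=lambda n: response_time_ms(n, task_gflop))
    return best if response_time_ms(best, task_gflop) <= deadline_ms else None


# Hypothetical deployment: all figures are assumptions for illustration.
NODES = [
    ComputeNode("fog gateway (SBC)", round_trip_ms=5.0, gflops=10.0),
    ComputeNode("cloudlet (GPU)", round_trip_ms=8.0, gflops=1000.0),
    ComputeNode("remote cloud", round_trip_ms=120.0, gflops=5000.0),
]
```

Under these assumed figures, a light task (0.01 GFLOP) is served fastest by the fog gateway, whereas a heavier one (5 GFLOP) is served fastest by the cloudlet; the remote cloud is penalized by its round-trip delay in both cases.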

4.3. Hardware for Collision Avoidance and Obstacle Detection Deep Learning UAV Systems

4.3.1. Platform Frame, Weight and Flight Time

4.3.2. Propeller Subsystem

4.3.3. Control Subsystem

4.3.4. Sensing Subsystem

- IMUs that embed 3-axis accelerometers, gyroscopes and magnetometers.

- High-precision pressure sensors (barometers).

- Photo and video cameras.

- 3D-camera systems (e.g., Kinect).

- Video Graphics Array (VGA)/Quarter Video Graphics Array (QVGA) cameras.

- Laser scanners (e.g., RP-LIDAR).

- Ultrasonic sensors.

4.3.5. Positioning Subsystem

4.3.6. Communications Subsystem

4.3.7. Power Subsystem

4.3.8. Storage Subsystem

4.3.9. Identification Subsystem

4.3.10. Deep Learning Subsystem

5. Main Challenges and Technical Limitations

5.1. DL Challenges

- A limiting factor of the supervised approaches is the large amount of data required to generate robust models with generalization capability in different real-world environments. In order to minimize annotation effort, self-supervised algorithms allow automating the collection and annotation processes to generate large-scale datasets, the results of which are bounded by the strategy for generating labeled data.

- The diversity of the datasets in terms of representative scenarios and conditions, the variety of sensors, or the balance between the different classes are also conditioning factors for learning processes.

- The trial-and-error nature of RL raises safety concerns, since training in real-world environments can result in crashes [48]. An alternative is the use of synthetic data or virtual environments for generating the training datasets.

- The gap between real and virtual environments limits the applicability of the simulation policies in the physical world. The development of more realistic virtual datasets is still an open issue.

- The accuracy of the spatial and temporal alignment between different sensors in a multimodal robotic system impacts data quality.
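Regarding the temporal alignment mentioned in the last item, a common first step is to pair each camera frame with the closest sample of a faster sensor and to discard pairs whose time gap is too large. The following minimal sketch assumes two sorted lists of timestamps in seconds; the function names and the 10 ms tolerance are hypothetical.

```python
from bisect import bisect_left


def nearest_index(sorted_times, t):
    """Index of the timestamp in sorted_times that is closest to t."""
    i = bisect_left(sorted_times, t)
    if i == 0:
        return 0
    if i == len(sorted_times):
        return len(sorted_times) - 1
    before, after = sorted_times[i - 1], sorted_times[i]
    return i if (after - t) < (t - before) else i - 1


def align(camera_times, imu_times, max_gap_s=0.01):
    """Pair each camera frame with its nearest IMU sample.

    Frames whose nearest IMU sample is further away than max_gap_s are
    dropped, since a badly aligned pair would degrade data quality.
    """
    if not imu_times:
        return []
    pairs = []
    for cam_idx, t in enumerate(camera_times):
        imu_idx = nearest_index(imu_times, t)
        if abs(imu_times[imu_idx] - t) <= max_gap_s:
            pairs.append((cam_idx, imu_idx))
    return pairs
```

The tolerance is a design choice: a tight value discards more frames but keeps the remaining pairs consistent, while a loose value retains more data at the cost of larger misalignment.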

5.2. Other Challenges

- Most current DL-UAV architectures rely on a remote cloud. This solution does not fulfill the requirements of IoT applications in terms of cost, coverage, availability, latency, power consumption, and scalability. Furthermore, a cloud may be compromised by cyberattacks. To confront this challenge, the fog/edge computing and blockchain architecture analyzed in Section 4.2 can help to comply with the strict requirements of IoT applications.

- Flight time is directly related to the drone weight and its maximum payload. Moreover, a trade-off among cost, payload capacity, and reliability should be achieved when choosing between single-rotor and multirotor UAVs (as of writing, quadrotors are the preferred solution for DL-UAVs).

- Considering the computational complexity of most DL techniques, the controller hardware must be much more powerful than that of traditional IoT nodes and UAV controllers, so such hardware needs to be enhanced to provide a good trade-off between performance and power consumption. In fact, some of the analyzed DL-UAVs have already been enhanced, and some of them are even able to run certain versions of Linux and embed dedicated GPUs. The improvements to be performed can affect both the hardware that controls the flight and the hardware aimed at interacting with the other UAV subsystems.

- Although some flight controllers embed specific hardware, most of the analyzed DL-UAVs share similar sensors, mainly differing in the photo and video camera features. Future DL-UAV developers should consider complementing visual identification techniques with other identification technologies (e.g., UWB and RFID) in order to improve their accuracy.

- Regarding the ways for powering a UAV, most DL-UAVs are limited by the use of heavy Li-Po or Li-ion batteries, but future developers should analyze the use of energy harvesting mechanisms to extend UAV flight autonomy.

- Robustness against cyberattacks is a key challenge. In order to ensure secure operation, challenges such as interference management, mobility management, or cooperative data transmission have to be taken into account. For instance, in [154], the authors provide a summary of the main wireless and security challenges, and introduce different AI-based solutions for addressing them. DL can be leveraged to classify various security events and alerts. For example, the authors of [155] review ML and DL methods for network analysis in intrusion detection. Nonetheless, there are few examples in the literature that deal with cybersecure DL-UAV systems. One such work is [156], where an RNN-based abnormal behavior detection scheme for UAVs is presented. Another relevant work is [157], which details a DL framework for reconstructing the missing data in remote sensing analysis. Other authors focused on studying drone detection and identification methods using DL techniques [158]. In that paper, the proposed algorithms exploit the unique acoustic fingerprints of UAVs to detect and identify them. In addition, a comparison of the performance of different neural networks (e.g., CNN, RNN, and Convolutional Recurrent Neural Network (CRNN)) tested with different audio samples is also presented. In the case of DL-UAV systems, the integrity of the classification is of paramount importance (e.g., to avoid adversarial samples [159] or inputs that deliberately result in an incorrect output classification). Adversarial samples are created at test time and do not require any alteration of the training process. The defense against the so-called adversarial machine learning [160] focuses on hardening the training phase of the DL algorithm.
Finally, it is worth pointing out that cyber-physical attacks (e.g., spoofing attacks [161] and signal jamming) and authentication issues (e.g., forging of the identities of the transmitting UAVs or sending disrupted data using their identities) remain open research topics.
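To illustrate why adversarial samples require no change to the training process, the sketch below applies the fast gradient sign method (FGSM), a well-known attack that is used here only as an example and is not attributed to the cited works. It perturbs the input of an already trained logistic classifier; the weights, the input vector, and the perturbation size are hypothetical.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def predict(weights, bias, x):
    """Probability of class 1 (e.g., 'obstacle') under a logistic model."""
    return sigmoid(sum(w * xi for w, xi in zip(weights, x)) + bias)


def sign(v):
    return (v > 0) - (v < 0)


def fgsm(weights, bias, x, y_true, epsilon):
    """Shift every feature by epsilon in the direction that increases the loss.

    For a logistic model with cross-entropy loss, the gradient of the loss
    with respect to the input is (p - y_true) * weights.
    """
    p = predict(weights, bias, x)
    grad = [(p - y_true) * w for w in weights]
    return [xi + epsilon * sign(g) for xi, g in zip(x, grad)]


# Hypothetical trained model and input; the model itself is never modified.
WEIGHTS, BIAS = [2.0, -1.0, 0.5], -0.2
x = [0.6, 0.2, 0.4]
x_adv = fgsm(WEIGHTS, BIAS, x, y_true=1, epsilon=0.3)
```

With these assumed values, the clean input is classified as class 1 (probability above 0.5), while the perturbed input falls below 0.5 even though no feature changed by more than 0.3 and the model parameters were left untouched.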

6. Conclusions

Author Contributions

Funding

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
| --- | --- |
| COTS | Commercial Off-The-Shelf |
| CNN | Convolutional Neural Network |
| CRNN | Convolutional Recurrent Neural Network |
| DL | Deep Learning |
| DL-UAV | Deep Learning Unmanned Aerial Vehicle |
| DNN | Deep Neural Network |
| DoF | Degrees of Freedom |
| IMU | Inertial Measurement Unit |
| IoT | Internet of Things |
| LIDAR | Light Detection and Ranging |
| LSTM | Long Short-Term Memory |
| ML | Machine Learning |
| RFID | Radio-Frequency IDentification |
| RL | Reinforcement Learning |
| RSSI | Received Signal Strength Indicator |
| RNN | Recurrent Neural Network |
| SLAM | Simultaneous Localization and Mapping |
| SOC | System-on-Chip |
| UAV | Unmanned Aerial Vehicle |
| UHF | Ultra High Frequency |
| UAS | Unmanned Aerial System |
| VGA | Video Graphics Array |

References

- IHS. Internet of Things (IoT) Connected Devices Installed Base Worldwide from 2015 to 2025 (In Billions). Available online: https://www.statista.com/statistics/471264/iot-number-of-connected-devices-worldwide/ (accessed on 5 September 2019).

- Pérez-Expósito, J.; Fernández-Caramés, T.M.; Fraga-Lamas, P.; Castedo, L. VineSens: An Eco-Smart Decision-Support Viticulture System. Sensors 2017, 17, 465.

- Hernández-Rojas, D.; Fernández-Caramés, T.M.; Fraga-Lamas, P.; Escudero, C. A Plug-and-Play Human-Centered Virtual TEDS Architecture for the Web of Things. Sensors 2018, 18, 2052.

- Fernández-Caramés, T.M.; Fraga-Lamas, P. A Review on Human-Centered IoT-Connected Smart Labels for the Industry 4.0. IEEE Access 2018, 6, 25939–25957.

- Garrido-Hidalgo, C.; Hortelano, D.; Roda-Sanchez, L.; Olivares, T.; Ruiz, M.C.; Lopez, V. IoT Heterogeneous Mesh Network Deployment for Human-in-the-Loop Challenges Towards a Social and Sustainable Industry 4.0. IEEE Access 2018, 6, 28417–28437.

- Blanco-Novoa, O.; Fernández-Caramés, T.M.; Fraga-Lamas, P.; Castedo, L. A Cost-Effective IoT System for Monitoring Indoor Radon Gas Concentration. Sensors 2018, 18, 2198.

- Fernández-Caramés, T.M.; Fraga-Lamas, P.; Suárez-Albela, M.; Castedo, L. A methodology for evaluating security in commercial RFID systems. In Radio Frequency Identification; Crepaldi, P.C., Pimenta, T.C., Eds.; IntechOpen: London, UK, 2017.

- Burg, A.; Chattopadhyay, A.; Lam, K. Wireless Communication and Security Issues for Cyber-Physical Systems and the Internet of Things. Proc. IEEE 2018, 106, 38–60.

- Hayat, S.; Yanmaz, E.; Muzaffar, R. Survey on Unmanned Aerial Vehicle Networks for Civil Applications: A Communications Viewpoint. IEEE Commun. Surv. Tutorials 2016, 18, 2624–2661.

- Shakhatreh, H.; Sawalmeh, A.; Al-Fuqaha, A.I.; Dou, Z.; Almaita, E.; Khalil, I.M.; Othman, N.S.; Khreishah, A.; Guizani, M. Unmanned Aerial Vehicles: A Survey on Civil Applications and Key Research Challenges. arXiv 2018, arXiv:1805.00881.

- Hassanalian, M.; Abdelkefi, A. Classifications, applications, and design challenges of drones: A review. Prog. Aerosp. Sci. 2017, 91, 99–131.

- Lu, J.; Xu, X.; Li, X.; Li, L.; Chang, C.-C.; Feng, X.; Zhang, S. Detection of Bird’s Nest in High Power Lines in the Vicinity of Remote Campus Based on Combination Features and Cascade Classifier. IEEE Access 2018, 6, 39063–39071.

- Zhou, Z.; Zhang, C.; Xu, C.; Xiong, F.; Zhang, Y.; Umer, T. Energy-Efficient Industrial Internet of UAVs for Power Line Inspection in Smart Grid. IEEE Trans. Ind. Inform. 2018, 14, 2705–2714.

- Lim, G.J.; Kim, S.; Cho, J.; Gong, Y.; Khodaei, A. Multi-UAV Pre-Positioning and Routing for Power Network Damage Assessment. IEEE Trans. Smart Grid 2018, 9, 3643–3651.

- Wang, L.; Zhang, Z. Automatic Detection of Wind Turbine Blade Surface Cracks Based on UAV-Taken Images. IEEE Trans. Ind. Electron. 2017, 64, 7293–7303.

- Peng, K.; Liu, W.; Sun, Q.; Ma, X.; Hu, M.; Wang, D.; Liu, J. Wide-Area Vehicle-Drone Cooperative Sensing: Opportunities and Approaches. IEEE Access 2018, 7, 1818–1828.

- Rossi, M.; Brunelli, D. Autonomous Gas Detection and Mapping With Unmanned Aerial Vehicles. IEEE Trans. Instrum. Meas. 2016, 65, 765–775.

- Misra, P.; Kumar, A.A.; Mohapatra, P.; Balamuralidhar, P. Aerial Drones with Location-Sensitive Ears. IEEE Commun. Mag. 2018, 56, 154–160.

- Olivares, V.; Córdova, F. Evaluation by computer simulation of the operation of a fleet of drones for transporting materials in a manufacturing plant of plastic products. In Proceedings of the 2015 CHILEAN Conference on Electrical, Electronics Engineering, Information and Communication Technologies (CHILECON), Santiago, Chile, 28–30 October 2015; pp. 847–853.

- Zhao, S.; Hu, Z.; Yin, M.; Ang, K.Z.Y.; Liu, P.; Wang, F.; Dong, X.; Lin, F.; Chen, B.M.; Lee, T.H. A Robust Real-Time Vision System for Autonomous Cargo Transfer by an Unmanned Helicopter. IEEE Trans. Ind. Electron. 2015, 62, 1210–1219.

- Kuru, K.; Ansell, D.; Khan, W.; Yetgin, H. Analysis and Optimization of Unmanned Aerial Vehicle Swarms in Logistics: An Intelligent Delivery Platform. IEEE Access 2019, 7, 15804–15831.

- Li, H.; Savkin, A.V. Wireless Sensor Network Based Navigation of Micro Flying Robots in the Industrial Internet of Things. IEEE Trans. Ind. Inform. 2018, 14, 3524–3533.

- Kendoul, F. Survey of Advances in Guidance, Navigation, and Control of Unmanned Rotorcraft Systems. J. Field Robot 2012, 29, 315–378.

- Mur-Artal, R.; Montiel, J.M.M.; Tardós, J.D. ORB-SLAM: A Versatile and Accurate Monocular SLAM System. IEEE Trans. Robot. 2015, 31, 1147–1163.

- Durrant-Whyte, H.; Bailey, T. Simultaneous localization and mapping: Part I. IEEE Robot. Autom. Mag. 2006, 13, 99–110.

- Durrant-Whyte, H.; Bailey, T. Simultaneous localization and mapping: Part II. IEEE Robot. Autom. Mag. 2006, 13, 108–117.

- Schönberger, J.L.; Frahm, J. Structure-from-Motion Revisited. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 4104–4113.

- Gageik, N.; Benz, P.; Montenegro, S. Obstacle Detection and Collision Avoidance for a UAV with Complementary Low-Cost Sensors. IEEE Access 2015, 3, 599–609.

- Krämer, M.S.; Kuhnert, K.D. Multi-Sensor Fusion for UAV Collision Avoidance. In Proceedings of the 2018 2nd International Conference on Mechatronics Systems and Control Engineering, Amsterdam, The Netherlands, 21–23 February 2018; ACM: New York, NY, USA, 2018; pp. 5–12.

- Bonin-Font, F.; Ortiz, A.; Oliver, G. Visual Navigation for Mobile Robots: A Survey. J. Intell. Robot. Syst. 2008, 53, 263.

- Lu, Y.; Xue, Z.; Xia, G.S.; Zhang, L. A survey on vision-based UAV navigation. Geo-Spat. Inf. Sci. 2018, 21, 21–32.

- Mukhtar, A.; Xia, L.; Tang, T.B. Vehicle detection techniques for collision avoidance systems: A review. IEEE Trans. Intell. Transp. Syst. 2015, 16, 2318–2338.

- Rybus, T. Obstacle avoidance in space robotics: Review of major challenges and proposed solutions. Prog. Aerosp. Sci. 2018, 101, 31–48.

- Campbell, S.; Naeem, W.; Irwin, G. A review on improving the autonomy of unmanned surface vehicles through intelligent collision avoidance manoeuvres. Annu. Rev. Control. 2012, 36, 267–283.

- Shabbir, J.; Anwer, T. A Survey of Deep Learning Techniques for Mobile Robot Applications. arXiv 2018, arXiv:1803.07608.

- Whitehead, K.; Hugenholtz, C.H. Remote sensing of the environment with small unmanned aircraft systems (UASs), part 1: A review of progress and challenges. J. Unmanned Veh. Syst. 2014, 2, 69–85.

- Lippitt, C.D.; Zhang, S. The impact of small unmanned airborne platforms on passive optical remote sensing: A conceptual perspective. J. Remote. Sens. 2018, 39, 4852–4868.

- Yao, H.; Qin, R.; Chen, X. Unmanned aerial vehicle for remote sensing applications—A review. Remote Sens. 2019, 11, 1443.

- Carrio, A.; Sampedro, C.; Rodriguez-Ramos, A.; Cervera, P.C. A Review of Deep Learning Methods and Applications for Unmanned Aerial Vehicles. J. Sens. 2017, 2017.

- Ammour, N.; Alhichri, H.; Bazi, Y.; Benjdira, B.; Alajlan, N.; Zuair, M. Deep Learning Approach for Car Detection in UAV Imagery. Remote Sens. 2017, 9, 312.

- Zhao, Y.; Ma, J.; Li, X.; Zhang, J. Saliency Detection and Deep Learning-Based Wildfire Identification in UAV Imagery. Sensors 2018, 18, 712.

- Zeggada, A.; Melgani, F.; Bazi, Y. A Deep Learning Approach to UAV Image Multilabeling. IEEE Geosci. Remote. Sens. Lett. 2017, 14, 694–698.

- LeCun, Y.; Bengio, Y.; Hinton, G. Deep learning. Nature 2015, 521, 436–444.

- Silver, D.; Huang, A.; Maddison, C.J.; Guez, A.; Sifre, L.; van den Driessche, G.; Schrittwieser, J.; Antonoglou, I.; Panneershelvam, V.; Lanctot, M.; et al. Mastering the Game of Go with Deep Neural Networks and Tree Search. Nature 2016, 529, 484–489.

- Krizhevsky, A.; Sutskever, I.; Hinton, G.E. ImageNet Classification with Deep Convolutional Neural Networks. Adv. Neural Inf. Process. Syst. 2012, 25, 1097–1105.

- Ronneberger, O.; Fischer, P.; Brox, T. U-Net: Convolutional Networks for Biomedical Image Segmentation. In International Conference on Medical Image Computing and Computer-Assisted Intervention; Springer: Cham, Switzerland, 2015; pp. 234–241.

- Deng, L.; Hinton, G.; Kingsbury, B. New types of deep neural network learning for speech recognition and related applications: An overview. In Proceedings of the 2013 IEEE International Conference on Acoustics, Speech and Signal Processing, Vancouver, BC, Canada, 26–31 May 2013.

- Kouris, A.; Bouganis, C. Learning to Fly by MySelf: A Self-Supervised CNN-Based Approach for Autonomous Navigation. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018.

- Gandhi, D.; Pinto, L.; Gupta, A. Learning to fly by crashing. IEEE/RSJ Int. Conf. Intell. Robot. Syst. (IROS) 2017, 2017, 3948–3955.

- Padhy, R.P.; Verma, S.; Ahmad, S.; Choudhury, S.K.; Sa, P.K. Deep Neural Network for Autonomous UAV Navigation in Indoor Corridor Environments. Procedia Comput. Sci. 2018, 133, 643–650.

- Michels, J.; Saxena, A.; Ng, A.Y. High Speed Obstacle Avoidance Using Monocular Vision and Reinforcement Learning. In Proceedings of the 22nd International Conference on Machine Learning, Bonn, Germany, 7–11 August 2005; pp. 593–600.

- Tai, L.; Li, S.; Liu, M. A deep-network solution towards model-less obstacle avoidance. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Daejeon, Korea, 9–14 October 2016; pp. 2759–2764.

- Singla, A.; Padakandla, S.; Bhatnagar, S. Memory-based Deep Reinforcement Learning for Obstacle Avoidance in UAV with Limited Environment Knowledge. arXiv 2018, arXiv:1811.03307.

- Xie, L.; Wang, S.; Markham, A.; Trigoni, N. Towards Monocular Vision based Obstacle Avoidance through Deep Reinforcement Learning. arXiv 2017, arXiv:1706.09829.

- Smolyanskiy, N.; Kamenev, A.; Smith, J.; Birchfield, S. Toward low-flying autonomous MAV trail navigation using deep neural networks for environmental awareness. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 4241–4247.

- Kearns, M.; Singh, S. Finite-sample Convergence Rates for Q-learning and Indirect Algorithms. In Proceedings of the 1998 conference on Advances in Neural Information Processing Systems II; MIT Press: Cambridge, MA, USA, 1999; pp. 996–1002.

- Chen, Y.F.; Liu, M.; Everett, M.; How, J.P. Decentralized non-communicating multiagent collision avoidance with deep reinforcement learning. In Proceedings of the IEEE International Conference on Robotics and Automation (ICRA), Singapore, 29 May–3 June 2017; pp. 3285–3292.

- Tai, L.; Paolo, G.; Liu, M. Virtual-to-real deep reinforcement learning: Continuous control of mobile robots for mapless navigation. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 31–36.

- Sadeghi, F.; Levine, S. CAD2RL: Real Single-Image Flight Without a Single Real Image. arXiv 2016, arXiv:1611.04201.

- Shah, S.; Kapoor, A.; Dey, D.; Lovett, C. AirSim: High-Fidelity Visual and Physical Simulation for Autonomous Vehicles. In Field and Service Robotics; Springer: Cham, Switzerland, 2018; pp. 621–635.

- Pan, S.J.; Yang, Q. A Survey on Transfer Learning. IEEE Trans. Knowl. Data Eng. 2010, 22, 1345–1359.

- Loquercio, A.; Maqueda, A.I.; del-Blanco, C.R.; Scaramuzza, D. DroNet: Learning to Fly by Driving. IEEE Robot. Autom. Lett. 2018, 3, 1088–1095.

- Tobin, J.; Fong, R.H.; Ray, A.; Schneider, J.; Zaremba, W.; Abbeel, P. Domain randomization for transferring deep neural networks from simulation to the real world. In Proceedings of the IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Vancouver, BC, Canada, 24–28 September 2017; pp. 23–30.

- Varol, G.; Romero, J.; Martin, X.; Mahmood, N.; Black, M.J.; Laptev, I. Learning from synthetic humans. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 4627–4635.

- Atapour-Abarghouei, A.; Breckon, T.P. Real-time monocular depth estimation using synthetic data with domain adaptation via image style transfer. In Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–22 June 2018; pp. 2800–2810.

- Mikolov, T.; Karafiát, M.; Burget, L.; Cernocký, J.; Khudanpur, S. Recurrent neural network based language model. In Proceedings of the INTERSPEECH 2010 11th Annual Conference of the International Speech Communication Association, Makuhari, Chiba, Japan, 26–30 September 2010; pp. 1045–1048.

- Pei, W.; Baltrušaitis, T.; Tax, D.M.J.; Morency, L. Temporal Attention-Gated Model for Robust Sequence Classification. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 820–829.

- Hochreiter, S.; Schmidhuber, J. Long Short-Term Memory. Neural Comput. 1997, 9, 1735–1780.

- Kim, D.K.; Chen, T. Deep Neural Network for Real-Time Autonomous Indoor Navigation. Available online: https://arxiv.org/abs/1511.04668 (accessed on 31 July 2019).

- Jung, S.; Hwang, S.; Shin, H.; Shim, D.H. Perception, Guidance, and Navigation for Indoor Autonomous Drone Racing Using Deep Learning. IEEE Robot. Autom. Lett. 2018, 3, 2539–2544.

- Dionisio-Ortega, S.; Rojas-Perez, L.O.; Martinez-Carranza, J.; Cruz-Vega, I. A deep learning approach towards autonomous flight in forest environments. In Proceedings of the 2018 International Conference on Electronics, Communications and Computers (CONIELECOMP), Cholula, Mexico, 21–23 February 2018; pp. 139–144.

- Chen, P.; Lee, C. UAVNet: An Efficient Obstacel Detection Model for UAV with Autonomous Flight. In Proceedings of the 2018 International Conference on Intelligent Autonomous Systems (ICoIAS), Singapore, 1–3 March 2018; pp. 217–220.

- Khan, A.; Hebert, M. Learning safe recovery trajectories with deep neural networks for unmanned aerial vehicles. In Proceedings of the 2018 IEEE Aerospace Conference, Big Sky, MT, USA, 3–10 March 2018.

- Huang, G.; Liu, Z.; Van Der Maaten, L.; Weinberger, K.Q. Densely Connected Convolutional Networks. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Honolulu, HI, USA, 21–26 July 2017; pp. 2261–2269.

- Geiger, A.; Lenz, P.; Stiller, C.; Urtasun, R. Vision meets robotics: The KITTI dataset. Int. J. Robot. Res. 2013, 32, 1231–1237.

- Giusti, A.; Guzzi, J.; Cireşan, D.C.; He, F.; Rodríguez, J.P.; Fontana, F.; Faessler, M.; Forster, C.; Schmidhuber, J.; Caro, G.D.; et al. A Machine Learning Approach to Visual Perception of Forest Trails for Mobile Robots. IEEE Robot. Autom. Lett. 2016, 1, 661–667.

- Maddern, W.; Pascoe, G.; Linegar, C.; Newman, P. 1 Year, 1000 km: The Oxford RobotCar Dataset. Int. J. Robot. Res. (IJRR) 2017, 36, 3–15.

- Ros, G.; Sellart, L.; Materzynska, J.; Vazquez, D.; Lopez, A.M. The SYNTHIA Dataset: A Large Collection of Synthetic Images for Semantic Segmentation of Urban Scenes. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), Las Vegas, NV, USA, 27–30 June 2016; pp. 3234–3243.

- Li, B.; Dai, Y.; He, M. Monocular depth estimation with hierarchical fusion of dilated CNNs and soft-weighted-sum inference. Pattern Recognit. 2018, 83, 328–339.

- Suárez-Albela, M.; Fernández-Caramés, T.; Fraga-Lamas, P.; Castedo, L. A Practical Evaluation of a High-Security Energy-Efficient Gateway for IoT Fog Computing Applications. Sensors 2017, 17, 1978.

- Raspberry Pi Official Webpage. Available online: https://www.raspberrypi.org/ (accessed on 31 July 2019).

- Beagle Bone Official Webpage. Available online: https://beagleboard.org/black (accessed on 31 July 2019).

- Orange Pi Official Webpage. Available online: http://www.orangepi.org/ (accessed on 31 July 2019).

- Fernández-Caramés, T.M.; Fraga-Lamas, P.; Suárez-Albela, M.; Vilar-Montesinos, M. A Fog Computing and Cloudlet Based Augmented Reality System for the Industry 4.0 Shipyard. Sensors 2018, 18, 1798. [Google Scholar] [CrossRef] [PubMed]

- Fernández-Caramés, T.M.; Fraga-Lamas, P. A Review on the Use of Blockchain for the Internet of Things. IEEE Access 2018, 6, 32979–33001. [Google Scholar] [CrossRef]

- Fernández-Caramés, T.M.; Froiz-Míguez, I.; Blanco-Novoa, O.; Fraga-Lamas, P. Enabling the Internet of Mobile Crowdsourcing Health Things: A Mobile Fog Computing, Blockchain and IoT Based Continuous Glucose Monitoring System for Diabetes Mellitus Research and Care. Sensors 2019, 19, 3319. [Google Scholar] [CrossRef] [PubMed]

- Huang, J.; Kong, L.; Chen, G.; Wu, M.; Liu, X.; Zeng, P. Towards Secure Industrial IoT: Blockchain System With Credit-Based Consensus Mechanism. IEEE Trans. Ind. Inf. 2019, 15, 3680–3689. [Google Scholar] [CrossRef]

- Fernández-Caramés, T.M.; Blanco-Novoa, O.; Froiz-Míguez, I.; Fraga-Lamas, P. Towards an Autonomous Industry 4.0 Warehouse: A UAV and Blockchain-Based System for Inventory and Traceability Applications in Big Data-Driven Supply Chain Management. Sensors 2019, 19, 2394. [Google Scholar] [CrossRef]

- Zyskind, G.; Nathan, O.; Pentland, A.S. Decentralizing Privacy: Using Blockchain to Protect Personal Data. In Proceedings of the 2015 IEEE Security and Privacy Workshops, San Jose, CA, USA, 21–22 May 2015; pp. 180–184. [Google Scholar]

- Christidis, K.; Devetsikiotis, M. Blockchains and Smart Contracts for the Internet of Things. IEEE Access 2016, 4, 2292–2303. [Google Scholar] [CrossRef]

- Parrot’s SDK APIs. Available online: https://developer.parrot.com (accessed on 31 July 2019).

- Broecker, B.; Tuyls, K.; Butterworth, J. Distance-Based Multi-Robot Coordination on Pocket Drones. In Proceedings of the 2018 IEEE International Conference on Robotics and Automation (ICRA), Brisbane, QLD, Australia, 21–25 May 2018; pp. 6389–6394. [Google Scholar]

- Anwar, M.A.; Raychowdhury, A. NavREn-Rl: Learning to fly in real environment via end-to-end deep reinforcement learning using monocular images. In Proceedings of the 2018 25th International Conference on Mechatronics and Machine Vision in Practice (M2VIP), Stuttgart, Germany, 20–22 November 2018. [Google Scholar]

- Lee, D.; Lee, H.; Lee, J.; Shim, D.H. Design, implementation, and flight tests of a feedback linearization controller for multirotor UAVs. Int. J. Aeronaut. Space Sci. 2017, 18, 112–121. [Google Scholar] [CrossRef]

- Junoh, S.; Aouf, N. Person classification leveraging Convolutional Neural Network for obstacle avoidance via Unmanned Aerial Vehicles. In Proceedings of the 2017 Workshop on Research, Education and Development of Unmanned Aerial Systems (RED-UAS), Linkoping, Sweden, 3–5 October 2017; pp. 168–173. [Google Scholar]

- Carrio, A.; Vemprala, S.; Ripoll, A.; Saripalli, S.; Campoy, P. Drone Detection Using Depth Maps. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1034–1037. [Google Scholar]

- Pixhawk Official Webpage. Available online: http://pixhawk.org (accessed on 31 July 2019).

- Guzmán-Rabasa, J.A.; López-Estrada, F.R.; González-Contreras, B.M.; Valencia-Palomo, G.; Chadli, M.; Pérez-Patricio, M. Actuator fault detection and isolation on a quadrotor unmanned aerial vehicle modeled as a linear parameter-varying system. Meas. Control 2019. [Google Scholar] [CrossRef]

- Guo, D.; Zhong, M.; Ji, H.; Liu, Y.; Yang, R. A hybrid feature model and deep learning based fault diagnosis for unmanned aerial vehicle sensors. Neurocomputing 2018, 319, 155–163. [Google Scholar] [CrossRef]

- Mai, V.; Kamel, M.; Krebs, M.; Schaffner, A.; Meier, D.; Paull, L.; Siegwart, R. Local Positioning System Using UWB Range Measurements for an Unmanned Blimp. IEEE Robot. Autom. Lett. 2018, 3, 2971–2978. [Google Scholar] [CrossRef]

- He, S.; Chan, S.H.G. Wi-Fi Fingerprint-Based Indoor Positioning: Recent Advances and Comparisons. IEEE Commun. Surv. Tutor. 2015, 18, 466–490. [Google Scholar] [CrossRef]

- Fraga-Lamas, P.; Fernández-Caramés, T.M.; Noceda-Davila, D.; Vilar-Montesinos, M. RSS Stabilization Techniques for a Real-Time Passive UHF RFID Pipe Monitoring System for Smart Shipyards. In Proceedings of the 2017 IEEE International Conference on RFID (IEEE RFID 2017), Phoenix, AZ, USA, 9–11 May 2017; pp. 161–166. [Google Scholar]

- Yan, J.; Zhao, H.; Luo, X.; Chen, C.; Guan, X. RSSI-Based Heading Control for Robust Long-Range Aerial Communication in UAV Networks. IEEE Internet Things J. 2019, 6, 1675–1689. [Google Scholar] [CrossRef]

- Wang, W.; Bai, P.; Zhou, Y.; Liang, X.; Wang, Y. Optimal Configuration Analysis of AOA Localization and Optimal Heading Angles Generation Method for UAV Swarms. IEEE Access 2019, 7, 70117–70129. [Google Scholar] [CrossRef]

- Okello, N.; Fletcher, F.; Musicki, D.; Ristic, B. Comparison of Recursive Algorithms for Emitter Localisation using TDOA Measurements from a Pair of UAVs. IEEE Trans. Aerosp. Electron. Syst. 2011, 47, 1723–1732. [Google Scholar] [CrossRef]

- Li, B.; Fei, Z.; Zhang, Y. UAV Communications for 5G and Beyond: Recent Advances and Future Trends. IEEE Internet Things J. 2019, 6, 2241–2263. [Google Scholar] [CrossRef]

- Zhang, L.; Zhao, H.; Hou, S.; Zhao, Z.; Xu, H.; Wu, X.; Wu, Q.; Zhang, R. A Survey on 5G Millimeter Wave Communications for UAV-Assisted Wireless Networks. IEEE Access 2019, 7, 117460–117504. [Google Scholar] [CrossRef]

- Hristov, G.; Raychev, J.; Kinaneva, D.; Zahariev, P. Emerging Methods for Early Detection of Forest Fires Using Unmanned Aerial Vehicles and Lorawan Sensor Networks. In Proceedings of the 2018 28th EAEEIE Annual Conference (EAEEIE), Reykjavik, Iceland, 26–28 September 2018. [Google Scholar]

- Nijsure, Y.A.; Kaddoum, G.; Khaddaj Mallat, N.; Gagnon, G.; Gagnon, F. Cognitive Chaotic UWB-MIMO Detect-Avoid Radar for Autonomous UAV Navigation. IEEE Trans. Intell. Transp. Syst. 2016, 17, 3121–3131. [Google Scholar] [CrossRef]

- Ali, M.Z.; Misic, J.; Misic, V.B. Extending the Operational Range of UAV Communication Network using IEEE 802.11ah. In Proceedings of the ICC 2019—2019 IEEE International Conference on Communications (ICC), Shanghai, China, 20–24 May 2019; pp. 1–6. [Google Scholar]

- Khan, M.A.; Hamila, R.; Kiranyaz, M.S.; Gabbouj, M. A Novel UAV-Aided Network Architecture Using Wi-Fi Direct. IEEE Access 2019, 7, 67305–67318. [Google Scholar] [CrossRef]

- Bluetooth 5 Official Webpage. Available online: https://www.bluetooth.com/bluetooth-resources/bluetooth-5-go-faster-go-further/ (accessed on 31 July 2019).

- Dae-Ki, C.; Chia-Wei, C.; Min-Hsieh, T.; Gerla, M. Networked medical monitoring in the battlefield. In Proceedings of the 2008 IEEE Military Communications Conference (MILCOM), San Diego, CA, USA, 16–19 November 2008; pp. 1–7. [Google Scholar]

- IQRF Official Webpage. Available online: https://www.iqrf.org/ (accessed on 31 July 2019).

- 3GPP NB-IoT Official Webpage. Available online: https://www.3gpp.org/news-events/1785-nb_iot_complete (accessed on 31 July 2019).

- LoRa-Alliance. LoRaWAN What is it. In A Technical Overview of LoRa and LoRaWAN; White Paper; The LoRa Alliance: San Ramon, CA, USA, 2015. [Google Scholar]

- SigFox Official Web Page. Available online: https://www.sigfox.com (accessed on 31 July 2019).

- Valisetty, R.; Haynes, R.; Namburu, R.; Lee, M. Machine Learning for US Army UAVs Sustainment: Assessing Effect of Sensor Frequency and Placement on Damage Information in the Ultrasound Signals. In Proceedings of the 2018 17th IEEE International Conference on Machine Learning and Applications (ICMLA), Orlando, FL, USA, 17–20 December 2018; pp. 165–172. [Google Scholar]

- Weightless Official Web Page. Available online: http://www.weightless.org (accessed on 31 July 2019).

- Wi-Fi HaLow Official Webpage. Available online: https://www.wi-fi.org/discover-wi-fi/wi-fi-halow (accessed on 31 July 2019).

- Wi-Sun Alliance Official Web Page. Available online: https://www.wi-sun.org (accessed on 31 July 2019).

- ZigBee Alliance. Available online: http://www.zigbee.org (accessed on 31 July 2019).

- Jawhar, I.; Mohamed, N.; Al-Jaroodi, J. UAV-based data communication in wireless sensor networks: Models and strategies. In Proceedings of the 2015 International Conference on Unmanned Aircraft Systems (ICUAS), Denver, CO, USA, 9–12 June 2015; pp. 687–694. [Google Scholar]

- Wu, J.; Ma, J.; Rou, Y.; Zhao, L.; Ahmad, R. An Energy-Aware Transmission Target Selection Mechanism for UAV Networking. IEEE Access 2019, 7, 67367–67379. [Google Scholar] [CrossRef]

- Say, S.; Inata, H.; Liu, J.; Shimamoto, S. Priority-Based Data Gathering Framework in UAV-Assisted Wireless Sensor Networks. IEEE Sens. J. 2016, 16, 5785–5794. [Google Scholar] [CrossRef]

- Donateo, T.; Ficarella, A.; Spedicato, L.; Arista, A.; Ferraro, M. A new approach to calculating endurance in electric flight and comparing fuel cells and batteries. Appl. Energy 2017, 187, 807–819. [Google Scholar] [CrossRef]

- Ji, B.; Li, Y.; Zhou, B.; Li, C.; Song, K.; Wen, H. Performance Analysis of UAV Relay Assisted IoT Communication Network Enhanced With Energy Harvesting. IEEE Access 2019, 7, 38738–38747. [Google Scholar] [CrossRef]

- Gong, A.; MacNeill, R.; Verstraete, D.; Palmer, J.L. Analysis of a Fuel-Cell/Battery/Supercapacitor Hybrid Propulsion System for a UAV Using a Hardware-in-the-Loop Flight Simulator. In Proceedings of the 2018 AIAA/IEEE Electric Aircraft Technologies Symposium (EATS), Cincinnati, OH, USA, 12–14 July 2018; pp. 1–17. [Google Scholar]

- Fleming, J.; Ng, W.; Ghamaty, S. Thermoelectric-based power system for UAV/MAV applications. In Proceedings of the 1st UAV Conference, Portsmouth, Virginia, 20–23 May 2002; p. 3412. [Google Scholar]

- Martinez, V.; Defaÿ, F.; Salvetat, L.; Neuhaus, K.; Bressan, M.; Alonso, C.; Boitier, V. Study of Photovoltaic Cells Implantation in a Long-Endurance Airplane Drone. In Proceedings of the 2018 7th International Conference on Renewable Energy Research and Applications (ICRERA), Paris, France, 14–17 October 2018; pp. 1299–1303. [Google Scholar]

- Khristenko, A.V.; Konovalenko, M.O.; Rovkin, M.E.; Khlusov, V.A.; Marchenko, A.V.; Sutulin, A.A.; Malyutin, N.D. Magnitude and Spectrum of Electromagnetic Wave Scattered by Small Quadcopter in X-Band. IEEE Trans. Antennas Propag. 2018, 66, 1977–1984. [Google Scholar] [CrossRef]

- Xu, J.; Zeng, Y.; Zhang, R. UAV-Enabled Wireless Power Transfer: Trajectory Design and Energy Optimization. IEEE Trans. Wirel. Commun. 2018, 17, 5092–5106. [Google Scholar] [CrossRef] [Green Version]

- Xie, L.; Xu, J.; Zhang, R. Throughput Maximization for UAV-Enabled Wireless Powered Communication Networks. IEEE Internet Things J. 2019, 6, 1690–1703. [Google Scholar] [CrossRef]

- Ure, N.K.; Chowdhary, G.; Toksoz, T.; How, J.P.; Vavrina, M.A.; Vian, J. An Automated Battery Management System to Enable Persistent Missions With Multiple Aerial Vehicles. IEEE/ASME Trans. Mech. 2015, 20, 275–286. [Google Scholar] [CrossRef]

- Amorosi, L.; Chiaraviglio, L.; Galán-Jiménez, J. Optimal Energy Management of UAV-Based Cellular Networks Powered by Solar Panels and Batteries: Formulation and Solutions. IEEE Access 2019, 7, 53698–53717. [Google Scholar] [CrossRef]

- Huang, F.; Chen, J.; Wang, H.; Ding, G.; Xue, Z.; Yang, Y.; Song, F. UAV-Assisted SWIPT in Internet of Things With Power Splitting: Trajectory Design and Power Allocation. IEEE Access 2019, 7, 68260–68270. [Google Scholar] [CrossRef]

- Belotti, M.; Božić, N.; Pujolle, G.; Secci, S. A Vademecum on Blockchain Technologies: When, Which and How. IEEE Commun. Surv. Tutor. 2019, 21, 3796–3838. [Google Scholar] [CrossRef]

- Fraga-Lamas, P.; Fernández-Caramés, T.M. A Review on Blockchain Technologies for an Advanced and Cyber-Resilient Automotive Industry. IEEE Access 2019, 7, 17578–17598. [Google Scholar] [CrossRef]

- Trump, B.D.; Florin, M.; Matthews, H.S.; Sicker, D.; Linkov, I. Governing the Use of Blockchain and Distributed Ledger Technologies: Not One-Size-Fits-All. IEEE Eng. Manag. Rev. 2018, 46, 56–62. [Google Scholar] [CrossRef]

- Fernández-Caramés, T.M.; Fraga-Lamas, P. A Review on the Application of Blockchain for the Next Generation of Cybersecure Industry 4.0 Smart Factories. IEEE Access 2019, 7, 45201–45218. [Google Scholar] [CrossRef]

- Liang, X.; Zhao, J.; Shetty, S.; Liu, J.; Li, D. Integrating blockchain for data sharing and collaboration in mobile healthcare applications. In Proceedings of the 2017 IEEE 28th Annual International Symposium on Personal, Indoor, and Mobile Radio Communications (PIMRC), Montreal, QC, Canada, 8–13 October 2017; pp. 1–5. [Google Scholar]

- Dinh, P.; Nguyen, T.M.; Sharafeddine, S.; Assi, C. Joint Location and Beamforming Design for Cooperative UAVs with Limited Storage Capacity. IEEE Trans. Commun. 2019. [Google Scholar] [CrossRef]

- Gaynor, P.; Coore, D. Towards distributed wilderness search using a reliable distributed storage device built from a swarm of miniature UAVs. In Proceedings of the 2014 International Conference on Unmanned Aircraft Systems (ICUAS), Orlando, FL, USA, 27–30 May 2014; pp. 596–601. [Google Scholar]

- Beul, M.; Droeschel, D.; Nieuwenhuisen, M.; Quenzel, J.; Houben, S.; Behnke, S. Fast Autonomous Flight in Warehouses for Inventory Applications. IEEE Robot. Autom. Lett. 2018, 3, 3121–3128. [Google Scholar] [CrossRef] [Green Version]

- Zhang, J.; Wang, X.; Yu, Z.; Lyu, Y.; Mao, S.; Periaswamy, S.C.; Patton, J.; Wang, X. Robust RFID Based 6-DoF Localization for Unmanned Aerial Vehicles. IEEE Access 2019, 7, 77348–77361. [Google Scholar] [CrossRef]

- Hardis Group, EyeSee Official Webpage. Available online: http://www.eyesee-drone.com (accessed on 31 March 2019).

- Geodis and Delta Drone Official Communication. Available online: www.goo.gl/gzeYV7 (accessed on 31 March 2019).

- DroneScan Official Webpage. Available online: www.dronescan.co (accessed on 31 March 2019).

- Cho, H.; Kim, D.; Park, J.; Roh, K.; Hwang, W. 2D Barcode Detection using Images for Drone-assisted Inventory Management. In Proceedings of the 15th International Conference on Ubiquitous Robots (UR), Honolulu, HI, USA, 26–30 June 2018. [Google Scholar]

- Macoir, N.; Bauwens, J.; Jooris, B.; Van Herbruggen, B.; Rossey, J.; Hoebeke, J.; De Poorter, E. UWB Localization with Battery-Powered Wireless Backbone for Drone-Based Inventory Management. Sensors 2019, 19, 467. [Google Scholar] [CrossRef]

- Bae, S.M.; Han, K.H.; Cha, C.N.; Lee, H.Y. Development of Inventory Checking System Based on UAV and RFID in Open Storage Yard. In Proceedings of the International Conference on Information Science and Security (ICISS), Pattaya, Thailand, 19–22 December 2016. [Google Scholar]

- Ong, J.H.; Sanchez, A.; Williams, J. Multi-UAV System for Inventory Automation. In Proceedings of the 1st Annual RFID Eurasia, Istanbul, Turkey, 5–6 September 2007. [Google Scholar]

- Harik, E.H.C.; Guérin, F.; Guinand, F.; Brethé, J.; Pelvillain, H. Towards An Autonomous Warehouse Inventory Scheme. In Proceedings of the IEEE Symposium Series on Computational Intelligence (SSCI), Athens, Greece, 6–9 December 2016. [Google Scholar]

- Challita, U.; Ferdowsi, A.; Chen, M.; Saad, W. Machine Learning for Wireless Connectivity and Security of Cellular-Connected UAVs. IEEE Wirel. Commun. 2019, 26, 28–35. [Google Scholar] [CrossRef] [Green Version]

- Xin, Y.; Kong, L.; Liu, Z.; Chen, Y.; Li, Y.; Zhu, H.; Gao, M.; Hou, H.; Wang, C. Machine Learning and Deep Learning Methods for Cybersecurity. IEEE Access 2018, 6, 35365–35381. [Google Scholar] [CrossRef]

- Xiao, K.; Zhao, J.; He, Y.; Li, C.; Cheng, W. Abnormal Behavior Detection Scheme of UAV Using Recurrent Neural Networks. IEEE Access 2019, 7, 110293–110305. [Google Scholar] [CrossRef]

- Das, M.; Ghosh, S.K. A deep-learning-based forecasting ensemble to predict missing data for remote sensing analysis. IEEE J. Sel. Top. Appl. Earth Observ. Remote Sens. 2017, 10, 5228–5236. [Google Scholar] [CrossRef]

- Al-Emadi, S.; Al-Ali, A.; Mohammad, A.; Al-Ali, A. Audio Based Drone Detection and Identification using Deep Learning. In Proceedings of the 2019 15th International Wireless Communications & Mobile Computing Conference (IWCMC), Tangier, Morocco, 24–28 June 2019; pp. 459–464. [Google Scholar]

- Ball, J.E.; Anderson, D.T.; Chan, C.S.C. Comprehensive survey of deep learning in remote sensing: theories, tools, and challenges for the community. J. Appl. Remote. Sens. 2017, 11, 042609. [Google Scholar] [CrossRef]

- Papernot, N.; McDaniel, P.; Jha, S.; Fredrikson, M.; Celik, Z.B.; Swami, A. The limitations of deep learning in adversarial settings. In Proceedings of the 2016 IEEE European Symposium on Security and Privacy (EuroS&P), Saarbrucken, Germany, 21–24 March 2016; pp. 372–387. [Google Scholar]

- Davidson, D.; Wu, H.; Jellinek, R.; Singh, V.; Ristenpart, T. Controlling UAVs with sensor input spoofing attacks. In Proceedings of the 10th USENIX Workshop on Offensive Technologies (WOOT 16), Austin, TX, USA, 8–9 August 2016. [Google Scholar]

| Reference | Architecture | Goal and Details | Scenario |
|---|---|---|---|
| [55] | CNN | Two deep networks: one for detection of the rail center and another for obstacle detection. | Outdoors |
| [70] | CNN | The network detects the gate center. Then, an external guidance algorithm is applied. | Indoors |
| [69] | CNN | The network returns the next flight command. The commands are learned as a classification. The dataset contains several sample flights performed by a pilot. | Indoors |
| [49] | CNN | The network returns the next flight command. The commands are learned as a classification. Self-supervised data for training. | Indoors |
| [50] | CNN | The network returns the next flight command. The commands are learned as a classification. The dataset contains several sample flights annotated manually. | Indoors |
| [62] | CNN | The network returns the next steering angle and the probability of collision. The steering angle is learned by a regression. | Outdoors |
| [59] | CNN + RL | The CNN returns a map that represents the action space for the RL method. The method is trained on a virtual environment. | Indoors |
| [53] | cGAN + LSTM + RL | The first cGAN estimates a depth map from the RGB image. Then, these maps feed an RL with LSTM to return the flight command. | Indoors |
| [71] | CNN | The network returns the distance to tree obstacles. The distance is learned as three classes. | Outdoors |
| [72] | CNN | Object detection. | Outdoors |
| [73] | CNN | The network computes feature extraction for learning safe trajectories. | Outdoors |
| [48] | Two stream CNN | The network makes use of two consecutive RGB images and returns the distance to obstacles in three directions. | Indoors |
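Several of the approaches summarized above learn the next flight command as a classification problem. The following Python sketch illustrates how the raw outputs of such a classifier could be turned into a command. It is only an illustration under stated assumptions: the command names in `COMMANDS`, the confidence threshold, and the `hover` fallback are hypothetical and are not taken from any of the reviewed works.

```python
import math

# Hypothetical discrete command set; each reviewed work defines its own actions.
COMMANDS = ("turn_left", "go_forward", "turn_right")


def softmax(logits):
    """Convert raw network outputs into probabilities (numerically stable)."""
    peak = max(logits)
    exps = [math.exp(value - peak) for value in logits]
    total = sum(exps)
    return [value / total for value in exps]


def select_command(logits, min_confidence=0.5, fallback="hover"):
    """Return the most probable command and its probability.

    When the best class is below min_confidence (an assumed safety
    threshold), the fallback command is returned instead.
    """
    probs = softmax(logits)
    best = max(range(len(probs)), key=probs.__getitem__)
    if probs[best] < min_confidence:
        return fallback, probs[best]
    return COMMANDS[best], probs[best]


if __name__ == "__main__":
    # One logit per entry in COMMANDS, as a classifier head would produce.
    print(select_command([0.1, 2.5, 0.3]))
    print(select_command([1.0, 1.0, 1.0]))
```

Falling back to a conservative action when the classifier is uncertain is one possible design choice; a regression output such as the steering angle in [62] would need a different mapping.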

| Reference | UAV Platform | Weight | Max. Payload | Flight Time | Control Subsystem | Sensors | Comm. Interfaces | Positioning Subsystem | Power Subsystem | Storage Subsystem | Propeller Subsystem | Identification Subsystem | DL Subsystem Technique | Onboard/External DL Processing | Training Hardware |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| [92] | Crazyflie (assumed version: 2.1) | 27 g | 15 g | 7 min | STM32F405 (Cortex-M4, 168 MHz, 192 KB SRAM, 1 MB flash) | 3-axis accelerometer/gyroscope (BMI088), high-precision pressure sensor (BMP388), external optical tracking system | BLE | Ultra-Wide Band distance sensor | LiPo Batteries | 8 KB EEPROM, 1 MB flash | Quadrotor Micro-Aerial Vehicle (MAV) | UWB | Recurrent Neural Network, Deep Q-Learning Network | N/A | - |
| [93] | Parrot AR.Drone 2.0 | 420 g (with hull) | ≈500 g | 12 min (up to 36 min with Power Edition) | Internal: ARM Cortex A8@1 GHz (with a Linux 2.6.32 OS), 1 GB of RAM, video DSP@800 MHz (TMS320DMC64x) | 720p@30 fps video camera, 3-axis gyroscope, 3-axis accelerometer, 3-axis magnetometer, pressure sensor, vertical QVGA camera @ 60 fps for measuring flight speed | Wi-Fi (IEEE 802.11 b/g/n) | Ultrasonic altimeter | LiPo Batteries | Up to 4 GB of flash | Quadrotor | Visual identification | Deep Reinforcement Learning | External (Workstation/cloud with i7 cores and a GTX1080 GPU) | - |
| [70] | Self-assembled | - | - | - | NVIDIA Jetson TX2, 8 GB of RAM, operating system is based on Ubuntu 16.04 and works in a ROS-Kinetic environment, Flight Control Computer: in-house board ([94]) | PX4Flow for ground speed measurement, PointGrey Firefly VGA camera@30 fps | Wi-Fi (assumed) | ZED stereo camera for odometry measurement, Terabee TeraRanger-One one-dimensional LIDAR for altitude measurement | - | 32 GB of eMMC (embedded Multi Media Card) | Quadrotor | Visual identification | Deep Convolutional Neural Network | Onboard (in real time) | Intel i7-6700 CPU 3.40 GHz, 16 GB RAM, NVIDIA GTX 1080 Ti |
| [95] | Parrot AR.Drone 2.0 Elite Edition | 420 g (with hull) | ≈500 g | 12 min | Internal: ARM Cortex A8@1 GHz (with a Linux 2.6.32 OS), 1 GB of RAM, video DSP@800 MHz (TMS320DMC64x) | 720p@30 fps video camera, 3-axis gyroscope, 3-axis accelerometer, 3-axis magnetometer, pressure sensor, vertical QVGA camera @ 60 fps for measuring flight speed | Wi-Fi (IEEE 802.11 b/g/n) | Ultrasonic altimeter | LiPo Batteries | - | Quadrotor | Visual identification | Deep Convolutional Neural Network | The UAV camera was only used for generating a dataset | CPU not specified |
| [62] | Parrot Bebop 2.0 | 500 g | - | 25 min | Dual-core processor with quad-core GPU | 1080p video camera, pressure sensor, 3-axis gyroscope, 3-axis accelerometer, 3-axis magnetometer | Wi-Fi (IEEE 802.11 b/g/n/ac) | GPS/GLONASS, height ultrasonic sensor | Li-ion Batteries | 8 GB Flash | Quadrotor | Visual identification | Deep Convolutional Neural Network | External (the training hardware receives images at 30 Hz from the drone through Wi-Fi) | Intel Core i7 2.6 GHz CPU |
| [93] | Parrot AR.Drone | 420 g (with hull) | - | 12 min | ARM9@468 MHz, 128 MB of RAM, Linux OS | VGA camera, vertical video camera @ 60 fps, 3-axis acceleration sensor, 2-axis gyroscope, 1-axis Yaw precision gyroscope | Wi-Fi (IEEE 802.11 b/g) | Ultrasonic altimeter | LiPo Batteries | - | Quadrotor | Visual identification | Deep Convolutional Neural Network | External (Jetson TX development board) | Desktop computer with an NVIDIA GTX 1080Ti |
| [96] | DJI Matrice 100 | ≈2400 g | ≈1200 g | 19–40 min | Not indicated | - | - | - | LiPo Batteries | - | Quadrotor | Visual identification | Deep Convolutional Neural Network | External (Jetson TX development board) | Desktop computer with an NVIDIA GTX 1080Ti |
| [71] | Parrot Bebop 2.0 | 500 g | - | 25 min | Dual-core processor with quad-core GPU | 1080p video camera, pressure sensor, 3-axis gyroscope, 3-axis accelerometer, 3-axis magnetometer | Wi-Fi (IEEE 802.11 b/g/n/ac) | GPS/GLONASS, height ultrasonic sensor | Li-ion Batteries | 8 GB Flash | Quadrotor | Visual identification | Deep Convolutional Neural Network (AlexNet) | External | Computer with an NVIDIA GeForce GTX 970M GPU |
| [72] | Self-assembled | - | - | - | Pixhawk as flight controller, NVIDIA TX2 for the neural network | - | - | - | - | - | - | - | Depthwise Convolutional Neural Network | Onboard (tested also in external hardware) | NVIDIA GTX 1080 and NVIDIA TX2 |
| [73] | Modified 3DR ArduCopter | - | - | - | Quad-core ARM | Microstrain 3DM-GX3-25 IMU, PlayStation Eye camera facing downward for real-time pose estimation and one high-dynamic range PointGrey Chameleon camera for monocular navigation | - | - | - | - | Quadrotor | Visual identification | Deep Convolutional Neural Network | External | - |
| [69] | Parrot Bebop | 390 g (with battery) | - | ≈10 min | Parrot P7 Dual-Core | 1080p video camera, accelerometer, barometer, vertical camera, gyroscope, magnetometer | Wi-Fi (IEEE 802.11 b/g/n/ac) | GPS, ultrasonic altimeter | Li-ion Batteries | 8 GB Flash | Quadrotor | Visual identification | Deep Convolutional Neural Network | External (in the training hardware) | NVIDIA GeForce GTX 970M, Intel Core i5, 16 GB memory |
| [59] | Parrot Bebop | 390 g (with battery) | - | ≈10 min | Parrot P7 Dual-Core | 1080p video camera, accelerometer, barometer, vertical camera, gyroscope, magnetometer | Wi-Fi (IEEE 802.11 b/g/n/ac) | GPS, ultrasonic altimeter | Li-ion Batteries | 8 GB Flash | Quadrotor | Visual identification | Deep Convolutional Neural Network | - | - |
| [59] | Parrot Bebop 2.0 | 500 g | - | 25 min | Dual-core processor with quad-core GPU | 1080p video camera, pressure sensor, 3-axis gyroscope, 3-axis accelerometer, 3-axis magnetometer | Wi-Fi (IEEE 802.11 b/g/n/ac) | GPS/GLONASS, height ultrasonic sensor | Li-ion Batteries | 8 GB Flash | Quadrotor | Visual identification | Deep Convolutional Neural Network (AlexNet) | - | - |

| Technology | Frequency Band | Maximum Range | Data Rate | Power Consumption | Potential UAV-Based Applications |
|---|---|---|---|---|---|
| Bluetooth 5 [112] | 2.4 GHz | <250 m | up to 2 Mbit/s | Low power and rechargeable (days to weeks) | Beacons, medical monitoring in battlefield [113] |
| IQRF [114] | 868 MHz | hundreds of meters | 100 kbit/s | Low power and long range | Internet of Things and M2M applications |
| Narrowband IoT (NB-IoT) [115] | LTE in-band, guard-band | <35 km | <250 kbit/s | Low power and wide area | IoT applications |
| LoRa, LoRaWAN [116] | 2.4 GHz | <15 km | 0.25–50 kbit/s | Long battery life and range | Smart cities, M2M applications, forest fire detection [108] |
| SigFox [117] | 868–902 MHz | 50 km | 100 kbit/s | Global cellular network | IoT and M2M applications |
| Ultrasounds | >20 kHz (2–10 MHz) | <10 m | 250 kbit/s | Based on sound wave propagation | Asset positioning and location [118] |
| UWB/IEEE 802.15.3a | 3.1 to 10.6 GHz | <10 m | >110 Mbit/s | Low power, rechargeable (hours to days) | Fine location, short-distance streaming [109] |
| Weightless-P [119] | License-exempt sub-GHz | 15 km | 100 kbit/s | Low power | IoT applications |
| Wi-Fi (IEEE 802.11b/g/n/ac) | 2.4–5 GHz | <150 m | up to 433 Mbit/s (one stream) | High power, rechargeable (hours) | Mobile, business and home applications |
| Wi-Fi HaLow/IEEE 802.11ah [120] | 868–915 MHz | <1 km | 100 kbit/s per channel | Low power | IoT and long-range applications [110] |
| Wi-Sun/IEEE 802.15.4g [121] | <2.4 GHz | 1000 m | 50 kbit/s–1 Mbit/s | - | Field and home area networking, smart grid and metering |
| ZigBee [122] | 868–915 MHz, 2.4 GHz | <100 m | 20–250 kbit/s | Very low power (batteries last months to years), up to 65,536 nodes | Industrial applications [103] |
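The range and data-rate figures in the table above can be used as a first filter when choosing a communications interface for a UAV link. The Python sketch below encodes a subset of the rows and returns the technologies whose listed maximum range and data rate cover a given requirement. The `Link` class and `candidates` function are hypothetical helpers introduced only for illustration, and the table's upper bounds (e.g., "<250 m") are treated as approximate limits; real deployments would also need to account for power consumption, interference, and regulation.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Link:
    """One row of the table, reduced to its range and data-rate upper bounds."""
    name: str
    max_range_m: float    # maximum range listed in the table, in meters
    max_rate_kbps: float  # maximum data rate listed in the table, in kbit/s


# Subset of the table; the values are the listed upper bounds.
LINKS = (
    Link("Bluetooth 5", 250, 2_000),
    Link("NB-IoT", 35_000, 250),
    Link("LoRa/LoRaWAN", 15_000, 50),
    Link("Wi-Fi (IEEE 802.11b/g/n/ac)", 150, 433_000),
    Link("ZigBee", 100, 250),
)


def candidates(distance_m, rate_kbps):
    """Return the names of the links whose listed limits cover the requirement."""
    return [
        link.name
        for link in LINKS
        if link.max_range_m >= distance_m and link.max_rate_kbps >= rate_kbps
    ]


if __name__ == "__main__":
    # Short link with a moderate data rate (e.g., a compressed image stream).
    print(candidates(distance_m=120, rate_kbps=1_000))
    # Long link with a low data rate (e.g., periodic telemetry).
    print(candidates(distance_m=10_000, rate_kbps=10))
```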

| Type of Solution | UAV Characteristics | Identification Technology | Architecture | Application | Additional Features |
|---|---|---|---|---|---|
| Academic [88] | Indoor/outdoor hexacopter designed from scratch as a trade-off between cost, modularity, payload capacity, and robustness. | RFID | Modular and scalable architecture using Wi-Fi infrastructure. | Inventory and traceability applications | Performance experiments, ability to run decentralized applications. |
| Academic [145] | Parrot AR.Drone 2.0 UAV with three attached RFID tags | UHF RFID | RFID tracker and pose estimator, precise 6-DoF poses for the UAV in a 3D space with a singular value decomposition method | Localization | Experimental results demonstrate precise poses with only 0.04 m error in position and 2° error in orientation |
| Commercial, Hardis Group [146] | Autonomous quadcopter with a high-performance scanning system and an HD camera. Battery duration around 20 min (50 min to charge it). | Barcodes | Indoor localization technology. Automatic flight area and plan, 360° anti-collision system. | Inventory | Automatic acquisition of photo data. It includes cloud applications to manage mapping, data processing and reporting. Compatible with all WMS and ERPs and managed by a tablet app. |
| Commercial, Geodis and Delta Drone [147] | Autonomous quadcopter equipped with four HD cameras | Barcodes | Autonomous indoor geolocation technology; it operates when the site is closed. | Plug-and-play solution for all types of Warehouse Management Systems (WMS) | Real-time data reporting (e.g., the processing of data, and its restitution in the warehouse’s information system). Reading rates close to 100%. |
| Commercial, DroneScan [148] | Camera and a mounted display | Barcodes | DroneScan base station communicates via a dedicated RF frequency with a range of over 100 m. Its software uploads scanned data and position to the Azure IoT cloud and to the customer systems. The imported data are used to re-build a virtual map of the warehouse so that the location of the drone can be determined. | Inventory | Live feedback both on a Windows touch screen and from audible cues as the drone scans and records customizable data. 50 times faster than manual capturing. |
| Academic [144] | Autonomous Micro Aerial Vehicles (MAVs), RFID reader, and two high-resolution cameras | RFID, multimodal tag detection. | Fast fully autonomous navigation and control, including avoidance of static and dynamic obstacles in indoor and outdoor environments. Robust self-localization based on an onboard LIDAR at high velocities (up to 7.8 m/s) | Inventory | - |
| Academic [149] | IR-based camera | QR | Computer vision techniques (region candidate detection, feature extraction, and SVM classification) for barcode detection and recognition. | Inventory management with improved path planning and reduced power consumption | Experimental results on 2D barcode images demonstrate a precision of 98.08% and a recall of 98.27% within a fused feature ROC curve. |
| Academic [150] | - | QR | It combines sub-GHz wireless technology for IoT-standardized long-range wireless communication backbone and UWB for localization. Plug-and-play capabilities. | Autonomous navigation in a warehouse mock-up, tracking of runners in sport halls. | Theoretical evaluation in terms of update rate, energy consumption, maximum communication range, localization accuracy and scalability. Open source code of the UWB hardware and MAC protocol software available online. |
| Academic [151] | DJI Phantom 2 Vision (weight 1242 g, maximum speed 15 m/s and up to 700 m) | RFID | A drone carrying a Windows CE 5.0 portable PDA (AT-880) that acts as a UHF RFID reader moves around an open storage yard | Inventory | No performance experiments |
| Academic [152] | DraganFly radio-controlled helicopters (82 × 82 cm) with an average flight time of 12 min and RFID readers attached. | RFID (EPC) | A three-dimensional graphical simulator framework designed using Microsoft XNA | Inventory | Coordinated distribution of the UAVs |
| Academic [153] | UAVs and UGVs with LIDARs | Barcodes, AR markers | Cooperative work using vision techniques. The UAV acts as a mobile scanner, whereas the UGV acts as a carrying platform and as an AR ground reference for the indoor flight. | Inventory | - |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Fraga-Lamas, P.; Ramos, L.; Mondéjar-Guerra, V.; Fernández-Caramés, T.M. A Review on IoT Deep Learning UAV Systems for Autonomous Obstacle Detection and Collision Avoidance. Remote Sens. 2019, 11, 2144. https://doi.org/10.3390/rs11182144
