1. Introduction

Change detection has a wide range of applications such as fire damage assessment, deforestation monitoring, urban change detection, etc. It is challenging to carry out change detection for several reasons. First, there are changes due to illumination, seasonal variations, view angles, etc. Second, there are also mis-alignment errors due to registration. Third, changes are also related to what one is looking for. For example, in vegetation monitoring, one needs to focus on changes due to vegetation growth; whereas in urban change detection, one will focus on man-made changes and ignore vegetation changes. In the past decades, there are many papers discussing change detection, some of which include deep learning-based architectures [

1,

2,

3]. For survey papers about change detection, one can see [

4,

5].

Change detection can be done using electro-optical/infra-red (EO/IR) [

6], multispectral [

7,

8,

9], radar [

10,

11], and hyperspectral imagers [

12,

13,

14]. Recently, new papers utilizing multimodal imagers also emerged [

15,

16,

17,

18,

19]. Here, multimodal sensors mean that the two images at two different times may have different characteristics such as visible vs. radar, visible vs. infrared, etc.

In some applications such as flooding and fire damage assessment, it is difficult to have the same type of images at two different times. For example, optical images are affected by the presence of clouds, and therefore cannot be used on cloudy and rainy dates. Instead, one may only have radar image at an earlier time and an optical image at a later time, or vice versa. In some cases, such as fire events, smoke may prohibit optical sensors from collecting any meaningful data, in which case only radar or infrared images may be available. Due to different sensor characteristics, it will be impossible to directly perform image differencing to obtain the change map. Another practical concern is that some images such as radar or infrared may have only one band. The limited number of bands may seriously affect the change detection performance.

In recent research papers [

20,

21,

22], it was discovered that the use of synthetic bands can help object detection when the original data have only a few bands. In particular, the Extended Multi-Attribute Profile (EMAP) has shown some promising potential for target detection applications [

20,

21,

22]. The EMAP technique provides an array of images resulting from filtering the input image with a sequence of morphological attribute filters on different threshold levels. These images are stacked together and form the EMAP-synthetic bands.

Since some practical applications may only have single band images such as synthetic aperture radar (SAR) and infrared, it will be important to explore the potential of using EMAP to generate some synthetic bands and investigate its impact for change detection enhancement.

In this paper, we focus on performance evaluations of several change detection algorithms using multimodal image pairs with and without EMAPs. These algorithms are unsupervised pixel-based algorithms with the exception of one, which is semi-supervised. Deep learning-based change detection algorithms are not included in this paper and they are beyond the scope of this paper. Among the investigated change detection algorithms, one of them is recently developed by us. It is an unsupervised pixel-based change detection algorithm and it utilizes pixel pair statistics in the pre-event and post-event images, and uses the distance information to infer changes between images. We also enhanced a recent semi-supervised change detection algorithm in [

18] by adopting a weighted fusion to improve its consistency. We present experimental results using five benchmark data sets and ten change detection algorithms. The performance of algorithms with and without EMAPs have been thoroughly investigated. Receiver operating characteristics (ROC) curves, area under the curves (AUC), and visual inspections of the change detections score images were used in the comparisons. It was observed that several algorithms can consistently benefit from the use of EMAP for change detection.

Our paper is organized as follows.

Section 2 summarizes the applied change detection algorithms.

Section 3 briefly introduces EMAP and the parameters used with EMAP.

Section 4 includes comparative studies using heterogeneous image pairs.

Section 5 includes some observations on performance and computational speeds of the applied change detection algorithms. Finally, remarks and future directions are mentioned in

Section 6.

2. Heterogeneous Change Detection Approaches

In this section, we first introduce the Pixel Pair (PP) unsupervised change detection algorithm, which was recently developed by us [

11]. We then briefly review several other algorithms used in this paper, including the Structural Similarity (SSIM) based algorithm, Image Ratioing (IR), Chronochrome (CC), Covariance Equalization (CE), Anomalous Change Detection (ACD), Multi-Dimension Scaling (MDS), Homogeneous Pixel Transformation (HPT), and Markov Model for Multimodal Change Detection (M3CD). The above list is definitely not exhaustive.

2.1. Pixel Pair (PP) Algorithm

The key idea in the Pixel Pair (PP) algorithm is to compute differences between pixels in each image separately. The difference scores are then compared between images in the pair to generate the change map. Most importantly, our approach does not require the image pair to come from the same imager. The idea assumes that the mapping between the pixel values of the images in the image pair are monotonic.

2.1.1. Algorithm for Single Band Case

Suppose

I(

T1) and

I(

T2) are

M ×

N size grayscale images, which are looked at for changes. Suppose

is the vector containing the pixel value differences from pixel (

s), where

s = 1, ...,

MN, to all other pixels,

ti where

i = 1, ...,

MN, in

I(

T1). That is,

where

is the pixel value at location

ti of

I(

T1).

Suppose is the vector containing the pixel value differences from pixel (s) to all other pixels, (ti), where ti = 1, ..., MN, in I(T2). Suppose is the maximum value in and is the minimum value in . Similarly, suppose is the maximum value in and is the minimum value in .

The normalized difference vector for pixel (

s) in

I(

T1),

, is found as:

where ./ denotes element-wise division.

Similarly, the normalized difference vector for pixel (s) in

I(

T2) is found as:

The change map contribution from pixel (

s) is then computed as:

It is hypothesized that the pixel locations in

which yield high values are linked to changes. The final change map consists of the sum of the contributions from all the pixels in the image,

s = 1, ...,

MN. That is, the final change map,

Dfinal, is found by summing the contributions from all pixels,

s = 1, ...,

MN where:

The estimated change map plots related to PP in this paper correspond to Dfinal values.

2.1.2. Algorithm for the Multi-Band Case

In the multi-band case, each pixel contains a vector of values. That is, each pixel at location

ti is a vector denoted as

. Now, we have two ways to compute

. One is to use the Euclidean norm as shown in (6):

where

denotes the Euclidean distance between two vectors.

Another way is to compute the angle between the vectors. We can use similarity angle mapper (SAM) between two vectors

and

, which is defined as:

where

and

denotes the Euclidean norm of a vector.

The rest of the steps will be similar as the single band case.

In the past, we have applied the PP algorithm to some change detection applications [

6,

10,

11] and observed reasonable performance.

2.2. Structural Similarity (SSIM)

There are a number of image quality metrics such as mean-squared error (MSE) and peak signal-to-noise ratio (PSNR). The structural similarity index measure (SSIM) is one of them and has been a well-known metric for image quality assessment. This image quality metric [

23] reflects the structural similarity between two images. The SSIM index is computed on various blocks of an image. The measure between two blocks

x and

y from two images can be defined as:

where

μx and

μy are the means of blocks

x and

y, respectively;

and

, are the variances of blocks

x and

y, respectively;

is the covariance of blocks

x and

y; and

c1 and

c2 are small values (0.01, for instance) to avoid numerical instability. The ideal value of SSIM is 1 for perfect matching.

The SSIM index and its variant Advanced SSIM (ASSIM) were used for change detection in [

24]. Here, we use a similar adaption of the SSIM for change detection. The key idea is to use the SSIM to compare two image patches and the SSIM score will indicate the similarity between two patches. The signal flow is shown in

Figure 1. It is self-explanatory. The last step is a complement of the SSIM scores from all patches. This is simply because the SSIM measures the similarity between two patches and higher means high similarity. Taking the complement of the SSIM scores will yield the normal change map where high values mean more changes. The patch size used with SSIM is a design parameter. A large patch size tends to give blurry change maps and vice versa. The SSIM may be more suitable for change detection using mono-modal images in comparison to multimodal image pairs because the image characteristics are similar in mono-modal image pairs. In any event, we have included SSIM in our change detection studies in this paper.

2.3. Image Rationing (IR)

IR has been used for anomaly detection and change detection [

13] before. It has been used for change detection with SAR imagery [

25] as well. The Normalized Difference Vegetation Index (NDVI) and the Normalized Difference Soil Index (NDSI) and other indices have been developed for vegetation detection and soil detection [

7], etc. The calculation of IR is very simple and efficient, which is simply the ratio between two corresponding pixels in the pre- and post-event images.

2.4. Markov Model for Multimodal Change Detection (M3CD)

This is an unsupervised statistical approach for multimodal change detection [

26]. A pixel pairwise modeling is utilized and its distribution mixture is estimated. After this, the maximum a posteriori (MAP) solution of the change detection map is computed with a stochastic optimization process [

26].

2.5. Multi-Dimension Scaling (MDS)

This method aims to create a new mapping such that in this new mapping, two pixels that are distant from each other but with the same local texture have the same grayscale intensity [

27]. Histograms are created at each pixel location in each direction. The resulting gradients are stacked to form an 80-element textural feature vector for each pixel. There are two variants. The direct approach (D-MDS) uses FastMap [

27] to detect change using the pre-event and post-event images one band at a time. The change maps from all of the 80 bands are then summed to yield the final change map. The single band approach (T-MDS) developed by us is a variant of D-MDS. T-MDS uses a single band image made up from the magnitude of each pixel location of the textural feature vectors and applies FastMap to detect the changes.

2.6. Covariance Equalization (CE)

Suppose

I(

T1) is the reference (R) and

I(

T2) is the test image (T). The algorithm is as follows [

28]:

Compute the mean and covariance of R and T as , , ,

Do eigen-decomposition (or SVD).

The residuals between PR and PT will reflect changes.

2.7. Chronochrome (CC)

Suppose

I(

T1) is the reference (R) and a later image

I(

T2) the test image (T), the algorithm is as follows [

29]:

Compute the mean and covariance of R and T as , , ,

Compute cross-covariance between R and T as

Normally, there is an additional step to compute the change detection results between PR and PT. One can use simple differencing or Mahalanobis distance to generate the change maps.

2.8. Anomalous Change Detection (ACD)

ACD is based on an anomalous change detection framework that is applied to the Gaussian model [

30]. Suppose

x and

y are mean subtracted pixel vectors in two images (R and T) for the same pixel location. We denote the covariance of R and T as

and

, and the cross covariance between R and T as

. The change value at pixel location (where

x and

y are) is then computed using:

The change map is computed by applying (9) for all pixels in R and T. In (9), R corresponds to the reference image, T corresponds to the test image and Q is computed as:

Different from Chronochrome (CC) and Covariance Equalization (CE) techniques, in ACD, the lines that separate normal from abnormal ones are hyperbolic.

2.9. Improved HPT

In a recent work [

18], the authors developed a method known as Homogeneous Pixel Transformation (HPT), which focuses on heterogeneous change detection. In summary, HPT transforms one image type from its original feature space to another space in pixel-level. This way, both the pre-event and post-event images can be represented in the same space and change detection can be applied. HPT consists of forward transformation and backward transformation. In forward transformation, a set of unchanged pixels that are identified in advance are used to estimate the mapping of the pre-event image pixels to the post-event image pixel space. For mapping, the unchanged pixels are used with the

k-nearest neighbors (

k-NN) method and a weighted sum fusion is incorporated to the identified nearest pixels among the unchanged pixels. After the pre-event image pixels are transformed to the post-event image space, the difference values between the transformed pre-event image and the post-event image is found. The same process is then repeated backwards which forms the backward transformation which associates the post-event image with the first feature space. The two difference values coming from forward and backward estimations are combined to improve the robustness of detection [

18]. In [

18], after HPT, the authors also apply a fusion-based filtering which utilizes the neighbor pixels’ decisions to reduce false alarm rates.

When we applied HPT for the before-flood SAR and the after-flood optical Système Probatoire d’Observation dela Terre (SPOT) image pair, we noticed that if the gamma parameter value of HPT is not selected properly, the amplitude scale of the transformed pre-event image may not be close to the scale of the post-event image. This inconsistency can then result in unreliable results since there is a difference operation involved which computes the difference between the transformed pre-event image and original post-event image. In order to eliminate the scaling inconsistencies, we incorporated weight normalization when the transformed image pixels are computed utilizing k-nearest pixels among the unchanged pixels library. Each weight value is normalized with the sum of the k weights.

3. EMAP

In this section, we briefly introduce EMAP. Mathematically, given an input grayscale image

and a sequence of threshold levels {

Th1,

Th2, …

Thn}, the attribute profile (AP) of

is obtained by applying a sequence of thinning and thickening attribute transformations to every pixel in

as follows:

where

and

are the thickening and thinning operators at threshold

, respectively. The EMAP of

is then acquired by stacking two or more APs using any feature reduction technique on multispectral/hyperspectral images, such as purely geometric attributes (e.g., area, length of the perimeter, image moments, shape factors), or textural attributes (e.g., range, standard deviation, entropy) [

31,

32,

33].

More technical details about EMAP can be found in [

31,

32,

33].

In this paper, the “area (a)” and “length of the diagonal of the bounding box (d)” attributes of EMAP [

22] were used. The lambda parameters for the area attribute of EMAP, which is a sequence of thresholds used by the morphological attribute filters, were set to 10 and 15, respectively. The lambda parameters for the Length attribute of EMAP were set to 50, 100, and 500. With this parameter setting, EMAP creates 11 synthetic bands for a given single band image. One of the bands comes from the original image. For change detection methods that can only handle single band images, each of the 11 EMAP-synthetic bands is processed individually to get a change map and the resulting 11 change maps from each band are averaged to get the final change detection map. Similarly, for the reference case where the original single band images are used for change detection without EMAP, if a change detection method can only handle single band images, each of the original band images is processed individually to get a change map. The resulting change maps from these original bands are averaged to get the final change detection map where this final change detection map corresponds to the reference case.

4. Results

In this paper, we used receiver operating characteristics (ROC) curves to evaluate the different change detection algorithms. Each ROC curve is a plot of correct detection rates versus false alarm rates. A high performing algorithm should be close to a step function. We extracted AUC values from the ROC curves to compare different methods. An AUC value of one means perfect detection. We also generated change detection maps for visual inspection. Each detection map can be visually compared to the ground truth change map for performance evaluation.

We used the four image pairs mentioned in [

26] and the Institute of Electrical and Electronics Engineers (IEEE) contest dataset flood multimodal image pair [

34] for extensive change detection performance comparisons. In these investigations, when applying HPT, the gamma parameter was set to 100 and the k parameter was set to 500. All methods, except HPT, are unsupervised algorithms. For the SSIM algorithm, we used an image patch size of 30. For other algorithms such as IR, CC, CE, ACD, PP, D-MDS, and T-MDS, we do not need to set any specific parameters. For M3CD, we used the default settings in the original source codes [

26]. For the PP algorithm, we applied the basic version (Equations (4) and (5)) because the Euclidean and SAM versions show some improvement in some cases but not so good results in other cases.

Table 1 summarizes the five datasets used in our experiments. The first dataset corresponds to the IEEE SAR/SPOT image pair [

34]. The pre-event image from the SAR instrument is a single band and the post-event SPOT optical image is made up of 3 bands. For the single band case, the third band in the SPOT image is used since this band is the most sensitive one to water detection. The other four image pairs were taken from the Montreal M3CD dataset, which is publicly available in [

26]. The four Montreal image pairs in [

26] are greyscale single band images. These five datasets have diverse image characteristics with different resolutions, image sizes, events, and sensing modalities. Some of them are quite challenging in terms of detecting the changes.

In the following, brief information about the instruments used for capturing the images are briefly mentioned for each image pair. The five image pairs are then displayed followed by the ROC curves for all the change detection techniques applied, the ground truth change map, and the change detection score images.

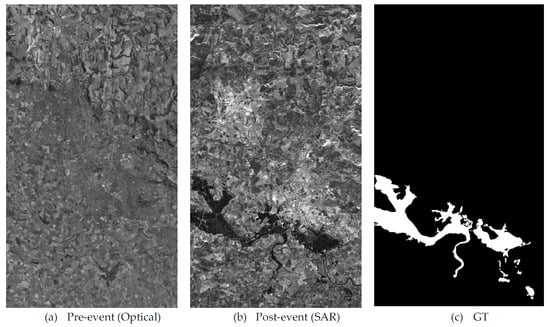

4.1. Image Pair 1: SAR-SPOT (Near Infrared)

This data set was the IEEE Contest data set [

34]. The image size is 472 × 264 pixels. The event is flooding near Gloucester, UK.

Figure 2 shows the pre- and post-event images. The pre-event image is a single band SAR (

Figure 2a) and the post-event image is a near infrared (NIR) band of the SPOT image (

Figure 2b).

Figure 2c shows the ground truth (GT) change map.

Speckle filtering is a critical step before processing any SAR images especially when using them for change detection purposes. In this multimodal image pair, which the pre-event image is acquired by a SAR, we applied a Wiener filter for speckle filtering before applying the ten change detection algorithms.

Figure 3 and

Figure 4 show the ROC curves of ten algorithms without and with using EMAP, respectively. It can be seen that the performance variation is huge. We observe that IR, M3CD, CC, and HPT performed better than others. SSIM is the worst. We also observe that some methods have improved after using EMAP. For instance, HPT with EMAP was improved even further.

Table 2 summarizes the AUC metrics. The last column of

Table 2 shows the difference between the AUCs with EMAP and without EMAP. Positive numbers indicate improvements when using EMAP and negative numbers otherwise. It can be seen that six out of ten methods have benefitted from the use of EMAP.

Figure 5 compares the change maps produced by the various algorithms with and without using EMAP. Some change maps such as M3CD, HPT, CC, ACD, and IR look decent. However, CE, PP, and SSIM maps do not look that good. Moreover, change maps of T-MDS and PP appear to look worse with EMAP.

4.2. Image Pair 2: NIR-Optical

The image pair as shown in

Figure 6 is between near infrared and optical where the pre-event image (

Figure 6a) was captured with Landsat-5 Thermic (NIR band) and the post-event image (

Figure 6b) was captured with optical imager.

Figure 6c shows the ground truth. The image size is 412 × 300 pixels. The two images captured lake overfill after some rains. This pair was obtained from [

26]. Only the greyscale images are available.

Figure 7 and

Figure 8 show 20 ROC curves generated by ten algorithms without and with EMAP, respectively. It can be seen that HPT, IR, CC, and M3CD have good performance. SSIM and D-MDS are the worst. Since there are many curves, it is difficult to judge which methods have benefitted from the use of EMAP.

Table 3 summarizes the AUC of all methods. Positive numbers in the last column indicate improved results and negative numbers correspond to worsening results. It can be observed that five methods have improved when EMAP was used. D-MDS improved the most from 0.53 to 0.93. SSIM suffered the most after using EMAP.

Figure 9 shows the change maps from all methods. M3CD and HPT have the cleanest change detection maps, followed by CC, ACD, IR, and CE. SSIM has the worst change map visually.

4.3. Image Pair 3: Optical-SAR

Here, the pre-event image (

Figure 10a) is a QuickBird image and the post-event image (

Figure 10b) is a TerraSAR-X image. The image size is 2325 × 4135. The ground truth change map is shown in

Figure 10c. The event is flooding. This pair was obtained from [

26]. Although Quickbird has four bands, only the grayscale image is available.

From the ROC curves shown in

Figure 11 and

Figure 12, one can observe that HPT and IR performed the best. D-MDS, T-MDS, ACD, CE, and SSIM did not perform well. In this dataset, it can be seen that quite a few methods with EMAP have improved significantly over those without EMAP.

To quantify the performance gain of using EMAP, one can look at

Table 4. We can see that eight out of ten methods have benefitted from the use of EMAP. The HPT method increased from 0.847 to 0.9427, which is quite significant.

Figure 13 compares the change maps of all methods. It can be seen that HPT looks cleaner and has fewer false alarms. The IR and M3CD results also look decent. The change map of CC with EMAP has improved over that without EMAP. Other methods do not seem to yield good results.

4.4. Image Pair 4: SAR-Optical

This pair was obtained from [

26]. As shown in

Figure 14, the pre-event image is a TerraSAR-X image (

Figure 14a) and the post-event image (

Figure 14b) is an optical image from Pleiades. The ground truth change map is shown in

Figure 14c. The image size is 4404 × 2604. The objective is to detect changes due to construction. Only the grayscale image (Pleiades) was available.

From the ROC curves shown in

Figure 15 and

Figure 16, we can see that M3CD and CC performed better than others. Others did not perform well. It appears that the pre-event SAR image is quite noisy. However, some filtering operations such as median filtering did not enhance the performance any further. The methods with EMAP have seen dramatic improvements. For instance, HPT with EMAP (dotted red) improved quite a lot over the case of without EMAP (red). The D-MDS method suffered some drawback when EMAP was used.

Table 5 summarizes the AUC values of all the methods. One can see that seven out of ten methods have seen improvements when EMAP was used. HPT received the most performance boost.

Figure 17 compares the change maps of all the methods with and without EMAP. We can see that M3CD, HPT, CC, IR, CE, and PP have relatively clean change maps. However, D-MDS, T-MDS, and SSIM methods do not have clean change maps.

4.5. Image Pair 5: Optical-Optical

This is an optical pair with only grayscale images. This pair was obtained from [

26]. As shown in

Figure 18, the pre-event image (

Figure 18a) was captured with Pleiades and the post-event image (

Figure 18b) was captured with Worldview 2.

Figure 18c shows the ground truth change map. The image size is 2000 × 2000. The grey images are formed by taking the average of several bands. The band compositions are different in the pre- and post-event images. Since the spectral bands in the two images are different, the appearance of the two images are quite different, making this pair very difficult for change detection. The objective of the change detection is to capture construction activities.

From the ROC curves in

Figure 19 and

Figure 20, M3CD was the best. SSIM also performed well perhaps due to the fact this pair is an optical-optical image pair. SSIM is likely to work better for homogeneous images. D-MDS, T-MDS, ACD, CE, IR, and PP did not work well. We can also see that some methods with EMAP such as HPT have seen performance improvements.

Table 6 summarizes all the AUC values of the methods with and without EMAP. It is very clear to see that eight out of ten methods have seen improved performance. The HPT method improved from 0.52 to 0.69. It is also observed that SSIM suffered some setback when EMAP was used.

Figure 21 compares the change maps of all methods with and without EMAP. M3CD has the best visual performance. HPT with EMAP also has good change detection map. Others do not have distinct change detection. It can be seen that SSIM map is somewhat fuzzy, but nevertheless captures most of the changes.

5. Observations

To quantify the performance gain, the AUC measure was applied to the resultant ROC curves of all methods for the two change detection cases (using the original single band and using EMAP-synthetic bands) to assess the impact of using EMAP synthetic bands for change detection. The EMAP results for a change detection method are then compared with the corresponding reference case (that uses original single band) of the same change detection method. The AUC differences for the reference and EMAP cases are used as a measure to assess how EMAP affects the performance of a change detection method.

Table 7 shows the AUC differences between using original single band case and using EMAP-synthetic bands for change detection. The positive-valued cells in

Table 7 indicate that using EMAP-synthetic bands improved the detection performance and negative-valued cells indicate that it degraded the detection performance for that method. Overall, it is observed that the change detection performances of many of the applied change detection methods are improved by using the EMAP synthetic bands. A very consistent detection performance boost in all five datasets was observed for HPT, CC, and CE methods. Especially, HPT tends to be significantly improved by using EMAP bands except in the dataset-1 where it already performs well. Similarly, detection performance was improved for the ACD method in four of the five datasets. The only dataset where ACD’s performance slightly decreased is the third dataset. M3CD is affected slightly by using the EMAP bands since in most datasets the ROC curves are very close to each other for original single band case and EMAP case. Image Ratio, Pixel Pair, D-MDS and T-MDS results with EMAP are found to be inconsistent. In some datasets, these methods perform better and in some worse using EMAP showing no clear pattern. SSIM tends to perform worse with the EMAP synthetic bands with the exception of the first dataset.

The computational complexity of the ten algorithms varies quite a lot.

Table 8 summarizes the processing times of different algorithms with and without using EMAP for Dataset-1. The algorithms, excluding M3CD, were run using a computer (Windows 7) with an Intel Pentium CPU with 2.90 GHz, 2 Cores, and 4 GB of RAM. The M3CD algorithm was executed in a Linux computer with Intel i7-4790 CPU at 3.60 GHz and 24 GB of RAM.

It can be seen that HPT is the most time-consuming algorithm, followed by M3CD and PP. CC, ACD, CE, IR, and D-MDS are all low complexity algorithms.

6. Conclusions

In this paper, we investigated the use of EMAP to generate synthetic bands and examined the impact of EMAP use in change detection performance using heterogeneous images. We presented extensive comparative studies with ten change detection algorithms (nine of them from the literature and one of our own) using five multimodal datasets. From the investigations, we observed that, in 34 out of 50 cases, change detection performance was improved, which shows a strong indication about the positive impact of using EMAP for change detection, especially when the number of original bands in the image pair is not that many. A consistent change detection performance boost in all five datasets was observed with the use of EMAP for HPT, CC, and CE.