Improving Field-Scale Wheat LAI Retrieval Based on UAV Remote-Sensing Observations and Optimized VI-LUTs

Abstract

1. Introduction

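| LUTs | Platforms | Sensors | References |
|---|---|---|---|
| Reflectance-LUTs | UAV/Airborne | Multispectral camera | [12,15] |
| Reflectance-LUTs | UAV/Airborne | Hyperspectral camera | [11,13] |
| Reflectance-LUTs | Satellite | ZY-3 MUX, GF-1 WFV, HJ-1 CCD | [18] |
| Reflectance-LUTs | Satellite | Landsat | [19,20,21] |
| Reflectance-LUTs | Satellite | Sentinel-2 | [22,23,24] |
| Reflectance-LUTs | Satellite | RapidEye | [22] |
| Reflectance-LUTs | Satellite | CHRIS/PROBA | [25] |
| Reflectance-LUTs | Satellite | MODIS | [20,26] |
| Reflectance-LUTs | Satellite | AWiFS | [27] |
| Reflectance-LUTs | Ground-based | Hyperspectral spectrometer | [28,29,30] |
| VI-LUTs | Satellite | CHRIS/PROBA | [31] |
| VI-LUTs | Satellite | RapidEye | [17] |
| VI-LUTs | Satellite | Landsat | [32] |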

2. Methodology

2.1. Study Area and Long-Term Experimental Plots

2.2. Ground Measurements and UAV Flight Missions

2.2.1. Ground Measurements of LAI

2.2.2. UAV Flight Missions and Data Pre-Processing

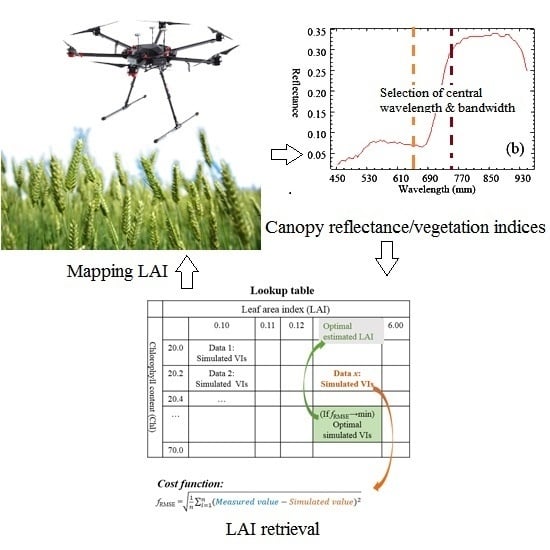

2.3. Retrieving LAI from UAV Data Using PROSAIL Model

2.3.1. Selecting Optimal VIs for LAI Retrieval (Global Sensitivity Analysis)

| m-VIs | Equations | R-VIs (ρ2 = ρR) | G-VIs (ρ2 = ρG) | E-VIs (ρ2 = ρE) | Ref. |
|---|---|---|---|---|---|
| m-ARVI | (ρ1 − 2ρ2 + ρB)/(ρ1 + 2ρ2 − ρB) | √ | √ | √ | [3] |
| m-EVI | 2.5((ρ1 − ρ2)/(ρ1 + 6ρ2 − 7.5ρB + 1)) | √ | √ | √ | [46] |
| m-WDRVI | (0.12ρ1 − ρ2)/(0.12ρ1 + ρ2) | √ | √ | √ | [46] |
| m-MSR | | √ | √ | √ | [47,48] |
| m-MSAVI2 | | √ | √ | √ | [38] |
| m-MCARI2 | | √ | √ | √ | [48] |
| m-MTVI1 | 1.2(1.2(ρ1 − ρG) − 2.5(ρ2 − ρG)) | √ | | √ | [48] |
| m-TVI | 0.5(120(ρ1 − ρG) − 200(ρ2 − ρG)) | √ | | √ | [48] |
| m-NDVI | (ρ1 − ρ2)/(ρ1 + ρ2) | √ | √ | √ | [28] |
| m-OSAVI | 1.16(ρ1 − ρ2)/(ρ1 + ρ2 + 0.16) | √ | √ | √ | [46] |
| m-RVI | ρ1/ρ2 | √ | √ | √ | [3] |
| m-SAVI | (1 + 0.5)((ρ1 − ρ2)/(ρ1 + ρ2 + 0.5)) | √ | √ | √ | [3] |
| m-NRI | ρ2/(ρ2 + ρE + ρ1) | √ | √ | | [49] |
| m-EVI2 | 2.5(ρ1 − ρ2)/(ρ1 + 2.4ρ2 + 1) | √ | √ | √ | [35,46] |
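
The band substitution in the table above can be sketched in a few lines. This is a minimal illustration, assuming that ρ1 is the near-infrared band (the table does not define ρ1) and using made-up reflectance values; the function names are hypothetical and only three of the listed indices are shown.

```python
def m_ndvi(rho1, rho2):
    """m-NDVI = (rho1 - rho2) / (rho1 + rho2)."""
    return (rho1 - rho2) / (rho1 + rho2)


def m_arvi(rho1, rho2, rho_b):
    """m-ARVI = (rho1 - 2*rho2 + rho_b) / (rho1 + 2*rho2 - rho_b)."""
    return (rho1 - 2 * rho2 + rho_b) / (rho1 + 2 * rho2 - rho_b)


def m_nri(rho1, rho2, rho_e):
    """m-NRI = rho2 / (rho2 + rho_e + rho1)."""
    return rho2 / (rho2 + rho_e + rho1)


# Hypothetical canopy reflectances (illustrative values, not measurements).
bands = {"B": 0.03, "G": 0.08, "R": 0.05, "E": 0.20, "NIR": 0.45}

# rho1 is assumed to be NIR; rho2 is swapped between the R, G, and E bands
# to obtain the R-, G-, and E-variants of the same index.
ndvi_variants = {
    f"{name}-NDVI": m_ndvi(bands["NIR"], bands[name]) for name in ("R", "G", "E")
}

for name, value in ndvi_variants.items():
    print(f"{name}: {value:.3f}")
```

Only the ρ2 argument changes between variants, so one function per index covers all three columns of the table.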

2.3.2. Generating Reflectance-LUTs and VI-LUTs

2.3.3. Retrieving LAI through Cost Functions
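
A minimal sketch of cost-function retrieval from a VI-LUT, under stated assumptions: the cost is the RMSE between observed and simulated values, the solution is the single minimum-cost entry, and `toy_ndvi` is a hypothetical saturating curve standing in for a PROSAIL simulation. None of these names come from the study.

```python
import numpy as np


def toy_ndvi(lai, ndvi_soil=0.15, ndvi_inf=0.92, k=0.6):
    """Hypothetical stand-in for a PROSAIL run: a saturating NDVI-LAI curve."""
    lai = np.asarray(lai, dtype=float)
    return ndvi_inf - (ndvi_inf - ndvi_soil) * np.exp(-k * lai)


def build_vi_lut(lai_grid):
    """Return an array with columns [LAI, simulated VI]."""
    return np.column_stack([lai_grid, toy_ndvi(lai_grid)])


def retrieve_lai(observed, lut):
    """Pick, for each observation, the LAI of the LUT entry with the lowest RMSE."""
    sim = lut[:, 1:]                                   # (n_entries, n_features)
    obs = np.asarray(observed, dtype=float).reshape(-1, sim.shape[1])
    diff = obs[:, None, :] - sim[None, :, :]           # (n_obs, n_entries, n_features)
    cost = np.sqrt((diff ** 2).mean(axis=2))           # RMSE per (observation, entry)
    return lut[cost.argmin(axis=1), 0]


lai_grid = np.linspace(0.1, 6.0, 591)                  # LAI from 0.1 to 6 in steps of 0.01
lut = build_vi_lut(lai_grid)

# Round trip: simulate three "observations", then invert them through the LUT.
estimates = retrieve_lai(toy_ndvi([0.5, 1.62, 3.15]), lut)
print(estimates)
```

A reflectance-LUT would follow the same pattern with several band reflectances per entry instead of one index value; `retrieve_lai` already accepts more than one feature column.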

2.3.4. Optimizing LAI Retrieval Using Hyperspectral Datasets

2.4. Statistical Analysis

3. Results

3.1. Optimal VIs Selected through Global Sensitivity Analyses

3.2. LAI Retrieval Based on Two LUT Strategies Using Multispectral UAV Data

3.3. Optimization of VI-LUTs for LAI Retrieval Using Hyperspectral UAV Data

3.3.1. Optimization of Central Wavelengths for VI Calculation

3.3.2. LAI Retrieval Based on Two LUT Strategies Using Hyperspectral UAV Data

3.3.3. Evaluation of Optimized VI-LUTs Using Hyperspectral Data for LAI Retrieval

4. Discussion

4.1. Analyses of LAI Retrieval Performance for Reflectance-LUTs and VI-LUTs

4.2. Analyses of LAI Retrieval Performance for Different VI-LUTs

4.3. Other Issues Regarding LAI Retrieval Accuracy

4.4. Analyses of Optimization for LUT Strategies

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Crops | Year | Reflectance-LUTs: fRMSE Input | Reflectance-LUTs: R² | Reflectance-LUTs: RMSE | Reflectance-LUTs: MRE | VI-LUTs: fRMSE Input | VI-LUTs: R² | VI-LUTs: RMSE | VI-LUTs: MRE |
|---|---|---|---|---|---|---|---|---|---|
| Wheat | 2016 (n = 72) | R,NIR | 0.41 | 2.49 | 1.23 | NDVI | 0.70 | 0.47 | 0.16 |
| Wheat | 2016 (n = 72) | R,NIR,G | 0.41 | 2.49 | 1.23 | MCARI | 0.75 | 0.83 | 0.34 |
| Wheat | 2016 (n = 72) | R,NIR,E | 0.41 | 2.49 | 1.23 | NRI | 0.74 | 1.04 | 0.40 |
| Wheat | 2018 (n = 107) | R,NIR | 0.42 | 0.94 | 0.70 | NDVI | 0.76 | 0.44 | 0.25 |
| Wheat | 2018 (n = 107) | R,NIR,B | 0.42 | 0.94 | 0.70 | ARVI | 0.74 | 0.51 | 0.30 |
| Wheat | 2018 (n = 107) | R,NIR,G | 0.42 | 0.94 | 0.70 | MCARI | 0.75 | 0.38 | 0.22 |
| Wheat | 2018 (n = 107) | R,NIR,E | 0.42 | 0.94 | 0.70 | NRI | 0.75 | 0.46 | 0.25 |
| Maize | 2016 (n = 40) | R,NIR | 0.00 | 1.48 | 0.42 | NDVI | 0.68 | 0.73 | 0.17 |
| Maize | 2016 (n = 40) | R,NIR,G | 0.00 | 1.48 | 0.42 | MCARI | 0.73 | 0.58 | 0.16 |
| Maize | 2016 (n = 40) | R,NIR,E | 0.00 | 1.48 | 0.42 | NRI | 0.61 | 1.56 | 0.40 |
| Maize | 2018 (n = 113) | R,NIR | 0.00 | 2.21 | 0.87 | NDVI | 0.61 | 0.62 | 0.18 |
| Maize | 2018 (n = 113) | R,NIR,B | 0.00 | 2.21 | 0.87 | ARVI | 0.59 | 0.66 | 0.19 |
| Maize | 2018 (n = 113) | R,NIR,G | 0.00 | 2.21 | 0.87 | MCARI | 0.71 | 1.19 | 0.42 |
| Maize | 2018 (n = 113) | R,NIR,E | 0.00 | 2.21 | 0.87 | NRI | 0.62 | 0.66 | 0.20 |

| Crops | Reflectance-LUTs: Bands | Reflectance-LUTs: R² | Reflectance-LUTs: RMSE | Reflectance-LUTs: MRE | VI-LUTs: VIs | VI-LUTs: R² | VI-LUTs: RMSE | VI-LUTs: MRE |
|---|---|---|---|---|---|---|---|---|
| Wheat | R, NIR | 0.27 | 2.576 | 2.22 | NDVI | 0.80 | 0.55 | 0.31 |
| Wheat | R, NIR, B | 0.27 | 2.576 | 2.22 | ARVI | 0.76 | 0.66 | 0.40 |
| Wheat | R, NIR, G | 0.27 | 2.579 | 2.22 | MCARI2 | 0.82 | 0.37 | 0.26 |
| Wheat | R, NIR, E | 0.32 | 2.603 | 2.24 | NRI | 0.75 | 0.58 | 0.32 |
| Maize | R, NIR | 0.07 | 2.599 | 2.56 | NDVI | 0.22 | 0.75 | 0.36 |
| Maize | R, NIR, B | 0.12 | 2.550 | 2.50 | ARVI | 0.28 | 0.90 | 0.45 |
| Maize | R, NIR, G | 0.12 | 2.550 | 2.50 | MCARI2 | 0.39 | 0.44 | 0.17 |
| Maize | R, NIR, E | 0.11 | 2.547 | 2.50 | NRI | 0.33 | 0.78 | 0.37 |

References

1. Swain, K.C. Suitability of low-altitude remote sensing images for estimating nitrogen treatment variations in rice cropping for precision agriculture adoption. J. Appl. Remote Sens. 2007, 1, 013547.

2. Zhang, C.; Kovacs, J.M. The application of small unmanned aerial systems for precision agriculture: A review. Precis. Agric. 2012, 13, 693–712.

3. Soudani, K.; François, C.; Le Maire, G.; Le Dantec, V.; Dufrêne, E. Comparative analysis of IKONOS, SPOT, and ETM+ data for leaf area index estimation in temperate coniferous and deciduous forest stands. Remote Sens. Environ. 2006, 102, 161–175.

4. Ni, J.; Yao, L.; Zhang, J.; Cao, W.; Zhu, Y.; Tai, X. Development of an unmanned aerial vehicle-borne crop-growth monitoring system. Sensors 2017, 17, 502.

5. Potgieter, A.B.; George-Jaeggli, B.; Chapman, S.C.; Laws, K.; Suárez Cadavid, L.A.; Wixted, J.; Watson, J.; Eldridge, M.; Jordan, D.R.; Hammer, G.L. Multi-spectral imaging from an unmanned aerial vehicle enables the assessment of seasonal leaf area dynamics of sorghum breeding lines. Front. Plant Sci. 2017, 8, 1532.

6. Mueller, N.D.; Gerber, J.S.; Johnston, M.; Ray, D.K.; Ramankutty, N.; Foley, J.A. Closing yield gaps through nutrient and water management. Nature 2012, 490, 254–257.

7. Duveiller, G.; Weiss, M.; Baret, F.; Defourny, P. Retrieving wheat Green Area Index during the growing season from optical time series measurements based on neural network radiative transfer inversion. Remote Sens. Environ. 2011, 115, 887–896.

8. Geipel, J.; Link, J.; Claupein, W. Combined spectral and spatial modeling of corn yield based on aerial images and crop surface models acquired with an unmanned aircraft system. Remote Sens. 2014, 6, 10335–10355.

9. Yue, J.; Yang, G.; Li, C.; Li, Z.; Wang, Y.; Feng, H.; Xu, B. Estimation of winter wheat above-ground biomass using unmanned aerial vehicle-based snapshot hyperspectral sensor and crop height improved models. Remote Sens. 2017, 9, 708.

10. Walter, A.; Finger, R.; Huber, R.; Buchmann, N. Opinion: Smart farming is key to developing sustainable agriculture. Proc. Natl. Acad. Sci. USA 2017, 114, 6148–6150.

11. Duan, S.-B.; Li, Z.-L.; Wu, H.; Tang, B.-H.; Ma, L.; Zhao, E.; Li, C. Inversion of the PROSAIL model to estimate leaf area index of maize, potato, and sunflower fields from unmanned aerial vehicle hyperspectral data. Int. J. Appl. Earth Obs. Geoinf. 2014, 26, 12–20.

12. Roosjen, P.P.J.; Brede, B.; Suomalainen, J.M.; Bartholomeus, H.M.; Kooistra, L.; Clevers, J.G.P.W. Improved estimation of leaf area index and leaf chlorophyll content of a potato crop using multi-angle spectral data—Potential of unmanned aerial vehicle imagery. Int. J. Appl. Earth Obs. Geoinf. 2018, 66, 14–26.

13. Locherer, M.; Hank, T.; Danner, M.; Mauser, W. Retrieval of seasonal Leaf Area Index from simulated EnMAP data through optimized LUT-Based inversion of the PROSAIL model. Remote Sens. 2015, 7, 10321–10346.

14. Yao, X.; Wang, N.; Liu, Y.; Cheng, T.; Tian, Y.; Chen, Q.; Zhu, Y. Estimation of wheat LAI at middle to high levels using unmanned aerial vehicle narrowband multispectral imagery. Remote Sens. 2017, 9, 1304.

15. Xu, X.Q.; Lu, J.S.; Zhang, N.; Yang, T.C.; He, J.Y.; Yao, X.; Cheng, T.; Zhu, Y.; Cao, W.X.; Tian, Y.C. Inversion of rice canopy chlorophyll content and leaf area index based on coupling of radiative transfer and Bayesian network models. ISPRS J. Photogramm. Remote Sens. 2019, 150, 185–196.

16. Wang, Y.; Zhang, K.; Tang, C.; Cao, Q.; Tian, Y.; Zhu, Y.; Cao, W.; Liu, X. Estimation of rice growth parameters based on linear mixed-effect model using multispectral images from Fixed-Wing Unmanned Aerial Vehicles. Remote Sens. 2019, 11, 1371.

17. Dong, T.; Liu, J.; Shang, J.; Qian, B.; Ma, B.; Kovacs, J.M.; Walters, D.; Jiao, X.; Geng, X.; Shi, Y. Assessment of red-edge vegetation indices for crop leaf area index estimation. Remote Sens. Environ. 2019, 222, 133–143.

18. Zhao, J.; Li, J.; Liu, Q.; Wang, H.; Chen, C.; Xu, B.; Wu, S. Comparative analysis of Chinese HJ-1 CCD, GF-1 WFV and ZY-3 MUX sensor data for leaf area index estimations for maize. Remote Sens. 2018, 10, 68.

19. Ding, Y.; Zhang, H.; Li, Z.; Xin, X.; Zheng, X.; Zhao, K. Comparison of fractional vegetation cover estimations using dimidiate pixel models and look-up table inversions of the PROSAIL model from Landsat 8 OLI data. J. Appl. Remote Sens. 2016, 10, 036022.

20. Mridha, N.; Sahoo, R.N.; Sehgal, V.K.; Krishna, G.; Pargal, S.; Pradhan, S.; Gupta, V.K.; Kumar, D.N. Comparative evaluation of inversion approaches of the radiative transfer model for estimation of crop biophysical parameters. Int. Agrophys. 2015, 29, 201–212.

21. Su, W.; Huang, J.; Liu, D.; Zhang, M. Retrieving Corn Canopy Leaf Area Index from Multitemporal Landsat Imagery and Terrestrial LiDAR Data. Remote Sens. 2019, 11, 572.

22. Darvishzadeh, R.; Wang, T.; Skidmore, A.; Vrieling, A.; O’Connor, B.; Gara, T.; Ens, B.; Paganini, M. Analysis of Sentinel-2 and RapidEye for Retrieval of Leaf Area Index in a Saltmarsh Using a Radiative Transfer Model. Remote Sens. 2019, 11, 671.

23. Richter, K.; Hank, T.B.; Vuolo, F.; Mauser, W.; D’Urso, G. Optimal exploitation of the Sentinel-2 spectral capabilities for crop leaf area index mapping. Remote Sens. 2012, 4, 561–582.

24. Xie, Q.; Dash, J.; Huete, A.; Jiang, A.; Yin, G.; Ding, Y.; Peng, D.; Hall, C.C.; Brown, L.; Shi, Y.; et al. Retrieval of crop biophysical parameters from Sentinel-2 remote sensing imagery. Int. J. Appl. Earth Obs. Geoinf. 2019, 80, 187–195.

25. Danner, M.; Berger, K.; Wocher, M.; Mauser, W.; Hank, T. Retrieval of biophysical crop variables from multi-angular canopy spectroscopy. Remote Sens. 2017, 9, 726.

26. Fei, Y.; Jiulin, S.; Hongliang, F.; Zuofang, Y.; Jiahua, Z.; Yunqiang, Z.; Kaishan, S.; Zongming, W.; Maogui, H. Comparison of different methods for corn LAI estimation over northeastern China. Int. J. Appl. Earth Obs. Geoinf. 2012, 18, 462–471.

27. Nigam, R.; Bhattacharya, B.K.; Vyas, S.; Oza, M.P. Retrieval of wheat leaf area index from AWiFS multispectral data using canopy radiative transfer simulation. Int. J. Appl. Earth Obs. Geoinf. 2014, 32, 173–185.

28. Jay, S.; Maupas, F.; Bendoula, R.; Gorretta, N. Retrieving LAI, chlorophyll and nitrogen contents in sugar beet crops from multi-angular optical remote sensing: Comparison of vegetation indices and PROSAIL inversion for field phenotyping. Field Crops Res. 2017, 210, 33–46.

29. Tripathi, R.; Sahoo, R.N.; Sehgal, V.K.; Tomar, R.K.; Chakraborty, D.; Nagarajan, S. Inversion of PROSAIL model for retrieval of plant biophysical parameters. J. Indian Soc. Remote Sens. 2012, 40, 19–28.

30. Wang, S.; Gao, W.; Ming, J.; Li, L.; Xu, D.; Liu, S.; Lu, J. A TPE based inversion of PROSAIL for estimating canopy biophysical and biochemical variables of oilseed rape. Comput. Electron. Agric. 2018, 152, 350–362.

31. Lin, J.; Pan, Y.; Lyu, H.; Zhu, X.; Li, X.; Dong, B.; Li, H. Developing a two-step algorithm to estimate the leaf area index of forests with complex structures based on CHRIS/PROBA data. For. Ecol. Manag. 2019, 441, 57–70.

32. Quan, X.; He, B.; Li, X.; Liao, Z. Retrieval of grassland live fuel moisture content by parameterizing radiative transfer model with interval estimated LAI. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2016, 9, 910–920.

33. Teillet, P. Effects of spectral, spatial, and radiometric characteristics on remote sensing vegetation indices of forested regions. Remote Sens. Environ. 1997, 61, 139–149.

34. Steven, M.D.; Malthus, T.J.; Baret, F.; Xu, H.; Chopping, M.J. Intercalibration of vegetation indices from different sensor systems. Remote Sens. Environ. 2003, 88, 412–422.

35. Jiang, Z.; Huete, A.; Didan, K.; Miura, T. Development of a two-band enhanced vegetation index without a blue band. Remote Sens. Environ. 2008, 112, 3833–3845.

36. Kaufman, Y.J.; Tanre, D. Atmospherically resistant vegetation index (ARVI) for EOS-MODIS. IEEE Trans. Geosci. Remote Sens. 1992, 30, 261–270.

37. Qi, J.; Chehbouni, A.; Huete, A.R.; Kerr, Y.H.; Sorooshian, S. A modified soil adjusted vegetation index. Remote Sens. Environ. 1994, 48, 119–126.

38. Xue, J.; Su, B. Significant remote sensing vegetation indices: A review of developments and applications. J. Sens. 2017, 2017, 1–17.

39. Chen, H.; Huang, W.; Li, W.; Niu, Z.; Zhang, L.; Xing, S. Estimation of LAI in winter wheat from multi-angular hyperspectral VNIR data: Effects of view angles and plant architecture. Remote Sens. 2018, 10, 1630.

40. Broge, N.H.; Leblanc, E. Comparing prediction power and stability of broadband and hyperspectral vegetation indices for estimation of green leaf area index and canopy chlorophyll density. Remote Sens. Environ. 2001, 76, 156–172.

41. Liu, K.; Zhou, Q.; Wu, W.; Xia, T.; Tang, H. Estimating the crop leaf area index using hyperspectral remote sensing. J. Integr. Agric. 2016, 15, 475–491.

42. Nilson, T.; Kuusk, A. A reflectance model for the homogeneous plant canopy and its inversion. Remote Sens. Environ. 1989, 27, 157–167.

43. Berger, K.; Atzberger, C.; Danner, M.; D’Urso, G.; Mauser, W.; Vuolo, F.; Hank, T. Evaluation of the PROSAIL model capabilities for future hyperspectral model environments: A review study. Remote Sens. 2018, 10, 85.

44. Zhang, L.; Guo, C.L.; Zhao, L.Y.; Zhu, Y.; Cao, W.X.; Tian, Y.C.; Cheng, T.; Wang, X. Estimating wheat yield by integrating the WheatGrow and PROSAIL models. Field Crops Res. 2016, 192, 55–66.

45. Saltelli, A.; Tarantola, S.; Chan, K.P.-S. A quantitative model-independent method for global sensitivity analysis of model output. Technometrics 1999, 41, 39–56.

46. Zou, X.; Mottus, M. Sensitivity of Common Vegetation Indices to the Canopy Structure of Field Crops. Remote Sens. 2017, 9, 994.

47. Chen, J.M. Evaluation of vegetation indices and a modified simple ratio for boreal applications. Can. J. Remote Sens. 1996, 22, 229–242.

48. Haboudane, D. Hyperspectral vegetation indices and novel algorithms for predicting green LAI of crop canopies: Modeling and validation in the context of precision agriculture. Remote Sens. Environ. 2004, 90, 337–352.

49. Schleicher, T.D.; Bausch, W.C.; Delgado, J.A.; Ayers, P.D. Evaluation and refinement of the nitrogen reflectance index (NRI) for site-specific fertilizer management. In Proceedings of the American Society of Agricultural and Biological Engineers, Sacramento, CA, USA, 29 July–1 August 2001.

50. Gao, B. NDWI—A normalized difference water index for remote sensing of vegetation liquid water from space. Remote Sens. Environ. 1996, 58, 257–266.

51. Verger, A.; Vigneau, N.; Cheron, C.; Gilliot, J.-M.; Comar, A.; Baret, F. Green area index from an unmanned aerial system over wheat and rapeseed crops. Remote Sens. Environ. 2014, 152, 654–664.

52. Burkart, A.; Aasen, H.; Alonso, L.; Menz, G.; Bareth, G.; Rascher, U. Angular dependency of hyperspectral measurements over wheat characterized by a novel UAV based goniometer. Remote Sens. 2015, 7, 725–746.

53. Gitelson, A.A. Remote estimation of canopy chlorophyll content in crops. Geophys. Res. Lett. 2005, 32.

54. Hunt, E.R.; Horneck, D.A.; Spinelli, C.B.; Turner, R.W.; Bruce, A.E.; Gadler, D.J.; Brungardt, J.J.; Hamm, P.B. Monitoring nitrogen status of potatoes using small unmanned aerial vehicles. Precis. Agric. 2018, 19, 314–333.

55. Gitelson, A.A. Wide dynamic range vegetation index for remote quantification of biophysical characteristics of vegetation. J. Plant Physiol. 2004, 161, 165–173.

56. Fang, H.; Ye, Y.; Liu, W.; Wei, S.; Ma, L. Continuous estimation of canopy leaf area index (LAI) and clumping index over broadleaf crop fields: An investigation of the PASTIS-57 instrument and smartphone applications. Agric. For. Meteorol. 2018, 253–254, 48–61.

57. Luo, S.; Wang, C.; Xi, X.; Nie, S.; Fan, X.; Chen, H.; Yang, X.; Peng, D.; Lin, Y.; Zhou, G. Combining hyperspectral imagery and LiDAR pseudo-waveform for predicting crop LAI, canopy height and above-ground biomass. Ecol. Indic. 2019, 102, 801–812.

58. Kross, A.; McNairn, H.; Lapen, D.; Sunohara, M.; Champagne, C. Assessment of RapidEye vegetation indices for estimation of leaf area index and biomass in corn and soybean crops. Int. J. Appl. Earth Obs. Geoinf. 2015, 34, 235–248.

59. Chianucci, F.; Disperati, L.; Guzzi, D.; Bianchini, D.; Nardino, V.; Lastri, C.; Rindinella, A.; Corona, P. Estimation of canopy attributes in beech forests using true colour digital images from a small fixed-wing UAV. Int. J. Appl. Earth Obs. Geoinf. 2016, 47, 60–68.

60. Pisek, J.; Buddenbaum, H.; Camacho, F.; Hill, J.; Jensen, J.L.R.; Lange, H.; Liu, Z.; Piayda, A.; Qu, Y.; Roupsard, O.; et al. Data synergy between leaf area index and clumping index Earth Observation products using photon recollision probability theory. Remote Sens. Environ. 2018, 215, 1–6.

61. Darvishzadeh, R.; Matkan, A.A.; Ahangar, A.D. Inversion of a radiative transfer model for estimation of rice canopy chlorophyll content using a lookup-table approach. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 1222–1230.

62. Richter, K.; Atzberger, C.; Vuolo, F.; D’Urso, G. Evaluation of sentinel-2 spectral sampling for radiative transfer model based LAI estimation of wheat, sugar beet, and maize. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2011, 4, 458–464.

63. Becker-Reshef, I.; Vermote, E.; Lindeman, M.; Justice, C. A generalized regression-based model for forecasting winter wheat yields in Kansas and Ukraine using MODIS data. Remote Sens. Environ. 2010, 114, 1312–1323.

| Field (Plot Number, Plot Size) | Treatments ¹ (Plot Number) |
|---|---|
| Field A (16, 2 × 2 m²) | CT-RR ² (3), NT-RR (3), CT-S (3), NT-S (4), CT-1/2S (3) |
| Field B (25, 5 × 6 m²) | CK (4), NP (4), NK (4), PK (4), NPK (7), NPK-S (2) |
| Field C (6, 10 × 10 m²) | CT (6) |
| Field D (32, 5 × 10 m²) | N0-60%fc (3), N70-60%fc (3), N140-60%fc (3), N210-60%fc (3), N280-60%fc (4), N0-80%fc (3), N70-80%fc (3), N140-80%fc (3), N210-80%fc (3), N280-80%fc (4) |
| Field E (32, 5 × 10 m²) | 0%fc (8), 40%fc (8), 60%fc (8), 80%fc (8) |
| Field F (18, 8 × 40 m²) | NT-S-F1 (3), NT-S-F2 (3), NT-RR-F1 (3), NT-RR-F2 (3), CT-S-F1 (3), CT-S-F2 (3) |

| | Abbr. | Definitions |
|---|---|---|
| Tillage treatment | CT | conventional tillage |
| Tillage treatment | NT | non-tillage |
| Residual treatments | RR | residual removal |
| Residual treatments | S | returning the whole straw of each plot to soil |
| Residual treatments | 1/2S | returning half straw of each plot to soil |
| Fertilizer applications | CK | no N, P, K fertilizer |
| Fertilizer applications | N | nitrogenous fertilizer |
| Fertilizer applications | K | potash fertilizer |
| Fertilizer applications | P | phosphate fertilizer |
| N0, N70, N140, N210, N280 | N70 | 70 kg N ha⁻¹ for each crop season |
| 0%fc, 40%fc, 60%fc, 80%fc | 60%fc | irrigation to 60% of the field water capacity |
| Fertilization methods | F1 | only base fertilizer application |
| Fertilization methods | F2 | base fertilizer along with two topdressings |

| Cameras | Date | Field (Samples) | Min | Mean | Max | Standard Deviation | CV (%) * |
|---|---|---|---|---|---|---|---|
| Multispectral | 15 May 2018 | A (4) | 1.65 | 1.78 | 1.85 | 0.10 | 5.40 |
| Multispectral | 15 May 2018 | B (25) | 0.51 | 1.27 | 2.30 | 0.59 | 46.34 |
| Multispectral | 15 May 2018 | C (6) | 2.08 | 2.59 | 3.05 | 0.37 | 14.18 |
| Multispectral | 15 May 2018 | D (32) | 0.31 | 1.83 | 3.15 | 0.77 | 42.20 |
| Multispectral | 15 May 2018 | E (32) | 0.63 | 1.37 | 1.85 | 0.30 | 22.16 |
| Multispectral | 15 May 2018 | F (8) | 1.78 | 2.06 | 2.50 | 0.25 | 11.92 |
| Multispectral | 15 May 2018 | Total (107) | 0.31 | 1.62 | 3.15 | 0.65 | 40.03 |
| Multispectral | 16 May 2019 | B (25) | 0.21 | 1.25 | 2.56 | 0.79 | 63.30 |
| Multispectral | 16 May 2019 | D (32) | 0.46 | 1.51 | 2.93 | 0.78 | 51.73 |
| Multispectral | 16 May 2019 | Total (57) | 0.21 | 1.40 | 2.93 | 0.81 | 57.70 |
| Hyperspectral | 15 May 2018 | B (25) | 0.51 | 1.27 | 2.30 | 0.59 | 46.34 |
| Hyperspectral | 15 May 2018 | D (32) | 0.31 | 1.83 | 3.15 | 0.77 | 42.20 |
| Hyperspectral | 15 May 2018 | E (32) | 0.63 | 1.37 | 1.85 | 0.30 | 22.16 |
| Hyperspectral | 15 May 2018 | Total (89) | 0.31 | 1.51 | 3.15 | 0.63 | 41.85 |

| Sensor | Spectral Channels (Central Wavelength/Spectral Ranges) | Spatial Resolution | Spectral Resolution (nm) |
|---|---|---|---|
| Cubert S185 | 450–950 nm | 1 cm | 4 |
| RedEdge-M | B475, G560, R668, E717, NIR840 | 4 cm | B (20), G (20), R (10), E (10), NIR (40) |

| Variable | Abbr. | Unit | Value (LUT) * | Range (EFAST) ** |
|---|---|---|---|---|
| Leaf structure parameter | N | Unitless | 1.5 | 1–2 |
| Leaf chlorophyll content | Chl | μg·cm⁻² | 20–70 (step = 0.2) | 20–70 |
| Leaf carotenoid content | caro | μg·cm⁻² | 10 | 3–30 |
| Brown pigment content | - | arbitrary units | 0 | 0 |
| Equivalent water thickness | EWT | cm | 0.01 | 0.005–0.03 |
| Leaf dry mass per area | LMA | g·cm⁻² | 0.005 | 0.004–0.007 |
| Soil brightness parameter | psoil | Unitless | 0.1 | 0.01–0.3 |
| Leaf area index | LAI | m² m⁻² | 0.1–6 (step = 0.01) | 0.1–6 |
| Hot-spot size parameter | hot spot | m m⁻¹ | 0.2 | 0.05–1 |
| Solar zenith angle | - | degrees | 20 | 20 |
| Solar azimuth angle | - | degrees | 185 | 185 |
| View zenith angle | - | degrees | 0 | 0 |
| View azimuth angle | - | degrees | 0 | 0 |
| Average leaf angle | ALA | degrees | 70 | 30–70 |
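
The "Value (LUT)" column above implies a two-dimensional grid: only Chl and LAI vary, and every other input is fixed. The sketch below enumerates that grid to show its size. The helper `grid` and the dictionary keys are hypothetical names, and no PROSAIL call is made; each row of `combinations`, together with `fixed`, would be the input of one forward simulation.

```python
import numpy as np


def grid(start, stop, step):
    """Inclusive, evenly spaced grid built with linspace to avoid float drift."""
    n = int(round((stop - start) / step)) + 1
    return np.linspace(start, stop, n)


# Varied parameters, taken from the "Value (LUT)" column.
chl = grid(20.0, 70.0, 0.2)     # leaf chlorophyll content, μg·cm⁻²
lai = grid(0.1, 6.0, 0.01)      # leaf area index, m² m⁻²

# Parameters held fixed in the LUT (same column). Key names are hypothetical.
fixed = {
    "N": 1.5, "caro": 10.0, "brown": 0.0, "EWT": 0.01, "LMA": 0.005,
    "psoil": 0.1, "hotspot": 0.2, "sza": 20.0, "saa": 185.0,
    "vza": 0.0, "vaa": 0.0, "ALA": 70.0,
}

# Every (Chl, LAI) pair is one LUT entry.
chl_grid, lai_grid = np.meshgrid(chl, lai, indexing="ij")
combinations = np.column_stack([chl_grid.ravel(), lai_grid.ravel()])

print(len(chl), len(lai), combinations.shape)
```

With these steps the grid holds 251 Chl values and 591 LAI values, so 148,341 entries in total.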

| Dataset (central wavelength/bandwidth, nm) | B | G | R | E | NIR |
|---|---|---|---|---|---|
| Dataset 1 | 475/20 | 560/20 | 668/10 | 717/10 | 840/40 |
| Dataset 2 | 475/20 | 560/20 | 672 or 612/10 * | 717/10 | 752 or 756/10 ** |
| Dataset 3 | 475/4 | 560/4 | 668/4 | 717/4 | 840/4 |
| Dataset 4 | 475/4 | 560/4 | 672 or 612/10 * | 717/4 | 752 or 756/10 ** |
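
One way to derive such band sets from a hyperspectral spectrum is to average the narrow bands that fall inside each window. The sketch below assumes a boxcar (flat) spectral response and a hypothetical 4 nm sampling grid; the response function and band centres actually used in the study are not given here, and `simulate_band` is an illustrative name.

```python
import numpy as np


def simulate_band(wavelengths, reflectance, center, bandwidth):
    """Average the narrow bands inside [center - bandwidth/2, center + bandwidth/2]."""
    wavelengths = np.asarray(wavelengths, dtype=float)
    reflectance = np.asarray(reflectance, dtype=float)
    half = bandwidth / 2.0
    mask = (wavelengths >= center - half) & (wavelengths <= center + half)
    if not mask.any():
        # No sample inside the window: fall back to the nearest narrow band.
        mask = np.zeros(wavelengths.shape, dtype=bool)
        mask[np.abs(wavelengths - center).argmin()] = True
    return reflectance[..., mask].mean(axis=-1)


wavelengths = np.arange(450, 951, 4)      # hypothetical 4 nm sampling grid
spectrum = wavelengths / 1000.0           # synthetic linear spectrum for checking

# Dataset 1 from the table above: band -> (central wavelength, bandwidth) in nm.
dataset1 = {"B": (475, 20), "G": (560, 20), "R": (668, 10), "E": (717, 10), "NIR": (840, 40)}
bands = {
    name: simulate_band(wavelengths, spectrum, center, width)
    for name, (center, width) in dataset1.items()
}
print(bands)
```

Because the last axis is treated as the spectral axis, the same function also works on an image cube of shape (rows, columns, bands).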

| Year | Reflectance-LUTs: Bands | Reflectance-LUTs: R² | Reflectance-LUTs: RMSE | Reflectance-LUTs: MRE | VI-LUTs: VIs | VI-LUTs: R² | VI-LUTs: RMSE | VI-LUTs: MRE |
|---|---|---|---|---|---|---|---|---|
| 2018 (n = 107) | R,NIR | 0.42 | 0.94 | 0.70 | NDVI | 0.76 | 0.44 | 0.25 |
| 2018 (n = 107) | R,NIR,B | 0.42 | 0.94 | 0.70 | ARVI | 0.74 | 0.51 | 0.30 |
| 2018 (n = 107) | R,NIR,G | 0.42 | 0.94 | 0.70 | MCARI2 | 0.75 | 0.38 | 0.22 |
| 2018 (n = 107) | R,NIR,E | 0.42 | 0.94 | 0.70 | NRI | 0.75 | 0.46 | 0.25 |
| 2019 (n = 57) | R,NIR | 0.01 | 2.23 | 2.71 | NDVI | 0.78 | 0.38 | 0.27 |
| 2019 (n = 57) | R,NIR,B | 0.01 | 2.23 | 2.71 | ARVI | 0.74 | 0.47 | 0.23 |
| 2019 (n = 57) | R,NIR,G | 0.01 | 2.23 | 2.71 | MCARI2 | 0.83 | 0.33 | 0.30 |
| 2019 (n = 57) | R,NIR,E | 0.01 | 2.23 | 2.71 | NRI | 0.74 | 0.43 | 0.31 |
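
The three accuracy metrics in the table above can be computed as follows. This is a sketch under assumptions, since the definitions are not reproduced here: R² is taken as the squared Pearson correlation, and MRE as the mean of |estimated − observed| / observed. The function name `evaluate` is hypothetical.

```python
import numpy as np


def evaluate(observed, estimated):
    """Return R² (squared Pearson r), RMSE, and MRE for paired LAI values."""
    observed = np.asarray(observed, dtype=float)
    estimated = np.asarray(estimated, dtype=float)
    r = np.corrcoef(observed, estimated)[0, 1]
    rmse = np.sqrt(np.mean((estimated - observed) ** 2))
    mre = np.mean(np.abs(estimated - observed) / observed)
    return {"R2": r ** 2, "RMSE": rmse, "MRE": mre}


# Synthetic example: estimates with a constant bias of +0.5.
metrics = evaluate([1.0, 2.0, 3.0, 4.0], [1.5, 2.5, 3.5, 4.5])
print(metrics)
```

In this example R² is 1 even though every estimate is off by 0.5, which is why RMSE and MRE are reported alongside R².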

| Crops | VIs | ρ1 Bands (nm) | ρ2 Bands (nm) | r Value |
|---|---|---|---|---|
| Wheat | m-NDVI | 752 | 672 | 0.86 ** |
| Wheat | m-NRI | 752 | 672 | −0.86 ** |
| Wheat | m-MCARI2 | 756 | 612 | 0.87 ** |
| Wheat | m-ARVI | 752 | 672 | 0.86 ** |
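
A band-pair selection of this kind can be sketched as an exhaustive search that keeps the (ρ1, ρ2) pair whose index correlates most strongly with measured LAI. The code below is an assumption about the general approach, not the authors' procedure: it uses an NDVI-type index, Pearson's r as the criterion, and synthetic spectra in place of field data. All names are hypothetical.

```python
import numpy as np


def ndvi_like(rho1, rho2):
    """Normalized difference of two bands."""
    return (rho1 - rho2) / (rho1 + rho2)


def best_band_pair(wavelengths, spectra, lai, vi=ndvi_like):
    """spectra: (n_samples, n_bands). Returns (λ for ρ1, λ for ρ2, Pearson r)."""
    best = (None, None, 0.0)
    for i in range(len(wavelengths)):
        for j in range(len(wavelengths)):
            if i == j:
                continue
            values = vi(spectra[:, i], spectra[:, j])
            if np.std(values) == 0:       # a constant index carries no information
                continue
            r = np.corrcoef(values, lai)[0, 1]
            if abs(r) > abs(best[2]):
                best = (wavelengths[i], wavelengths[j], r)
    return best


# Synthetic demonstration data (not the study's measurements).
rng = np.random.default_rng(0)
lai = np.linspace(0.3, 3.2, 30)
wavelengths = [612, 672, 752, 756]
spectra = np.column_stack([
    0.10 * np.exp(-0.3 * lai),            # visible bands darken as LAI grows
    0.08 * np.exp(-0.5 * lai),
    0.25 + 0.08 * lai,                    # NIR-side bands brighten
    0.26 + 0.08 * lai,
]) + rng.normal(0.0, 0.005, size=(30, 4))

lam1, lam2, r = best_band_pair(wavelengths, spectra, lai)
print(lam1, lam2, round(r, 3))
```

Swapping ρ1 and ρ2 only flips the sign of an NDVI-type index, so the search ranks pairs by |r| and reports the signed value.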

| Reflectance-LUTs: Bands | Reflectance-LUTs: R² | Reflectance-LUTs: RMSE | Reflectance-LUTs: MRE | VI-LUTs: VIs | VI-LUTs: R² | VI-LUTs: RMSE | VI-LUTs: MRE |
|---|---|---|---|---|---|---|---|
| R, NIR | 0.27 | 2.58 | 2.22 | NDVI | 0.80 | 0.55 | 0.31 |
| R, NIR, B | 0.27 | 2.58 | 2.22 | ARVI | 0.76 | 0.66 | 0.40 |
| R, NIR, G | 0.27 | 2.58 | 2.22 | MCARI2 | 0.82 | 0.37 | 0.26 |
| R, NIR, E | 0.32 | 2.60 | 2.24 | NRI | 0.75 | 0.58 | 0.32 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Zhu, W.; Sun, Z.; Huang, Y.; Lai, J.; Li, J.; Zhang, J.; Yang, B.; Li, B.; Li, S.; Zhu, K.; Li, Y.; Liao, X. Improving Field-Scale Wheat LAI Retrieval Based on UAV Remote-Sensing Observations and Optimized VI-LUTs. Remote Sens. 2019, 11(20), 2456. https://doi.org/10.3390/rs11202456