Online Correction of the Mutual Miscalibration of Multimodal VIS–IR Sensors and 3D Data on a UAV Platform for Surveillance Applications

Abstract

1. Introduction

2. Related Work

2.1. IR–VIS Image Registration

2.2. Calibration of Multimodal VIS–IR

2.3. In Situ Calibration Correction

3. Materials and Methods

3.1. Design of the Calibration Target

3.2. IR–VIS Image Registration Procedure

3.2.1. Perspective Transformation

(1) Homography
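As a minimal sketch of the homography-based mapping named in this heading (not the authors' implementation), the snippet below maps a pixel from one image to another through a 3 × 3 matrix in homogeneous coordinates. The function name `apply_homography` and the matrix `H_EXAMPLE` are hypothetical and chosen only for illustration; a real matrix would come from calibration.

```python
from typing import Sequence, Tuple

Matrix3 = Sequence[Sequence[float]]


def apply_homography(H: Matrix3, point: Tuple[float, float]) -> Tuple[float, float]:
    """Map a 2D point through a 3x3 homography using homogeneous coordinates."""
    x, y = point
    u = H[0][0] * x + H[0][1] * y + H[0][2]
    v = H[1][0] * x + H[1][1] * y + H[1][2]
    w = H[2][0] * x + H[2][1] * y + H[2][2]
    if abs(w) < 1e-12:
        # The point lies on the line that the homography sends to infinity.
        raise ValueError("point maps to infinity under this homography")
    # Divide by the homogeneous coordinate to return to pixel coordinates.
    return (u / w, v / w)


# Hypothetical example matrix (assumption): scale by 2, then shift by (10, 5) px.
H_EXAMPLE = [
    [2.0, 0.0, 10.0],
    [0.0, 2.0, 5.0],
    [0.0, 0.0, 1.0],
]

if __name__ == "__main__":
    print(apply_homography(H_EXAMPLE, (3.0, 4.0)))
```

A single homography is exact only when the observed scene is planar or the two cameras share a projection centre, which is why the heading lists it alongside depth-aware alternatives.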

(2) Ray–Plane Intersections
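The geometric core of a ray–plane mapping can be sketched as follows. This is an illustrative example under stated assumptions, not the procedure used in the paper: the ray starts at a camera centre `origin` with direction `direction`, and the plane is given by a point `plane_point` and a normal `plane_normal`. All names are hypothetical.

```python
from typing import Optional, Sequence, Tuple

Vec3 = Sequence[float]


def dot(a: Vec3, b: Vec3) -> float:
    return a[0] * b[0] + a[1] * b[1] + a[2] * b[2]


def intersect_ray_plane(
    origin: Vec3,
    direction: Vec3,
    plane_point: Vec3,
    plane_normal: Vec3,
    eps: float = 1e-12,
) -> Optional[Tuple[float, float, float]]:
    """Return the 3D point where the ray hits the plane, or None if it does not."""
    denom = dot(plane_normal, direction)
    if abs(denom) < eps:
        # Ray is parallel to the plane: no unique intersection.
        return None
    offset = [plane_point[i] - origin[i] for i in range(3)]
    t = dot(plane_normal, offset) / denom
    if t < 0:
        # Intersection lies behind the ray origin (behind the camera).
        return None
    return (
        origin[0] + t * direction[0],
        origin[1] + t * direction[1],
        origin[2] + t * direction[2],
    )


if __name__ == "__main__":
    # Camera at the origin looking along +z at the plane z = 5.
    print(intersect_ray_plane((0, 0, 0), (0.1, 0.0, 1.0), (0, 0, 5), (0, 0, 1)))
```

The returned 3D point can then be projected into the second camera, which gives a pixel correspondence that is valid for scene points lying on the assumed plane.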

(3) Depth Map
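A depth-map-based mapping can be illustrated with the standard pinhole model: back-project a pixel with known depth to 3D, move it into the second camera's frame, and project it again. The sketch below assumes an undistorted pinhole camera, depth measured along the optical axis, and a rigid transform `(R, t)` from the source to the destination camera. The `Intrinsics` type and all function names are hypothetical, and the code is not taken from the paper.

```python
from typing import NamedTuple, Sequence, Tuple


class Intrinsics(NamedTuple):
    fx: float  # focal length in pixels, x
    fy: float  # focal length in pixels, y
    cx: float  # principal point in pixels, x
    cy: float  # principal point in pixels, y


def backproject(u: float, v: float, depth: float, k: Intrinsics) -> Tuple[float, float, float]:
    """Pixel (u, v) with depth along the optical axis -> 3D point in the camera frame."""
    return ((u - k.cx) * depth / k.fx, (v - k.cy) * depth / k.fy, depth)


def rigid_transform(R: Sequence[Sequence[float]], t: Sequence[float],
                    p: Sequence[float]) -> Tuple[float, float, float]:
    """Apply p' = R p + t."""
    x, y, z = (R[i][0] * p[0] + R[i][1] * p[1] + R[i][2] * p[2] + t[i] for i in range(3))
    return (x, y, z)


def project(p: Sequence[float], k: Intrinsics) -> Tuple[float, float]:
    """3D point in the camera frame -> pixel coordinates."""
    if p[2] <= 0:
        raise ValueError("point is behind the camera")
    return (k.fx * p[0] / p[2] + k.cx, k.fy * p[1] / p[2] + k.cy)


def map_pixel(u: float, v: float, depth: float, src: Intrinsics, dst: Intrinsics,
              R: Sequence[Sequence[float]], t: Sequence[float]) -> Tuple[float, float]:
    """Map a source pixel with known depth into the destination image."""
    return project(rigid_transform(R, t, backproject(u, v, depth, src)), dst)


if __name__ == "__main__":
    cam = Intrinsics(100.0, 100.0, 50.0, 50.0)  # hypothetical values
    identity = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0], [0.0, 0.0, 1.0]]
    print(map_pixel(50.0, 50.0, 2.0, cam, cam, identity, (0.1, 0.0, 0.0)))
```

Unlike a single homography, this mapping depends on the depth of each pixel, so it stays valid for non-planar scenes at the cost of needing 3D data.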

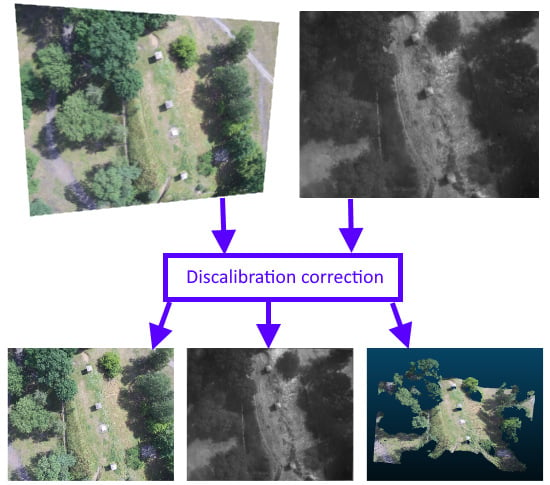

3.3. Online Miscalibration Detection and Correction

- detection of miscalibration between cameras, and

- mutual calibration correction.

3.3.1. Miscalibration Detection Method

3.3.2. Multimodal Calibration Correction

(1) Assessment of the Reliability of the Determined Transformation

(2) Assessment of Transformation Similarity

4. Experimental Results

4.1. Calibration and IR–VIS Image Mapping

- FLIR Duo Pro R camera, consisting of a pair of IR and VIS sensors in one case,

- Sony Alpha a6000 camera and FLIR Vue Pro R IR camera,

- Logitech C920 webcam and FLIR A35 camera.

4.2. Automatic Calibration Correction

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest


| Camera | FLIR Duo Pro R (VIS) | FLIR Duo Pro R (IR) | Sony Alpha a6000 | FLIR Vue Pro R | Logitech C920 | FLIR A35 |
|---|---|---|---|---|---|---|
| Resolution [px] | 4000 × 3000 | 640 × 512 | 6000 × 4000 | 640 × 512 | 1920 × 1080 | 320 × 256 |
| Focal length [mm] | 7.64 | 13.20 | 26.6 | 13.12 | 3.47 | 12.99 |
| Principal point (x, y) [mm] | (3.80, 2.81) | (5.26, 4.40) | (11.97, 7.52) | (5.53, 4.18) | (2.37, 1.34) | (4.13, 3.89) |
| Reprojection error [px] | 0.25 | 0.22 | 0.18 | 0.13 | 0.13 | 0.24 |

| Camera pair | FLIR Duo Pro R (VIS + IR) | Sony Alpha a6000 + FLIR Vue Pro R | Logitech C920 + FLIR A35 |
|---|---|---|---|
| Stereo reprojection error [px] | 0.48 | 0.79 | 0.78 |
| Translation (x, y, z) [mm] | (39.4, 1.1, −7.5) | (−46.8, −13.2, −35.9) | (−95.2, −7.1, −63.7) |
| Rotation (x, y, z) [°] | (0.11, 1.77, −0.29) | (1.44, −0.09, −0.35) | (3.79, 1.50, 2.37) |

| Preprocessing Method | ECC | ECC with Histogram Equalization | Phase Correlation | Phase Correlation with Histogram Equalization |
|---|---|---|---|---|
| None | 0.38/0.66 | 0.32/0.70 | 0.53/0.18 | 0.85/0.36 |
| Gradient | 0.49/0.33 | 0.80/0.20 | 0.95/0.94 | 0.95/0.93 |
| Canny | 0.50/0.18 | 0.33/0.01 | 0.66/0.98 | 0.77/0.98 |
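For readers unfamiliar with the phase-correlation columns in the table above, the following is a minimal, dependency-free sketch of how phase correlation recovers a translation between two images. It is an illustration only, not the authors' implementation: it uses a naive discrete Fourier transform suitable for tiny images, assumes a purely circular integer shift, and all function names are hypothetical. A production system would more likely rely on an FFT-based library routine.

```python
import cmath
import random


def _dft1(vec, sign):
    """Naive 1D DFT; sign=-1 is the forward transform, sign=+1 the unscaled inverse."""
    n = len(vec)
    return [sum(vec[m] * cmath.exp(sign * 2j * cmath.pi * m * k / n) for m in range(n))
            for k in range(n)]


def dft2(img, inverse=False):
    """Separable 2D DFT: transform the rows, then the columns."""
    sign = 1 if inverse else -1
    rows, cols = len(img), len(img[0])
    by_row = [_dft1(row, sign) for row in img]
    by_col = [_dft1([by_row[r][c] for r in range(rows)], sign) for c in range(cols)]
    scale = rows * cols if inverse else 1
    return [[by_col[c][r] / scale for c in range(cols)] for r in range(rows)]


def phase_correlation(a, b, eps=1e-12):
    """Estimate the (dy, dx) circular shift that turns image a into image b."""
    rows, cols = len(a), len(a[0])
    fa, fb = dft2(a), dft2(b)
    # Normalised cross-power spectrum: keep only the phase difference.
    unit = [[(fa[r][c].conjugate() * fb[r][c]) for c in range(cols)] for r in range(rows)]
    unit = [[v / (abs(v) + eps) for v in row] for row in unit]
    corr = dft2(unit, inverse=True)
    # The correlation surface peaks at the displacement.
    peak_r, peak_c = max(((r, c) for r in range(rows) for c in range(cols)),
                         key=lambda rc: corr[rc[0]][rc[1]].real)
    # Wrap large indices to negative shifts.
    dy = peak_r - rows if peak_r > rows // 2 else peak_r
    dx = peak_c - cols if peak_c > cols // 2 else peak_c
    return dy, dx


def circular_shift(img, dy, dx):
    """Shift an image by (dy, dx) with wrap-around."""
    rows, cols = len(img), len(img[0])
    return [[img[(r - dy) % rows][(c - dx) % cols] for c in range(cols)] for r in range(rows)]


if __name__ == "__main__":
    rng = random.Random(0)
    image = [[rng.random() for _ in range(8)] for _ in range(8)]
    print(phase_correlation(image, circular_shift(image, 2, 3)))
```

Because only the phase of the spectrum is used, the estimate is insensitive to global intensity scaling, which is one reason the method is attractive for images from different modalities once they have been reduced to comparable structure such as gradients or edges.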

| Calibration Correction Method | Detected Miscalibrations | Reliable Transformations | Calibration Correction Required | Erroneously Detected the Need for Correction |
|---|---|---|---|---|
| Affine transform | 79 | 79 | 63 | 0 |
| Perspective transform | 79 | 78 | 75 | 2 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Siekański, P.; Paśko, S.; Malowany, K.; Malesa, M. Online Correction of the Mutual Miscalibration of Multimodal VIS–IR Sensors and 3D Data on a UAV Platform for Surveillance Applications. Remote Sens. 2019, 11, 2469. https://doi.org/10.3390/rs11212469
