UAV-Based High Throughput Phenotyping in Citrus Utilizing Multispectral Imaging and Artificial Intelligence

Abstract

1. Introduction

2. Materials and Methods

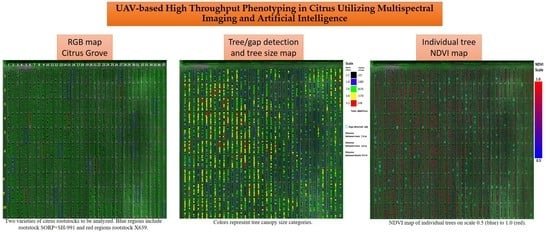

2.1. Study Area

2.2. Tree Detection and Analysis Process

2.2.1. UAV Imagery Acquisition

2.2.2. Photogrammetric and Multispectral Image Processing

2.2.3. First Tree Detection using Convolutional Neural Networks

2.2.4. Recognize Map Dimensions, Properties, and Second CNN Tree Detection

2.2.5. Calculate Individual Tree’s Canopy Area and NDVI

3. Evaluation Metrics

3.1. Tree and Tree Gap Detection

3.2. Tree Canopy Area Estimation

3.3. Statistical Indices Comparison Between Two Citrus Rootstocks

4. Results

4.1. Tree Detections

4.2. Canopy Area Estimation

4.3. Individual Plant Indices

4.4. Plant Indices Comparison for Two Citrus Rootstocks

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| | Number of Detections | TP | FP | FN | Ground Truth | Precision | Recall | F-Score |
|---|---|---|---|---|---|---|---|---|
| First CNN detection | 4889 | 4823 | 66 | 93 | 4916 | 98.7% | 98.1% | 98.4% |
| After second detection refinement | 4904 | 4899 | 5 | 17 | 4916 | 99.9% | 99.7% | 99.8% |

| | Number of Detections | TP | FP | FN | Ground Truth | Precision | Recall | F-Score |
|---|---|---|---|---|---|---|---|---|
| Gaps detection | 106 | 106 | 0 | 6 | 112 | 100% | 94.6% | 97.3% |
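The precision, recall, and F-score columns in the detection tables follow from the TP, FP, and FN counts. The sketch below assumes the standard definitions (precision = TP / (TP + FP), recall = TP / (TP + FN), F-score as their harmonic mean); the function name `detection_metrics` is hypothetical and only illustrates the arithmetic for the two tree-detection rows.

```python
def detection_metrics(tp: int, fp: int, fn: int) -> tuple[float, float, float]:
    """Return (precision, recall, F-score) from detection counts.

    Assumes the standard definitions; this helper is illustrative only.
    """
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f_score = 2 * precision * recall / (precision + recall)
    return precision, recall, f_score


# Counts taken from the tree-detection table above.
first_cnn = detection_metrics(tp=4823, fp=66, fn=93)
refined = detection_metrics(tp=4899, fp=5, fn=17)

for label, (p, r, f) in [("First CNN detection", first_cnn),
                         ("After second detection refinement", refined)]:
    print(f"{label}: precision={p:.1%}, recall={r:.1%}, F-score={f:.1%}")
```

Note that TP + FN equals the ground-truth count (4916 trees) in both rows, which is a quick consistency check on the table.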

| Trees | Ground-Truth Measured Area (m²) | Rectangle Area (m²) | NDVI-Based Image Segmentation Area (m²) | Rectangle Area Error (%) | NDVI-Based Image Segmentation Area Error (%) |
|---|---|---|---|---|---|
| 1 | 2.05 | 2.62 | 1.35 | 27.7% | 34.4% |
| 2 | 5.03 | 6.29 | 4.26 | 25.2% | 15.3% |
| 3 | 3.44 | 3.07 | 2.31 | 10.8% | 32.8% |
| 4 | 4.94 | 5.58 | 4.06 | 13.0% | 17.6% |
| 5 | 4.77 | 5.58 | 4.22 | 17.1% | 11.5% |
| 6 | 4.13 | 4.06 | 2.88 | 1.5% | 30.1% |
| 7 | 2.13 | 2.88 | 1.89 | 35.3% | 11.3% |
| 8 | 6.32 | 5.94 | 5.23 | 5.9% | 17.1% |
| 9 | 5.80 | 8.19 | 5.25 | 41.2% | 9.4% |
| 10 | 1.60 | 1.02 | 0.98 | 36.2% | 38.9% |
| 11 | 6.44 | 5.50 | 4.36 | 14.5% | 32.3% |
| 12 | 4.49 | 4.43 | 4.15 | 1.4% | 7.5% |
| 13 | 4.54 | 4.55 | 4.04 | 0.2% | 11.2% |
| 14 | 4.21 | 4.53 | 3.48 | 7.6% | 17.4% |
| 15 | 6.44 | 6.13 | 4.46 | 4.7% | 30.7% |
| 16 | 5.82 | 6.84 | 4.86 | 17.5% | 16.6% |
| 17 | 5.58 | 5.20 | 4.37 | 6.9% | 21.8% |
| 18 | 5.48 | 5.50 | 4.47 | 0.3% | 18.6% |
| 19 | 5.61 | 5.37 | 4.90 | 4.4% | 12.7% |
| 20 | 7.23 | 5.85 | 5.71 | 19.0% | 21.0% |
| Average Error | | | | 14.5% | 20.4% |
| Standard Deviation | | | | 12.7% | 9.4% |
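The error columns in the canopy-area table appear to be absolute relative errors against the ground-truth area. The sketch below assumes that definition, |estimate − ground truth| / ground truth; the helper name `area_error_pct` is hypothetical. Because the table lists areas rounded to two decimals, recomputed values may differ from the printed percentages by a few tenths of a percentage point.

```python
def area_error_pct(estimated_m2: float, ground_truth_m2: float) -> float:
    """Absolute relative error of an area estimate, in percent (assumed definition)."""
    return abs(estimated_m2 - ground_truth_m2) / ground_truth_m2 * 100.0


# (tree id, ground truth, rectangle area, NDVI-segmentation area), all in m^2,
# copied from three rows of the table above.
rows = [
    (9, 5.80, 8.19, 5.25),
    (13, 4.54, 4.55, 4.04),
    (20, 7.23, 5.85, 5.71),
]

errors = {
    tree: (area_error_pct(rect, truth), area_error_pct(ndvi, truth))
    for tree, truth, rect, ndvi in rows
}

for tree, (rect_err, ndvi_err) in errors.items():
    print(f"Tree {tree}: rectangle {rect_err:.1f}%, NDVI segmentation {ndvi_err:.1f}%")
```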

| Rootstock SORP+SH-991 | | | | Rootstock X639 | | | |
|---|---|---|---|---|---|---|---|
| Blocks | Area (m²) | NDVI | NIR/Red | Blocks | Area (m²) | NDVI | NIR/Red |
| 1 | 1.27 | 0.70 | 2.81 | 1 | 4.14 | 0.84 | 5.65 |
| 2 | 1.13 | 0.69 | 3.07 | 2 | 3.47 | 0.83 | 5.24 |
| 3 | 1.54 | 0.69 | 2.95 | 3 | 3.25 | 0.81 | 4.75 |
| 4 | 1.53 | 0.72 | 3.64 | 4 | 4.59 | 0.85 | 4.62 |
| 5 | 1.01 | 0.72 | 3.19 | 5 | 3.67 | 0.84 | 4.26 |
| 6 | 0.99 | 0.68 | 2.56 | 6 | 2.56 | 0.81 | 4.24 |
| 7 | 1.01 | 0.70 | 2.87 | 7 | 2.88 | 0.81 | 4.82 |
| 8 | 1.90 | 0.75 | 3.78 | 8 | 3.57 | 0.79 | 5.22 |
| 9 | 2.86 | 0.80 | 4.33 | 9 | 3.34 | 0.83 | 5.55 |
| 10 | 1.65 | 0.70 | 3.24 | 10 | 3.04 | 0.78 | 4.79 |
| 11 | 0.75 | 0.63 | 2.36 | 11 | 4.25 | 0.84 | 5.11 |
| 12 | 1.18 | 0.68 | 2.70 | 12 | 2.86 | 0.83 | 4.82 |
| 13 | 1.65 | 0.72 | 3.25 | 13 | 4.24 | 0.84 | 4.96 |
| 14 | 1.15 | 0.70 | 2.88 | 14 | 3.93 | 0.85 | 6.22 |
| 15 | 1.47 | 0.78 | 3.78 | 15 | 2.23 | 0.77 | 4.35 |
| 16 | 1.75 | 0.76 | 3.58 | | | | |
| 17 | 2.85 | 0.81 | 5.39 | Average | 3.47 | 0.82 | 4.97 |
| 18 | 2.30 | 0.72 | 3.57 | Standard Deviation | 0.68 | 0.03 | 0.55 |
| 19 | 1.32 | 0.69 | 3.19 | | | | |
| 20 | 1.04 | 0.69 | 3.05 | | | | |
| 21 | 1.84 | 0.77 | 3.76 | | | | |
| 22 | 1.43 | 0.71 | 3.25 | | | | |
| 23 | 1.63 | 0.73 | 3.46 | | | | |
| 24 | 1.17 | 0.70 | 3.30 | | | | |
| 25 | 1.55 | 0.75 | 4.29 | | | | |
| 26 | 1.40 | 0.72 | 3.68 | | | | |
| 27 | 1.88 | 0.76 | 3.65 | | | | |
| 28 | 1.97 | 0.71 | 3.00 | | | | |
| Average | 1.54 | 0.72 | 3.38 | | | | |
| Standard Deviation | 0.51 | 0.04 | 0.62 | | | | |
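The Average and Standard Deviation rows can be recomputed from the per-block values. As a minimal sketch, the snippet below does this for the X639 canopy-area column using Python's `statistics` module. It assumes the sample standard deviation (n − 1 denominator); that choice is an assumption, although it does reproduce the table's 0.68 for this column when rounded to two decimals.

```python
from statistics import mean, stdev

# Canopy area (m^2) for the 15 X639 blocks, copied from the table above.
x639_area_m2 = [
    4.14, 3.47, 3.25, 4.59, 3.67, 2.56, 2.88, 3.57,
    3.34, 3.04, 4.25, 2.86, 4.24, 3.93, 2.23,
]

avg_area = mean(x639_area_m2)
sd_area = stdev(x639_area_m2)  # sample standard deviation (assumed)

print(f"X639 canopy area: mean = {avg_area:.2f} m^2, SD = {sd_area:.2f} m^2")
```

The same two calls apply to the NDVI and NIR/Red columns and to the SORP+SH-991 blocks.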

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Ampatzidis, Y.; Partel, V. UAV-Based High Throughput Phenotyping in Citrus Utilizing Multispectral Imaging and Artificial Intelligence. Remote Sens. 2019, 11, 410. https://doi.org/10.3390/rs11040410
