1. Introduction

Synthetic aperture radar (SAR) is a coherent imaging system that generates high-resolution images of terrain and targets. Because SAR operates day and night and in all weather, overcoming the shortcomings of optical and infrared systems, it is widely used in ocean monitoring, resource exploration, and military development. SAR images are often corrupted by multiplicative noise called speckle. Speckle is formed by the interference of echoes within each resolution cell and makes analysis and processing by computer vision systems difficult. Therefore, removing this coherent noise is very important for SAR image applications.

Over the past few decades, scholars have proposed many methods for SAR image denoising. Some denoising methods are based on spatial filtering, for example, Lee filtering [1], Kuan filtering [2], Frost filtering [3], Gamma maximum a posteriori (MAP) filtering [4], and non-local means (NLM) denoising [5]. Since spatial filtering tends to darken the denoised SAR images, denoising algorithms based on the transform domain have been developed and have achieved remarkable results in recent years. These transform domain filters are mainly based on the wavelet transform and multi-scale geometric transforms, such as wavelet-domain Bayesian denoising [6], contourlet-domain SAR image denoising [7,8], and Shearlet-domain SAR image denoising [9,10,11]. The general procedure of transform domain filtering is, first, to transform the original image; then, to estimate the noise-free coefficients; and, finally, to obtain the denoised image via the inverse transform of the processed coefficients. The transform domain algorithms can effectively suppress speckle. However, due to some inherent disadvantages of the transform domain, these algorithms cause pixel distortion. Moreover, most speckle suppression algorithms use only the statistical relationship between a pixel and its neighboring pixels; they do not utilize the information of similar local regions or the natural statistical characteristics of the whole image, which could further enhance the denoising effect.

Through the study of various inverse problems in low-level vision, scholars have found that model-based optimization methods and discriminant learning methods have become vital strategies for solving such problems, including image denoising [12]. Model-based methods have been widely used in SAR image denoising in the last few years, including the sparse representation-based SAR image denoising algorithm [13], the block sorting for SAR image denoising algorithm [14], the non-local prior based SAR image denoising algorithm [15,16], and the low rank matrix for SAR image denoising algorithm [17,18]. A denoising algorithm based on discriminant learning tries to learn the degradation matrix of the model via machine learning algorithms for the subsequent noise reduction step. Commonly used discriminant models include linear regression, logistic regression (LR), neural networks, support vector machines, Gaussian processes, conditional random fields (CRF), and classification and regression trees (CART). With recent machine learning technologies, many new discriminant learning-based SAR image denoising algorithms have emerged. These include the neural network-based SAR image denoising algorithm [19], the support vector machine-based SAR image denoising algorithm [20], the convolutional neural network (CNN)-based SAR image denoising algorithm [21], which uses a CNN with residual learning to recover the speckle component and subtracts this component from the noisy image to achieve denoising [22], SAR despeckling with a dilated residual network including skip connections and residual learning [23], and so on. Zhang et al. [12] pointed out that the biggest difference between the model-based optimization methods and the discriminant learning methods is that the former have to specify the degradation matrix, while the latter try to learn the degradation matrix from the training data set. Moreover, the model-based optimization method can solve different inverse problems adaptively, but it is usually time-consuming. The discriminant learning method is able to suppress noise efficiently, but its application area is limited to specific tasks. In order to take advantage of both methods, Zhang et al. [12] trained a series of efficient and effective discriminant denoisers using a convolutional neural network and used the variable splitting technique to integrate the denoiser prior as a module into a model-based optimization method to solve the inverse problem. This method achieved a promising performance in solving classic inverse problems. Thus, this paper extends this method to SAR image denoising, where it achieves a superior effect on speckle noise suppression.

The CNN is a popular discriminant model for deep learning [24]. Deep learning-based methods have demonstrated the most promising performance in image processing tasks such as super resolution and image denoising [25,26]. A defining characteristic of deep learning is feature extraction: the most discriminative features are learned as relatively abstract high-level representations built from the lower-level features of the input data. In [12], a series of efficient and effective CNN denoisers were trained and integrated into the model-based denoising algorithm, which obtained a superior denoising effect. The algorithm in [12] constructs one CNN denoiser per noise variance, yielding a series of CNN-based prior denoisers, each of which works for its corresponding noise variance. However, as the noise level of speckle in a SAR image is unknown, this algorithm cannot be applied directly to SAR images for despeckling. To solve this problem, we select five denoisers to denoise the SAR image separately, and then use an image fusion algorithm to integrate the five denoised images into a final denoised SAR image.

Recently, scholars have proposed many image fusion algorithms. Data-driven image fusion methods and multi-scale image fusion methods are the two most popular families [27]. However, these methods do not fully consider spatial consistency and therefore tend to produce brightness and color distortion. A variety of optimization-based image fusion methods were then introduced, for example, image fusion algorithms based on generalized random walks [28], which can fully utilize the spatial information of an image. These methods estimate the weights of pixels in different source images via energy functions that work on the same positions in the different source images, and then fuse the source images into one image through the weighted average of the pixel values. Nevertheless, the optimization-based methods are computationally expensive because several iterations are needed to find a global optimal solution. Another disadvantage is that methods based on global optimization tend to over-smooth the weights, which is harmful to image fusion [29,30]. To overcome these problems, Li et al. [30] proposed fusion based on guided filtering (GFF), which combines pixel saliency with the spatial information of the image and achieves rapid fusion without relying on a specific image decomposition. Thus, the GFF algorithm is employed in our paper to fuse the denoised images produced by the CNN denoisers.

Traditionally, most noise suppression algorithms need to know the variance of the noise. It is normally difficult to estimate the noise level of SAR images, yet the noise level has a great influence on denoising. For example, the performance of the denoising algorithm proposed in [12] relies on the noise level of the image. Generally, when the estimated noise level is larger than the ground truth noise level of the image, the final denoised image produced by the model-based denoising algorithm tends to be over-smoothed [31], and if the estimated noise level is smaller than the ground truth noise level, the final denoised image contains more noise and artificial textures [31,32]. That is to say, for SAR image denoising, much speckle would remain after processing with denoisers at a low noise level, and the image obtained via a denoiser at a high noise level would appear over-smoothed as a side effect. However, a feasible idea [32] is to fuse the denoised images obtained from different denoising algorithms through a fusion algorithm to achieve a superior performance. CNN prior denoisers trained for different noise levels can act as different denoising algorithms, and the fusion algorithm based on guided filtering is a fast and advanced fusion algorithm. Therefore, following this idea, this paper proposes a new SAR image denoising algorithm based on convolutional neural networks and guided filtering. First, the algorithm chooses CNN prior denoisers at five noise levels to denoise the SAR image and then fuses the denoised images through the GFF fusion algorithm to obtain the final denoised image. Compared with the traditional despeckling methods and CNN-based despeckling methods, the most obvious advantage of the proposed algorithm is the combination of the model-based optimization method and the discriminant learning method. The discriminant denoisers, which are obtained by CNN, are plugged into the model-based optimization method to solve the speckle suppression problem. The algorithm not only can suppress the speckle like the model-based optimization method, but also has the speed advantage of the discriminative learning method. The experimental results show that the algorithm can remove noise effectively and retain the detailed texture in the final images.

3. Image Fusion-Based Guided Filtering

Derived from a local linear model, the guided filter computes the filtering output by considering the content of a guidance image, which can be the input image itself or a different image. The guided filter can be used as an edge-preserving smoothing operator, like the popular bilateral filter, but it behaves better near edges. The guided filter is also a more generic concept beyond smoothing: it can transfer the structures of the guidance image to the filtering output. Moreover, the guided filter naturally has a fast and non-approximate linear time algorithm, regardless of the kernel size and the intensity range. Thus, guided filtering is widely applied in the image processing field [37]. We suppose that the guidance image is $I$, the input image is $p$ (i.e., the image to be filtered), and the output image is $q$. The key assumption of guided filtering is a local linear model between the guidance image and the output image, that is,

$$q_i = a_k I_i + b_k, \quad \forall i \in \omega_k, \tag{11}$$

where $(a_k, b_k)$ are the linear coefficients, $i$ is a pixel index, and $\omega_k$ is the local window centered on the point $k$ in the guidance image $I$. It is a square window whose size is $(2r+1) \times (2r+1)$.

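The edge-preserving filtering problem is thus transformed into an optimization problem: minimize the difference between the output $q$ and the input $p$ subject to the linear relationship in Equation (11). That is, we solve the minimization problem in Equation (12):

$$E(a_k, b_k) = \sum_{i \in \omega_k} \left( (a_k I_i + b_k - p_i)^2 + \epsilon a_k^2 \right), \tag{12}$$

where $\epsilon$ is the normalization factor. We can use linear regression [37] to solve Equation (12):

$$a_k = \frac{\frac{1}{|\omega|} \sum_{i \in \omega_k} I_i p_i - \mu_k \bar{p}_k}{\sigma_k^2 + \epsilon}, \qquad b_k = \bar{p}_k - a_k \mu_k, \tag{13}$$

where $\mu_k$ and $\sigma_k^2$ represent the mean and variance of the guidance image $I$ in the local window $\omega_k$, $|\omega|$ represents the number of pixels in the window, and the mean of $p$ in the window $\omega_k$ is $\bar{p}_k$. Because each pixel is covered by several windows, the value of $q_i$ in Equation (11) would otherwise change with the local window. To avoid this, we apply mean filtering to the coefficients $a_k$ and $b_k$ in the local window after calculating $a_k$ and $b_k$. For simplicity, we adopt $G_{r,\epsilon}(p, I)$ to represent guided filtering, where $r$ is the size of the filtering kernel, and $\epsilon$ is the normalization factor.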
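The guided filter described above can be sketched in a few lines of NumPy. This is a minimal illustration under stated assumptions, not the implementation used in the paper: the function names `box_mean` and `guided_filter` are hypothetical, borders are handled by edge replication (the text does not specify a border rule), and the local means are computed with an integral image so that the cost does not depend on the window radius.

```python
import numpy as np


def box_mean(img, r):
    """Mean over a (2r+1) x (2r+1) window with edge-replicated borders."""
    k = 2 * r + 1
    padded = np.pad(img, r, mode="edge")
    # Integral image with a leading row and column of zeros.
    integral = np.pad(padded, ((1, 0), (1, 0))).cumsum(axis=0).cumsum(axis=1)
    total = (integral[k:, k:] - integral[:-k, k:]
             - integral[k:, :-k] + integral[:-k, :-k])
    return total / (k * k)


def guided_filter(guide, src, r, eps):
    """Filter `src` using `guide` as the guidance image (local linear model)."""
    guide = np.asarray(guide, dtype=np.float64)
    src = np.asarray(src, dtype=np.float64)
    mean_i = box_mean(guide, r)
    mean_p = box_mean(src, r)
    var_i = box_mean(guide * guide, r) - mean_i ** 2
    cov_ip = box_mean(guide * src, r) - mean_i * mean_p
    a = cov_ip / (var_i + eps)      # linear coefficient per window
    b = mean_p - a * mean_i         # offset per window
    # Average the coefficients of all windows that cover each pixel.
    return box_mean(a, r) * guide + box_mean(b, r)


# Usage: a sharp step edge survives, while a noisy flat region is smoothed.
step = np.zeros((20, 20))
step[:, 10:] = 1.0
edge_out = guided_filter(step, step, r=2, eps=1e-4)

rng = np.random.default_rng(0)
flat = 0.5 + 0.05 * rng.standard_normal((64, 64))
flat_out = guided_filter(flat, flat, r=4, eps=0.1)
```

With a small regularization value the step edge is reproduced almost exactly, whereas a larger value relative to the local variance pushes the output toward the local mean, which is the edge-preserving behavior the section describes.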

Figure 3 gives an example to show the guided filtering process when

and

.

An image fusion algorithm with guided filtering is given in Figure 2 [30]. First of all, each source image $I_n$ is decomposed into two scales by mean filtering, namely the base layer $B_n$ and the detail layer $D_n$. Then, we apply Laplacian filtering to obtain the high-pass portion $H_n$ of each source image $I_n$. The saliency map $S_n$ is constructed from the local averages of the absolute values of $H_n$. At each pixel, the source image with the largest saliency is selected to construct the weight map $P_n$. Next, we use the corresponding source image $I_n$ as the guidance image for performing guided filtering on each weight map $P_n$. We get

$$W_n^B = G_{r_1,\epsilon_1}(P_n, I_n), \qquad W_n^D = G_{r_2,\epsilon_2}(P_n, I_n), \tag{14}$$

where $r_1$, $\epsilon_1$, $r_2$, and $\epsilon_2$ are the parameters of the filter, and $W_n^B$ and $W_n^D$ are the final weight maps of the base layer and the detail layer.

Then, the base layers and the detail layers of the different source images are merged by the weighted average:

$$\bar{B} = \sum_{n=1}^{N} W_n^B B_n, \qquad \bar{D} = \sum_{n=1}^{N} W_n^D D_n. \tag{15}$$

Finally, the fused image is acquired by

$$F = \bar{B} + \bar{D}. \tag{16}$$
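The fusion procedure above (two-scale decomposition, saliency, weight maps refined by guided filtering, weighted average) can be sketched as follows. This is an illustrative sketch rather than the reference GFF code: `gff_fuse` and its helpers are hypothetical names, the averaging radii `r_avg` and `r_sal` and the regularization values `eps1` and `eps2` are assumed defaults for images scaled to roughly [0, 1], and the weights are clipped and normalized to sum to one at each pixel, which the text does not spell out. Only the radii 45 and 7 come from this paper.

```python
import numpy as np


def box_mean(img, r):
    """Mean over a (2r+1) x (2r+1) window with edge-replicated borders."""
    k = 2 * r + 1
    padded = np.pad(img, r, mode="edge")
    integral = np.pad(padded, ((1, 0), (1, 0))).cumsum(axis=0).cumsum(axis=1)
    total = (integral[k:, k:] - integral[:-k, k:]
             - integral[k:, :-k] + integral[:-k, :-k])
    return total / (k * k)


def guided_filter(guide, src, r, eps):
    """Guided filtering of `src` with guidance image `guide`."""
    mean_i, mean_p = box_mean(guide, r), box_mean(src, r)
    var_i = box_mean(guide * guide, r) - mean_i ** 2
    a = (box_mean(guide * src, r) - mean_i * mean_p) / (var_i + eps)
    b = mean_p - a * mean_i
    return box_mean(a, r) * guide + box_mean(b, r)


def laplacian(img):
    """4-neighbor Laplacian with edge-replicated borders."""
    p = np.pad(img, 1, mode="edge")
    return p[:-2, 1:-1] + p[2:, 1:-1] + p[1:-1, :-2] + p[1:-1, 2:] - 4.0 * img


def gff_fuse(images, r_avg=15, r_sal=5, r1=45, eps1=0.3, r2=7, eps2=1e-6):
    """Fuse same-sized 2-D images; all defaults except r1, r2 are assumptions."""
    imgs = [np.asarray(im, dtype=np.float64) for im in images]
    base = [box_mean(im, r_avg) for im in imgs]            # base layers
    detail = [im - b for im, b in zip(imgs, base)]         # detail layers
    saliency = np.stack([box_mean(np.abs(laplacian(im)), r_sal) for im in imgs])
    winner = saliency.argmax(axis=0)                       # most salient source
    w_base, w_detail = [], []
    for n, im in enumerate(imgs):
        p = (winner == n).astype(np.float64)               # binary weight map
        w_base.append(guided_filter(im, p, r1, eps1))
        w_detail.append(guided_filter(im, p, r2, eps2))
    # Clip and normalize so the weights sum to one at every pixel.
    w_base = np.clip(np.stack(w_base), 0.0, None) + 1e-12
    w_detail = np.clip(np.stack(w_detail), 0.0, None) + 1e-12
    w_base /= w_base.sum(axis=0)
    w_detail /= w_detail.sum(axis=0)
    fused_base = (w_base * np.stack(base)).sum(axis=0)
    fused_detail = (w_detail * np.stack(detail)).sum(axis=0)
    return fused_base + fused_detail


# Usage: fusing identical inputs should return the input unchanged.
rng = np.random.default_rng(0)
x = rng.random((40, 40))
fused_same = gff_fuse([x, x, x])
fused_mixed = gff_fuse([x, box_mean(x, 2)])
```

The function accepts any number of source images, which matches the way the paper fuses five denoised images rather than two.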

In GFF, the guided filter size $r$ should be decided experimentally. To fuse the base layers, the filter size is $r_1$, and a large $r_1$ is preferred. To fuse the detail layers, the filter size is $r_2$, and the fusion performance degrades when $r_2$ is too large or too small. In this paper, $r_1$ was set to 45 and $r_2$ was set to 7 based on experiments. The flow diagram of GFF is shown in Figure 4.

4. CNN Denoiser Prior and Guided Filtering for SAR Image Denoising

In a SAR image, the relative phase between the scattering points in each resolution cell is closely related to the radar azimuth. Speckle is produced by the coherent superposition of the echoes of many scattering points that are randomly distributed within the same resolution cell of the scene. Goodman [9] showed that fully developed speckle is multiplicative noise, with the following model:

$$Y = X F, \tag{17}$$

where $Y$ denotes the SAR image contaminated by speckle; $X$ indicates the radar scattering characteristic of the ground target (i.e., the clean image); and $F$ denotes the speckle due to fading. The random processes $X$ and $F$ are independent. $F$ follows a Gamma distribution with mean one and variance $1/L$:

$$p(F) = \frac{L^L F^{L-1} e^{-LF}}{\Gamma(L)}, \tag{18}$$

where $F \geq 0$, $L \geq 1$, $\Gamma(\cdot)$ is the Gamma function, and $L$ is the equivalent number of looks (ENL).
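As a quick numerical check of the speckle model, the sketch below draws Gamma-distributed speckle with unit mean and multiplies it onto a clean image. It is only an illustration: the helper name `simulate_speckle`, the image size, the constant reflectivity of 100, and the choice of four looks are assumptions made for the example.

```python
import numpy as np


def simulate_speckle(clean, looks, rng):
    """Return (noisy, speckle) for multiplicative Gamma speckle.

    A Gamma variable with shape `looks` and scale 1/looks has mean 1
    and variance 1/looks, matching the fully developed speckle model.
    """
    speckle = rng.gamma(shape=looks, scale=1.0 / looks, size=clean.shape)
    return clean * speckle, speckle


rng = np.random.default_rng(0)
clean = np.full((256, 256), 100.0)   # hypothetical homogeneous scene
noisy, speckle = simulate_speckle(clean, looks=4, rng=rng)

print("speckle mean:", speckle.mean())       # close to 1
print("speckle variance:", speckle.var())    # close to 1/4
```

Because the noise scales with the underlying reflectivity, bright regions of the noisy image fluctuate more than dark ones, which is why additive-noise denoisers are not applied to the intensity image directly.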

The main purpose of denoising is to eliminate the speckle $F$ and to restore the clean image $X$ from the observed image $Y$. To facilitate the denoising process, a homomorphic (logarithmic) transform is usually applied to Equation (17), so that the multiplicative model is replaced by an additive model, as shown in Equation (19):

$$\log Y = \log X + \log F. \tag{19}$$

The noise term in this model can be assumed to obey a Gaussian distribution [38]. Thus, Equation (19) can be rewritten as $y = x + v$, where $y$ represents the observed image, $v$ is the additive noise, and $x$ is the clean image. Therefore, the SAR image can be denoised by the above denoising algorithm.
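The effect of the logarithmic transform can be verified numerically. The sketch below is a minimal example with hypothetical helper names (`to_log`, `from_log`) and an assumed small floor value that guards against taking the logarithm of zero; it shows that the log of a speckled image equals the log of the clean image plus a signal-independent noise term.

```python
import numpy as np


def to_log(img, floor=1e-6):
    """Logarithmic (homomorphic) transform; `floor` avoids log(0)."""
    return np.log(np.maximum(img, floor))


def from_log(log_img):
    """Inverse of the logarithmic transform."""
    return np.exp(log_img)


rng = np.random.default_rng(1)
clean = rng.uniform(10.0, 200.0, size=(128, 128))      # hypothetical scene
speckle = rng.gamma(shape=4, scale=0.25, size=clean.shape)
observed = clean * speckle

log_noise = to_log(observed) - to_log(clean)           # additive noise term
print("additive noise mean:", log_noise.mean())
print("additive noise variance:", log_noise.var())
```

One design point worth keeping in mind: the log-transformed speckle does not have zero mean (its mean is slightly negative), so a bias correction may be needed after the exponential transform if radiometric accuracy matters. The text above does not discuss this, so it is noted here only as a general property of the transform.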

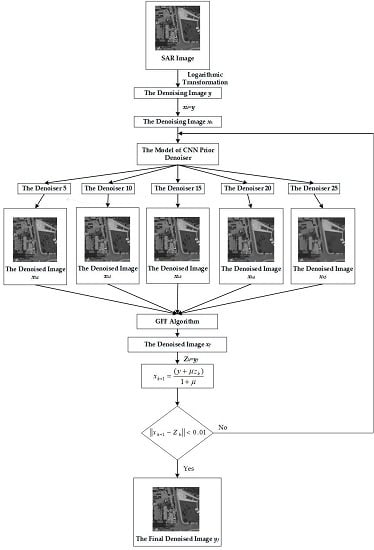

Figure 5 gives the flow chart of SAR image denoising based on CNN denoiser priors and the guided filtering fusion algorithm.

The specific algorithm workflow of this paper is as follows:

Step 1: Equation (19) is used to process the original SAR image by homomorphic filtering and to obtain the denoising image called ;

Step 2: Train the CNN prior denoisers;

Step 3: The initial value of is ;

Step 4: The CNN denoisers with noise levels of 5, 10, 15, 20, and 25 are applied to the image to get five denoised images, one per noise level, by Equation (10);

Step 5: The five denoised images are fused into a single denoised image by the GFF fusion algorithm with Equations (15) and (16). Here, five images are fused instead of two images through the process in Figure 3;

Step 6: Assign the value of to . From Equation (8), we can get ;

Step 7: Let , and repeat Step 4, Step 5, and Step 6 until the norm of and is less than 0.01;

Step 8: The exponential transform is applied to the image to obtain the final denoised image.
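The steps above can be outlined in code as follows. This is a structural sketch only and rests on several assumptions that are not confirmed by the text: the iteration is initialized with the log-transformed observation, the data-fidelity update is the closed-form solution for an identity degradation with a fixed penalty weight `mu`, and the trained CNN denoisers and GFF are replaced by simple stand-ins (box smoothing at several radii and a pixelwise mean) so that the example runs on its own. All function names are hypothetical.

```python
import numpy as np


def box_smooth(img, r):
    """Stand-in denoiser: (2r+1) x (2r+1) mean filter with edge padding."""
    h, w = img.shape
    p = np.pad(img, r, mode="edge")
    acc = np.zeros((h, w), dtype=np.float64)
    for dy in range(2 * r + 1):
        for dx in range(2 * r + 1):
            acc += p[dy:dy + h, dx:dx + w]
    return acc / (2 * r + 1) ** 2


def despeckle(sar, denoisers, fuse, mu=1.0, tol=0.01, max_iter=30):
    """Plug-and-play despeckling loop following Steps 1 to 8 in outline."""
    y = np.log(np.maximum(sar, 1e-6))        # Step 1: logarithmic transform
    x = y.copy()                             # Step 3: assumed initial value
    for _ in range(max_iter):
        candidates = [d(x) for d in denoisers]    # Step 4: one image per denoiser
        z = fuse(candidates)                      # Step 5: fuse the candidates
        x_new = (y + mu * z) / (1.0 + mu)         # Step 6: assumed data update
        converged = np.linalg.norm(x_new - x) < tol   # Step 7: stopping rule
        x = x_new
        if converged:
            break
    return np.exp(x)                         # Step 8: exponential transform


# Stand-ins for the five CNN denoisers and for GFF (assumptions for the demo).
stand_in_denoisers = [lambda im, r=r: box_smooth(im, r) for r in (1, 2, 3, 4, 5)]
stand_in_fuse = lambda images: np.mean(images, axis=0)

rng = np.random.default_rng(0)
sar = 100.0 * rng.gamma(shape=4, scale=0.25, size=(64, 64))
result = despeckle(sar, stand_in_denoisers, stand_in_fuse)
```

In a real pipeline, `stand_in_denoisers` would be replaced by the five trained CNN denoisers and `stand_in_fuse` by a guided-filtering fusion routine; the loop structure stays the same.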

5. Experimental Results

The training sets and training process of the CNN denoisers used in this paper are the same as those described in the literature [12]. The denoiser model training platform was Matlab R2014b (MathWorks, Natick, MA, USA), the CNN toolbox was MatConvnet (MatConvnet-1.0-beta24), and the GPU platform was Nvidia Titan X Quadro K6000 (NVIDIA Corporation, Santa Clara, CA, USA). The parameters of the GFF algorithm used in our algorithm were the same as those described in the literature [30].

In order to verify the reliability and effectiveness of the proposed algorithm, the proposed algorithm was tested on a simulated SAR image. The specific steps of the experiment were as follows:

The first step was to convert the clean SAR image into the logarithmic domain to obtain the logarithmic SAR image by using the logarithmic function.

In the second step, a random matrix of the same size as the logarithmic SAR image is generated for each noise variance; the noise variances used in this paper are 0.04, 0.05, and 0.06. This random matrix, which is Gaussian noise, is then added to the logarithmic SAR image to obtain the simulated noisy SAR images.

In the third step, with the simulated noise SAR image as input, the proposed algorithm was used to obtain the denoised image.

In the fourth step, the denoised image was exponentially transformed to obtain the final denoised image.
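The simulation protocol in the four steps above can be sketched as below. The helper name `simulate_log_noise`, the constant test image, and the random seed are assumptions for illustration; the denoising step is omitted and marked with a comment, since it would call the proposed algorithm.

```python
import numpy as np


def simulate_log_noise(clean, variance, rng):
    """Add zero-mean Gaussian noise of the given variance in the log domain."""
    log_clean = np.log(np.maximum(clean, 1e-6))               # first step
    noise = rng.normal(0.0, np.sqrt(variance), clean.shape)   # second step
    return log_clean + noise


rng = np.random.default_rng(0)
clean = np.full((128, 128), 50.0)     # hypothetical clean SAR image
noisy_logs = {}
for variance in (0.04, 0.05, 0.06):
    noisy_logs[variance] = simulate_log_noise(clean, variance, rng)

# Third step: run the denoising algorithm on each noisy log image (omitted).
# Fourth step: map a log-domain result back to the intensity domain.
restored = np.exp(noisy_logs[0.05])
```

Note that the variance is specified in the logarithmic domain, so after the exponential transform the noise becomes multiplicative again, which is what makes the simulated images resemble speckled SAR data.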

Figure 6a,b show the original image and the noisy image, respectively. The five images in (c), (d), (e), (f), and (g) are the denoised images produced by the denoisers whose noise levels were 5, 10, 15, 20, and 25, and (h) is the final denoised image produced by the proposed method.

Figure 6c indicates that, when the selected denoiser level is smaller than the ground truth noise level, the denoised image still has a lot of noise, while (f) and (g) illustrate that, when the selected denoiser level is larger than the ground truth noise level, the denoised image appears over-smoothed. Thus, we fused all the denoised images using the GFF algorithm in order to obtain better denoised results, as shown in Figure 6h. The denoised image obtained by the proposed algorithm had less noise while retaining the detailed texture, giving a promising visual effect.

Figure 7 shows a comparison between the proposed algorithm and other denoising algorithms. For all algorithms, Gaussian noise with a variance of 0.05 had been added to the images in the figure. The denoising algorithms used were the Lee filter [1]; the sparse representation-based Bayesian threshold shrinkage denoising algorithm in the Shearlet domain (BSS-SR), as described in [39]; the local linear minimum-mean-square-error (LLMMSE) wavelet shrinkage-based nonlocal denoising algorithm for SAR images (SAR-BM3D), as described in [40]; SAR image denoising based on continuous cycle spinning via sparse representation in the Shearlet domain (CS-BSR), as described in [41]; probabilistic patch-based weights iteration weighted maximum likelihood denoising (PPB), as described in [42]; the use of texture strength and weighted nuclear norm minimization for SAR image denoising (BWNNM), as described in [43]; deep CNN based on residual learning for image denoising (DnCNN), as described in [44]; and the proposed algorithm.

By observing the experimental results, we found that Figure 7a still retains much noise after Lee filtering, and the edges of the denoised images shown in Figure 7b,d are somewhat blurred after the BSS-SR and CS-BSR methods. Although the SAR-BM3D and the PPB methods effectively suppress the speckle, they lose a lot of detail and appear over-smoothed, as shown in Figure 7c,e. The BWNNM method and the DnCNN algorithm have a good noise suppression effect and better preserve the edges, but there is still some residual noise, as shown in Figure 7f,g. The proposed algorithm, as shown in Figure 7h, achieves a better visual effect than BWNNM and SAR-BM3D, and the noise is more effectively suppressed than with BSS-SR and CS-BSR. These experimental results demonstrate the advantages of the denoising algorithm based on convolutional neural networks and guided filtering.

To further validate the advantages of the algorithm in this paper, we used five objective evaluation indexes to evaluate the above denoising algorithms: the peak signal to noise ratio (PSNR) [45], the equivalent number of looks (ENL) [45], the edge preservation index (EPI) [14], the structural similarity index measurement (SSIM), and an unassisted measure of the quality of the first-order and second-order descriptors of the denoised image ratio (UM) [14]. The higher the PSNR value is, the stronger the denoising ability of the algorithm is. A larger ENL value indicates a better visual effect. The EPI value reflects the ability to retain boundaries, and a larger value is better. The SSIM indicates the similarity of the image structure after denoising, and a larger value is better. The UM does not depend on the source image to assess the denoised image; the smaller the value, the stronger the speckle suppression ability. The evaluation parameter values of the denoised images are given in Table 1.
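Two of these indexes have widely used closed forms and can be sketched directly. The example below assumes the usual definitions (PSNR from the mean squared error with a chosen peak value, and ENL as the squared mean divided by the variance over a homogeneous region); the exact variants used in [45] may differ, and the function names and the peak value of 255 are assumptions. EPI, SSIM, and UM are omitted because their definitions vary between references.

```python
import numpy as np


def psnr(reference, estimate, peak=255.0):
    """Peak signal to noise ratio in dB (higher is better)."""
    mse = np.mean((np.asarray(reference, dtype=np.float64)
                   - np.asarray(estimate, dtype=np.float64)) ** 2)
    if mse == 0:
        return float("inf")
    return 10.0 * np.log10(peak ** 2 / mse)


def enl(region):
    """Equivalent number of looks over a homogeneous region."""
    region = np.asarray(region, dtype=np.float64)
    return region.mean() ** 2 / region.var()


rng = np.random.default_rng(0)
reference = rng.uniform(0.0, 255.0, size=(64, 64))
print("PSNR for a unit offset:", psnr(reference, reference + 1.0))

# For simulated 4-look speckle on a flat region, the ENL is close to 4.
flat_region = 100.0 * rng.gamma(shape=4, scale=0.25, size=(256, 256))
print("ENL of 4-look speckle:", enl(flat_region))
```

ENL is only meaningful on a region that is homogeneous in the clean scene; on textured regions a low ENL reflects scene content rather than residual speckle.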

Table 1 shows that the PSNR values of all the algorithms improved compared to that of the original image when noise with a variance of 0.04 was added to the images. All algorithms can effectively suppress speckle, but our method had the highest PSNR value. The ENL value of the proposed algorithm improved compared with that of DnCNN, which indicates the effectiveness of the fusion algorithm based on guided filtering. Because of the complex and inherent denoising structure of the CNN, it had a worse ENL value than the other algorithms. The results of our method in terms of retaining image edge information and texture detail are satisfying. The ENL value was higher than most of the other algorithms by about 0.1 to 0.2, and it was higher than the Lee filter by about 0.4. At the same time, the SSIM results show that the proposed method maintains the integrity of the image structure and has minimum structural distortion.

Meanwhile, our method also achieved the best results on the three evaluation indexes of PSNR, EPI and SSIM when noise with a variance of 0.05 was added to the images. Table 1 shows that the SSIM of BSS-SR, SAR-BM3D, and other algorithms decreased slightly, which means that these algorithms cannot retain the detail and reduce the distortion simultaneously, but our method, as well as the DnCNN and Lee filter, showed satisfactory results. Moreover, the ENL value of our method remained stable and was better than that of DnCNN. When we added noise with a variance of 0.06 to the image, the experimental results were consistent with those analyzed above.

Generally speaking, whatever the noise level is, our proposed algorithm can preserve the structural information of the image, suppress the noise effectively, and retain the edge details to some extent.

Moreover, our proposed algorithm was tested on real SAR images. The test images were TerraSar-X SAR images, which can be downloaded from the website of Federico II University in Naples, Italy. They are shown in Figure 8.

Figure 8a shows an SAR image of trees, Figure 8b shows an SAR image of a city area, and Figure 8c shows an SAR image of a lake. They were denoised by the above denoising algorithms.

Figure 9 shows the denoised images of Figure 8a. In addition, the red boxes in Figure 9, Figure 10 and Figure 11 mark out the region used for the objective evaluation parameter UM. The specific values are given in the objective evaluation index section. From Figure 9, we can see that the Lee filter performs worst. BSS-SR and CS-BSR blurred some edge texture, while SAR-BM3D and PPB introduced a little artificial texture. BWNNM and DnCNN produced over-smoothing. Our algorithm not only preserved the texture and edge information well, but it also suppressed the generation of artificial texture.

Figure 10 shows the denoised images of the city area in Figure 8b obtained with the different denoising algorithms, and Figure 11 shows the denoised images of the lake in Figure 8c obtained with the different denoising algorithms.

As shown in Figure 10 and Figure 11, the performances of the eight denoising algorithms presented in this paper are similar to the results shown for Figure 8a. The denoising effect of our proposed algorithm is the most promising. However, we have neither the clean image nor an expert interpreter, so it is difficult to determine whether such artifacts mean any loss of detail. Some help comes from the analysis of ratio images, as mentioned in [40], obtained as the pointwise ratio between the original SAR image and the denoised SAR image. Given a perfect denoising, the ratio image should only contain speckle. On the contrary, the existence of structures or details related to the original image shows that the algorithm has removed not only noise but also some useful information. In order to highlight the better visual effect of our method, we give the ratio images in Figure 12, Figure 13 and Figure 14.
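The ratio image described above is straightforward to compute. The following sketch uses a hypothetical helper `ratio_image` with an assumed small floor to avoid division by zero, and a simulated scene in which the "denoised" image is the true clean image, so that the ratio should contain pure speckle with a mean close to one.

```python
import numpy as np


def ratio_image(original, denoised, floor=1e-6):
    """Pointwise ratio between the original and the denoised image."""
    original = np.asarray(original, dtype=np.float64)
    denoised = np.asarray(denoised, dtype=np.float64)
    return original / np.maximum(denoised, floor)


rng = np.random.default_rng(0)
clean = rng.uniform(20.0, 200.0, size=(128, 128))      # hypothetical scene
speckle = rng.gamma(shape=4, scale=0.25, size=clean.shape)
original = clean * speckle

ideal_ratio = ratio_image(original, clean)              # perfect denoising
print("ratio mean:", ideal_ratio.mean())                # close to 1
```

In practice one inspects the ratio image visually: visible edges or structures in it indicate that the filter has removed image content along with the speckle.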

From Figure 12, we can see that the ratio image of our algorithm is closer to pure speckle. Figure 13 gives the ratio images from Figure 10.

From the ratio images in Figure 12, Figure 13 and Figure 14, we can see that the ratio images of our method have no obvious pattern and contain the least signal information. From this point of view, our method attains a better visual effect.

To show the superiority of our algorithm, we used various common objective evaluation parameters for the denoising algorithms, including UM, ENL, EPI and SSIM. Table 2, Table 3 and Table 4 give the objective evaluation results of the above denoised images.

Table 2 presents the evaluation indexes of the tree SAR image denoised by the eight algorithms. First of all, the UM value of our proposed algorithm was 25.4, the smallest and therefore the best of the eight algorithms. This shows that the proposed algorithm has an excellent comprehensive performance in terms of noise suppression. The ENL value of our method was not ideal, but it was still larger than that of DnCNN. The reason for this is not only the complex and inherent denoising structure of the CNN; it is also related to the texture, light, and shade of SAR images. Finally, the EPI and SSIM values of our method were the largest, which shows that our proposed method has the strongest ability to preserve edges and that the integrity of the image structure was also the best.

As shown in Table 3 and Table 4, the performances of all the algorithms basically showed a similar trend to those in Table 2. Compared with other methods, we found that our method significantly improved UM, EPI, and SSIM. In summary, our algorithm possesses the best denoising ability, the strongest edge and detail preservation ability, and the most promising visual effects.

In general, the abilities to preserve detailed information and to smooth the image are contradictory in our method. Although our method is better than the Lee filter in terms of ENL, it is not as good as PPB or SAR-BM3D because the selection of the CNN model and the fusion algorithm is only empirical. With more suitable models and fusion methods, the performance of our method could be improved further.