Tracking and Simulating Pedestrian Movements at Intersections Using Unmanned Aerial Vehicles

Abstract

1. Introduction

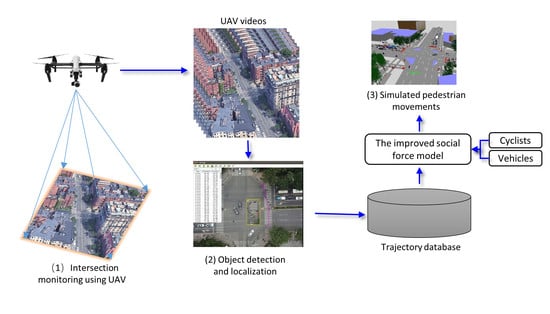

2. Study Area and Methodology

2.1. Pedestrian, Cyclist, and Vehicle Detection Using a UAV

2.2. Pedestrian Movement Modeling
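
The force terms named in Sections 2.2.1 to 2.2.5 build on the classical social force model of Helbing and Molnar. In that model, a pedestrian's acceleration is the sum of two kinds of term. A self-driving term relaxes the current velocity toward a desired velocity. Repulsive terms push the pedestrian away from other road users and from boundaries. The minimal Python sketch below shows only that classical structure. It is not the improved model evaluated in this study. Several choices are illustrative assumptions: the exponential repulsion form, the straight horizontal boundaries, reusing one repulsion function for pedestrians, cyclists, and vehicles, and all parameter values (`v0`, `tau`, `A`, `B`). The names `Agent`, `repulsion`, `boundary_force`, and `step` are hypothetical.

```python
import math
from dataclasses import dataclass
from typing import List, Tuple

Vec = Tuple[float, float]


def add(a: Vec, b: Vec) -> Vec:
    return (a[0] + b[0], a[1] + b[1])


def scale(a: Vec, k: float) -> Vec:
    return (a[0] * k, a[1] * k)


@dataclass
class Agent:
    pos: Vec      # position in metres
    vel: Vec      # velocity in m/s
    radius: float  # body radius in metres


def driving_force(agent: Agent, goal: Vec, desired_speed: float, tau: float) -> Vec:
    """Relax the velocity toward desired_speed * (unit vector to goal) over time tau."""
    dx, dy = goal[0] - agent.pos[0], goal[1] - agent.pos[1]
    dist = math.hypot(dx, dy)
    e = (dx / dist, dy / dist) if dist > 0 else (0.0, 0.0)
    return scale(add(scale(e, desired_speed), scale(agent.vel, -1.0)), 1.0 / tau)


def repulsion(agent: Agent, other: Agent, strength: float, range_: float) -> Vec:
    """Assumed form A * exp((r_ij - d_ij) / B), directed from `other` toward `agent`."""
    dx, dy = agent.pos[0] - other.pos[0], agent.pos[1] - other.pos[1]
    d = math.hypot(dx, dy)
    if d == 0:
        return (0.0, 0.0)
    magnitude = strength * math.exp((agent.radius + other.radius - d) / range_)
    return (magnitude * dx / d, magnitude * dy / d)


def boundary_force(agent: Agent, wall_y: float, strength: float, range_: float) -> Vec:
    """Repulsion from a hypothetical straight boundary (e.g. a crosswalk edge) at y = wall_y."""
    d = agent.pos[1] - wall_y
    if d == 0:
        return (0.0, 0.0)
    direction = 1.0 if d > 0 else -1.0
    magnitude = strength * math.exp((agent.radius - abs(d)) / range_)
    return (0.0, direction * magnitude)


def step(agent: Agent, others: List[Agent], goal: Vec, walls: List[float], dt: float,
         v0: float = 1.3, tau: float = 0.5, A: float = 2.0, B: float = 0.3) -> Agent:
    """One explicit Euler step. Forces are per unit mass, so they act as accelerations.
    Default parameters are illustrative, not calibrated values from the study."""
    acc = driving_force(agent, goal, v0, tau)
    for other in others:  # pedestrians, cyclists, and vehicles share one assumed form
        acc = add(acc, repulsion(agent, other, A, B))
    for wall_y in walls:
        acc = add(acc, boundary_force(agent, wall_y, A, B))
    vel = add(agent.vel, scale(acc, dt))
    pos = add(agent.pos, scale(vel, dt))
    return Agent(pos, vel, agent.radius)


# Usage: a pedestrian walking toward (10, 0) while a cyclist approaches head-on.
ped = Agent(pos=(0.0, 0.0), vel=(0.0, 0.0), radius=0.3)
cyclist = Agent(pos=(2.0, 0.2), vel=(-4.0, 0.0), radius=0.5)
for _ in range(10):
    ped = step(ped, [cyclist], goal=(10.0, 0.0), walls=[-2.0, 2.0], dt=0.1)
print(ped.pos)
```

Calibrating the model would then mean fitting parameters such as `A`, `B`, and `tau` to observed trajectories. The separate force terms can each carry their own parameters.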

2.2.1. Self-Driving Force

2.2.2. Boundary Force

2.2.3. Repulsive Force Exerted by Other Pedestrians

2.2.4. Repulsive Force Exerted by Cyclists on Pedestrians

2.2.5. Vehicle Force

2.3. Simulation of Pedestrian Movements at Complex Traffic Intersections

2.4. Calibration of the Pedestrian-Cyclist Conflict Model

3. Experiment and Result Analysis

3.1. Experimental Configuration

3.2. Pedestrian and Cyclist Detection and Localization

3.3. Performance of the Improved Social Force Model

4. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Type | TP | FP | FN | Correctness | Completeness | Quality |
|---|---|---|---|---|---|---|
| Pedestrian | 132 | 5 | 3 | 0.964 | 0.978 | 0.943 |
| Cyclist | 29 | 2 | 2 | 0.935 | 0.935 | 0.879 |
| Vehicle | 37 | 0 | 0 | 1.000 | 1.000 | 1.000 |
| Overall | 198 | 7 | 5 | 0.966 | 0.975 | 0.943 |
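
The Correctness, Completeness, and Quality columns match the ratios commonly used to evaluate object extraction in remote sensing:

- correctness = TP / (TP + FP)
- completeness = TP / (TP + FN)
- quality = TP / (TP + FP + FN)

Assuming those definitions, which reproduce every value in the table, the short Python sketch below recomputes each row. It builds the Overall row by pooling the per-class counts before taking the ratios. The class name `DetectionCounts` is illustrative.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DetectionCounts:
    """True positives, false positives, and false negatives for one class."""
    tp: int
    fp: int
    fn: int

    def correctness(self) -> float:
        # Share of detections that are real objects: TP / (TP + FP).
        return self.tp / (self.tp + self.fp)

    def completeness(self) -> float:
        # Share of real objects that were detected: TP / (TP + FN).
        return self.tp / (self.tp + self.fn)

    def quality(self) -> float:
        # Penalizes both error types at once: TP / (TP + FP + FN).
        return self.tp / (self.tp + self.fp + self.fn)


# Counts copied from the detection table.
counts = {
    "Pedestrian": DetectionCounts(132, 5, 3),
    "Cyclist": DetectionCounts(29, 2, 2),
    "Vehicle": DetectionCounts(37, 0, 0),
}
# The Overall row sums the per-class counts, then applies the same ratios.
counts["Overall"] = DetectionCounts(
    tp=sum(c.tp for c in counts.values()),
    fp=sum(c.fp for c in counts.values()),
    fn=sum(c.fn for c in counts.values()),
)

for name, c in counts.items():
    print(f"{name:<10} {c.correctness():.3f} {c.completeness():.3f} {c.quality():.3f}")
```

Pooling counts (micro-averaging) weights each class by its number of objects. This explains why the Overall row sits close to the Pedestrian row.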

| Metric | Classical SFM | Improved SFM |
|---|---|---|
| Positioning accuracy (m) | 0.33 | 0.25 |
| MAPE (mean absolute percentage error) | 12.43% | 9.04% |
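
Lower values are better for both rows of the comparison. The Python sketch below shows how such metrics are typically computed. It rests on three assumptions:

- Positioning accuracy is the mean Euclidean distance between paired simulated and UAV-observed positions.
- MAPE is the mean of |observed − simulated| / |observed|, expressed as a percentage.
- The quantity compared by MAPE is itself an assumption.

The function names and all input values are hypothetical, not data from the study.

```python
import math
from typing import Sequence, Tuple

Point = Tuple[float, float]


def mape(observed: Sequence[float], simulated: Sequence[float]) -> float:
    """Mean absolute percentage error in percent; observed values must be non-zero."""
    if len(observed) != len(simulated) or not observed:
        raise ValueError("need two non-empty sequences of equal length")
    total = sum(abs((o - s) / o) for o, s in zip(observed, simulated))
    return 100.0 * total / len(observed)


def mean_position_error(observed: Sequence[Point], simulated: Sequence[Point]) -> float:
    """Mean Euclidean distance (metres) between paired observed and simulated positions."""
    if len(observed) != len(simulated) or not observed:
        raise ValueError("need two non-empty sequences of equal length")
    return sum(math.dist(o, s) for o, s in zip(observed, simulated)) / len(observed)


# Hypothetical values for illustration only; not data from the study.
observed_values = [10.0, 12.0, 8.0]
simulated_values = [11.0, 12.0, 7.0]
print(f"MAPE: {mape(observed_values, simulated_values):.2f}%")  # prints "MAPE: 7.50%"

observed_track = [(0.0, 0.0), (1.0, 0.0), (2.0, 0.0)]
simulated_track = [(0.0, 0.3), (1.0, 0.4), (2.0, 0.0)]
print(f"Mean position error: {mean_position_error(observed_track, simulated_track):.2f} m")
```

With the table's figures, the improved model lowers MAPE by 3.39 percentage points (12.43% to 9.04%) and the positioning figure by 0.08 m (0.33 m to 0.25 m).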

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Zhu, J.; Chen, S.; Tu, W.; Sun, K. Tracking and Simulating Pedestrian Movements at Intersections Using Unmanned Aerial Vehicles. Remote Sens. 2019, 11, 925. https://doi.org/10.3390/rs11080925

Zhu J, Chen S, Tu W, Sun K. Tracking and Simulating Pedestrian Movements at Intersections Using Unmanned Aerial Vehicles. Remote Sensing. 2019; 11(8):925. https://doi.org/10.3390/rs11080925

Chicago/Turabian Style: Zhu, Jiasong, Siyuan Chen, Wei Tu, and Ke Sun. 2019. "Tracking and Simulating Pedestrian Movements at Intersections Using Unmanned Aerial Vehicles" Remote Sensing 11, no. 8: 925. https://doi.org/10.3390/rs11080925

APA Style: Zhu, J., Chen, S., Tu, W., & Sun, K. (2019). Tracking and Simulating Pedestrian Movements at Intersections Using Unmanned Aerial Vehicles. Remote Sensing, 11(8), 925. https://doi.org/10.3390/rs11080925