A Novel Active Contours Model for Environmental Change Detection from Multitemporal Synthetic Aperture Radar Images

Abstract

1. Introduction

2. Materials and Methods

2.1. New Difference Image Operator

2.2. DFLAC Model

2.3. Minimization of the Energy Function

2.4. Training Data Sampling

2.5. Evaluation Indices

3. Implementation Results

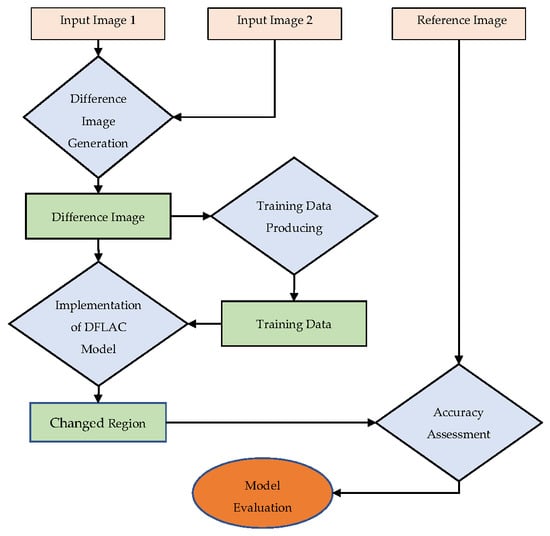

3.1. Algorithm’s Workflow

- In the first step, difference image generation, the two SAR images of the data set (before and after the change) were input to the model, and the difference image was produced using one of Equations (1) to (4). Second, in the training data sampling step, a threshold T was estimated using Otsu’s method, and the training data of the changed and unchanged classes were selected based on this threshold. In the third step, the DFLAC model was implemented. The model starts by implicitly defining an initial curve as a level set function; the initial curve is a simple shape, such as a square or a circle. The curve was then evolved over time using Equation (17), and the model parameters were updated according to Equations (22), (24), and (25). These two steps were repeated until the curve reached stability and no longer changed between iterations. Finally, the output of the model was generated by separating the changed regions (pixels inside the curve) from the unchanged areas (pixels outside the curve).

- The accuracy assessment was the last step of the workflow. In this step, an error image was computed by subtracting the output image from the reference image.

- The accuracy of the model was then evaluated from the error map using three accuracy criteria, the percentage correct classification (PCC), the overall error (OE), and the Kappa coefficient, computed with Equations (26)–(28).
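
The workflow above can be sketched in Python with NumPy. This is a minimal sketch under stated assumptions, not the DFLAC implementation: Equations (17), (22), (24), and (25) are not reproduced in this section, so the evolution step below swaps in a generic Chan-Vese-style region term (Chan and Vese, "Active contours without edges", in the reference list) whose two region constants are held fixed at the training-sample means instead of being updated. The sampling rule (`sample_training`, which draws up to `n` pixels on each side of T), the square initial curve, the rescaling to [0, 1], and all parameter values (`mu`, `dt`, `eps`, `tol`, `max_iter`) are hypothetical choices for illustration. The difference image is assumed to be already computed.

```python
import numpy as np


def otsu_threshold(di, bins=256):
    """Otsu's method: choose the split that maximises between-class variance."""
    hist, edges = np.histogram(di, bins=bins)
    p = hist / hist.sum()
    centers = (edges[:-1] + edges[1:]) / 2
    omega = np.cumsum(p)            # probability of the lower (unchanged) class
    mu = np.cumsum(p * centers)     # cumulative first moment of the lower class
    with np.errstate(divide="ignore", invalid="ignore"):
        sigma_b = (mu[-1] * omega - mu) ** 2 / (omega * (1.0 - omega))
    sigma_b = np.nan_to_num(sigma_b, nan=0.0, posinf=0.0, neginf=0.0)
    k = int(np.argmax(sigma_b))
    return edges[k + 1]             # values >= T fall in the upper (changed) class


def sample_training(di, T, n=500, seed=0):
    """Hypothetical rule: draw up to n pixels on each side of the threshold T."""
    rng = np.random.default_rng(seed)
    samples = []
    for values in (di[di >= T], di[di < T]):
        samples.append(rng.choice(values, size=min(n, values.size), replace=False))
    return samples[0], samples[1]   # (changed, unchanged)


def evolve_level_set(di, c_changed, c_unchanged, mu=0.2, dt=0.5, eps=1.0,
                     tol=1e-2, max_iter=500):
    """Chan-Vese-style two-phase evolution; phi > 0 marks the inside (changed)."""
    h, w = di.shape
    yy, xx = np.mgrid[:h, :w]
    half = min(h, w) // 4
    # Initial curve: a centred square, stored as a binary level set (+1 inside).
    phi = np.where((np.abs(yy - h // 2) < half) & (np.abs(xx - w // 2) < half),
                   1.0, -1.0)
    for it in range(1, max_iter + 1):
        gy, gx = np.gradient(phi)
        norm = np.sqrt(gx ** 2 + gy ** 2) + 1e-8
        kappa = np.gradient(gx / norm, axis=1) + np.gradient(gy / norm, axis=0)
        delta = eps / (np.pi * (eps ** 2 + phi ** 2))     # smoothed Dirac delta
        # Positive where a pixel is closer to the changed-class constant.
        data = (di - c_unchanged) ** 2 - (di - c_changed) ** 2
        step = dt * delta * (mu * kappa + data)
        phi += step
        if np.max(np.abs(step)) < tol:  # the curve has effectively stopped moving
            break
    return phi > 0, it


def detect_changes(di, n_samples=500, seed=0):
    """Workflow sketch: threshold, sample, evolve, then read off the change map."""
    di = (di - di.min()) / (di.max() - di.min() + 1e-12)  # rescale to [0, 1]
    T = otsu_threshold(di)
    changed, unchanged = sample_training(di, T, n_samples, seed)
    mask, n_iter = evolve_level_set(di, changed.mean(), unchanged.mean())
    return mask, T, n_iter
```

Holding the region constants at the training-sample means, rather than re-estimating them from the current inside and outside regions at every iteration as the classic Chan-Vese model does, ties the inside phase to the changed class; with re-estimated constants the two phases can end up swapped depending on the initial curve.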

3.2. SAR Datasets

3.3. Difference Image

3.4. Model Implementation

3.5. Accuracy of the Proposed Model

4. Discussion

4.1. Accuracy Assessment

4.1.1. The Constant Parameters

4.1.2. The Difference Image Operator Type

4.1.3. Number of Training Data

4.2. Running Time Comparison

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

- Giustarini, L.; Hostache, R.; Matgen, P.; Schumann, G.J.-P.; Bates, P.D.; Mason, D.C. A change detection approach to flood mapping in urban areas using TerraSAR-X. IEEE Trans. Geosci. Remote Sens. 2012, 51, 2417–2430.

- Tzeng, Y.-C.; Chiu, S.-H.; Chen, D.; Chen, K.-S. Change detections from SAR images for damage estimation based on a spatial chaotic model. In Proceedings of the 2007 IEEE International Geoscience and Remote Sensing Symposium, Barcelona, Spain, 23–28 July 2007; pp. 1926–1930.

- Li, N.; Wang, R.; Deng, Y.; Chen, J.; Liu, Y.; Du, K.; Lu, P.; Zhang, Z.; Zhao, F. Waterline mapping and change detection of Tangjiashan Dammed Lake after Wenchuan Earthquake from multitemporal high-resolution airborne SAR imagery. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2014, 7, 3200–3209.

- Jin, S.; Yang, L.; Danielson, P.; Homer, C.; Fry, J.; Xian, G. A comprehensive change detection method for updating the National Land Cover Database to circa 2011. Remote Sens. Environ. 2013, 132, 159–175.

- Quegan, S.; Le Toan, T.; Yu, J.J.; Ribbes, F.; Floury, N. Multitemporal ERS SAR analysis applied to forest mapping. IEEE Trans. Geosci. Remote Sens. 2000, 38, 741–753.

- Nonaka, T.; Shibayama, T.; Umakawa, H.; Uratsuka, S. A comparison of the methods for the urban land cover change detection by high-resolution SAR data. In Proceedings of the 2007 IEEE International Geoscience and Remote Sensing Symposium, Barcelona, Spain, 23–28 July 2007; pp. 3470–3473.

- Ban, Y.; Yousif, O.A. Multitemporal spaceborne SAR data for urban change detection in China. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2012, 5, 1087–1094.

- Gong, M.; Zhang, P.; Su, L.; Liu, J. Coupled dictionary learning for change detection from multisource data. IEEE Trans. Geosci. Remote Sens. 2016, 54, 7077–7091.

- Samaniego, L.; Bárdossy, A.; Schulz, K. Supervised classification of remotely sensed imagery using a modified k-NN technique. IEEE Trans. Geosci. Remote Sens. 2008, 46, 2112–2125.

- Frate, F.D.; Schiavon, G.; Solimini, C. Application of neural networks algorithms to QuickBird imagery for classification and change detection of urban areas. In Proceedings of the IGARSS 2004, 2004 IEEE International Geoscience and Remote Sensing Symposium, Anchorage, AK, USA, 20–24 September 2004; Volume 2, pp. 1091–1094.

- Fernàndez-Prieto, D.; Marconcini, M. A novel partially supervised approach to targeted change detection. IEEE Trans. Geosci. Remote Sens. 2011, 49, 5016–5038.

- Roy, M.; Ghosh, S.; Ghosh, A. A novel approach for change detection of remotely sensed images using semi-supervised multiple classifier system. Inf. Sci. 2014, 269, 35–47.

- Yuan, Y.; Lv, H.; Lu, X. Semi-supervised change detection method for multi-temporal hyperspectral images. Neurocomputing 2015, 148, 363–375.

- Zheng, Y.; Jiao, L.; Liu, H.; Zhang, X.; Hou, B.; Wang, S. Unsupervised saliency-guided SAR image change detection. Pattern Recognit. 2017, 61, 309–326.

- Bazi, Y.; Bruzzone, L.; Melgani, F. An unsupervised approach based on the generalized Gaussian model to automatic change detection in multitemporal SAR images. IEEE Trans. Geosci. Remote Sens. 2005, 43, 874–887.

- Bazi, Y.; Bruzzone, L.; Melgani, F. Automatic identification of the number and values of decision thresholds in the log-ratio image for change detection in SAR images. IEEE Geosci. Remote Sens. Lett. 2006, 3, 349–353.

- Gong, M.; Cao, Y.; Wu, Q. A neighborhood-based ratio approach for change detection in SAR images. IEEE Geosci. Remote Sens. Lett. 2011, 9, 307–311.

- Sumaiya, M.N.; Kumari, R.S.S. Logarithmic mean-based thresholding for SAR image change detection. IEEE Geosci. Remote Sens. Lett. 2016, 13, 1726–1728.

- Sumaiya, M.N.; Kumari, R.S.S. Gabor filter based change detection in SAR images by KI thresholding. Opt. Int. J. Light Electron Opt. 2017, 130, 114–122.

- Bazi, Y.; Melgani, F.; Bruzzone, L.; Vernazza, G. A genetic expectation-maximization method for unsupervised change detection in multitemporal SAR imagery. Int. J. Remote Sens. 2009, 30, 6591–6610.

- Belghith, A.; Collet, C.; Armspach, J.P. Change detection based on a support vector data description that treats dependency. Pattern Recognit. Lett. 2013, 34, 275–282.

- Shang, R.; Qi, L.; Jiao, L.; Stolkin, R.; Li, Y. Change detection in SAR images by artificial immune multi-objective clustering. Eng. Appl. Artif. Intell. 2014, 31, 53–67.

- Zheng, Y.; Zhang, X.; Hou, B.; Liu, G. Using combined difference image and k-means clustering for SAR image change detection. IEEE Geosci. Remote Sens. Lett. 2014, 11, 691–695.

- Li, H.; Gong, M.; Wang, Q.; Liu, J.; Su, L. A multi-objective fuzzy clustering method for change detection in SAR images. Appl. Soft Comput. 2016, 46, 767–777.

- Tian, D.; Gong, M. A novel edge-weight based fuzzy clustering method for change detection in SAR images. Inf. Sci. 2018, 467, 415–430.

- Gong, M.; Yang, H.; Zhang, P. Feature learning and change feature classification based on deep learning for ternary change detection in SAR images. ISPRS J. Photogramm. Remote Sens. 2017, 129, 212–225.

- Li, M.; Li, M.; Zhang, P.; Wu, Y.; Song, W.; An, L. SAR Image Change Detection Using PCANet Guided by Saliency Detection. IEEE Geosci. Remote Sens. Lett. 2019, 16, 402–406.

- Chen, H.; Jiao, L.; Liang, M.; Liu, F.; Yang, S.; Hou, B. Fast unsupervised deep fusion network for change detection of multitemporal SAR images. Neurocomputing 2019, 332, 56–70.

- Carincotte, C.; Derrode, S.; Bourennane, S. Unsupervised change detection on SAR images using fuzzy hidden Markov chains. IEEE Trans. Geosci. Remote Sens. 2006, 44, 432–441.

- Ma, J.; Gong, M.; Zhou, Z. Wavelet fusion on ratio images for change detection in SAR images. IEEE Geosci. Remote Sens. Lett. 2012, 9, 1122–1126.

- Gong, M.; Li, Y.; Jiao, L.; Jia, M.; Su, L. SAR change detection based on intensity and texture changes. ISPRS J. Photogramm. Remote Sens. 2014, 93, 123–135.

- Li, C.; Huang, R.; Ding, Z.; Gatenby, J.C.; Metaxas, D.N.; Gore, J.C. A level set method for image segmentation in the presence of intensity inhomogeneities with application to MRI. IEEE Trans. Image Process. 2011, 20, 2007.

- Ahmadi, S.; Zoej, M.J.V.; Ebadi, H.; Moghaddam, H.A.; Mohammadzadeh, A. Automatic urban building boundary extraction from high resolution aerial images using an innovative model of active contours. Int. J. Appl. Earth Obs. Geoinf. 2010, 12, 150–157.

- Chan, T.F.; Vese, L.A. Active contours without edges. IEEE Trans. Image Process. 2001, 10, 266–277.

- Otsu, N. A threshold selection method from gray-level histograms. IEEE Trans. Syst. Man Cybern. 1979, 9, 62–66.

- Gong, M.; Su, L.; Jia, M.; Chen, W. Fuzzy clustering with a modified MRF energy function for change detection in synthetic aperture radar images. IEEE Trans. Fuzzy Syst. 2013, 22, 98–109.

- Moser, G.; Serpico, S.B. Generalized minimum-error thresholding for unsupervised change detection from SAR amplitude imagery. IEEE Trans. Geosci. Remote Sens. 2006, 44, 2972–2982.

- Li, C.; Xu, C.; Gui, C.; Fox, M.D. Distance regularized level set evolution and its application to image segmentation. IEEE Trans. Image Process. 2010, 19, 3243–3254.

| Data Set | Size (pixels) | Resolution (m) | Date of the First Image | Date of the Second Image | Location | Sensor |
|---|---|---|---|---|---|---|
| Yellow River Estuary | 289 × 257 | 8 | June 2008 | June 2009 | Dongying, Shandong Province, China | RADARSAT-2 |
| Bern | 301 × 301 | 30 | April 1999 | May 1999 | A region near the city of Bern | European Remote Sensing 2 (ERS-2) satellite |
| Ottawa | 350 × 290 | 10 | July 1997 | August 1997 | Ottawa | RADARSAT-1 |

| Dataset | Changed Regions | | | | Unchanged Regions | |
|---|---|---|---|---|---|---|
| Yellow River Estuary | 127.00 | 169.67 | 212.33 | 255 | 0 | 42.17 |
| Bern | 112.40 | 159.94 | 207.47 | 255 | 0 | 32.44 |
| Ottawa | 127.00 | 169.67 | 212.33 | 255 | 0 | 42.17 |

| Dataset | Method | PCC (%) | OE (%) | Kappa (%) |
|---|---|---|---|---|
| Yellow River Estuary | SGK | 98.06 | 1.92 | 85.24 |
| | NR | 88.33 | | 79.99 |
| | LN-GKIT | 69.60 | 30.82 | 33.78 |
| | CV | 95.37 | 4.63 | 84.38 |
| | DRLSE | 90.84 | 9.16 | 69.47 |
| | DFLAC | 95.49 | 4.51 | 84.65 |
| Bern | SGK | 99.68 | 0.32 | 87.05 |
| | NR | 99.66 | 0.34 | 85.90 |
| | LN-GKIT | 99.90 | 0.35 | 85.37 |
| | CV | 99.61 | 0.39 | 85.32 |
| | DRLSE | 98.70 | 1.30 | 63.32 |
| | DFLAC | 99.68 | 0.32 | 87.07 |
| Ottawa | SGK | 98.95 | 1.05 | 95.98 |
| | NR | 97.91 | 2.09 | 92.2 |
| | LN-GKIT | 98.35 | 2.22 | 91.87 |
| | CV | 97.06 | 2.93 | 88.92 |
| | DRLSE | 95.44 | 4.56 | 81.37 |
| | DFLAC | 99.00 | 1.00 | 96.26 |
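
The PCC, OE, and Kappa values in the table above can be derived from a binary confusion matrix. The sketch below is a minimal version that assumes the standard definitions used in SAR change detection; Equations (26)–(28) are not reproduced in this section, so the paper's exact forms may differ. Following the workflow, the error image is the reference map minus the output map, so +1 marks a missed change and −1 a false alarm. The function name `change_detection_scores` is hypothetical.

```python
import numpy as np


def change_detection_scores(output, reference):
    """Return (PCC, OE, Kappa) in percent for binary change maps (True = changed)."""
    out = np.asarray(output, dtype=bool)
    ref = np.asarray(reference, dtype=bool)
    error = ref.astype(int) - out.astype(int)   # error image: reference minus output
    n = ref.size
    fn = int(np.sum(error == 1))                # missed alarms (changed -> unchanged)
    fp = int(np.sum(error == -1))               # false alarms (unchanged -> changed)
    tp = int(np.sum(out & ref))                 # correctly detected changes
    tn = n - tp - fp - fn                       # correctly detected non-changes
    pcc = (tp + tn) / n
    oe = (fp + fn) / n
    # Expected chance agreement used by Cohen's Kappa.
    pre = ((tp + fp) * (tp + fn) + (fn + tn) * (fp + tn)) / n ** 2
    kappa = (pcc - pre) / (1 - pre) if pre < 1 else float("nan")  # undefined if pre == 1
    return 100 * pcc, 100 * oe, 100 * kappa
```

With these definitions OE is simply 100 − PCC; Kappa additionally corrects for chance agreement, which matters when changed pixels make up only a small fraction of the scene.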

| Dataset | Subtraction | Log-Ratio | Normal Difference | RMLND |
|---|---|---|---|---|
| Yellow River Estuary | 75.22 | 83.97 | 84.71 | 84.65 |
| Bern | 32.44 | 81.26 | 83.14 | 87.07 |
| Ottawa | 83.81 | 95.26 | 94.92 | 96.26 |
| Average | 63.82 | 86.83 | 87.59 | 89.33 |
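
The table above compares difference image operators. For reference, the three conventional operators can be sketched in NumPy under commonly used definitions: absolute subtraction |X2 − X1|, the log-ratio |log((X2 + ε)/(X1 + ε))|, and, assuming "Normal Difference" denotes the normalized difference, |X2 − X1|/(X2 + X1 + ε). The exact forms of Equations (1) to (4) are not shown in this section, and RMLND, the operator proposed in the paper, is not reproduced. The offset `eps` and the function name `difference_images` are assumptions added for illustration.

```python
import numpy as np


def difference_images(x1, x2, eps=1.0):
    """Conventional SAR difference images (assumed definitions; not RMLND)."""
    x1 = np.asarray(x1, dtype=float)
    x2 = np.asarray(x2, dtype=float)
    return {
        # Absolute subtraction: depends on the absolute backscatter level.
        "subtraction": np.abs(x2 - x1),
        # Log-ratio: turns multiplicative speckle into an additive term;
        # eps avoids log(0) and division by zero on dark pixels.
        "log_ratio": np.abs(np.log((x2 + eps) / (x1 + eps))),
        # Normalised difference: bounded in [0, 1) for non-negative inputs.
        "normal_difference": np.abs(x2 - x1) / (x2 + x1 + eps),
    }
```

The log-ratio is the usual choice for SAR because speckle is approximately multiplicative, so taking logarithms makes it additive and makes the ratio insensitive to a common gain; this is consistent with the large gap between the Subtraction and Log-Ratio columns above.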

| Data Set | DFLAC Step 1 | DFLAC Step 2 | DFLAC Step 3 | DFLAC Step 4 | DFLAC Step 5 | DFLAC Total Time | SGK | SGK/DFLAC |
|---|---|---|---|---|---|---|---|---|
| Yellow River Estuary | 0.01 | 0.04 | 0.01 | 8.90 | 0.01 | 8.97 | 60.53 | 6.75 |
| Bern | 0.02 | 0.05 | 0.01 | 8.31 | 0.01 | 8.40 | 86.60 | 10.31 |
| Ottawa | 0.02 | 0.05 | 0.02 | 9.34 | 0.01 | 9.44 | 101.17 | 10.72 |
| Average | 0.02 | 0.05 | 0.01 | 8.85 | 0.01 | 8.94 | 82.77 | 9.25 |

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Ahmadi, S.; Homayouni, S. A Novel Active Contours Model for Environmental Change Detection from Multitemporal Synthetic Aperture Radar Images. Remote Sens. 2020, 12, 1746. https://doi.org/10.3390/rs12111746
