Detection of Citrus Huanglongbing Based on Multi-Input Neural Network Model of UAV Hyperspectral Remote Sensing

Abstract

1. Introduction

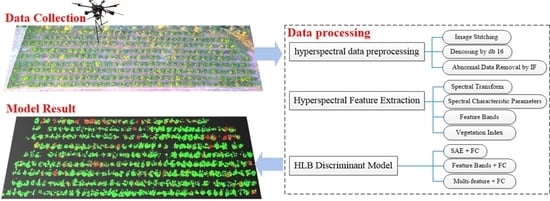

2. Materials and Data Pre-Processing Methods

2.1. Data Collection

2.2. UAV Hyperspectral Data Quality Evaluation

2.3. UAV Spectral Data Pre-Processing Methods

2.3.1. UAV Hyperspectral Image Stitching

2.3.2. Canopy Spectral Data Extraction at Pixel Level and Dataset Establishment

2.3.3. UAV Hyperspectral Data Denoising and Abnormal Sample Removal

3. Method Description

3.1. Spectral Transformation

3.2. Canopy Spectral Characteristic Parameter

3.3. Band Selection Based on Genetic Algorithm (GA) with Improved Selection Operator

3.4. Vegetation Index Features Constructed on the Basis of Feature Bands

3.5. Modelling Methods

4. Experiments and Results

4.1. Results of Feature Band Extraction

4.2. Modelling Results

4.2.1. SAE Modelling for HLB Detection Based on Full-Band Original and FDR Spectra

4.2.2. HLB Detection Model Based on Feature Band

4.2.3. Results of HLB Detection Model Based on Multi-Feature Fusion

4.2.4. Verification of Model Detection Effect

5. Discussion

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

References

1. Dobbert, J.P. Food and agriculture organization of the united nations. Int. Organ. Gen. Univ. Int. Organ. Coop. 1983, 15–20.

2. Bai, Z.Q. The Research Progress of Citrus Huanglongbing on Pathogen Diversity and Epidemiology. Chin. Agric. Sci. Bull. 2012, 28, 133–137.

3. Bové, J.M.; Barros, A.P.D. Huanglongbing: A destructive, newly emerging, century-old disease of citrus. J. Plant Pathol. 2006, 88, 7–37.

4. Chen, J.; Pu, X.; Deng, X.; Liu, S.; Li, H.; Civerolo, E. A Phytoplasma Related to ‘Candidatus Phytoplasma asteris’ Detected in Citrus Showing Huanglongbing (Yellow Shoot Disease) Symptoms in Guangdong, P.R. China. Phytopathology 2009, 99, 236–242.

5. Hall, D.G.; Shatters, R.; Carpenter, J.E.; Shapiro, J.P. Research toward an Artificial Diet for Adult Asian Citrus Psyllid. Ann. Entomol. Soc. Am. 2010, 103, 611–617.

6. Graham, J.H.; Stelinski, L.L. Abstracts from the 4th International Research Conference on Huanglongbing. J. Citrus Pathol. 2015, 2, 1–49.

7. Li, X.; Lee, W.S.; Li, M.; Ehsani, R.; Mishra, A.R.; Yang, C.; Mangan, R.L. Feasibility study on Huanglongbing (citrus greening) detection based on WorldView-2 satellite imagery. Biosyst. Eng. 2015, 132, 28–38.

8. Pourreza, A.; Lee, W.S.; Etxeberria, E.; Banerjee, A. An evaluation of a vision-based sensor performance in Huanglongbing disease identification. Biosyst. Eng. 2015, 130, 13–22.

9. Deng, X.; Lan, Y.; Hong, T.; Chen, J. Citrus greening detection using visible spectrum imaging and C-SVC. Comput. Electron. Agric. 2016, 130, 177–183.

10. Deng, X.; Lan, Y.; Xing, X.Q.; Mei, H.L.; Liu, J.K.L.; Hong, T.S. Detection of citrus Huanglongbing based on image feature extraction and two-stage BPNN modeling. Int. J. Agric. Biol. Eng. 2016, 9, 20–26.

11. Wetterich, C.B.; Neves, R.F.D.O.; Belasque, J.; Marcassa, L.G. Detection of citrus canker and Huanglongbing using fluorescence imaging spectroscopy and support vector machine technique. Appl. Opt. 2016, 55, 400–407.

12. Deng, X.L.; Lin, L.S.; Lan, Y.B. Citrus Huanglongbing detection based on modulation chlorophyll fluorescence measurement. J. South China Agric. Univ. 2016, 37, 113–116.

13. Lan, Y.; Chen, S. Current status and trends of plant protection UAV and its spraying technology in China. Int. J. Precis. Agric. Aviat. 2018, 1, 1–9.

14. Tahir, M.N.; Lan, Y.; Zhang, Y.; Wang, Y.; Nawaz, F.; Shah, M.A.A.; Gulzar, A.; Qureshi, W.S.; Naqvi, S.M. Real time estimation of leaf area index and groundnut yield using multispectral UAV. Int. J. Precis. Agric. Aviat. 2018, 1, 1–6.

15. Tahir, M.N.; Naqvi, S.Z.A.; Lan, Y.; Zhang, Y.; Wang, Y.; Afzal, M.; Cheema, M.J.M.; Amir, S. Real time estimation of chlorophyll content based on vegetation indices derived from multispectral UAV in the kinnow orchard. Int. J. Precis. Agric. Aviat. 2018, 1, 24–31.

16. Yang, C.; Everitt, J.H.; Fernández, C.J. Comparison of airborne multispectral and hyperspectral imagery for mapping cotton root rot. Biosyst. Eng. 2010, 107, 131–139.

17. Weng, H.; Lv, J.; Cen, H.; He, M.; Zeng, Y.; Hua, S.; Li, H.; Meng, Y.; Fang, H.; He, Y. Hyperspectral reflectance imaging combined with carbohydrate metabolism analysis for diagnosis of citrus Huanglongbing in different seasons and cultivars. Sens. Actuators B Chem. 2018, 275, 50–60.

18. Yue, J.; Yang, G.; Li, C.-C.; Li, Z.; Wang, Y.; Feng, H.; Xu, B. Estimation of Winter Wheat Above-Ground Biomass Using Unmanned Aerial Vehicle-Based Snapshot Hyperspectral Sensor and Crop Height Improved Models. Remote Sens. 2017, 9, 708.

19. Abdulridha, J.; Batuman, O.; Ampatzidis, Y. UAV-Based Remote Sensing Technique to Detect Citrus Canker Disease Utilizing Hyperspectral Imaging and Machine Learning. Remote Sens. 2019, 11, 1373.

20. Garza, B.N.; Ancona, V.; Enciso, J.; Perotto-Baldivieso, H.L.; Kunta, M.; Simpson, C. Quantifying Citrus Tree Health Using True Color UAV Images. Remote Sens. 2020, 12, 170.

21. Mei, H.; Deng, X.; Hong, T.; Luo, X.; Deng, X. Early detection and grading of citrus Huanglongbing using hyperspectral imaging technique. Trans. Chin. Soc. Agric. Eng. 2014, 30, 140–147.

22. Liu, Y.; Xiao, H.; Sun, X.; Zhu, D.; Han, R.; Ye, L.; Wang, J.; Ma, K. Spectral feature selection and detection model of citrus leaf yellow dragon disease. Trans. Chin. Soc. Agric. Eng. 2018, 34, 180–187.

23. Liu, Y.; Xiao, H.; Sun, X.; Han, R.; Liao, X. Study on the Quick Non-Destructive Detection of Citrus Huanglongbing Based on the Spectrometry of VIS and NIR. Spectrosc. Spectr. Anal. 2018, 38, 528–534.

24. Deng, X.; Huang, Z.; Zheng, Z.; Lan, Y.; Dai, F. Field detection and classification of citrus Huanglongbing based on hyperspectral reflectance. Comput. Electron. Agric. 2019, 167, 105006.

25. Li, X.-H.; Li, M.-Z.; Suk, L.W.; Reza, E.; Ashish, R.M. Visible-NIR spectral feature of citrus greening disease. Spectrosc. Spectr. Anal. 2014, 34, 1553–1559.

26. Sankaran, S.; Maja, J.M.; Buchanon, S.; Ehsani, R. Huanglongbing (Citrus Greening) Detection Using Visible, Near Infrared and Thermal Imaging Techniques. Sensors 2013, 13, 2117–2130.

27. Sankaran, S.; Ehsani, R.; Etxeberria, E. Mid-infrared spectroscopy for detection of Huanglongbing (greening) in citrus leaves. Talanta 2010, 83, 574–581.

28. Mishra, A.R.; Ehsani, R.; Lee, W.S.; Albrigo, G. Spectral Characteristics of Citrus Greening (Huanglongbing). Am. Soc. Agric. Biol. Eng. 2007, 1.

29. Mishra, A.; Karimi, D.; Ehsani, R.; Albrigo, L.G. Evaluation of an active optical sensor for detection of Huanglongbing (HLB) disease. Biosyst. Eng. 2011, 110, 302–309.

30. Lee, W.S.; Kumar, A.; Ehsani, R.J.; Albrigo, L.G.; Yang, C.; Mangan, R.L. Citrus greening disease detection using aerial hyperspectral and multispectral imaging techniques. J. Appl. Remote Sens. 2012, 6, 063542.

31. Garcia-Ruiz, F.; Sankaran, S.; Maja, J.M.; Lee, W.S.; Rasmussen, J.; Ehsani, R. Comparison of two aerial imaging platforms for identification of Huanglongbing-infected citrus trees. Comput. Electron. Agric. 2013, 91, 106–115.

32. Li, X.; Lee, W.S.; Li, M.; Ehsani, R.; Mishra, A.R.; Yang, C.; Mangan, R.L. Spectral difference analysis and airborne imaging classification for citrus greening infected trees. Comput. Electron. Agric. 2012, 83, 32–46.

33. Li, H.; Lee, W.S.; Wang, K.; Ehsani, R.; Yang, C. ‘Extended spectral angle mapping (ESAM)’ for citrus greening disease detection using airborne hyperspectral imaging. Precis. Agric. 2014, 15, 162–183.

34. Lan, Y.; Zhu, Z.; Deng, X.; Lian, B.; Huang, J.; Huang, Z.; Hu, J. Monitoring and classification of Huanglongbing plants of citrus based on UAV hyperspectral remote sensing. Trans. Chin. Soc. Agric. Eng. 2019, 35, 92–100.

35. Lan, Y.; Huang, Z.; Deng, X.; Zhu, Z.; Huang, H.; Zheng, Z.; Lian, B.; Zeng, G.; Tong, Z. Comparison of machine learning methods for citrus greening detection on UAV multispectral images. Comput. Electron. Agric. 2020, 171, 105234.

36. Daubechies, I. The wavelet transform, time-frequency localization and signal analysis. IEEE Trans. Inf. Theory 1990, 36, 961–1005.

37. Liu, F.T.; Ting, K.M.; Zhou, Z.H. Isolation Forest. In Proceeding of the Eighth IEEE International Conference on IEEE, Scottsdale, AZ, USA, 24 October 2009.

38. Sims, D.A.; Gamon, J.A. Relationships between leaf pigment content and spectral reflectance across a wide range of species, leaf structures and developmental stages. Remote Sens. Environ. 2002, 81, 337–354.

39. David, E.G. Genetic Algorithm in Search, Optimization and Machine Learning; Addison Wesley: Boston, MA, USA, 1989; pp. 2104–2116.

40. Tucker, C.J. Red and photographic infrared linear combinations for monitoring vegetation. Remote Sens. Environ. 1979, 8, 127–150.

41. Jordan, C.F. Derivation of Leaf-Area Index from Quality of Light on the Forest Floor. Ecology 1969, 50, 663–666.

42. Matsas, V.J.; Newson, T.P.; Richardson, D.J.; Payne, D.N. Selfstarting passively mode-locked fibre ring soliton laser exploiting nonlinear polarisation rotation. Opt. Commun. 1992, 92, 61–66.

43. Clark, W.H.; Peoples, J.R.; Briggs, M. Occurrence and Inhibition of Large Yawing Moments during High-Incidence Flight of Slender Missile Configurations. J. Spacecr. Rocket. 1973, 10, 510–519.

44. Vlassara, H.; Li, Y.M.; Imam, F.; Wojciechowicz, D.; Yang, Z.; Liu, F.T.; Cerami, A. Identification of galectin-3 as a high-affinity binding protein for advanced glycation end products (AGE): A new member of the AGE-receptor complex. Mol. Med. 1995, 1, 634–646.

45. Broge, N.; Leblanc, E. Comparing prediction power and stability of broadband and hyperspectral vegetation indices for estimation of green leaf area index and canopy chlorophyll density. Remote Sens. Environ. 2001, 76, 156–172.

46. Lichtenthaler, H.K.; Gitelson, A.; Lang, M. Non-Destructive Determination of Chlorophyll Content of Leaves of a Green and an Aurea Mutant of Tobacco by Reflectance Measurements. J. Plant Physiol. 1996, 148, 483–493.

47. Gitelson, A.A.; Kaufman, Y.J.; Merzlyak, M.N. Use of a green channel in remote sensing of global vegetation from EOS-MODIS. Remote Sens. Environ. 1996, 58, 289–298.

48. Vincini, M.; Frazzi, E. Comparing narrow and broad-band vegetation indices to estimate leaf chlorophyll content in planophile crop canopies. Precis. Agric. 2010, 12, 334–344.

49. Liu, Y.; Chen, Q.; Tang, Y.; He, Q. An Incremental Updating Method for Support Vector Machines. Lect. Notes Comput. Sci. 2004, 13, 426–435.

50. Vincent, P.; Larochelle, H.; Lajoie, I.; Bengio, Y.; Manzagol, P.A.; Bottou, L. Stacked Denoising Autoencoders: Learning Useful Representations in a Deep Network with a Local Denoising Criterion. J. Mach. Learn. Res. 2010, 11, 3371–3408.

| Name | Spectral Range (nm) | Sampling Interval (nm) | Spectral Resolution (nm) | Channel Number | Data Type | Size (mm) | Weight (kg) |
|---|---|---|---|---|---|---|---|
| Cubert S185 | 450–950 | 4 | 8 @ 532 | 125 | Grayscale image: 1000 × 1000 pixels; hyperspectral image: 50 × 50 pixels | 195 × 67 × 60 | 0.47 |
| HH2 | 325–1075 | 1 | <3.0 @ 700 | 700 | Spectral reflectance | 90 × 140 × 215 | 1.2 |

| Spectral Characteristic | Abbreviation/Formula | Definition |
|---|---|---|
| Blue Edge Amplitude | | Maximum value of FDR in the wavelength range of 490–470 nm |
| Blue Edge Position | | Wavelength corresponding to the maximum FDR in the 490–470 nm range |
| Blue Area | | Integration of FDR in the wavelength range of 490–470 nm |
| Yellow Edge Amplitude | | Maximum value of FDR in the wavelength range of 560–620 nm |
| Yellow Edge Position | | Wavelength corresponding to the maximum FDR in the range of 560–620 nm |
| Yellow Area | | Integration of FDR in the wavelength range of 560–620 nm |
| Red Edge Amplitude | | Maximum value of FDR in the wavelength range of 640–780 nm |
| Red Edge Position | | Wavelength corresponding to the maximum FDR in the range of 640–780 nm |
| Red Area | | Integration of FDR in the wavelength range of 640–780 nm |
| Green Peak Value | | Maximum value of spectral reflectance in the wavelength range of 510–570 nm |
| Green Peak Position | | Wavelength corresponding to the maximum FDR in the range of 510–570 nm |
| Green Peak Reflection Height | | Maximum intensity of the spectral reflectance in the wavelength range of 510–570 nm |
| Red Valley Value | | Minimum spectral reflectance in the 640–700 nm wavelength range |
| Red Valley Position | | Wavelength corresponding to the minimum value of spectral reflectance in the range of 640–700 nm |
| Red Valley Absorption | | Absorption intensity of the minimum spectral reflectance in the wavelength range of 510–570 nm |
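
As a rough illustration of how parameters of this kind can be derived from a single canopy spectrum, the sketch below computes the first-derivative reflectance (FDR) by finite differences and then reads off an edge amplitude, position and area, plus a reflectance valley, inside a wavelength window. The function names, the synthetic sigmoid spectrum and the 452–948 nm grid in 4 nm steps are assumptions made for the example; they are not taken from the paper.

```python
import numpy as np

def first_derivative(wavelengths, reflectance):
    """First-derivative reflectance (FDR), estimated by finite differences."""
    return np.gradient(reflectance, wavelengths)

def edge_parameters(wavelengths, fdr, lo, hi):
    """Return (amplitude, position, area) of the FDR within [lo, hi] nm."""
    mask = (wavelengths >= lo) & (wavelengths <= hi)
    w, d = wavelengths[mask], fdr[mask]
    peak = int(np.argmax(d))
    # Trapezoidal integration of the FDR over the window.
    area = float(np.sum(0.5 * (d[1:] + d[:-1]) * np.diff(w)))
    return float(d[peak]), float(w[peak]), area

def valley(wavelengths, reflectance, lo, hi):
    """Return (minimum reflectance, wavelength of the minimum) within [lo, hi] nm."""
    mask = (wavelengths >= lo) & (wavelengths <= hi)
    w, r = wavelengths[mask], reflectance[mask]
    i = int(np.argmin(r))
    return float(r[i]), float(w[i])

# Synthetic spectrum (assumption): a smooth red-edge rise centred at 716 nm.
wavelengths = np.arange(452.0, 950.0, 4.0)
reflectance = 0.05 + 0.45 / (1.0 + np.exp(-(wavelengths - 716.0) / 12.0))

fdr = first_derivative(wavelengths, reflectance)
red_edge_amplitude, red_edge_position, red_area = edge_parameters(
    wavelengths, fdr, 640.0, 780.0
)
red_valley_value, red_valley_position = valley(wavelengths, reflectance, 640.0, 700.0)
```

The same two helpers cover the blue, yellow and green rows by changing the window limits; only the red-edge and red-valley windows are exercised here.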

| Name | Formula | Reference |
|---|---|---|
| Ratio Vegetation Index (RVI) | | Jordan, 1969 [41] |
| Difference Vegetation Index (DVI) | | Matsas, 1992 [42] |
| Normalised Difference Vegetation Index (NDVI) | | Clark, 1973 [43] |
| Enhanced Vegetation Index (EVI) | | Vlassara, 1995 [44] |
| Triangle Vegetation Index (TVI) | | Broge, 2001 [45] |
| Normalised Greenness Vegetation Index (NDGI) | | Lichtenthaler, 1996 [46] |
| Green Ratio Vegetation Index (GRVI) | | Gitelson, 1996 [47] |
| Chlorophyll Vegetation Index (CVI) | | Vincini, 2008 [48] |
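
For orientation, the indices named in this table are commonly written in the remote-sensing literature in the broadband forms sketched below. This is a minimal sketch under that assumption: the expressions are the usual textbook definitions, and the narrow-band variants built here from the selected feature bands may differ. The function name `vegetation_indices` and the example reflectance values are hypothetical.

```python
import numpy as np

def vegetation_indices(nir, red, green, blue):
    """Common textbook forms of the eight indices (assumed, not the paper's exact variants)."""
    nir, red, green, blue = (
        np.asarray(b, dtype=float) for b in (nir, red, green, blue)
    )
    return {
        "RVI": nir / red,                                   # ratio index
        "DVI": nir - red,                                   # difference index
        "NDVI": (nir - red) / (nir + red),                  # normalised difference
        "EVI": 2.5 * (nir - red) / (nir + 6.0 * red - 7.5 * blue + 1.0),
        "TVI": 0.5 * (120.0 * (nir - green) - 200.0 * (red - green)),
        "NDGI": (green - red) / (green + red),              # greenness index
        "GRVI": nir / green,                                # green ratio index
        "CVI": nir * red / green**2,                        # chlorophyll index
    }

# Hypothetical reflectances for one healthy-looking canopy pixel.
example = vegetation_indices(nir=0.5, red=0.1, green=0.2, blue=0.05)
```

Because the inputs are converted with `np.asarray`, the same function also accepts whole arrays of pixels, one array per band.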

| Vegetation Index | Number of Features |
|---|---|
| RVI, DVI, NDVI, EVI | 3 |
| GRVI | 5 |
| TVI, NDGI, CVI | 15 |

| Parameters | Central Wavelengths of the Selected Bands (nm) | Accuracy |
|---|---|---|
| Crossover probability = 0.5; mutation probability = 0.02 | 468, 504, 512, 516, 528, 536, 632, 680, 688 | 91.92% |
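
To make the role of the two probabilities concrete, here is a minimal sketch of a generic genetic algorithm for band selection over a binary band mask. Only the crossover probability (0.5), the mutation probability (0.02) and the 125-channel count come from the tables; everything else is an assumption. In particular, the binary tournament selection is a standard operator and not the improved selection operator of Section 3.3, `toy_fitness` is a hypothetical stand-in for classifier accuracy, and the 452–948 nm grid is chosen only so that the reported centre wavelengths fall on it.

```python
import numpy as np

N_BANDS = 125        # channel count of the Cubert S185 (sensor table)
P_CROSSOVER = 0.5    # crossover probability reported in the table
P_MUTATION = 0.02    # mutation probability reported in the table

def toy_fitness(mask, informative):
    """Hypothetical fitness: reward informative bands, penalise subset size."""
    hits = np.count_nonzero(mask & informative)
    return hits - 0.1 * np.count_nonzero(mask)

def run_ga(fitness, n_bands=N_BANDS, pop_size=40, generations=60, seed=0):
    rng = np.random.default_rng(seed)
    pop = rng.random((pop_size, n_bands)) < 0.1   # sparse random band subsets
    history = []
    for _ in range(generations):
        scores = np.array([fitness(ind) for ind in pop])
        elite = pop[np.argmax(scores)].copy()
        history.append(float(scores.max()))
        # Binary tournament selection (generic operator, not the paper's).
        a = rng.integers(0, pop_size, pop_size)
        b = rng.integers(0, pop_size, pop_size)
        parents = pop[np.where(scores[a] >= scores[b], a, b)]
        children = parents.copy()
        # Single-point crossover on consecutive pairs of parents.
        for i in range(0, pop_size - 1, 2):
            if rng.random() < P_CROSSOVER:
                cut = rng.integers(1, n_bands)
                children[i, cut:] = parents[i + 1, cut:]
                children[i + 1, cut:] = parents[i, cut:]
        # Bit-flip mutation: each band toggles with probability P_MUTATION.
        children ^= rng.random(children.shape) < P_MUTATION
        children[0] = elite   # elitism keeps the best subset found so far
        pop = children
    scores = np.array([fitness(ind) for ind in pop])
    history.append(float(scores.max()))
    return pop[np.argmax(scores)], history

# Assumed wavelength grid and a toy target built from the reported band centres.
wavelengths = 452 + 4 * np.arange(N_BANDS)
informative = np.isin(wavelengths, [468, 504, 512, 516, 528, 536, 632, 680, 688])

best_mask, history = run_ga(lambda m: toy_fitness(m, informative))
selected = wavelengths[best_mask]
```

In a real pipeline the fitness call would train and score a classifier on the masked spectra, which is far more expensive than the toy function used here; elitism is included so that the best score never decreases between generations.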

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Deng, X.; Zhu, Z.; Yang, J.; Zheng, Z.; Huang, Z.; Yin, X.; Wei, S.; Lan, Y. Detection of Citrus Huanglongbing Based on Multi-Input Neural Network Model of UAV Hyperspectral Remote Sensing. Remote Sens. 2020, 12, 2678. https://doi.org/10.3390/rs12172678
