Fusion Approach for Remotely-Sensed Mapping of Agriculture (FARMA): A Scalable Open Source Method for Land Cover Monitoring Using Data Fusion

Abstract

1. Introduction

2. Materials and Methods

2.1. Software and Libraries

- RSGISLib

- KEA

- Pandas/GeoPandas

- GeoPackage

- Docker/Singularity

2.1.1. Remote Sensing and GIS Library (RSGISLib)

2.1.2. KEA

2.1.3. Pandas/GeoPandas

2.1.4. GeoPackage

2.1.5. Docker/Singularity

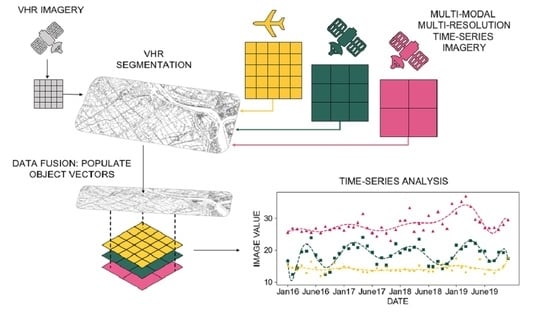

2.2. Fusion Approach for Remotely-Sensed Mapping of Agriculture (FARMA)

2.2.1. Image Segmentation

2.2.2. Image Tiling

2.2.3. Image Statistics

3. Example Studies

3.1. Remote Sensing Imagery

3.1.1. WorldView

3.1.2. Sentinel-1 Time-Series

3.1.3. Sentinel-3 Time-Series

3.1.4. Sentinel-2 Time-Series

3.2. Senegal River Valley

3.2.1. Senegal Study Site A: Broad Scale Photosynthetic Cover Mapping

3.2.2. Senegal Study Site B: High-Resolution Agriculture Monitoring

3.3. Mekong Delta, Vietnam

4. Discussion

4.1. Comparison to Other Methods

- FARMA provides an efficient and scalable method for the fusion of multi-resolution data. In the example studies, high- and moderate-resolution time-series imagery was populated into objects derived from very-high-resolution (VHR) imagery, but the segmentation and the imagery used for analysis can be any combination of spatial resolutions. Furthermore, pixel values at multiple resolutions can be populated into the same image object, allowing a user to analyze multiple raster datasets per object at their native resolutions. In addition, the system is fully open source and does not require proprietary software at any stage of the process.

- FARMA can tile the imagery so that smaller portions of data are processed more efficiently, even with modest computing resources. Within a large distributed computing environment, tiling also allows the data volume to be split across a large number of CPUs; when enough CPUs are available for all tiles to run simultaneously, the overall run time is reduced to roughly that of the slowest tile. A primary advantage of FARMA is that the tile size is entirely customizable, so a user can trade tile size against the number of tiles depending on the computing architecture available. FARMA can be run entirely on a single thread or distributed across as many CPUs as are available.

- FARMA can tile polygons without creating artificial boundaries that split objects between tiles. While the benefit of processing large data volumes as individual tiled datasets is known, this has not been readily demonstrated with image objects, which do not conform to neat tile boundaries. FARMA assigns each object to a single tile using a mode value (the tile ID occurring most frequently among the object's pixels), enabling a segmentation to be tiled using soft tile boundaries. Furthermore, because these tiles are created as vector datasets, zonal statistics can be populated into the objects irrespective of spatial resolution while the tiles are processed in parallel.

- FARMA reads each vector layer into memory before calculating and assigning zonal statistics. While this may pose an issue for very large vectors (>10,000 ha), which are not typically associated with agricultural fields, it reduces vector I/O and is therefore more computationally efficient. This contributes to the scalability of the framework.
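
The first and fourth points above, populating pixel values from rasters of different resolutions into the same objects and holding the vector layer in memory, can be illustrated with a minimal pure-Python sketch. It is not the FARMA or RSGISLib implementation and rests on stated assumptions: objects are simple polygons in map coordinates held in a dictionary, each raster is a north-up grid described by a hypothetical `Raster` record, a pixel is counted when its centre falls inside the polygon, and only the mean is computed. The objects are loaded once and each raster is then read against them at its own pixel size, so no resampling is needed.

```python
from dataclasses import dataclass


@dataclass
class Raster:
    """Hypothetical north-up raster: top-left corner, square pixel size, row-major values."""
    x_min: float
    y_max: float
    pixel_size: float
    values: list  # list of rows, top row first; None marks no-data


def point_in_polygon(x, y, polygon):
    """Even-odd ray-casting test for a point against a simple polygon [(x, y), ...]."""
    inside = False
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        if (y1 > y) != (y2 > y):  # edge crosses the horizontal line through the point
            x_cross = x1 + (y - y1) * (x2 - x1) / (y2 - y1)
            if x < x_cross:
                inside = not inside
    return inside


def zonal_mean(polygon, raster):
    """Mean of the raster pixels whose centres fall inside the polygon (None if none do)."""
    hits = []
    for r, row in enumerate(raster.values):
        y = raster.y_max - (r + 0.5) * raster.pixel_size
        for c, value in enumerate(row):
            x = raster.x_min + (c + 0.5) * raster.pixel_size
            if value is not None and point_in_polygon(x, y, polygon):
                hits.append(value)
    return sum(hits) / len(hits) if hits else None


def populate_objects(objects, rasters):
    """Add one '<name>_mean' column per raster to an in-memory attribute table."""
    table = {obj_id: {} for obj_id in objects}  # objects are read into memory once
    for name, raster in rasters.items():
        for obj_id, polygon in objects.items():
            table[obj_id][f"{name}_mean"] = zonal_mean(polygon, raster)
    return table


if __name__ == "__main__":
    objects = {  # two 20 m x 20 m fields, side by side
        1: [(0, 0), (20, 0), (20, 20), (0, 20)],
        2: [(20, 0), (40, 0), (40, 20), (20, 20)],
    }
    rasters = {
        "fine": Raster(0, 20, 10, [[1, 2, 5, 6], [3, 4, 7, 8]]),  # 10 m pixels
        "coarse": Raster(0, 20, 20, [[7, 9]]),  # 20 m pixels
    }
    print(populate_objects(objects, rasters))
```

Because this sketch uses a pixel-centre rule, an object smaller than a coarse pixel may receive no value (`None`); a production workflow would need an explicit policy for such objects.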
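
The trade-off in the second point above, between tile size, number of tiles and available CPUs, can be sketched with Python's standard `concurrent.futures` module. This is a minimal illustration, not FARMA's own scheduler: tiles are assumed to be rectangular pixel windows, `process_tile` is a hypothetical stand-in for the per-tile work (here it only counts pixels), and `max_workers=1` runs every tile on a single thread.

```python
from concurrent.futures import ProcessPoolExecutor


def make_tiles(width, height, tile_size):
    """Split a width x height pixel extent into (x_off, y_off, x_size, y_size) windows."""
    return [
        (x, y, min(tile_size, width - x), min(tile_size, height - y))
        for y in range(0, height, tile_size)
        for x in range(0, width, tile_size)
    ]


def process_tile(window):
    """Hypothetical per-tile task; a real workflow would compute zonal statistics here."""
    _, _, x_size, y_size = window
    return x_size * y_size  # number of pixels handled by this tile


def run(width, height, tile_size, max_workers=1):
    """Process every tile serially (max_workers=1) or across a pool of worker processes."""
    windows = make_tiles(width, height, tile_size)
    if max_workers == 1:
        results = [process_tile(w) for w in windows]
    else:
        # Tiles are independent, so they can be mapped onto separate processes
        with ProcessPoolExecutor(max_workers=max_workers) as pool:
            results = list(pool.map(process_tile, windows))
    return windows, results


if __name__ == "__main__":
    for size in (100, 50):  # smaller tiles -> more independent tasks to spread across CPUs
        windows, results = run(250, 100, size, max_workers=4)
        print(size, len(windows), sum(results))
```

Smaller tiles give the pool more independent tasks, which suits machines with many CPUs; larger tiles reduce per-task overhead on a modest workstation.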
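
The mode-based assignment of objects to tiles described in the third point above can be illustrated with a short pure-Python sketch. It assumes the segmentation is held in memory as a 2D grid of integer segment IDs (0 = background) and that tiles form a regular pixel grid; the function names are hypothetical and are not part of RSGISLib or the other libraries listed in Section 2.1. Each object is sent whole to the tile ID that occurs most often among its pixels, so no object is cut at a tile edge.

```python
from collections import Counter, defaultdict


def tile_grid(n_rows, n_cols, tile_rows, tile_cols):
    """Hypothetical helper: label every pixel with the ID of its regular grid tile."""
    tiles_per_row = -(-n_cols // tile_cols)  # ceiling division
    return [
        [(r // tile_rows) * tiles_per_row + (c // tile_cols) for c in range(n_cols)]
        for r in range(n_rows)
    ]


def assign_objects_to_tiles(segments, tiles):
    """Assign each segment ID to the modal tile ID among its pixels (soft tile boundary)."""
    counts = defaultdict(Counter)
    for seg_row, tile_row in zip(segments, tiles):
        for seg_id, tile_id in zip(seg_row, tile_row):
            if seg_id != 0:  # assumption: 0 marks background / no-data
                counts[seg_id][tile_id] += 1
    # most_common(1) gives the most frequent tile; ties go to the tile encountered first
    return {seg_id: c.most_common(1)[0][0] for seg_id, c in counts.items()}


def group_by_tile(assignment):
    """Invert the mapping so each tile lists the complete objects it will process."""
    groups = defaultdict(set)
    for seg_id, tile_id in assignment.items():
        groups[tile_id].add(seg_id)
    return dict(groups)


if __name__ == "__main__":
    segments = [
        [1, 1, 1, 2],
        [1, 1, 2, 2],
        [3, 3, 4, 4],
        [3, 3, 4, 4],
    ]
    tiles = tile_grid(4, 4, 2, 2)  # four 2 x 2 tiles with IDs 0-3
    assignment = assign_objects_to_tiles(segments, tiles)
    print(assignment)  # object 1 straddles tiles 0 and 1 but goes wholly to tile 0
    print(group_by_tile(assignment))
```

Because every object belongs to exactly one tile, each tile's object subset can be written out as its own vector dataset and processed independently.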

4.2. Performance

4.3. Framework Expansion

4.4. Data Processing Considerations and Limitations

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Thomas, N.; Neigh, C.S.R.; Carroll, M.L.; McCarty, J.L.; Bunting, P. Fusion Approach for Remotely-Sensed Mapping of Agriculture (FARMA): A Scalable Open Source Method for Land Cover Monitoring Using Data Fusion. Remote Sens. 2020, 12, 3459. https://doi.org/10.3390/rs12203459
