1. Introduction

Coastal zones are among the most populated and most productive areas in the world, offering a variety of habitats and ecosystem services. The European Commission highlights the importance of coastal zone management through the application of different policies and related activities, adopted through the joint initiatives of Maritime Spatial Planning and Integrated Coastal Management [1]. The aim is to promote the sustainable growth of maritime and coastal activities and the sustainable use of coastal and marine resources. Several other environmental policies are included in this initiative, such as the Marine Strategy Framework Directive, the Climate Change Adaptation strategy, and the Common Fisheries Policy [1]. Marine habitats have important ecological and regulatory functions and should be monitored in order to detect ecosystem changes [2,3]. Thus, habitat mapping is a prime necessity for environmental planning and management [4,5]. The continued provision of updated habitat maps contributes decisively to the design and coordination of relevant actions, the conservation of natural resources, and the monitoring of changes caused by natural disasters or anthropogenic effects [6]. Habitat maps are in critical demand and attract increasing interest among scientists monitoring sensitive coastal areas. The significance of coastal habitat mapping lies in the need to prevent the impacts of anthropogenic interventions and other factors that affect the coastal environment [7]. Habitat maps are spatial representations of discrete natural seabed areas associated with particular species, communities, or co-occurrences. These maps can reflect the nature, distribution, and extent of distinct natural environments and can help predict species distributions [8].

Remote sensing has long been identified as a technology capable of supporting coastal zone monitoring and habitat mapping over large areas [1,9]. These processes require multitemporal data, acquired either from satellites or from unmanned aerial systems (UAS). Very high-resolution orthomosaics are of increasing interest, as they provide scientists and relevant stakeholders with detailed information for understanding coastal dynamics and implementing environmental policies [6,10,11,12,13]. In particular, high-resolution orthomosaics created from UAS data are expected to improve mapping accuracy owing to their spatial resolution of less than 30 cm.

The increasing demand for detailed maps for monitoring coastal areas requires automatic algorithms and techniques. Object-based image analysis (OBIA) uses automated methods to partition remote sensing imagery into meaningful image-objects and to generate geographic information in a GIS-ready format, from which new knowledge can be obtained [6,14,15].

In the literature, several studies combine OBIA with UAS imagery for habitat mapping. Husson et al. 2016 demonstrated an automated classification of nonsubmerged aquatic vegetation using OBIA and tested two classification methods (threshold classification and random forest) in eCognition® on true-color UAS images [2]. They also compared the automated classification results with those of manual mapping. In another study, Husson et al. 2017 combined height data from a digital surface model (DSM) created from overlapping UAS images with spectral and textural features from the UAS orthomosaic to test whether classification accuracy could be further improved [3]. They showed that DSM-derived height data significantly increased the overall accuracy, by 4%–21% for growth forms and 3%–30% for the dominant class, and concluded that height data have significant potential to efficiently increase the accuracy of automated classification of nonsubmerged aquatic vegetation.

Ventura et al. 2016 [16] carried out habitat mapping using a low-cost UAS. They sought to locate coastal areas suitable as fish nurseries in their study area using UAS data and three different classification approaches. UAS data were collected with a video camera, and the acquired video was converted into a photo sequence from which the orthomosaic of the study area was produced. Three classifications were performed using three different methods: (i) maximum likelihood in ArcGIS 10.4, (ii) extraction and classification of homogeneous objects (ECHO) in MultiSpec 3.4, and (iii) OBIA in eCognition Developer 8.7, with overall accuracies of 78.8%, 80.9%, and 89.01%, respectively [16]. In a subsequent study, Ventura et al. 2018 [12] carried out habitat mapping in three different coastal environments on the island of Giglio in Central Italy. Using OBIA with the nearest neighbor algorithm as the classifier, four different classifications were applied to identify Posidonia oceanica meadows, nurseries for juvenile fish, and biogenic reefs, with overall accuracies of 85%, 84%, and 80%, respectively. In another study, Makri et al. 2018 [17] applied a multiscale image analysis methodology at Livadi Beach on Folegandros Island, Greece. Landsat-8 and Sentinel-2 imagery were georeferenced, corrected for atmospheric and water-column effects, and analyzed using OBIA. High-resolution UAS data were collected as in situ data and were also used in the classification and accuracy assessment. The nearest neighbor algorithm and fuzzy logic rules were used as classifiers, and the overall accuracy was 53% for Landsat-8 and 66% for Sentinel-2 imagery. Duffy et al. 2018 [18] created seagrass maps in Wales using a lightweight drone for data acquisition. Three classification methods were examined using R 3.3.1 [19]. The first was an unsupervised classification of true-color RGB (tc-RGB) data implemented in the ‘RStoolbox’ package [20,21]. The second used the tc-RGB data in combination with image texture. The third was based on object-based image analysis in the Geographic Resources Analysis Support System (GRASS) 7.0 software [22,23]. Classification accuracy was evaluated with the root mean square deviation (RMSD), and the results showed that the unsupervised classifications estimated seagrass coverage more accurately than the object-based image analysis method. These studies demonstrate that UAS data can provide critical information for the OBIA classification process and have the potential to increase classification accuracy in habitat mapping.

This study aimed to investigate an automated classification approach that applies OBIA to high-resolution UAS multispectral and true-color RGB imagery for marine habitat mapping. Based on orthorectified image mosaics (here called UAS orthomosaics), we performed OBIA to map marine habitats in coastal areas with varying levels of habitat complexity. For the first time, UAS tc-RGB and multispectral orthomosaics were processed following the OBIA methodology, and the classification results obtained with different classifiers were compared for marine habitat mapping. Furthermore, the performance of the same classifier applied to the different orthomosaics (tc-RGB and multispectral) was examined in terms of classification accuracy and efficiency. The k-nearest neighbor algorithm and fuzzy logic rules were used as classifiers in the object-based image analysis. The validity of the produced maps was estimated using the overall accuracy and the Kappa coefficient. Divers’ photos and roughness information derived from an echo sounder were used as in situ data to assess the final results. Finally, we compared the results of the multispectral and true-color UAS data for automatic habitat classification in terms of classification accuracy.

2. Materials and Methods

2.1. Study Area

The study area is located in the coastal zone of Pamfila Beach on Lesvos Island, Greece (Figure 1). Lesvos, located in the Northeastern Aegean Sea, is the third-largest Greek island, with 320 km of coastline. Pamfila Beach lies in the eastern part of Lesvos Island (39° 9′30.17″ N, 26° 31′53.35″ E), and the islet of Pamfilo lies in front of the beach. An olive press and a petroleum storage facility are also located close to the beach. This combination creates a unique marine environment; thus, mapping the seagrass meadows of this area is in critical demand. There are four main marine habitats: hard substrate, sand, seagrass, and mixed hard substrate. The hard substrate appears in the intertidal and very shallow zone (0 to 1.5 m). The sand class mainly covers the southern part of the study area, and the mixed hard substrate is mainly located in the center of the study area, at depths of about 2.5 to 6.5 m. The seagrass class (Posidonia oceanica) is dominant in the area and occurs at depths of about 1.5 to 3.5 m and 6.5 to 7.9 m.

2.2. Classification Scheme

Four categories of the European Nature Information System (EUNIS, 2007) habitat classification were selected for the classification process: (i) hard substrate, (ii) seagrass, (iii) sand, and (iv) mixed hard substrate. Owing to the high resolution of the orthomosaics (2 to 5 cm), we assume that each pixel is entirely covered by a single class; e.g., a pixel is categorized as seagrass only when it is completely covered by seagrass. The four predominant classes were known in advance from local observations and previous studies. The seagrass class (code A5.535), namely Posidonia oceanica beds, is characterized by the presence of the marine seagrass (phanerogam) Posidonia oceanica, a species endemic to the Mediterranean that forms natural formations called Posidonia meadows. These meadows are found at depths of 1 to 50 m. The sand class (code A5.235) is encountered in very shallow water where the sea bottom consists of fine sand, usually of homogeneous granulometry and terrigenous origin. The hard substrate class (code A3.23) is characterized by the presence of many photophilic algae covering hard bottoms in moderately exposed areas. Finally, the mixed hard substrate class is considered an assemblage of sand, seagrass, and dead seagrass leaves covering a hard substrate.

The depth in the study area, measured using a single-beam echo sounder, varied from 0 to 8 m. The depth zones were accurately defined and proved very useful in interpreting the results (Figure 2).

2.3. In Situ Data

In the present study, a combination of photographs taken while snorkeling and measurements derived from a single-beam echo sounder attached to a small inflatable boat were used as in situ data.

2.3.1. Divers’ Data Acquisition

The study area was initially investigated using an orthomosaic from a previous demo flight to define sections and transects that the divers would follow to capture underwater images. The transect orientation was designed to target all four selected classes equally. Reference spots were also selected from this orthomosaic to help the divers orient themselves while snorkeling. The divers used a GoPro HERO4 underwater camera to take photos as in situ data. The divers’ team followed the predetermined transects and captured an underwater image at each preselected spot containing one of the four classes selected for classification. The in situ sampling took place on 06/07/2018 over a trapezium-shaped area and lasted one hour. In total, 125 underwater images were collected, each captured so as to represent one training class. After quality control, 33 images were retained and classified. The remaining 92 images were rejected for the following reasons: (i) 18 images (14.4%) were not clearly focused on the sea bottom because of the depth; (ii) 21 images (16.8%) could not be geolocated because of the repetitive sea bottom pattern (for example, sandy sea bottom); (iii) 25 images (20%) were duplicates, as the divers captured several images at the same position to ensure that the dominant class was recorded; and (iv) 28 images (22.4%) were blurry because of the sea state and water quality. All images were interpreted to define the classes. Additionally, the position at which each image was captured was identified by the divers’ team through photointerpretation of the tc-RGB orthomosaic.
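The image bookkeeping in this paragraph can be checked with a few lines of Python. The counts are the ones reported above; the script only sums them and expresses each as a share of the 125 collected images.

```python
# Counts reported above for the divers' image quality control.
TOTAL_IMAGES = 125
rejected = {
    "not focused on the sea bottom (depth)": 18,
    "not geolocatable (repetitive bottom pattern)": 21,
    "duplicates of the same position": 25,
    "blurry (sea state, water quality)": 28,
}


def summarize(total, reasons):
    """Return (rejected count, retained count, percentage of total per reason)."""
    n_rejected = sum(reasons.values())
    shares = {reason: round(100 * n / total, 1) for reason, n in reasons.items()}
    return n_rejected, total - n_rejected, shares


n_rejected, n_kept, shares = summarize(TOTAL_IMAGES, rejected)
print(n_rejected, n_kept)            # 92 33
for reason, pct in shares.items():
    print(f"{reason}: {pct}%")       # 14.4%, 16.8%, 20.0%, 22.4%
```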

2.3.2. Echo Sounder Data Acquisition

Echo sounder data were collected by SEMANTIC TS personnel on 06/07/2018 using a single-beam echo sounder attached to a small inflatable vessel. The measurements were based on the reflection of the acoustic pulse emitted by the echo sounder. SEMANTIC TS has developed a signal-processing algorithm based on discriminant analysis to scrutinize the response energy level of the sea bottom pulses [24]. The derived information includes depth and substrate roughness, and the precise geographical position of each measurement is also recorded. Roughness and bathymetry products of the study area were provided as raster datasets (Figure 2 and Figure 3). ArcMap 10.3.1 software [25] was used to process the data, and the raster was converted into a point vector shapefile containing a total of 3,364 points with roughness and bathymetry information.
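The raster-to-point step can be illustrated with a minimal NumPy sketch. It assumes a north-up raster described by a GDAL-style geotransform and places one point at each valid pixel centre; the function name, the nodata value, and the toy grid are hypothetical and do not reproduce the ArcMap processing used in the study.

```python
import numpy as np


def raster_to_points(band, geotransform, nodata):
    """Return an (N, 3) array of (x, y, value) at the centres of valid pixels.

    geotransform follows the GDAL ordering (x_origin, pixel_width, 0,
    y_origin, 0, -pixel_height) for a north-up raster without rotation.
    A NaN nodata value would need np.isnan instead of the != test below.
    """
    x0, dx, _, y0, _, dy = geotransform
    rows, cols = np.nonzero(band != nodata)   # indices of valid pixels, row-major
    xs = x0 + (cols + 0.5) * dx               # pixel-centre easting
    ys = y0 + (rows + 0.5) * dy               # dy is negative for north-up rasters
    return np.column_stack([xs, ys, band[rows, cols]])


# Hypothetical 2 x 3 roughness grid with 1 m pixels and one nodata cell.
roughness = np.array([[0.2, 0.4, -9999.0],
                      [0.3, 0.5, 0.6]])
gt = (500000.0, 1.0, 0.0, 4330000.0, 0.0, -1.0)
pts = raster_to_points(roughness, gt, nodata=-9999.0)
print(pts.shape)  # (5, 3)
```

Each row of `pts` can then be written as a point feature carrying the raster value as an attribute.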

2.4. UAS Data Acquisition

The UAS used for data acquisition had a vertical take-off and landing (VTOL) configuration capable of autonomous flight along preprogrammed flight paths. It was a custom-made airborne system based on the DJI S900 hexacopter airframe, with a 25-min flight time carrying a 1.5 kg payload. The payload consisted of two sensors: a multispectral camera and a tc-RGB camera. The system was built around the Pixhawk autopilot, an open-source autopilot platform [26,27]. For positioning the UAS during flight, a real-time kinematic (RTK) global positioning system was connected to the autopilot.

2.4.1. Airborne Sensors Used

The true-color RGB sensor used in this study was a Sony A5100 24.3-megapixel camera with an interchangeable Sigma ART 19 mm f/2.8 DN lens (minimum focusing distance 0.2 m/0.66 ft), capable of autofocusing in 0.06 s and capturing high-quality true-color RGB (tc-RGB) images. This sensor was selected because of its light weight (0.224 kg), its manual parameterization, and its ability to be triggered automatically by an electronic pulse from the autopilot. The multispectral camera was a SlantRange 3P (S3P) sensor equipped with an ambient illumination sensor for deriving spectral reflectance-based end-products, an integrated global positioning system, and an inertial measurement unit. The S3P has a quad-core 2.26 GHz processor and 2 GB of embedded RAM for onboard preprocessing. The S3P used here was modified so that its wavebands match the coastal, blue, green, and near-infrared (NIR) wavebands of the Sentinel-2 mission (Table 1). The aim of this waveband modification was to simulate Sentinel-2 data at a finer spatial resolution.

The open-source Mission Planner v1.25 software was used as the ground station for real-time monitoring of the UAS telemetry and for programming the survey missions [28].

2.4.2. Flight Parameters

Data acquisition took place on 8 July 2018 in the Pamfila area. Before data acquisition, the UAS toolbox was used to predict the optimal flight time [29]. The toolbox automates a protocol that summarizes the parameters affecting the reliability and quality of UAS data acquisition over the marine environment. Each preprogrammed acquisition flight used 85% forward (in-track) overlap and 80% side-lap. The UAS flew at a height of 120 m above sea level (absolute height) at a velocity of 5 m/s. Accordingly, the tc-RGB sensor was set to capture a nadir photograph every 3.28 s and the multispectral sensor every 1 s. The ground resolution obtained was 2.15 cm/pixel for the tc-RGB images and 4.84 cm/pixel for the multispectral images. The ground sampling distance differed between the two sensors because of their different focal lengths, pixel pitches, and sensor sizes. After a quality control inspection, most of the images were selected for further processing. The data acquisition information is summarized in Table 2, and the final orthomosaics obtained from the UAS are shown in Figure 4.
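The link between flying height, ground sampling distance (GSD), forward overlap, speed, and trigger interval follows from standard photogrammetric flight-planning relations: GSD = H·p/f for a nadir image over a flat surface, and the distance flown between exposures is (1 − overlap) times the along-track image footprint. The sketch below uses round, hypothetical sensor values rather than the specifications of the Sony A5100 or the S3P, so it illustrates the relations and is not meant to reproduce the values reported above.

```python
def ground_sampling_distance(height_m, pixel_pitch_m, focal_length_m):
    """Nadir GSD in metres per pixel over a flat surface: H * p / f."""
    return height_m * pixel_pitch_m / focal_length_m


def trigger_interval(gsd_m, pixels_along_track, forward_overlap, speed_m_s):
    """Seconds between exposures that yield the requested forward overlap."""
    footprint_along = gsd_m * pixels_along_track       # ground length covered by one image
    base = (1.0 - forward_overlap) * footprint_along   # distance flown between exposures
    return base / speed_m_s


# Hypothetical sensor: 4 um pixels, 20 mm lens, 4000 pixels along track.
gsd = ground_sampling_distance(100.0, 4e-6, 0.020)
dt = trigger_interval(gsd, 4000, forward_overlap=0.85, speed_m_s=5.0)
print(f"GSD = {gsd * 100:.2f} cm, trigger every {dt:.2f} s")  # GSD = 2.00 cm, trigger every 2.40 s
```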

Prior to the survey missions, 18 georeferenced targets with a black-and-white pattern and dimensions of 40 × 40 cm were placed along the coastal zone of the study area as ground control points (GCPs). The GCP coordinates were measured in the Hellenic geodetic system using a real-time kinematic method, yielding a total root mean square error (RMSE) of 0.244 cm.

2.5. Methodological Workflow

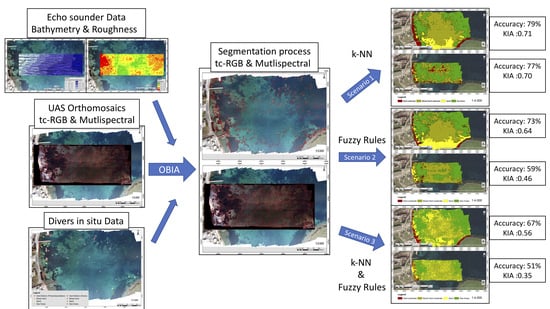

An overview of the methodological workflow is given in Figure 5. The methodology is organized into four steps: (i) data acquisition and creation of the tc-RGB and multispectral orthomosaics using the UAS-SfM (structure-from-motion) framework [10,30], (ii) orthomosaic preprocessing, (iii) object-based image analysis, and (iv) accuracy assessment. After UAS and in situ data acquisition, the divers’ photos passed quality control and were interpreted to identify the class depicted in each. In the preprocessing stage, a land mask was applied to both orthomosaics. OBIA was then performed, starting with segmentation into objects and followed by the classification of the benthic substrates of the study area. The classification was implemented following three scenarios: the k-nearest neighbor (k-NN) algorithm and fuzzy rules were applied as classifiers in the first and second scenarios, respectively, while both fuzzy rules and k-NN were applied in the third scenario. The in situ data were split into two nonoverlapping datasets: one for training and one for accuracy assessment.

2.6. Orthomosaic Creation

Structure-from-motion (SfM) photogrammetry applied to images captured from UAS platforms is increasingly used for a wide range of applications. SfM is a photogrammetric technique that creates two- and three-dimensional visualizations from two-dimensional image sequences [31,32]. It is one of the most widely used methods in the computer vision field and consists of a series of algorithms that detect common features in overlapping images and convert them into three-dimensional information. In this study, Agisoft PhotoScan 1.4.1 [33] was used, since it automates the SfM process in a user-friendly interface with a concrete workflow [32,34,35,36,37].
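The step in which matched image features are turned into three-dimensional information can be illustrated by linear (DLT) triangulation of a single point observed in two images. This is only one ingredient of SfM: a full pipeline such as the one in PhotoScan also detects and matches features and estimates the camera poses (e.g., by bundle adjustment), whereas here the projection matrices are simply assumed known. The camera parameters below are hypothetical.

```python
import numpy as np


def triangulate(P1, P2, x1, x2):
    """Linear (DLT) triangulation of one point seen in two images.

    P1, P2 are 3x4 projection matrices; x1, x2 are the matched pixel
    coordinates (u, v) of the same feature in each image.
    """
    A = np.vstack([
        x1[0] * P1[2] - P1[0],
        x1[1] * P1[2] - P1[1],
        x2[0] * P2[2] - P2[0],
        x2[1] * P2[2] - P2[1],
    ])
    _, _, vt = np.linalg.svd(A)
    X = vt[-1]                  # homogeneous least-squares solution of A X = 0
    return X[:3] / X[3]


def project(P, X):
    """Project a 3D point with a 3x4 camera matrix to pixel coordinates."""
    x = P @ np.append(X, 1.0)
    return x[:2] / x[2]


# Hypothetical intrinsics and two cameras 10 m apart along track,
# expressed in the first camera's frame (Z axis pointing at the scene).
K = np.array([[1000.0, 0.0, 500.0],
              [0.0, 1000.0, 500.0],
              [0.0, 0.0, 1.0]])
P1 = K @ np.hstack([np.eye(3), np.zeros((3, 1))])
P2 = K @ np.hstack([np.eye(3), np.array([[-10.0], [0.0], [0.0]])])

X_true = np.array([3.0, -2.0, 120.0])   # a point 120 m in front of the cameras
X_est = triangulate(P1, P2, project(P1, X_true), project(P2, X_true))
print(np.round(X_est, 6))
```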

The tc-RGB orthomosaic was georeferenced using the 18 GCPs, which resulted in an RMSE of 1.56 cm. This accuracy met the authors’ requirements for creating highly detailed maps. The S3P multispectral orthomosaic was georeferenced using the georeferenced tc-RGB orthomosaic as a base map [38]. The two georeferenced orthomosaics were then inter-compared to ensure the best match of common characteristic reference points. The final end-products were georeferenced orthomosaics from (i) the S3P, with coastal (450 nm), blue (500 nm), green (550 nm), and NIR (850 nm) bands, and (ii) the Sony A5100, in true-color RGB. The pixel dimensions of the SfM derivatives are presented in Table 3.
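The text does not state which transformation model was used to georeference the S3P mosaic on the tc-RGB base map. Assuming a first-order (affine) transformation fitted to tie points by least squares, the registration and the total RMSE typically reported for control points can be sketched as follows; the tie-point coordinates are synthetic.

```python
import numpy as np


def fit_affine(src, dst):
    """Least-squares 2D affine transform mapping src (N, 2) onto dst (N, 2).

    Returns a (3, 2) matrix M such that [x, y, 1] @ M = [X, Y].
    Needs at least three non-collinear tie points.
    """
    A = np.column_stack([src, np.ones(len(src))])
    M, *_ = np.linalg.lstsq(A, dst, rcond=None)
    return M


def apply_affine(M, pts):
    return np.column_stack([pts, np.ones(len(pts))]) @ M


def rmse(pred, ref):
    """Total planimetric RMSE: sqrt(mean(dx^2 + dy^2))."""
    return float(np.sqrt(np.mean(np.sum((pred - ref) ** 2, axis=1))))


# Synthetic tie points: pixel positions in the multispectral mosaic (src)
# and the matching coordinates read from the tc-RGB base map (dst).
M_true = np.array([[2.0, -0.1],
                   [0.1, 2.0],
                   [5.0, 10.0]])
src = np.array([[0, 0], [100, 0], [0, 100], [100, 100], [50, 30]], dtype=float)
dst = apply_affine(M_true, src)
M = fit_affine(src, dst)
print(rmse(apply_affine(M, src), dst))   # ~0 for noise-free tie points
```

With real tie points the residual RMSE is non-zero and is the figure usually reported; holding out some points as independent checks gives a less optimistic estimate.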

2.6.1. Orthomosaic Preprocessing

Before preprocessing, the divers’ in situ data were interpreted, and the geolocation accuracy of the two orthomosaics was checked. The orthomosaics were then land-masked so that information was extracted solely from sea pixels. The land mask was created by digitizing the coastline as a vector in shapefile format using ArcMap 10.3.1 software [25].
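Applying a digitized coastline as a land mask amounts to rasterizing the land polygon and discarding the pixels inside it. A minimal NumPy sketch, assuming the polygon vertices are already expressed in the pixel coordinates (column, row) of the orthomosaic and using the even-odd ray-casting rule on pixel centres, is shown below; the function names and the toy tile are hypothetical, and this is not the ArcMap workflow used in the study.

```python
import numpy as np


def polygon_mask(shape, polygon):
    """Boolean mask of the pixels whose centres lie inside `polygon`.

    shape: (rows, cols) of the raster; polygon: list of (col, row) vertices
    in pixel coordinates. Uses the even-odd (ray-casting) rule.
    """
    rows, cols = np.mgrid[0:shape[0], 0:shape[1]]
    px, py = cols + 0.5, rows + 0.5                 # pixel centres
    inside = np.zeros(shape, dtype=bool)
    n = len(polygon)
    for i in range(n):
        x1, y1 = polygon[i]
        x2, y2 = polygon[(i + 1) % n]
        crosses = (y1 > py) != (y2 > py)            # edge straddles the horizontal ray
        with np.errstate(divide="ignore", invalid="ignore"):
            x_at = x1 + (py - y1) * (x2 - x1) / (y2 - y1)  # where the edge meets the ray
        inside ^= crosses & (px < x_at)             # toggle once per crossing to the right
    return inside


def apply_land_mask(image, land_polygon, nodata=np.nan):
    """Return a float copy of `image` with land pixels set to `nodata`."""
    masked = image.astype(float)                    # astype returns a copy
    masked[polygon_mask(image.shape[:2], land_polygon)] = nodata
    return masked


# Hypothetical 4 x 4 three-band tile whose left half is land.
tile = np.ones((4, 4, 3))
land = [(0, 0), (2, 0), (2, 4), (0, 4)]
sea_only = apply_land_mask(tile, land)
```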

2.7. Object-Based Image Analysis (OBIA)

OBIA required three steps: (i) segmentation; (ii) definition of the classes to be mapped; and (iii) selection of the classifier, i.e., the classification algorithm that assigns each segment to a class.

The first step of OBIA is to create objects from the orthomosaics through segmentation. The orthomosaics were segmented with the multiresolution segmentation algorithm in eCognition software [39] (Figure 6). The segmentation produces meaningful objects whose size and shape are governed by the scale parameter, the image layer weights, and the composition of the homogeneity criterion [40]. The values of these parameters were determined empirically, based on the expertise of the image interpreter. For the tc-RGB orthomosaic, the scale, shape, and compactness parameters were set to 100, 0.1, and 0.9, respectively; for the multispectral orthomosaic, they were set to 15, 0.1, and 0.9.
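The role of the scale, shape, and compactness parameters can be made concrete with the region-merging criterion usually attributed to Baatz and Schäpe (2000), on which multiresolution segmentation is based. Two adjacent objects are merged when the increase in heterogeneity f caused by the merge stays below the square of the scale parameter; f weights a color term by (1 − shape) and a shape term by shape, and the shape term splits into compactness and smoothness. The sketch below follows that textbook formulation, assuming the statistics of the merged object are already known; the defaults mirror the shape and compactness values reported above, and eCognition’s internal implementation may differ in detail.

```python
import math
from dataclasses import dataclass


@dataclass
class ObjectStats:
    n: int                  # number of pixels in the object
    std: list               # standard deviation of each image layer
    perimeter: float        # border length l
    bbox_perimeter: float   # perimeter b of the object's bounding box


def merge_cost(o1, o2, merged, layer_weights, shape=0.1, compactness=0.9):
    """Increase in heterogeneity f caused by merging o1 and o2 into `merged`."""
    # Color term: weighted increase of the size-weighted standard deviation.
    h_color = sum(
        w * (merged.n * sm - (o1.n * s1 + o2.n * s2))
        for w, sm, s1, s2 in zip(layer_weights, merged.std, o1.std, o2.std)
    )

    def cmpct(o):   # n * l / sqrt(n)
        return o.n * o.perimeter / math.sqrt(o.n)

    def smooth(o):  # n * l / b
        return o.n * o.perimeter / o.bbox_perimeter

    h_cmpct = cmpct(merged) - (cmpct(o1) + cmpct(o2))
    h_smooth = smooth(merged) - (smooth(o1) + smooth(o2))
    h_shape = compactness * h_cmpct + (1.0 - compactness) * h_smooth
    return (1.0 - shape) * h_color + shape * h_shape


def should_merge(cost, scale):
    """Objects are fused only while the cost stays below scale squared."""
    return cost < scale ** 2


# Two hypothetical 2 x 2 objects fused into a 2 x 4 object (one layer).
a = ObjectStats(n=4, std=[1.0], perimeter=8.0, bbox_perimeter=8.0)
b = ObjectStats(n=4, std=[1.0], perimeter=8.0, bbox_perimeter=8.0)
ab = ObjectStats(n=8, std=[1.5], perimeter=12.0, bbox_perimeter=12.0)
f = merge_cost(a, b, ab, layer_weights=[1.0])
print(round(f, 4), should_merge(f, scale=100))   # 3.7747 True
```

A larger scale parameter raises the threshold and lets objects keep growing, which is consistent with the much smaller scale chosen for the coarser multispectral mosaic.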

In the classification step, two algorithms available in eCognition® were selected: the k-nearest neighbor (k-NN) algorithm and fuzzy classification. The k-NN algorithm classifies image objects in a chosen feature space using samples that belong to the preselected categories. Once a representative set of samples is declared, each object is assigned to a class according to the similarity of its band values to those of the training objects among its k nearest neighbors. Thus, each segment is assigned a value of 1 or 0 for each class, expressing whether or not the object belongs to that class. In fuzzy classification, instead of this binary decision, the degree to which an object belongs to each class is calculated using membership functions. Class boundaries are no longer defined by crisp thresholds; instead, membership functions are defined over the feature values, so that every parameter value can be assigned to a class with some degree of membership [41,42]. The k-NN algorithm was selected as a well-established classification algorithm (fast to deploy and requiring relatively few samples), and fuzzy logic was selected to add specific knowledge derived from the training areas into the classification.
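Object-level k-NN classification can be sketched as follows, with each segment described by its mean band values. The choice of k, the Euclidean distance, and the z-score standardization of the feature space are assumptions for illustration (the study does not report them), and the training values are made up.

```python
from collections import Counter

import numpy as np


def knn_classify(train_X, train_y, X, k=1, standardize=True):
    """Assign each object the majority label among its k nearest training objects.

    train_X: (n_train, n_features) features of the training segments,
             e.g. their mean band values; train_y: their class labels;
    X:       (n_objects, n_features) features of the segments to classify.
    """
    train_X = np.asarray(train_X, dtype=float)
    X = np.asarray(X, dtype=float)
    if standardize:                     # z-score so that no band dominates the distance
        mu, sd = train_X.mean(axis=0), train_X.std(axis=0)
        sd[sd == 0] = 1.0
        train_X, X = (train_X - mu) / sd, (X - mu) / sd
    # Euclidean distance from every object to every training sample.
    d = np.linalg.norm(X[:, None, :] - train_X[None, :, :], axis=2)
    nearest = np.argsort(d, axis=1)[:, :k]
    labels = []
    for idx in nearest:
        votes = Counter(train_y[i] for i in idx)
        # Ties go to the label seen first, i.e. the closest sample.
        labels.append(votes.most_common(1)[0][0])
    return labels


# Hypothetical mean RGB values of training segments and of new segments.
train_X = [[200, 190, 150], [195, 185, 145],
           [40, 70, 60], [45, 75, 65],
           [90, 90, 80], [95, 92, 82]]
train_y = ["sand", "sand", "seagrass", "seagrass", "hard substrate", "hard substrate"]
segments = [[198, 188, 148], [42, 72, 62], [92, 91, 81]]
print(knn_classify(train_X, train_y, segments, k=3))
```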

2.8. Classification Scenarios and Accuracy Assessment

The classification step was performed on the sub-decimeter UAS orthomosaics using three different scenarios, in order to identify the best classifier for marine habitat mapping in complex coastal areas. In the first and second scenarios, the k-nearest neighbor algorithm and fuzzy rules were applied as classifiers, respectively. In the third scenario, fuzzy rules were applied to the echo sounder data to create samples, and the classification was performed using k-NN.

In the first scenario, a total of 60 segments (15 per class) were selected as training samples based on the divers’ underwater images. Each segment of the orthomosaic was classified into one of the four predefined classes using the k-NN algorithm, and segments of the same class were merged into a single object. The resulting classification was used to create the final habitat map. This procedure was followed for both the tc-RGB and multispectral orthomosaics.

In the second scenario, the same training samples as in the first scenario were used to define and apply fuzzy rules. The appropriate fuzzy expression for each class was created after examining the relationships between the classes in terms of the mean segment values of the three image bands (tc-RGB). The mean values of the three bands were then selected as inputs, and the logical operators “AND” and “OR” were used where necessary. The segments were classified using the fuzzy expressions for all classes, and objects of the same class were merged into a single object. The results were then exported as one polygon vector shapefile. As in the first scenario, this process was applied to both the tc-RGB and multispectral orthomosaics.
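Fuzzy rules of the kind described above can be sketched with trapezoidal membership functions combined by the usual fuzzy operators, AND as the minimum and OR as the maximum of the memberships, with each segment assigned to the class of highest membership. All breakpoints, the rule set, and the minimum-membership threshold below are hypothetical; the actual expressions used in the study are not given in the text.

```python
def trapezoid(x, a, b, c, d):
    """Trapezoidal membership: 0 outside (a, d), 1 on [b, c], linear in between."""
    if x <= a or x >= d:
        return 0.0
    if b <= x <= c:
        return 1.0
    if x < b:
        return (x - a) / (b - a)
    return (d - x) / (d - c)


def fuzzy_and(*m):
    return min(m)   # AND: minimum of memberships


def fuzzy_or(*m):
    return max(m)   # OR: maximum of memberships


# Hypothetical rules on the mean red, green, blue values (0-255) of a segment.
RULES = {
    "sand": lambda r, g, b: fuzzy_and(trapezoid(r, 140, 170, 255, 256),
                                      trapezoid(b, 110, 140, 255, 256)),
    "seagrass": lambda r, g, b: fuzzy_and(trapezoid(r, -1, 0, 50, 80),
                                          trapezoid(g, -1, 0, 80, 110)),
    "hard substrate": lambda r, g, b: trapezoid(r, 60, 80, 110, 130),
    "mixed hard substrate": lambda r, g, b: fuzzy_or(trapezoid(r, 100, 120, 140, 160),
                                                     trapezoid(g, 100, 115, 130, 150)),
}


def classify(mean_rgb, rules=RULES, min_membership=0.1):
    """Return (class, membership) of highest membership, or 'unclassified'."""
    r, g, b = mean_rgb
    scores = {cls: rule(r, g, b) for cls, rule in rules.items()}
    best = max(scores, key=scores.get)
    label = best if scores[best] >= min_membership else "unclassified"
    return label, scores[best]


print(classify((200, 190, 160)))   # ('sand', 1.0)
```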

Finally, the third scenario was designed with two objectives: (i) to examine the usefulness of the echo sounder roughness information for the classification procedure and (ii) to compare the efficiency of roughness against photointerpretation of the underwater images for creating training samples. More specifically, a new training dataset was created from the echo sounder’s roughness information. In total, 3028 roughness points were derived from the echo sounder dataset, and fuzzy rules were applied to 90% of the points (2724) to create training samples for the classification classes. The k-NN algorithm was then applied to the segmentation results to compute the final classification into the four classes. Because the small research vessel could not, for safety reasons, access depths of less than 1.5 m, samples were added manually where necessary.
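The division of the roughness points into training and validation subsets can be sketched as a per-class random draw. The text states that the points held out for validation are equally distributed among the four classes; the hypothetical function below follows that reading by taking a fixed number of validation points from every class (for 304 points over four classes, 76 per class) and keeping the rest for training. The labels and seed are illustrative only.

```python
import random
from collections import defaultdict


def split_per_class(labels, test_per_class, seed=0):
    """Randomly hold out `test_per_class` points of every class for validation.

    labels: class label of each point; returns (train_idx, test_idx), sorted.
    """
    rng = random.Random(seed)            # fixed seed for a reproducible split
    by_class = defaultdict(list)
    for i, label in enumerate(labels):
        by_class[label].append(i)
    train, test = [], []
    for label, idx in by_class.items():
        if len(idx) < test_per_class:
            raise ValueError(f"class {label!r} has only {len(idx)} points")
        rng.shuffle(idx)
        test.extend(idx[:test_per_class])
        train.extend(idx[test_per_class:])
    return sorted(train), sorted(test)


# Hypothetical labels: 100 points per class.
classes = ["hard substrate", "sand", "seagrass", "mixed hard substrate"]
labels = [c for c in classes for _ in range(100)]
train_idx, test_idx = split_per_class(labels, test_per_class=10)
```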

Accuracy assessment quantifies how closely the produced map agrees with actual field conditions. In this study, we created a validation dataset that did not overlap with the calibration dataset. In total, 120 points were generated using the geolocated underwater images (in situ divers’ data) and an expert’s photointerpretation. Initially, a point vector file was created by locating the 33 underwater images on the tc-RGB orthomosaic. Because of the small number of points produced from the in situ data, the dataset was densified through photointerpretation of the high-resolution tc-RGB orthomosaic by an expert. In total, 87 points were added, so the final point vector file contained 120 points (30 per class). In Figure 7, all points are colored according to their assigned class, with divers’ points and photointerpretation points depicted as circles and stars, respectively. The 120 accuracy assessment points were first assigned to their corresponding segments (i.e., forming 120 segments), and the total number of pixels forming these segments was used as the accuracy assessment dataset for all classifications. In the third scenario, 10% of the roughness points (304 points), equally distributed among the four classes, were also used as accuracy assessment data. The error matrices created for all scenarios provided the user’s and producer’s accuracy, the overall accuracy, and the Kappa coefficient (Table 4, Table 5, Table 6, Table 7, Table 8, and Table 9).
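The metrics reported in the error matrices follow standard definitions. The sketch below computes them from a confusion matrix, assuming rows hold the mapped classes and columns the reference classes; kappa is Cohen’s coefficient, which compares the observed agreement with the agreement expected by chance from the row and column totals. The example matrix is synthetic.

```python
import numpy as np


def accuracy_report(cm):
    """cm[i, j] = number of samples mapped as class i whose reference class is j."""
    cm = np.asarray(cm, dtype=float)
    total = cm.sum()
    diag = np.diag(cm)
    overall = diag.sum() / total
    users = diag / cm.sum(axis=1)        # per mapped class (row): 1 - commission error
    producers = diag / cm.sum(axis=0)    # per reference class (column): 1 - omission error
    expected = (cm.sum(axis=1) * cm.sum(axis=0)).sum() / total ** 2  # chance agreement
    kappa = (overall - expected) / (1.0 - expected)
    return overall, users, producers, kappa


cm = [[50, 10],
      [5, 35]]
overall, users, producers, kappa = accuracy_report(cm)
print(f"OA = {overall:.3f}, kappa = {kappa:.3f}")  # OA = 0.850, kappa = 0.694
```

When pixels rather than points are counted, as in this study, the same formulas apply with pixel counts in the matrix cells.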

4. Discussion and Conclusions

In this work, we have shown that high-resolution, high-accuracy UAS aerial photographs, in conjunction with OBIA, can produce high-quality habitat maps. We demonstrated that habitat mapping information can be extracted automatically from sub-decimeter true-color RGB images acquired from UAS. High-resolution classification maps produced from UAS orthomosaics using the OBIA approach enable the identification and measurement of habitat classes (sand, hard substrate, seagrass, and mixed hard substrate) in the coastal zone over the entire extent of the mapped area. The detailed geoinformation produced provides scientists with valuable information on the current state of the habitats, i.e., the environmental state of sensitive coastal areas. Moreover, the derived data products enable in-depth analysis and, therefore, the detection of changes caused by anthropogenic interventions and other factors.

The purpose of this paper was twofold: (a) to compare two types of orthomosaics, the tc-RGB and the multispectral, captured over a coastal area using OBIA with different classifiers to map the selected classes and (b) to examine the usefulness of the bathymetry and the roughness information derived from the echo sounder as training data to the UAS-OBIA methodology for marine habitat mapping.

From the comparison of the classification results, it can be concluded that the tc-RGB orthomosaic produces more valuable and robust results than the multispectral one. This is attributed to the specifications of the multispectral imager. More specifically, the multispectral sensor records data in four discrete bands and uses a global shutter; as a result, it captures photos in a shorter time than the tc-RGB camera. The exposure time is therefore shorter in the multispectral imager (modified SlantRange) than in the true-color RGB sensor (Sony A5100), so less light energy reaches the sensor. In addition, because of the quality of the initial images, the multispectral orthomosaic was not uniform and presented discrete irregularities. These anomalies arose from varying illumination conditions and sun glint, and they lower the accuracy of the multispectral orthomosaic classification. To eliminate such anomalies, it is crucial to follow a specific UAS flight protocol before each data acquisition, as presented by Doukari et al. [29,43]. It should be noted that the UAS data acquisition procedure works well over land, achieving a high level of accuracy. However, it does not perform adequately over moving water bodies, especially large ones in which no land appears in the images.

Three scenarios were examined for the classification of marine habitats using different classifiers: the k-nearest neighbor algorithm and fuzzy rules in the first and second scenarios, respectively, and a combination of fuzzy rules and the k-NN algorithm in the third scenario. The evaluation of the three scenarios’ results shows that the tc-RGB data provide better accuracy than the multispectral data in detecting and classifying marine habitats when the k-NN classifier is applied. The overall accuracy using the k-NN classifier was 79%, and the Kappa index of agreement (KIA) was 0.71. These results illustrate the effectiveness of the proposed approach when applied to sub-decimeter resolution UAS data for marine habitat mapping in complex coastal areas.

Furthermore, this study demonstrated that the roughness information derived from the echo sounder did not increase the final classification accuracy. Although the echo sounder roughness can be used to discriminate classes and produce maps of the substrates, it cannot be used directly as training data for classifying UAS aerial data. Based on the echo sounder signal, a proper roughness analysis should be initially performed to produce habitat maps, which in later stages could be used as training and validation data for the UAS data.

The results showed that UAS data revealed the complexity of the sea bottom to a large extent in relatively shallow areas, providing accurate information at high spatial resolution and thus permitting habitat mapping in great detail. The produced habitat vectors are ideal as reference data for studies using satellite data of lower spatial resolution. Since sub-decimeter UAS imagery will be increasingly available in the future, it could play an important role in habitat mapping, serving the needs of various studies in the coastal environment. Finally, combining OBIA classification with sub-decimeter UAS orthomosaics provides an accurate methodology for ecological applications. This approach is capable of recording the high spatiotemporal variability needed for habitat mapping, which has become a prime necessity for environmental planning and management.

UAS are increasingly used in habitat mapping [7,12,16,17,18,44], since they provide high-resolution data over inaccessible areas at low cost and with high temporal repeatability [10,45,46]. The use of a multispectral camera with wavebands similar to those of the Sentinel-2 satellite was examined for the first time in the present study. Results indicated that the tc-RGB and multispectral orthomosaics perform similarly and that the multispectral camera offers no significant advantage. This can be explained in two ways: (a) the multispectral camera is designed for land measurements, and its inherent optical properties cannot distinguish small radiometric differences in water; and (b) the multispectral orthomosaic was problematic because of the large differences between the individual multispectral images, a result of the large overlaps between them. Over sea areas, the multispectral imager should be flown with small overlaps and should acquire data with slower shutter speeds, i.e., longer exposure times. Additionally, the results show that echo sounder roughness should not be used to train classification algorithms: the overall accuracy of the third scenario for both orthomosaics clearly indicates that bottom roughness is inadequate as a training dataset.

Moving forward, the authors believe that the rapidly developing field of lightweight drones, together with the miniaturization and rapid advance of true-color RGB, multispectral, and hyperspectral sensors for close-range remote sensing, will soon allow more detailed mapping of marine habitats based on spectral signatures.