Applying Satellite Data Assimilation to Wind Simulation of Coastal Wind Farms in Guangdong, China

Abstract

1. Introduction

2. Materials and Methods

2.1. Wind Observation Data

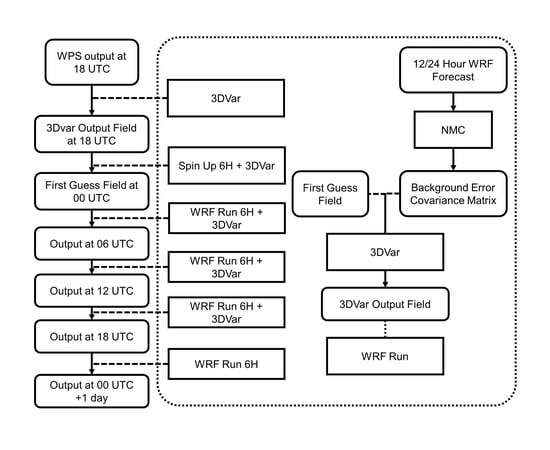

2.2. Numerical Model and Data Assimilation

2.3. Results Measurements

2.3.1. Root-Mean-Square Error (RMSE)

2.3.2. Index of Agreement (IA)

2.3.3. Pearson Correlation Coefficient (R)

2.3.4. Weibull Distribution of Wind Speed

3. Results

3.1. The Wind Distribution Results

3.2. The RMSE, IA, and R Results

3.3. Wind Speed Simulation Results Analysis

3.4. Data Assimilation Results Analysis

4. Discussion

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

Appendix A

| Tower 1 | Tower 2 | Tower 3 | Tower 4 | Tower 5 | Tower 6 | Tower 7 | ||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Test 1 | Test 2 | Test 3 | Test 1 | Test 2 | Test 3 | Test 1 | Test 2 | Test 3 | Test 1 | Test 2 | Test 3 | Test 1 | Test 2 | Test 3 | Test 1 | Test 2 | Test 3 | Test 1 | Test 2 | Test 3 | ||

| Jan. | RMSE (m/s) | 2.80 | 2.33 | 2.47 | 3.23 | 2.90 | 3.10 | 3.22 | 2.98 | 3.08 | 3.38 | 3.22 | 3.28 | |||||||||

| IA | 0.65 | 0.77 | 0.73 | 0.55 | 0.59 | 0.57 | 0.66 | 0.70 | 0.68 | 0.60 | 0.64 | 0.63 | ||||||||||

| R | 0.64 * | 0.67 * | 0.66 * | 0.54 * | 0.50 * | 0.52 * | 0.54 * | 0.69 * | 0.62 * | 0.61 * | 0.60 * | 0.61 * | ||||||||||

| Feb. | RMSE (m/s) | 2.62 | 2.66 | 2.63 | 3.42 | 3.22 | 3.33 | 3.66 | 3.45 | 3.52 | 2.87 | 2.87 | 2.87 | |||||||||

| IA | 0.66 | 0.75 | 0.72 | 0.54 | 0.57 | 0.55 | 0.58 | 0.60 | 0.59 | 0.68 | 0.72 | 0.71 | ||||||||||

| R | 0.60 * | 0.57 * | 0.59 * | 0.41 * | 0.43 * | 0.42 * | 0.36 * | 0.44 * | 0.42 * | 0.68 * | 0.64 * | 0.66 * | ||||||||||

| Mar. | RMSE (m/s) | 3.64 | 2.96 | 3.24 | 4.13 | 3.51 | 3.70 | 2.63 | 2.87 | 2.72 | 2.73 | 2.72 | 2.72 | 4.21 | 3.70 | 3.95 | 3.84 | 3.19 | 3.40 | 2.71 | 2.74 | 2.71 |

| IA | 0.58 | 0.58 | 0.58 | 0.65 | 0.70 | 0.69 | 0.62 | 0.68 | 0.67 | 0.59 | 0.72 | 0.67 | 0.47 | 0.51 | 0.49 | 0.58 | 0.64 | 0.62 | 0.66 | 0.72 | 0.65 | |

| R | 0.36 * | 0.39 * | 0.38 * | 0.58 * | 0.72 * | 0.68 * | 0.41 * | 0.55 * | 0.50 * | 0.49 * | 0.54 * | 0.52 * | 0.24 * | 0.37 * | 0.30 * | 0.48 * | 0.55 * | 0.52 * | 0.59 * | 0.59 * | 0.59 * | |

| Apr. | RMSE (m/s) | 3.01 | 2.31 | 2.60 | 3.32 | 2.52 | 2.69 | 3.90 | 3.33 | 3.47 | 3.37 | 2.83 | 2.98 | 3.98 | 3.09 | 3.56 | 3.36 | 2.76 | 3.00 | 2.50 | 2.33 | 2.40 |

| IA | 0.46 | 0.62 | 0.56 | 0.46 | 0.63 | 0.59 | 0.57 | 0.63 | 0.62 | 0.59 | 0.62 | 0.61 | 0.44 | 0.57 | 0.50 | 0.51 | 0.63 | 0.58 | 0.58 | 0.58 | 0.58 | |

| R | 0.13 * | 0.40 * | 0.30 * | 0.15 * | 0.44 * | 0.37 * | 0.39 * | 0.54 * | 0.49 * | 0.37 * | 0.50 * | 0.46 * | 0.16 * | 0.48 * | 0.29 * | 0.24 * | 0.43 * | 0.35 * | 0.40 * | 0.33 * | 0.38 * | |

| May | RMSE (m/s) | 2.58 | 2.24 | 2.38 | 2.29 | 1.91 | 2.02 | 2.86 | 2.37 | 2.53 | 2.82 | 2.01 | 2.23 | 3.28 | 2.72 | 3.00 | 2.83 | 2.46 | 2.58 | 1.99 | 2.00 | 1.99 |

| IA | 0.56 | 0.64 | 0.61 | 0.49 | 0.65 | 0.60 | 0.51 | 0.63 | 0.60 | 0.48 | 0.69 | 0.63 | 0.47 | 0.58 | 0.52 | 0.48 | 0.59 | 0.54 | 0.64 | 0.58 | 0.65 | |

| R | 0.31 * | 0.48 * | 0.42 * | 0.17 * | 0.41 * | 0.35 * | 0.23 * | 0.44 * | 0.37 * | 0.13 * | 0.54 * | 0.44 * | 0.22 * | 0.50 * | 0.35 * | 0.17 * | 0.39 * | 0.33 * | 0.46 * | 0.33 * | 0.45 * | |

| Jun. | RMSE (m/s) | 2.29 | 2.06 | 2.15 | 2.31 | 2.02 | 2.09 | 2.84 | 2.56 | 2.64 | 2.53 | 2.04 | 2.20 | 3.39 | 2.87 | 3.16 | 3.34 | 3.17 | 3.23 | 2.32 | 2.12 | 2.18 |

| IA | 0.60 | 0.63 | 0.62 | 0.61 | 0.67 | 0.65 | 0.70 | 0.70 | 0.70 | 0.65 | 0.72 | 0.70 | 0.55 | 0.59 | 0.57 | 0.62 | 0.61 | 0.61 | 0.59 | 0.62 | 0.61 | |

| R | 0.36 * | 0.37 * | 0.37 * | 0.34 * | 0.45 * | 0.42 * | 0.58 * | 0.57 * | 0.58 * | 0.41 * | 0.56 * | 0.50 * | 0.36 * | 0.46 * | 0.41 * | 0.52 * | 0.49 * | 0.51 * | 0.48 * | 0.44 * | 0.47 * | |

| Jul. | RMSE (m/s) | 2.38 | 1.84 | 2.05 | 2.07 | 1.49 | 1.65 | 3.44 | 2.43 | 2.69 | 3.07 | 2.19 | 2.43 | 3.61 | 2.85 | 3.31 | 3.88 | 2.95 | 3.34 | 1.91 | 1.80 | 1.83 |

| IA | 0.65 | 0.77 | 0.72 | 0.59 | 0.76 | 0.71 | 0.58 | 0.76 | 0.70 | 0.47 | 0.57 | 0.53 | 0.47 | 0.60 | 0.53 | 0.38 | 0.47 | 0.44 | 0.69 | 0.67 | 0.68 | |

| R | 0.44 * | 0.66 * | 0.58 * | 0.32 * | 0.61 * | 0.54 * | 0.47 * | 0.74 * | 0.65 * | 0.26 * | 0.46 * | 0.40 * | 0.22 * | 0.48 * | 0.33 * | 0.03 | 0.24 * | 0.16 * | 0.63 * | 0.47 * | 0.64 * | |

| Aug. | RMSE (m/s) | 1.81 | 1.64 | 1.70 | 2.07 | 1.60 | 1.75 | 2.73 | 2.37 | 2.46 | 3.00 | 2.76 | 2.90 | 2.02 | 1.90 | 1.94 | ||||||

| IA | 0.82 | 0.86 | 0.85 | 0.73 | 0.86 | 0.82 | 0.65 | 0.75 | 0.71 | 0.64 | 0.71 | 0.67 | 0.61 | 0.63 | 0.62 | |||||||

| R | 0.70 * | 0.78 * | 0.75 * | 0.57 * | 0.77 * | 0.72 * | 0.51 * | 0.67 * | 0.63 * | 0.56 * | 0.69 * | 0.62 * | 0.44 * | 0.46 * | 0.45 * | |||||||

| Sept. | RMSE (m/s) | 2.39 | 2.16 | 2.25 | 2.59 | 2.40 | 2.44 | 2.90 | 2.84 | 2.85 | 2.89 | 2.58 | 2.75 | 2.24 | 2.32 | 2.26 | ||||||

| IA | 0.60 | 0.66 | 0.64 | 0.63 | 0.66 | 0.65 | 0.61 | 0.60 | 0.61 | 0.49 | 0.57 | 0.52 | 0.63 | 0.63 | 0.63 | |||||||

| R | 0.39 * | 0.60 * | 0.52 * | 0.51 * | 0.64 * | 0.60 * | 0.48 * | 0.58 * | 0.55 * | 0.20 * | 0.41 * | 0.30 * | 0.53 * | 0.47 * | 0.52 * | |||||||

| Oct. | RMSE (m/s) | 2.64 | 2.22 | 2.40 | 2.90 | 2.39 | 2.50 | 2.92 | 2.67 | 2.74 | 3.07 | 2.70 | 2.92 | 2.51 | 2.14 | 2.26 | ||||||

| IA | 0.62 | 0.75 | 0.70 | 0.55 | 0.71 | 0.68 | 0.60 | 0.69 | 0.67 | 0.50 | 0.64 | 0.55 | 0.56 | 0.68 | 0.63 | |||||||

| R | 0.41 * | 0.72 * | 0.60 * | 0.32 * | 0.64 * | 0.56 * | 0.43 * | 0.68 * | 0.62 * | 0.20 * | 0.54 * | 0.36 * | 0.31 * | 0.53 * | 0.46 * | |||||||

| Nov. | RMSE (m/s) | 3.04 | 2.81 | 2.89 | 3.21 | 2.91 | 3.00 | 4.09 | 3.92 | 3.96 | 3.76 | 3.43 | 3.59 | 2.92 | 3.08 | 2.97 | ||||||

| IA | 0.60 | 0.63 | 0.62 | 0.62 | 0.65 | 0.64 | 0.58 | 0.60 | 0.59 | 0.46 | 0.50 | 0.48 | 0.64 | 0.63 | 0.64 | |||||||

| R | 0.39 * | 0.51 * | 0.46 * | 0.54 * | 0.65 * | 0.62 * | 0.52 * | 0.63 * | 0.60 * | 0.24 * | 0.33 * | 0.29 * | 0.61 * | 0.56 * | 0.61 * | |||||||

| Dec. | RMSE (m/s) | 4.19 | 4.16 | 4.16 | 5.36 | 5.35 | 5.35 | 3.49 | 3.49 | 3.49 | 3.50 | 3.44 | 3.46 | |||||||||

| IA | 0.69 | 0.69 | 0.69 | 0.55 | 0.54 | 0.55 | 0.58 | 0.59 | 0.59 | 0.70 | 0.72 | 0.71 | ||||||||||

| R | 0.74 * | 0.77 * | 0.76 * | 0.56 * | 0.66 * | 0.62 * | 0.51 * | 0.50 * | 0.51 * | 0.69 * | 0.69 * | 0.69 * | ||||||||||

| Tower 1 | Tower 2 | Tower 3 | Tower 4 | Tower 5 | Tower 6 | Tower 7 | |||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Test 1 | Test 2 | Test 3 | Test 1 | Test 2 | Test 3 | Test 1 | Test 2 | Test 3 | Test 1 | Test 2 | Test 3 | Test 1 | Test 2 | Test 3 | Test 1 | Test 2 | Test 3 | Test 1 | Test 2 | Test 3 | |||

| Jan. | RMSE (m/s) | 4.37 | 4.48 | 4.41 | 2.68 | 2.67 | 2.67 | 4.14 | 4.39 | 4.27 | 3.08 | 2.53 | 2.74 | 3.60 | 3.64 | 3.61 | |||||||

| IA | 0.72 | 0.72 | 0.72 | 0.78 | 0.73 | 0.76 | 0.33 | 0.40 | 0.35 | 0.69 | 0.77 | 0.75 | 0.69 | 0.67 | 0.68 | ||||||||

| R | 0.77 * | 0.81 * | 0.80 * | 0.68 * | 0.61 * | 0.66 * | 0.15 | 0.50 * | 0.09 | 0.47 * | 0.60 * | 0.55 * | 0.63 * | 0.67 * | 0.67 * | ||||||||

| Feb. | RMSE (m/s) | 3.98 | 3.60 | 3.70 | 3.13 | 2.83 | 2.91 | 3.74 | 3.18 | 3.38 | 3.41 | 3.38 | 3.39 | ||||||||||

| IA | 0.69 | 0.76 | 0.74 | 0.74 | 0.72 | 0.73 | 0.56 | 0.63 | 0.61 | 0.73 | 0.70 | 0.72 | |||||||||||

| R | 0.66 * | 0.69 * | 0.68 * | 0.60 * | 0.60 * | 0.60 * | 0.26 * | 0.35 * | 0.32 * | 0.61 * | 0.63 * | 0.63 * | |||||||||||

| Mar. | RMSE (m/s) | 3.97 | 2.73 | 3.20 | 4.18 | 3.09 | 3.32 | 3.81 | 4.05 | 3.88 | 2.86 | 2.75 | 2.78 | 3.51 | 2.58 | 2.93 | 3.66 | 3.26 | 3.39 | ||||

| IA | 0.60 | 0.66 | 0.63 | 0.65 | 0.76 | 0.73 | 0.68 | 0.70 | 0.69 | 0.67 | 0.77 | 0.73 | 0.62 | 0.73 | 0.70 | 0.70 | 0.68 | 0.69 | |||||

| R | 0.38 * | 0.37 * | 0.37 * | 0.45 * | 0.66 * | 0.60 * | 0.59 * | 0.60 * | 0.60 * | 0.50 * | 0.59 * | 0.56 * | 0.42 * | 0.53 * | 0.49 * | 0.57 * | 0.60 * | 0.60 * | |||||

| Apr. | RMSE (m/s) | 3.50 | 2.52 | 2.84 | 3.38 | 2.60 | 2.77 | 4.37 | 4.54 | 4.42 | 3.52 | 3.00 | 3.16 | 3.12 | 2.64 | 2.79 | 3.65 | 2.94 | 3.23 | ||||

| IA | 0.50 | 0.71 | 0.63 | 0.51 | 0.70 | 0.64 | 0.61 | 0.64 | 0.63 | 0.62 | 0.68 | 0.66 | 0.59 | 0.71 | 0.67 | 0.60 | 0.72 | 0.68 | |||||

| R | 0.20 * | 0.51 * | 0.40 * | 0.20 * | 0.51 * | 0.42 * | 0.39 * | 0.52 * | 0.48 * | 0.40 * | 0.48 * | 0.46 * | 0.33 * | 0.50 * | 0.44 * | 0.40 * | 0.58 * | 0.51 * | |||||

| May | RMSE (m/s) | 2.58 | 1.96 | 2.23 | 2.89 | 2.45 | 2.55 | 3.25 | 2.26 | 2.52 | 3.01 | 2.37 | 2.59 | 2.93 | 2.48 | 2.61 | 2.72 | 2.14 | 2.31 | ||||

| IA | 0.59 | 0.77 | 0.71 | 0.52 | 0.67 | 0.63 | 0.44 | 0.59 | 0.53 | 0.52 | 0.72 | 0.65 | 0.51 | 0.64 | 0.59 | 0.56 | 0.74 | 0.68 | |||||

| R | 0.34 * | 0.61 * | 0.52 * | 0.24 * | 0.49 * | 0.43 * | 0.13 * | 0.31 * | 0.27 * | 0.21 * | 0.57 * | 0.47 * | 0.21 * | 0.42 * | 0.35 * | 0.28 * | 0.58 * | 0.47 * | |||||

| Jun. | RMSE (m/s) | 2.54 | 2.53 | 2.54 | 2.79 | 2.68 | 2.71 | 2.72 | 2.97 | 2.77 | 2.84 | 2.55 | 2.66 | 3.12 | 2.88 | 2.97 | 2.87 | 2.77 | 2.80 | ||||

| IA | 0.66 | 0.67 | 0.67 | 0.60 | 0.63 | 0.62 | 0.49 | 0.61 | 0.57 | 0.69 | 0.74 | 0.73 | 0.71 | 0.72 | 0.72 | 0.68 | 0.69 | 0.69 | |||||

| R | 0.49 * | 0.48 * | 0.49 * | 0.35 * | 0.43 * | 0.41 * | 0.13 * | 0.37 * | 0.29 * | 0.47 * | 0.57 * | 0.54 * | 0.58 * | 0.57 * | 0.57 * | 0.45 * | 0.52 * | 0.49 * | |||||

| Jul. | RMSE (m/s) | 2.65 | 1.93 | 2.21 | 2.38 | 1.89 | 2.03 | 3.35 | 2.56 | 3.01 | 3.03 | 1.78 | 2.27 | 4.00 | 2.79 | 3.31 | 2.80 | 2.24 | 2.42 | ||||

| IA | 0.69 | 0.83 | 0.78 | 0.59 | 0.73 | 0.69 | 0.62 | 0.87 | 0.79 | 0.50 | 0.68 | 0.62 | 0.40 | 0.49 | 0.45 | 0.70 | 0.80 | 0.77 | |||||

| R | 0.48 * | 0.71 * | 0.63 * | 0.31 * | 0.59 * | 0.52 * | 0.51 * | 0.79 * | 0.72 * | 0.28 * | 0.50 * | 0.44 * | 0.02 | 0.28 * | 0.18 * | 0.52 * | 0.71 * | 0.66 * | |||||

| Aug. | RMSE (m/s) | 2.01 | 1.86 | 1.92 | 2.34 | 1.91 | 2.03 | 3.58 | 2.43 | 2.80 | 2.47 | 2.71 | 2.56 | ||||||||||

| IA | 0.86 | 0.89 | 0.88 | 0.78 | 0.88 | 0.86 | 0.82 | 0.94 | 0.91 | 0.65 | 0.63 | 0.64 | |||||||||||

| R | 0.74 * | 0.80 * | 0.77 * | 0.62 * | 0.79 * | 0.74 * | 0.70 * | 0.89 * | 0.83 * | 0.44 * | 0.44 * | 0.44 * | |||||||||||

| Sept. | RMSE (m/s) | 2.42 | 1.90 | 2.10 | 2.77 | 2.42 | 2.51 | 3.33 | 2.59 | 2.85 | 2.58 | 2.41 | 2.46 | ||||||||||

| IA | 0.65 | 0.77 | 0.73 | 0.65 | 0.72 | 0.70 | 0.59 | 0.69 | 0.66 | 0.71 | 0.73 | 0.73 | |||||||||||

| R | 0.43 * | 0.62 * | 0.54 * | 0.42 * | 0.57 * | 0.53 * | 0.45 * | 0.66 * | 0.60 * | 0.56 * | 0.64 * | 0.61 * | |||||||||||

| Oct. | RMSE (m/s) | 2.74 | 1.77 | 2.14 | 3.28 | 2.35 | 2.60 | 2.74 | 2.40 | 2.52 | 3.23 | 2.63 | 2.82 | ||||||||||

| IA | 0.66 | 0.87 | 0.79 | 0.54 | 0.78 | 0.73 | 0.65 | 0.67 | 0.66 | 0.57 | 0.74 | 0.68 | |||||||||||

| R | 0.44 * | 0.78 * | 0.65 * | 0.24 * | 0.65 * | 0.53 * | 0.48 * | 0.51 * | 0.50 * | 0.32 * | 0.60 * | 0.49 * | |||||||||||

| Nov. | RMSE (m/s) | 3.09 | 2.62 | 2.81 | 2.97 | 2.65 | 2.74 | 3.67 | 3.03 | 3.20 | 3.50 | 3.10 | 3.26 | ||||||||||

| IA | 0.63 | 0.70 | 0.67 | 0.67 | 0.70 | 0.69 | 0.47 | 0.53 | 0.52 | 0.65 | 0.69 | 0.68 | |||||||||||

| R | 0.38 * | 0.50 * | 0.46 * | 0.47 * | 0.56 * | 0.54 * | 0.22 * | 0.26 * | 0.25 * | 0.55 * | 0.63 * | 0.60 * | |||||||||||

| Dec. | RMSE (m/s) | 3.16 | 3.07 | 3.10 | 4.31 | 3.85 | 4.02 | 3.91 | 3.88 | 3.89 | |||||||||||||

| IA | 0.81 | 0.81 | 0.81 | 0.62 | 0.62 | 0.62 | 0.76 | 0.74 | 0.75 | ||||||||||||||

| R | 0.72 * | 0.74 * | 0.73 * | 0.71 * | 0.71 * | 0.71 * | 0.72 * | 0.73 * | 0.73 * | ||||||||||||||

| Tower 1 | Tower 2 | Tower 3 | Tower 4 | Tower 5 | Tower 6 | Tower 7 | |||||||||||||||||

|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|

| Test 1 | Test 2 | Test 3 | Test 1 | Test 2 | Test 3 | Test 1 | Test 2 | Test 3 | Test 1 | Test 2 | Test 3 | Test 1 | Test 2 | Test 3 | Test 1 | Test 2 | Test 3 | Test 1 | Test 2 | Test 3 | |||

| Jan. | RMSE (m/s) | 2.55 | 2.58 | 2.56 | 3.52 | 3.04 | 3.22 | 3.54 | 3.56 | 3.55 | |||||||||||||

| IA | 0.73 | 0.76 | 0.75 | 0.66 | 0.72 | 0.69 | 0.69 | 0.70 | 0.69 | ||||||||||||||

| R | 0.56 * | 0.62 * | 0.60 * | 0.42 * | 0.54 * | 0.49 * | 0.66 * | 0.62 * | 0.66 * | ||||||||||||||

| Feb. | RMSE (m/s) | 3.09 | 2.69 | 2.83 | 4.19 | 3.63 | 3.81 | 3.36 | 3.45 | 3.39 | |||||||||||||

| IA | 0.72 | 0.73 | 0.73 | 0.52 | 0.58 | 0.56 | 0.71 | 0.74 | 0.73 | ||||||||||||||

| R | 0.54 * | 0.56 * | 0.56 * | 0.19 * | 0.28 * | 0.25 * | 0.61 * | 0.59 * | 0.61 * | ||||||||||||||

| Mar. | RMSE (m/s) | 4.09 | 2.82 | 3.33 | 4.78 | 3.35 | 3.77 | 4.07 | 2.80 | 3.21 | 2.88 | 2.88 | 2.88 | 3.64 | 2.77 | 3.08 | 3.86 | 3.27 | 3.47 | ||||

| IA | 0.62 | 0.68 | 0.66 | 0.59 | 0.73 | 0.69 | 0.58 | 0.66 | 0.64 | 0.63 | 0.72 | 0.69 | 0.64 | 0.73 | 0.69 | 0.69 | 0.70 | 0.69 | |||||

| R | 0.42 * | 0.40 * | 0.41 * | 0.35 * | 0.60 * | 0.53 * | 0.37 * | 0.43 * | 0.41 * | 0.39 * | 0.52 * | 0.48 * | 0.41 * | 0.53 * | 0.49 * | 0.55 * | 0.60 * | 0.58 * | |||||

| Apr. | RMSE (m/s) | 4.08 | 2.61 | 3.16 | 3.72 | 2.89 | 3.09 | 3.82 | 2.98 | 3.28 | 3.18 | 3.01 | 3.05 | 2.99 | 2.64 | 2.74 | 3.56 | 3.10 | 3.25 | ||||

| IA | 0.48 | 0.75 | 0.65 | 0.48 | 0.67 | 0.63 | 0.60 | 0.73 | 0.69 | 0.63 | 0.67 | 0.65 | 0.50 | 0.62 | 0.57 | 0.62 | 0.70 | 0.66 | |||||

| R | 0.16 * | 0.57 * | 0.39 * | 0.15 * | 0.46 * | 0.38 * | 0.38 * | 0.55 * | 0.49 * | 0.38 * | 0.46 * | 0.43 * | 0.18 * | 0.35 * | 0.28 * | 0.42 * | 0.52 * | 0.48 * | |||||

| May | RMSE (m/s) | 2.58 | 2.01 | 2.23 | 3.12 | 2.64 | 2.76 | 2.78 | 2.23 | 2.41 | 2.92 | 2.49 | 2.64 | 2.63 | 2.58 | 2.60 | |||||||

| IA | 0.61 | 0.78 | 0.71 | 0.50 | 0.66 | 0.61 | 0.60 | 0.74 | 0.70 | 0.53 | 0.71 | 0.65 | 0.54 | 0.58 | 0.57 | ||||||||

| R | 0.35 * | 0.62 * | 0.53 * | 0.19 * | 0.45 * | 0.37 * | 0.34 * | 0.56 * | 0.48 * | 0.21 * | 0.58 * | 0.48 * | 0.22 * | 0.32 * | 0.29 * | ||||||||

| Jun. | RMSE (m/s) | 2.71 | 2.62 | 2.66 | 3.13 | 3.01 | 3.05 | 2.71 | 2.70 | 2.70 | 2.67 | 2.61 | 2.63 | ||||||||||

| IA | 0.67 | 0.69 | 0.68 | 0.56 | 0.59 | 0.58 | 0.75 | 0.77 | 0.76 | 0.72 | 0.73 | 0.73 | |||||||||||

| R | 0.52 * | 0.51 * | 0.52 * | 0.28 * | 0.37 * | 0.35 * | 0.58 * | 0.61 * | 0.60 * | 0.59 * | 0.54 * | 0.57 * | |||||||||||

| Jul. | RMSE (m/s) | 2.65 | 1.94 | 2.22 | 2.70 | 2.14 | 2.30 | 3.18 | 2.21 | 2.53 | 2.65 | 1.82 | 2.07 | ||||||||||

| IA | 0.71 | 0.84 | 0.79 | 0.55 | 0.71 | 0.67 | 0.68 | 0.84 | 0.79 | 0.54 | 0.69 | 0.64 | |||||||||||

| R | 0.50 * | 0.73 * | 0.65 * | 0.25 * | 0.57 * | 0.49 * | 0.50 * | 0.75 * | 0.67 * | 0.33 * | 0.50 * | 0.44 * | |||||||||||

| Aug. | RMSE (m/s) | 2.09 | 1.93 | 1.99 | 2.51 | 2.15 | 2.23 | 2.56 | 2.18 | 2.28 | |||||||||||||

| IA | 0.85 | 0.89 | 0.88 | 0.79 | 0.87 | 0.84 | 0.79 | 0.86 | 0.84 | ||||||||||||||

| R | 0.73 * | 0.80 * | 0.77 * | 0.63 * | 0.78 * | 0.73 * | 0.65 * | 0.74 * | 0.71 * | ||||||||||||||

| Sept. | RMSE (m/s) | 2.46 | 1.98 | 2.15 | 3.06 | 2.69 | 2.79 | 2.71 | 2.34 | 2.44 | |||||||||||||

| IA | 0.66 | 0.78 | 0.73 | 0.62 | 0.70 | 0.68 | 0.73 | 0.80 | 0.77 | ||||||||||||||

| R | 0.44 * | 0.62 * | 0.55 * | 0.37 * | 0.53 * | 0.49 * | 0.58 * | 0.71 * | 0.67 * | ||||||||||||||

| Oct. | RMSE (m/s) | 2.84 | 1.85 | 2.24 | 3.67 | 2.67 | 2.96 | 3.16 | 2.35 | 2.62 | |||||||||||||

| IA | 0.67 | 0.88 | 0.79 | 0.51 | 0.77 | 0.71 | 0.68 | 0.84 | 0.78 | ||||||||||||||

| R | 0.45 * | 0.79 * | 0.65 * | 0.19 * | 0.62 * | 0.51 * | 0.50 * | 0.75 * | 0.67 * | ||||||||||||||

| Nov. | RMSE (m/s) | 3.18 | 2.72 | 2.90 | 3.30 | 2.98 | 3.05 | 3.56 | 2.97 | 3.14 | |||||||||||||

| IA | 0.63 | 0.70 | 0.67 | 0.63 | 0.66 | 0.65 | 0.67 | 0.75 | 0.73 | ||||||||||||||

| R | 0.37 * | 0.49 * | 0.44 * | 0.39 * | 0.48 * | 0.46 * | 0.50 * | 0.65 * | 0.61 * | ||||||||||||||

| Dec. | RMSE (m/s) | 3.35 | 3.23 | 3.26 | 3.70 | 3.34 | 3.42 | ||||||||||||||||

| IA | 0.79 | 0.79 | 0.79 | 0.59 | 0.69 | 0.66 | |||||||||||||||||

| R | 0.67 * | 0.68 * | 0.68 * | 0.41 * | 0.53 * | 0.48 * | |||||||||||||||||
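
The three scores tabulated above (RMSE, IA, and R) can be computed from paired simulated and observed wind speeds. The following is a minimal sketch, assuming the index of agreement takes Willmott's original form, d = 1 − Σ(P − O)² / Σ(|P − Ō| + |O − Ō|)²; the function names and the four-point sample series are illustrative and do not come from the article.

```python
import math


def rmse(sim, obs):
    """Root-mean-square error between simulated and observed values."""
    return math.sqrt(sum((s - o) ** 2 for s, o in zip(sim, obs)) / len(obs))


def index_of_agreement(sim, obs):
    """Willmott index of agreement (assumed form): 1 means perfect agreement."""
    obs_mean = sum(obs) / len(obs)
    num = sum((s - o) ** 2 for s, o in zip(sim, obs))
    den = sum((abs(s - obs_mean) + abs(o - obs_mean)) ** 2
              for s, o in zip(sim, obs))
    return 1.0 - num / den


def pearson_r(sim, obs):
    """Pearson correlation coefficient between the two series."""
    sim_mean = sum(sim) / len(sim)
    obs_mean = sum(obs) / len(obs)
    cov = sum((s - sim_mean) * (o - obs_mean) for s, o in zip(sim, obs))
    sim_ss = sum((s - sim_mean) ** 2 for s in sim)
    obs_ss = sum((o - obs_mean) ** 2 for o in obs)
    return cov / math.sqrt(sim_ss * obs_ss)


# Illustrative wind speeds in m/s (made-up values, not tower data).
obs = [5.0, 6.0, 7.0, 8.0]
sim = [5.5, 6.5, 6.5, 8.5]

print(rmse(sim, obs))
print(index_of_agreement(sim, obs))
print(pearson_r(sim, obs))
```

Lower RMSE and higher IA and R indicate closer agreement, which is the sense in which the Test 1, Test 2, and Test 3 columns can be compared.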

Appendix B


| Tower | Longitude (°E) | Latitude (°N) | Terrain Height (m) |
|---|---|---|---|
| Tower 1 | 112.304 | 21.768 | 380 |
| Tower 2 | 112.309 | 21.827 | 285 |
| Tower 3 | 112.208 | 21.783 | 320 |
| Tower 4 | 111.985 | 22.144 | 540 |
| Tower 5 | 112.269 | 21.796 | 473 |
| Tower 6 | 112.076 | 22.110 | 758 |
| Tower 7 | 112.334 | 21.844 | 322 |

| Tower | Wind Sensor | Model | Hardware Version | Software Version | Sampling Frequency | Sensor Bias |
|---|---|---|---|---|---|---|
| Tower 1 | NRG | 4280 | 023-022-053 | SDR 6.0.26 | 1 s | |
| Tower 2 | NRG | 4280 | 023-022-053 | SDR 6.0.26 | 1 s | |
| Tower 3 | NRG | 4280 | 023-022-036 | SDR 6.0.26 | 1 s | |
| Tower 4 | NRG | 4280 | 023-022-036 | SDR 6.0.26 | 1 s | |
| Tower 5 | NRG | 4280 | 023-022-039 | SDR 6.0.26 | 1 s | |
| Tower 6 | NRG | 4280 | 023-022-039 | SDR 6.0.26 | 1 s | |
| Tower 7 | NRG | 4280 | 023-022-039 | SDR 6.0.26 | 1 s | |

| Month | Station 1, 10 m | Station 1, 50 m | Station 1, 70 m | Station 2, 10 m | Station 2, 50 m | Station 2, 70 m | Station 3, 10 m | Station 3, 50 m | Station 3, 70 m | Station 4, 10 m | Station 4, 50 m | Station 4, 70 m | Station 5, 10 m | Station 5, 50 m | Station 5, 70 m | Station 6, 10 m | Station 6, 50 m | Station 6, 70 m | Station 7, 10 m | Station 7, 50 m | Station 7, 70 m |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Jan. | 0 | 0 | 0 | 0 | 0 | 0 | 1728 | 1728 | 1728 | 4271 | 4271 | 4271 | 4464 | 4464 | 4464 | 4464 | 4464 | 4464 | 4464 | 4464 | 4464 |
| Feb. | 0 | 0 | 0 | 0 | 0 | 0 | 4044 | 4044 | 4044 | 4176 | 4176 | 4176 | 4176 | 4176 | 4176 | 4176 | 4176 | 4176 | 4176 | 4176 | 4176 |
| Mar. | 432 | 432 | 432 | 1296 | 1296 | 1296 | 4464 | 4464 | 4464 | 4464 | 4464 | 4464 | 4464 | 4464 | 4464 | 4284 | 4284 | 4284 | 4464 | 4464 | 4464 |
| Apr. | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4176 | 4176 | 4176 | 4320 | 4320 | 4320 |
| May | 4464 | 4464 | 4464 | 4320 | 4320 | 4320 | 4464 | 4464 | 4464 | 4464 | 4464 | 4464 | 4464 | 4464 | 4464 | 4272 | 4272 | 4272 | 4464 | 4464 | 4464 |
| Jun. | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 |
| Jul. | 4464 | 4464 | 4464 | 4320 | 4320 | 4320 | 4464 | 4464 | 4464 | 4464 | 4464 | 4464 | 4464 | 4464 | 4464 | 2736 | 2736 | 2736 | 4464 | 4464 | 4464 |
| Aug. | 4176 | 4176 | 4176 | 4464 | 4464 | 4464 | 4464 | 4464 | 4464 | 4464 | 4464 | 4464 | 4464 | 4464 | 4464 | 0 | 0 | 0 | 4320 | 4320 | 4320 |
| Sept. | 3888 | 3888 | 3888 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 4176 | 4176 | 4176 | 0 | 0 | 0 | 3888 | 3888 | 3888 |
| Oct. | 4176 | 4176 | 4176 | 4284 | 4284 | 4284 | 4464 | 4464 | 4464 | 4464 | 4464 | 4464 | 4320 | 4320 | 4320 | 0 | 0 | 0 | 3888 | 3888 | 3888 |
| Nov. | 4176 | 4176 | 4176 | 4032 | 4032 | 4032 | 4320 | 4320 | 4320 | 4320 | 4320 | 4320 | 3888 | 3888 | 3888 | 0 | 0 | 0 | 4176 | 4176 | 4176 |
| Dec. | 0 | 0 | 0 | 4176 | 4176 | 4176 | 1362 | 1362 | 1362 | 4368 | 4368 | 4368 | 3924 | 3924 | 3924 | 0 | 0 | 0 | 4464 | 4464 | 4464 |

| Month | Tower 1, 10 m | Tower 1, 50 m | Tower 1, 70 m | Tower 2, 10 m | Tower 2, 50 m | Tower 2, 70 m | Tower 3, 10 m | Tower 3, 50 m | Tower 3, 70 m | Tower 4, 10 m | Tower 4, 50 m | Tower 4, 70 m | Tower 5, 10 m | Tower 5, 50 m | Tower 5, 70 m | Tower 6, 10 m | Tower 6, 50 m | Tower 6, 70 m | Tower 7, 10 m | Tower 7, 50 m | Tower 7, 70 m |
|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Jan. | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 1728 | 0 | 4270 | 4210 | 4271 | 4271 | 63 | 0 | 4158 | 4158 | 4158 | 4167 | 4244 | 4249 |
| Feb. | 0 | 0 | 0 | 0 | 0 | 0 | 0 | 4044 | 0 | 4176 | 4176 | 4176 | 4176 | 0 | 0 | 4158 | 4158 | 4158 | 4099 | 4155 | 4155 |
| Mar. | 432 | 432 | 432 | 1290 | 1290 | 1290 | 1059 | 4062 | 1076 | 4220 | 4220 | 4220 | 4464 | 0 | 0 | 4284 | 4284 | 4281 | 4454 | 4454 | 4454 |
| Apr. | 4320 | 4320 | 2314 | 4239 | 4248 | 4247 | 4320 | 4320 | 4320 | 3943 | 4175 | 4176 | 4320 | 0 | 0 | 4176 | 4175 | 2695 | 4302 | 4302 | 3969 |
| May | 4411 | 4411 | 4411 | 4320 | 4319 | 4320 | 4464 | 1068 | 4464 | 4464 | 4330 | 4464 | 4464 | 0 | 0 | 4271 | 4272 | 0 | 4464 | 4464 | 1909 |
| Jun. | 4313 | 4312 | 4313 | 4320 | 4320 | 4320 | 4320 | 1238 | 4296 | 4320 | 4117 | 4320 | 4320 | 0 | 0 | 4320 | 4320 | 0 | 4320 | 4320 | 0 |
| Jul. | 4450 | 4451 | 4451 | 4319 | 4320 | 4320 | 4464 | 816 | 4464 | 2692 | 2661 | 2736 | 4464 | 0 | 0 | 790 | 790 | 0 | 4433 | 4463 | 0 |
| Aug. | 4122 | 4122 | 4122 | 4461 | 4464 | 4463 | 4463 | 740 | 4463 | 0 | 0 | 0 | 4463 | 0 | 0 | 0 | 0 | 0 | 4319 | 4319 | 0 |
| Sept. | 3842 | 3843 | 3843 | 4320 | 4320 | 4319 | 4320 | 940 | 4320 | 0 | 0 | 0 | 4175 | 0 | 0 | 0 | 0 | 0 | 3813 | 3814 | 0 |
| Oct. | 4019 | 4037 | 4037 | 4283 | 4284 | 4283 | 4464 | 526 | 4464 | 0 | 0 | 0 | 4320 | 0 | 0 | 0 | 0 | 0 | 3852 | 3852 | 0 |
| Nov. | 4096 | 4138 | 4138 | 4032 | 4032 | 4032 | 4320 | 430 | 4320 | 0 | 0 | 0 | 3888 | 0 | 0 | 0 | 0 | 0 | 4176 | 4176 | 0 |
| Dec. | 0 | 0 | 0 | 4080 | 4080 | 4080 | 1362 | 260 | 1362 | 0 | 0 | 0 | 3924 | 0 | 0 | 0 | 0 | 0 | 4368 | 4368 | 0 |
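
Full-month counts in the tables above, such as 4464, 4320, and 4176, equal 31, 30, and 29 days × 144 records per day, which is consistent with 10-minute records. That interval is an inference from the numbers rather than something stated here, and the year below is a hypothetical leap year chosen only so that February has 29 days. Under those assumptions, a data-availability ratio could be sketched as:

```python
import calendar

# Assumption: one record every 10 minutes (inferred from counts such as
# 4464 = 31 * 144); the tables themselves do not state the interval.
RECORD_INTERVAL_MIN = 10

# Assumption: a hypothetical leap year, used only so February has 29 days.
YEAR = 2012


def expected_records(year, month, interval_min=RECORD_INTERVAL_MIN):
    """Number of records in a month with no gaps at the given interval."""
    days = calendar.monthrange(year, month)[1]
    return days * 24 * 60 // interval_min


def availability(valid_count, year, month):
    """Fraction of the gap-free monthly record count that is present."""
    return valid_count / expected_records(year, month)


print(expected_records(YEAR, 1))              # 31 days of 10-minute records
print(expected_records(YEAR, 2))              # 29 days of 10-minute records
print(round(availability(4270, YEAR, 1), 4))  # share present for a count of 4270
```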

| Domain | 01 | 02 | 03 |
|---|---|---|---|
| Grid number | 80 × 80 | 88 × 88 | 88 × 88 |
| Grid resolution | 27 km | 9 km | 3 km |
| Vertical levels | 51 | 51 | 51 |
| Microphysics | Morrison | Morrison | Morrison |
| Longwave radiation | RRTMG | RRTMG | RRTMG |
| Shortwave radiation | RRTMG | RRTMG | RRTMG |
| Land-surface | Noah | Noah | Noah |
| Cumulus convection | Kain–Fritsch | Kain–Fritsch | Not set |
| PBL | YSU | YSU | YSU |

| Platform | Satellite ID | Sensor | Observation Variables |
|---|---|---|---|
| EOS | 2 | AIRS | Infrared Radiance |
| EOS | 2 | AMSUA | Microwave Radiance |
| METOP | 1 | AMSUA | Microwave Radiance |
| METOP | 1 | MHS | Microwave Radiance |
| METOP | 2 | AMSUA | Microwave Radiance |
| METOP | 2 | MHS | Microwave Radiance |
| NOAA | 15 | AMSUA | Microwave Radiance |
| NOAA | 15 | HIRS | Infrared Radiance |
| NOAA | 16 | AMSUA | Microwave Radiance |
| NOAA | 16 | HIRS | Infrared Radiance |
| NOAA | 17 | HIRS | Infrared Radiance |
| NOAA | 18 | AMSUA | Microwave Radiance |
| NOAA | 18 | HIRS | Infrared Radiance |
| NOAA | 18 | MHS | Microwave Radiance |
| NOAA | 19 | AMSUA | Microwave Radiance |
| NOAA | 19 | MHS | Microwave Radiance |

| Sensor | Resolution |
|---|---|
| AMSUA | ~50 km |
| MHS | ~17 km |
| AIRS | ~13.5 km |
| HIRS | ~10 km |

| Tower | Level | K (Shape), Obs | K (Shape), Test 1 | K (Shape), Test 2 | K (Shape), Test 3 | Lambda (Scale), Obs | Lambda (Scale), Test 1 | Lambda (Scale), Test 2 | Lambda (Scale), Test 3 |
|---|---|---|---|---|---|---|---|---|---|
| Tower 1 | 10 m | 1.99 | 2.45 | 2.20 | 2.35 | 5.39 | 4.61 | 4.62 | 4.62 |
| Tower 1 | 50 m | 2.20 | 2.56 | 2.27 | 2.45 | 6.47 | 6.63 | 6.02 | 6.38 |
| Tower 1 | 70 m | 2.25 | 2.50 | 2.24 | 2.39 | 6.71 | 7.15 | 6.41 | 6.89 |
| Tower 2 | 10 m | 1.53 | 2.31 | 2.04 | 2.24 | 5.10 | 4.54 | 4.18 | 4.44 |
| Tower 2 | 50 m | 1.75 | 2.51 | 2.12 | 2.43 | 5.95 | 6.41 | 5.64 | 6.18 |
| Tower 2 | 70 m | 1.77 | 2.52 | 2.12 | 2.42 | 6.37 | 6.97 | 6.05 | 6.76 |
| Tower 3 | 10 m | 2.02 | 2.36 | 2.08 | 2.27 | 6.74 | 4.83 | 4.62 | 4.76 |
| Tower 3 | 50 m | 1.51 | 2.76 | 2.31 | 2.62 | 8.27 | 7.91 | 7.40 | 7.75 |
| Tower 3 | 70 m | 1.84 | 2.50 | 2.13 | 2.16 | 6.83 | 7.10 | 6.16 | 6.94 |
| Tower 4 | 10 m | 1.81 | 3.06 | 2.03 | 2.71 | 5.66 | 4.72 | 4.89 | 4.78 |
| Tower 4 | 50 m | 1.79 | 3.18 | 2.08 | 2.88 | 6.10 | 6.92 | 6.10 | 6.68 |
| Tower 4 | 70 m | 2.23 | 3.16 | 2.08 | 2.81 | 6.38 | 7.50 | 6.40 | 7.19 |
| Tower 5 | 10 m | 2.41 | 2.48 | 2.08 | 2.28 | 6.86 | 4.50 | 4.40 | 4.44 |
| Tower 5 | 50 m | | | | | | | | |
| Tower 5 | 70 m | | | | | | | | |
| Tower 6 | 10 m | 2.43 | 2.64 | 2.17 | 2.48 | 7.40 | 5.51 | 5.23 | 5.41 |
| Tower 6 | 50 m | 2.45 | 2.71 | 2.16 | 2.49 | 7.44 | 7.21 | 6.63 | 6.99 |
| Tower 6 | 70 m | 2.40 | 2.66 | 2.05 | 2.45 | 7.56 | 7.92 | 7.28 | 7.70 |
| Tower 7 | 10 m | 1.27 | 2.44 | 2.16 | 2.34 | 4.11 | 4.53 | 4.34 | 4.47 |
| Tower 7 | 50 m | 1.54 | 2.65 | 2.23 | 2.51 | 6.48 | 6.46 | 5.90 | 6.25 |
| Tower 7 | 70 m | 1.75 | 3.31 | 2.36 | 3.02 | 7.69 | 7.40 | 6.68 | 7.18 |
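
Weibull shape (k) and scale (λ) parameters like those tabulated above can be estimated from a wind-speed series in several ways. The sketch below uses the empirical standard-deviation method, k ≈ (σ/μ)^(−1.086) and λ = μ / Γ(1 + 1/k), which is a common approximation and not necessarily the fitting method the authors applied. The function name and the synthetic sample are illustrative assumptions.

```python
import math
import random
import statistics


def fit_weibull_empirical(speeds):
    """Estimate Weibull shape k and scale lambda from the sample mean and
    standard deviation (empirical approximation, not a maximum-likelihood fit)."""
    mean = statistics.fmean(speeds)
    std = statistics.stdev(speeds)
    k = (std / mean) ** -1.086
    lam = mean / math.gamma(1.0 + 1.0 / k)
    return k, lam


# Synthetic wind speeds drawn from a known Weibull distribution
# (scale 6.0, shape 2.0), used only to check that the fit recovers them.
rng = random.Random(0)
sample = [rng.weibullvariate(6.0, 2.0) for _ in range(20000)]

k_hat, lam_hat = fit_weibull_empirical(sample)
print(f"shape k = {k_hat:.2f}, scale lambda = {lam_hat:.2f}")
```

Applying the same function to the observed series and to each simulated series would give directly comparable k and λ values.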

© 2020 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Xu, W.; Ning, L.; Luo, Y. Applying Satellite Data Assimilation to Wind Simulation of Coastal Wind Farms in Guangdong, China. Remote Sens. 2020, 12, 973. https://doi.org/10.3390/rs12060973
