Deep Learning Based Thin Cloud Removal Fusing Vegetation Red Edge and Short Wave Infrared Spectral Information for Sentinel-2A Imagery

Abstract

1. Introduction

1.1. Motivation

1.2. Related Works

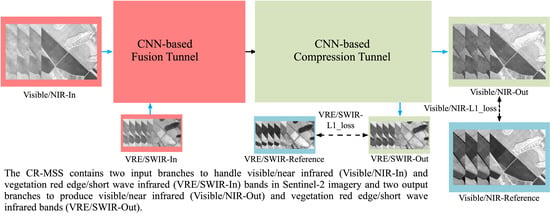

- We propose an end-to-end network architecture for cloud and cloud shadow removal, tailored to Sentinel-2A images, that fuses the visible, NIR, VRE, and SWIR bands. The spectral features in the VRE/SWIR bands are used to recover the cloud-contaminated background information in the Vis/NIR bands. Convolutional layers replace a manually designed rescaling algorithm so that spectral information in the low-resolution VRE/SWIR bands is better preserved and extracted.

- The experimental data come from different regions of the world, cover a rich variety of land cover types, and span a long acquisition period (2015 to 2019) and all seasons. Experiments on both real and simulated testing datasets analyze the performance of the proposed CR-MSS from different aspects.

- Three DL-based methods and two traditional methods are compared with CR-MSS. The performance of CR-MSS with and without VRE and SWIR bands as input and output is analyzed. The results show that CR-MSS is efficient and robust for thin cloud and cloud shadow removal, and that it performs better when the VRE and SWIR bands are taken into consideration.

2. Materials and Methods

2.1. Sentinel-2A Multispectral Data

2.2. Selection of Training and Testing Data

2.3. Method

- The convolution layer contains multiple convolution kernels and is used to extract features from the input data. Each element of a convolution kernel corresponds to a weight coefficient, and each kernel has an associated bias. Each neuron in the convolution layer is connected to multiple neurons in a neighboring region of the previous layer, and the size of that region depends on the size of the convolution kernel.

- The deconvolution layer is used to up-sample the input data. It interpolates between the elements of the input matrix and then applies the same connections and operations as a normal convolutional layer, except that it works in the opposite direction, from the smaller feature map to the larger one.
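
The two layer types described above can be illustrated with a minimal NumPy sketch. This is not the CR-MSS implementation: the function names `conv2d` and `deconv2d`, the single-channel input, the stride of 2, and the use of zero insertion as the "interpolation" step are assumptions chosen for illustration, following the common formulation of a transposed convolution.

```python
import numpy as np

def conv2d(x, kernel, bias=0.0):
    """Valid 2-D convolution of one channel (cross-correlation form,
    as commonly used in deep learning frameworks)."""
    kh, kw = kernel.shape
    out_h = x.shape[0] - kh + 1
    out_w = x.shape[1] - kw + 1
    out = np.empty((out_h, out_w), dtype=float)
    for i in range(out_h):
        for j in range(out_w):
            # Each output neuron is connected only to a kh x kw region
            # of the previous layer; the kernel supplies the weights.
            out[i, j] = np.sum(x[i:i + kh, j:j + kw] * kernel) + bias
    return out

def deconv2d(x, kernel, stride=2, bias=0.0):
    """Transposed convolution (assumed formulation): insert zeros between
    input elements, pad the border, then run an ordinary convolution."""
    h, w = x.shape
    kh, kw = kernel.shape
    # Zero insertion: (stride - 1) zeros between neighbouring elements.
    dilated = np.zeros(((h - 1) * stride + 1, (w - 1) * stride + 1))
    dilated[::stride, ::stride] = x
    # Full padding so that every input element reaches kh x kw outputs.
    padded = np.pad(dilated, ((kh - 1, kh - 1), (kw - 1, kw - 1)))
    # Flipping the kernel makes this the transpose of the forward pass.
    return conv2d(padded, kernel[::-1, ::-1], bias)

# Hypothetical usage: a 4 x 4 low-resolution patch is up-sampled.
low_res = np.ones((4, 4))
up = deconv2d(low_res, np.ones((3, 3)), stride=2)
print(up.shape)  # (9, 9), i.e. (4 - 1) * 2 + 3 along each axis
```

In a trained network the kernel weights are learned, so the up-sampling adapts to the data instead of following a fixed interpolation rule.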

2.4. Data Pre-Processing and Experiment Setting

3. Results

3.1. Comparison of Different Methods

3.2. Influence of the Temporal Shift between Images

3.3. Influence of VRE/SWIR Bands

3.4. Spectral Preservation on Simulated Data

4. Discussion

5. Conclusions

Author Contributions

Funding

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| Band No. | Band Name | Central Wavelength (μm) | Bandwidth (nm) | Spatial Resolution (m) |
|---|---|---|---|---|
| Band 1 | Coastal aerosol | 0.443 | 27 | 60 |
| Band 2 | Blue | 0.490 | 98 | 10 |
| Band 3 | Green | 0.560 | 45 | 10 |
| Band 4 | Red | 0.665 | 38 | 10 |
| Band 5 | Vegetation Red Edge | 0.705 | 19 | 20 |
| Band 6 | Vegetation Red Edge | 0.740 | 18 | 20 |
| Band 7 | Vegetation Red Edge | 0.783 | 28 | 20 |
| Band 8 | NIR | 0.842 | 145 | 10 |
| Band 8A | Vegetation Red Edge | 0.865 | 33 | 20 |
| Band 9 | Water Vapor | 0.945 | 26 | 60 |
| Band 10 | SWIR-Cirrus | 1.375 | 75 | 60 |
| Band 11 | SWIR | 1.610 | 143 | 20 |
| Band 12 | SWIR | 2.190 | 242 | 20 |

| Set | Pair | Condition | Product ID | Country | Land Cover | Date |
|---|---|---|---|---|---|---|
| Training | 1 | Cloud-free | S2A_MSIL1C_20160403T030602_N0201_R075_T50TMK_20160403T031209 | China | Urban | 2016.04.03 |
| Training | 1 | Cloudy | S2A_MSIL1C_20160413T031632_N0201_R075_T50TMK_20160413T031626 | China | Urban | 2016.04.13 |
| Training | 2 | Cloud-free | S2A_MSIL1C_20181111T053041_N0207_R105_T43RGM_20181111T083104 | India | Urban | 2018.11.11 |
| Training | 2 | Cloudy | S2A_MSIL1C_20181121T053121_N0207_R105_T43RGM_20181121T091419 | India | Urban | 2018.11.21 |
| Training | 3 | Cloud-free | S2A_MSIL1C_20160925T104022_N0204_R008_T32ULB_20160925T104115 | Germany | Urban | 2016.09.25 |
| Training | 3 | Cloudy | S2A_MSIL1C_20160915T104022_N0204_R008_T32ULB_20160915T104018 | Germany | Urban | 2016.09.15 |
| Training | 4 | Cloud-free | S2A_MSIL1C_20160528T153912_N0202_R011_T18TWL_20160528T154746 | United States | Urban | 2016.05.28 |
| Training | 4 | Cloudy | S2A_MSIL1C_20160518T155142_N0202_R011_T18TWL_20160518T155138 | United States | Urban | 2016.05.18 |
| Training | 5 | Cloud-free | S2A_MSIL1C_20181208T170701_N0207_R069_T14QMG_20181208T202913 | Mexico | Urban | 2018.12.08 |
| Training | 5 | Cloudy | S2A_MSIL1C_20181218T170711_N0207_R069_T14QMG_20181218T203015 | Mexico | Urban | 2018.12.18 |
| Training | 6 | Cloud-free | S2A_MSIL1C_20180809T190911_N0206_R056_T10UFB_20180810T002400 | Canada | Vegetation | 2018.08.10 |
| Training | 6 | Cloudy | S2A_MSIL1C_20180819T190911_N0206_R056_T10UFB_20180820T002955 | Canada | Vegetation | 2018.08.20 |
| Training | 7 | Cloud-free | S2A_MSIL1C_20190306T132231_N0207_R038_T22KHV_20190306T164115 | Brazil | Vegetation | 2019.03.06 |
| Training | 7 | Cloudy | S2A_MSIL1C_20190224T132231_N0207_R038_T22KHV_20190224T164104 | Brazil | Vegetation | 2019.02.24 |
| Training | 8 | Cloud-free | S2A_MSIL1C_20181012T084901_N0206_R107_T37VCC_20181012T110218 | Russia | Urban | 2018.10.12 |
| Training | 8 | Cloudy | S2A_MSIL1C_20181022T085011_N0206_R107_T37VCC_20181022T110901 | Russia | Urban | 2018.10.22 |
| Training | 9 | Cloud-free | S2A_MSIL1C_20190619T023251_N0207_R103_T50JKP_20190619T071925 | Australia | Vegetation | 2019.06.19 |
| Training | 9 | Cloudy | S2A_MSIL1C_20190629T023251_N0207_R103_T50JKP_20190629T053618 | Australia | Vegetation | 2019.06.29 |
| Training | 10 | Cloud-free | S2A_MSIL1C_20190901T032541_N0208_R018_T47NPF_20190901T070148 | Malaysia | Urban | 2019.09.01 |
| Training | 10 | Cloudy | S2A_MSIL1C_20190911T032541_N0208_R018_T47NPF_20190911T084555 | Malaysia | Urban | 2019.09.11 |
| Training | 11 | Cloud-free | S2A_MSIL1C_20160419T083012_N0201_R021_T36RUU_20160419T083954 | Egypt | Bare land | 2016.04.19 |
| Training | 11 | Cloudy | S2A_MSIL1C_20160409T083012_N0201_R021_T36RUU_20160409T084024 | Egypt | Bare land | 2016.04.09 |
| Training | 12 | Cloud-free | S2A_MSIL1C_20190218T143751_N0207_R096_T19HCC_20190218T175945 | Chile | Vegetation | 2019.02.18 |
| Training | 12 | Cloudy | S2A_MSIL1C_20190208T143751_N0207_R096_T19HCC_20190208T180253 | Chile | Vegetation | 2019.02.08 |
| Training | 13 | Cloud-free | S2A_MSIL1C_20180609T061631_N0206_R034_T42TWL_20180609T081837 | Uzbekistan | Bare land | 2018.06.09 |
| Training | 13 | Cloudy | S2A_MSIL1C_20180530T061631_N0206_R034_T42TWL_20180530T082050 | Uzbekistan | Bare land | 2018.05.30 |
| Training | 14 | Cloud-free | S2A_MSIL1C_20191111T025941_N0208_R032_T49QGF_20191111T055938 | China | Urban | 2019.11.11 |
| Training | 14 | Cloudy | S2A_MSIL1C_20191101T025841_N0208_R032_T49QGF_20191101T054434 | China | Urban | 2019.11.01 |
| Training | 15 | Cloud-free | S2A_MSIL1C_20190818T103031_N0208_R108_T31SEA_20190818T124651 | Algeria | Vegetation | 2019.08.18 |
| Training | 15 | Cloudy | S2A_MSIL1C_20190808T103031_N0208_R108_T31SEA_20190808T124427 | Algeria | Vegetation | 2019.08.08 |
| Training | 16 | Cloud-free | S2A_MSIL1C_20191202T105421_N0208_R051_T29PPP_20191202T112025 | Mali | Bare land | 2019.12.02 |
| Training | 16 | Cloudy | S2A_MSIL1C_20191212T105441_N0208_R051_T29PPP_20191212T111831 | Mali | Bare land | 2019.12.12 |
| Training | 17 | Cloud-free | S2A_MSIL1C_20190919T074611_N0208_R135_T35JPL_20190919T105208 | South Africa | Bare land | 2019.09.19 |
| Training | 17 | Cloudy | S2A_MSIL1C_20190929T074711_N0208_R135_T35JPL_20190929T100745 | South Africa | Bare land | 2019.09.29 |
| Training | 18 | Cloud-free | S2A_MSIL1C_20190725T142801_N0208_R053_T20LMR_20190725T175149 | Brazil | Vegetation | 2019.07.25 |
| Training | 18 | Cloudy | S2A_MSIL1C_20190804T142801_N0208_R053_T20LMR_20190804T175038 | Brazil | Vegetation | 2019.08.04 |
| Training | 19 | Cloud-free | S2A_MSIL1C_20191101T043931_N0208_R033_T46TDK_20191101T074915 | China | Bare land | 2019.11.01 |
| Training | 19 | Cloudy | S2A_MSIL1C_20191022T043831_N0208_R033_T46TDK_20191022T063301 | China | Bare land | 2019.10.22 |
| Training | 20 | Cloud-free | S2A_MSIL1C_20160509T065022_N0202_R020_T41UNV_20160509T065018 | Kazakhstan | Bare land | 2016.05.09 |
| Training | 20 | Cloudy | S2A_MSIL1C_20160519T064632_N0202_R020_T41UNV_20160519T064833 | Kazakhstan | Bare land | 2016.05.19 |
| Training | 21 | Cloud-free | S2A_MSIL1C_20191019T012631_N0208_R131_T53LKF_20191019T030531 | Australia | Vegetation | 2019.10.19 |
| Training | 21 | Cloudy | S2A_MSIL1C_20191029T012721_N0208_R131_T53LKF_20191029T040003 | Australia | Vegetation | 2019.10.29 |
| Training | 22 | Cloud-free | S2A_MSIL1C_20190503T071621_N0207_R006_T38PMB_20190503T092340 | Yemen | Bare land | 2019.05.03 |
| Training | 22 | Cloudy | S2A_MSIL1C_20190423T071621_N0207_R006_T38PMB_20190423T093049 | Yemen | Bare land | 2019.04.23 |
| Training | 23 | Cloud-free | S2A_MSIL1C_20190724T011701_N0208_R031_T56VLM_20190724T031136 | Russia | Vegetation | 2019.07.24 |
| Training | 23 | Cloudy | S2A_MSIL1C_20190714T011701_N0208_R031_T56VLM_20190714T031656 | Russia | Vegetation | 2019.07.14 |
| Training | 24 | Cloud-free | S2A_MSIL1C_20181020T012651_N0206_R074_T54TXN_20181020T032526 | Japan | Urban | 2018.10.20 |
| Training | 24 | Cloudy | S2A_MSIL1C_20181010T012651_N0206_R074_T54TXN_20181010T055606 | Japan | Urban | 2018.10.10 |
| Training | 25 | Cloud-free | S2A_MSIL1C_20170224T162331_N0204_R040_T16REV_20170224T162512 | United States | Urban | 2017.02.24 |
| Training | 25 | Cloudy | S2A_MSIL1C_20170214T162351_N0204_R040_T16REV_20170214T163022 | United States | Urban | 2017.02.14 |
| Training | 26 | Cloud-free | S2A_MSIL1C_20190613T032541_N0207_R018_T49UFT_20190613T062257 | Russia | Vegetation | 2019.06.13 |
| Training | 26 | Cloudy | S2A_MSIL1C_20190623T032541_N0207_R018_T49UFT_20190623T061953 | Russia | Vegetation | 2019.06.23 |
| Training | 27 | Cloud-free | S2A_MSIL1C_20190208T011721_N0207_R088_T53KLP_20190208T024521 | Australia | Bare land | 2019.02.08 |
| Training | 27 | Cloudy | S2A_MSIL1C_20190129T011721_N0207_R088_T53KLP_20190129T024501 | Australia | Bare land | 2019.01.29 |
| Training | 28 | Cloud-free | S2A_MSIL1C_20190530T184921_N0207_R113_T12VVN_20190530T222535 | Canada | Vegetation | 2019.05.30 |
| Training | 28 | Cloudy | S2A_MSIL1C_20190520T184921_N0207_R113_T12VVN_20190520T222900 | Canada | Vegetation | 2019.05.20 |
| Testing | 1 | Cloud-free | S2A_MSIL1C_20150826T084006_N0204_R064_T37UCQ_20150826T084003 | Ukraine | Urban | 2015.08.26 |
| Testing | 1 | Cloudy | S2A_MSIL1C_20150905T083736_N0204_R064_T37UCQ_20150905T084002 | Ukraine | Urban | 2015.09.05 |
| Testing | 2 | Cloud-free | S2A_MSIL1C_20191101T000241_N0208_R030_T56HLH_20191101T012241 | Australia | Urban | 2019.11.10 |
| Testing | 2 | Cloudy | S2A_MSIL1C_20191111T000241_N0208_R030_T56HLH_20191111T012137 | Australia | Urban | 2019.11.11 |
| Testing | 3 | Cloud-free | S2A_MSIL1C_20190711T174911_N0208_R141_T13TEE_20190711T212846 | United States | Bare land | 2019.07.11 |
| Testing | 3 | Cloudy | S2A_MSIL1C_20190701T174911_N0207_R141_T13TEE_20190701T212910 | United States | Bare land | 2019.07.01 |
| Testing | 4 | Cloud-free | S2A_MSIL1C_20190201T093221_N0207_R136_T32PRR_20190201T113425 | Nigeria | Bare land | 2019.02.01 |
| Testing | 4 | Cloudy | S2A_MSIL1C_20190211T093121_N0207_R136_T32PRR_20190211T103706 | Nigeria | Bare land | 2019.02.11 |
| Testing | 5 | Cloud-free | S2A_MSIL1C_20190314T021601_N0207_R003_T52SCF_20190314T055026 | South Korea | Urban | 2019.03.14 |
| Testing | 5 | Cloudy | S2A_MSIL1C_20190304T021601_N0207_R003_T52SCF_20190304T042035 | South Korea | Urban | 2019.03.04 |
| Testing | 6 | Cloud-free | S2A_MSIL1C_20180804T045701_N0206_R119_T46VDH_20180804T065907 | Russia | Vegetation | 2018.08.04 |
| Testing | 6 | Cloudy | S2A_MSIL1C_20180725T045701_N0206_R119_T46VDH_20180725T065359 | Russia | Vegetation | 2018.07.25 |
| Testing | 7 | Cloud-free | S2A_MSIL1C_20190707T213531_N0207_R086_T05VPJ_20190707T231819 | United States | Vegetation | 2019.07.07 |
| Testing | 7 | Cloudy | S2A_MSIL1C_20190627T213531_N0207_R086_T05VPJ_20190628T010801 | United States | Vegetation | 2019.06.28 |
| Testing | 8 | Cloud-free | S2A_MSIL1C_20190605T125311_N0207_R052_T24MXV_20190605T160555 | Brazil | Vegetation | 2019.06.05 |
| Testing | 8 | Cloudy | S2A_MSIL1C_20190615T125311_N0207_R052_T24MXV_20190615T142536 | Brazil | Vegetation | 2019.06.15 |

| Image | Index | Method | B2 | B3 | B4 | B8 | B5 | B6 | B7 | B8A | B11 | B12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| T1 (Figure 4) | PSNR | CR-MSS | 14.19 | 16.99 | 19.38 | 23.17 | 22.57 | 22.94 | 24.48 | 25.39 | 23.68 | 24.15 |
| T1 (Figure 4) | PSNR | RSC-Net-10 | 16.32 | 18.60 | 19.76 | 23.63 | 20.96 | 24.33 | 24.57 | 24.47 | 24.50 | 24.90 |
| T1 (Figure 4) | PSNR | U-Net-10 | 14.21 | 16.68 | 19.68 | 22.34 | 21.61 | 21.96 | 23.99 | 24.95 | 23.62 | 23.23 |
| T1 (Figure 4) | PSNR | Cloud-GAN | 14.62 | 16.04 | 16.51 | 15.70 | / | / | / | / | / | / |
| T1 (Figure 4) | PSNR | AHF | 13.50 | 14.26 | 16.35 | 20.68 | / | / | / | / | / | / |
| T1 (Figure 4) | PSNR | MRSCP | 12.23 | 12.67 | 14.42 | 15.87 | / | / | / | / | / | / |
| T1 (Figure 4) | SSIM | CR-MSS | 0.67 | 0.74 | 0.81 | 0.86 | 0.86 | 0.90 | 0.91 | 0.92 | 0.88 | 0.89 |
| T1 (Figure 4) | SSIM | RSC-Net-10 | 0.72 | 0.76 | 0.82 | 0.87 | 0.85 | 0.90 | 0.92 | 0.92 | 0.90 | 0.91 |
| T1 (Figure 4) | SSIM | U-Net-10 | 0.65 | 0.70 | 0.79 | 0.84 | 0.83 | 0.89 | 0.91 | 0.91 | 0.85 | 0.87 |
| T1 (Figure 4) | SSIM | Cloud-GAN | 0.50 | 0.51 | 0.52 | 0.45 | / | / | / | / | / | / |
| T1 (Figure 4) | SSIM | AHF | 0.46 | 0.54 | 0.67 | 0.79 | / | / | / | / | / | / |
| T1 (Figure 4) | SSIM | MRSCP | 0.50 | 0.57 | 0.69 | 0.78 | / | / | / | / | / | / |
| T2 (Figure 5) | PSNR | CR-MSS | 16.57 | 14.46 | 17.39 | 25.35 | 17.59 | 27.27 | 25.41 | 24.22 | 23.08 | 22.91 |
| T2 (Figure 5) | PSNR | RSC-Net-10 | 12.21 | 10.01 | 12.75 | 19.04 | 9.93 | 16.56 | 18.53 | 19.88 | 16.81 | 15.95 |
| T2 (Figure 5) | PSNR | U-Net-10 | 17.78 | 14.97 | 18.34 | 25.25 | 17.11 | 25.12 | 26.57 | 27.74 | 19.93 | 23.19 |
| T2 (Figure 5) | PSNR | Cloud-GAN | 6.93 | 5.92 | 4.55 | 16.10 | / | / | / | / | / | / |
| T2 (Figure 5) | PSNR | AHF | 4.63 | 5.92 | 7.15 | 17.41 | / | / | / | / | / | / |
| T2 (Figure 5) | PSNR | MRSCP | 4.92 | 6.31 | 8.07 | 21.48 | / | / | / | / | / | / |
| T2 (Figure 5) | SSIM | CR-MSS | 0.58 | 0.54 | 0.63 | 0.78 | 0.80 | 0.87 | 0.87 | 0.88 | 0.87 | 0.86 |
| T2 (Figure 5) | SSIM | RSC-Net-10 | 0.45 | 0.41 | 0.52 | 0.72 | 0.64 | 0.82 | 0.83 | 0.84 | 0.83 | 0.80 |
| T2 (Figure 5) | SSIM | U-Net-10 | 0.60 | 0.54 | 0.63 | 0.74 | 0.77 | 0.86 | 0.87 | 0.88 | 0.84 | 0.85 |
| T2 (Figure 5) | SSIM | Cloud-GAN | 0.23 | 0.23 | 0.21 | 0.41 | / | / | / | / | / | / |
| T2 (Figure 5) | SSIM | AHF | 0.21 | 0.28 | 0.35 | 0.78 | / | / | / | / | / | / |
| T2 (Figure 5) | SSIM | MRSCP | 0.24 | 0.31 | 0.37 | 0.72 | / | / | / | / | / | / |
| T3 (Figure 6) | PSNR | CR-MSS | 18.69 | 17.98 | 21.28 | 19.49 | 20.67 | 20.85 | 19.19 | 20.01 | 21.59 | 19.00 |
| T3 (Figure 6) | PSNR | RSC-Net-10 | 16.39 | 15.06 | 16.87 | 18.59 | 15.12 | 19.65 | 18.11 | 18.91 | 20.02 | 19.49 |
| T3 (Figure 6) | PSNR | U-Net-10 | 21.49 | 19.04 | 19.86 | 15.10 | 19.41 | 15.95 | 14.26 | 16.35 | 18.18 | 19.00 |
| T3 (Figure 6) | PSNR | Cloud-GAN | 14.29 | 15.66 | 15.61 | 16.94 | / | / | / | / | / | / |
| T3 (Figure 6) | PSNR | AHF | 13.01 | 14.14 | 16.69 | 17.99 | / | / | / | / | / | / |
| T3 (Figure 6) | PSNR | MRSCP | 12.43 | 13.13 | 13.66 | 12.31 | / | / | / | / | / | / |
| T3 (Figure 6) | SSIM | CR-MSS | 0.71 | 0.73 | 0.74 | 0.76 | 0.77 | 0.78 | 0.76 | 0.75 | 0.81 | 0.80 |
| T3 (Figure 6) | SSIM | RSC-Net-10 | 0.60 | 0.61 | 0.63 | 0.77 | 0.67 | 0.77 | 0.77 | 0.79 | 0.85 | 0.82 |
| T3 (Figure 6) | SSIM | U-Net-10 | 0.70 | 0.70 | 0.69 | 0.72 | 0.73 | 0.76 | 0.74 | 0.75 | 0.77 | 0.78 |
| T3 (Figure 6) | SSIM | Cloud-GAN | 0.48 | 0.57 | 0.54 | 0.55 | / | / | / | / | / | / |
| T3 (Figure 6) | SSIM | AHF | 0.69 | 0.73 | 0.79 | 0.80 | / | / | / | / | / | / |
| T3 (Figure 6) | SSIM | MRSCP | 0.62 | 0.67 | 0.72 | 0.72 | / | / | / | / | / | / |
| T4 (Figure 7) | PSNR | CR-MSS | 19.36 | 19.73 | 20.64 | 21.94 | 22.10 | 23.61 | 23.37 | 21.91 | 20.84 | 24.17 |
| T4 (Figure 7) | PSNR | RSC-Net-10 | 18.43 | 19.39 | 19.91 | 18.90 | 20.91 | 21.32 | 20.68 | 19.91 | 22.14 | 22.90 |
| T4 (Figure 7) | PSNR | U-Net-10 | 18.19 | 18.83 | 19.61 | 20.37 | 20.77 | 22.47 | 22.09 | 20.98 | 22.21 | 23.88 |
| T4 (Figure 7) | PSNR | Cloud-GAN | 16.24 | 15.85 | 16.53 | 18.76 | / | / | / | / | / | / |
| T4 (Figure 7) | PSNR | AHF | 9.55 | 9.63 | 10.15 | 9.71 | / | / | / | / | / | / |
| T4 (Figure 7) | PSNR | MRSCP | 14.13 | 14.73 | 15.44 | 16.52 | / | / | / | / | / | / |
| T4 (Figure 7) | SSIM | CR-MSS | 0.62 | 0.62 | 0.67 | 0.66 | 0.71 | 0.74 | 0.75 | 0.79 | 0.84 | 0.85 |
| T4 (Figure 7) | SSIM | RSC-Net-10 | 0.56 | 0.55 | 0.59 | 0.56 | 0.61 | 0.61 | 0.61 | 0.67 | 0.73 | 0.77 |
| T4 (Figure 7) | SSIM | U-Net-10 | 0.53 | 0.54 | 0.57 | 0.60 | 0.62 | 0.64 | 0.66 | 0.71 | 0.77 | 0.79 |
| T4 (Figure 7) | SSIM | Cloud-GAN | 0.40 | 0.41 | 0.42 | 0.57 | / | / | / | / | / | / |
| T4 (Figure 7) | SSIM | AHF | 0.31 | 0.39 | 0.52 | 0.58 | / | / | / | / | / | / |
| T4 (Figure 7) | SSIM | MRSCP | 0.18 | 0.21 | 0.30 | 0.40 | / | / | / | / | / | / |
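
The per-band PSNR values in the table above follow from comparing a restored image with its cloud-free reference band by band. The sketch below uses the standard definition, PSNR = 10 log10(R^2 / MSE), and is an illustration under assumptions rather than the evaluation code behind the reported numbers: the function name `psnr_per_band`, the (bands, height, width) array layout, and the value range `data_range=1.0` (reflectance scaled to [0, 1]) are hypothetical choices.

```python
import numpy as np

def psnr_per_band(reference, restored, data_range=1.0):
    """Return the PSNR in dB for each band of two image stacks.

    Both inputs are assumed to have shape (bands, height, width) and to
    share the same value range, given by data_range (an assumption).
    """
    reference = np.asarray(reference, dtype=float)
    restored = np.asarray(restored, dtype=float)
    # Mean squared error over the spatial axes, one value per band.
    mse = np.mean((reference - restored) ** 2, axis=(1, 2))
    # A band that matches the reference exactly yields an infinite PSNR.
    with np.errstate(divide="ignore"):
        return 10.0 * np.log10(data_range ** 2 / mse)

# Hypothetical usage with synthetic data: two bands with constant errors.
reference = np.zeros((2, 4, 4))
restored = reference.copy()
restored[0] += 0.1   # larger error, lower PSNR
restored[1] += 0.01  # smaller error, higher PSNR
print(psnr_per_band(reference, restored))
```

Computing the metric separately for each band is what allows the 10 m bands (B2, B3, B4, B8) and the 20 m bands (B5 to B12) to be compared column by column.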

| Image | Index | Method | B2 | B3 | B4 | B8 | B5 | B6 | B7 | B8A | B11 | B12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| T5 (Figure 8) | PSNR | CR-MSS | 19.85 | 18.39 | 21.17 | 18.63 | 20.25 | 21.14 | 20.29 | 17.67 | 14.98 | 15.82 |
| | | RSC-Net-10 | 14.42 | 13.5 | 16.28 | 18.73 | 15.68 | 17.98 | 18.74 | 19.32 | 17.41 | 19.36 |
| | | U-Net-10 | 20.02 | 19.6 | 20.12 | 15.94 | 20.09 | 17.00 | 16.21 | 14.36 | 12.88 | 14.10 |
| | | Cloud-GAN | 7.55 | 6.71 | 6.87 | 10.74 | / | / | / | / | / | / |
| | | AHF | 10.05 | 11.18 | 14.03 | 15.55 | / | / | / | / | / | / |
| | | MRSCP | 4.51 | 5.33 | 7.25 | 12.76 | / | / | / | / | / | / |
| | SSIM | CR-MSS | 0.78 | 0.77 | 0.77 | 0.77 | 0.84 | 0.86 | 0.87 | 0.86 | 0.81 | 0.81 |
| | | RSC-Net-10 | 0.68 | 0.64 | 0.71 | 0.71 | 0.76 | 0.78 | 0.8 | 0.81 | 0.8 | 0.8 |
| | | U-Net-10 | 0.74 | 0.74 | 0.70 | 0.73 | 0.79 | 0.79 | 0.8 | 0.79 | 0.73 | 0.74 |
| | | Cloud-GAN | 0.32 | 0.30 | 0.33 | 0.38 | / | / | / | / | / | / |
| | | AHF | 0.45 | 0.54 | 0.70 | 0.75 | / | / | / | / | / | / |
| | | MRSCP | 0.17 | 0.21 | 0.34 | 0.59 | / | / | / | / | / | / |
| T6 (Figure 8) | PSNR | CR-MSS | 25.13 | 22.12 | 27.54 | 23.63 | 23.02 | 23.56 | 24.57 | 24.78 | 30.29 | 30.73 |
| | | RSC-Net-10 | 22.92 | 22.46 | 25.18 | 19.97 | 22.24 | 20.34 | 20.74 | 22.95 | 23.23 | 23.88 |
| | | U-Net-10 | 25.04 | 19.78 | 26.58 | 20.66 | 19.52 | 18.81 | 20.6 | 22.3 | 24.33 | 29.25 |
| | | Cloud-GAN | 16.63 | 16.53 | 19.29 | 14.80 | / | / | / | / | / | / |
| | | AHF | 8.87 | 14.86 | 14.71 | 12.49 | / | / | / | / | / | / |
| | | MRSCP | 8.44 | 13.89 | 13.16 | 15.71 | / | / | / | / | / | / |
| | SSIM | CR-MSS | 0.65 | 0.71 | 0.80 | 0.90 | 0.87 | 0.91 | 0.91 | 0.92 | 0.96 | 0.95 |
| | | RSC-Net-10 | 0.66 | 0.7 | 0.80 | 0.89 | 0.87 | 0.89 | 0.9 | 0.91 | 0.94 | 0.93 |
| | | U-Net-10 | 0.65 | 0.68 | 0.78 | 0.87 | 0.83 | 0.87 | 0.89 | 0.91 | 0.92 | 0.92 |
| | | Cloud-GAN | 0.41 | 0.46 | 0.54 | 0.50 | / | / | / | / | / | / |
| | | AHF | 0.28 | 0.60 | 0.56 | 0.67 | / | / | / | / | / | / |
| | | MRSCP | 0.40 | 0.58 | 0.59 | 0.83 | / | / | / | / | / | / |
| T7 (Figure 8) | PSNR | CR-MSS | 16.73 | 18.39 | 16.78 | 21.57 | 20.83 | 23.62 | 23.54 | 23.22 | 22.65 | 21.84 |
| | | RSC-Net-10 | 14.11 | 13.82 | 12.69 | 22.28 | 16.3 | 23.16 | 23.22 | 23.17 | 20.96 | 18.61 |
| | | U-Net-10 | 15.01 | 15.06 | 15.36 | 23.01 | 18.56 | 22.2 | 22.84 | 24.41 | 21.93 | 21.61 |
| | | Cloud-GAN | 8.10 | 8.77 | 7.57 | 15.95 | / | / | / | / | / | / |
| | | AHF | 9.15 | 10.88 | 10.94 | 15.06 | / | / | / | / | / | / |
| | | MRSCP | 3.36 | 4.35 | 5.30 | 13.16 | / | / | / | / | / | / |
| | SSIM | CR-MSS | 0.61 | 0.67 | 0.62 | 0.83 | 0.77 | 0.89 | 0.90 | 0.90 | 0.85 | 0.80 |
| | | RSC-Net-10 | 0.52 | 0.58 | 0.52 | 0.78 | 0.74 | 0.87 | 0.89 | 0.89 | 0.77 | 0.71 |
| | | U-Net-10 | 0.53 | 0.56 | 0.56 | 0.8 | 0.70 | 0.89 | 0.90 | 0.90 | 0.78 | 0.75 |
| | | Cloud-GAN | 0.31 | 0.39 | 0.33 | 0.57 | / | / | / | / | / | / |
| | | AHF | 0.33 | 0.51 | 0.48 | 0.75 | / | / | / | / | / | / |
| | | MRSCP | 0.20 | 0.25 | 0.26 | 0.69 | / | / | / | / | / | / |
| T8 (Figure 8) | PSNR | CR-MSS | 22.64 | 18.66 | 21.02 | 20.84 | 19.16 | 21.32 | 21.35 | 21.55 | 19.12 | 22.83 |
| | | RSC-Net-10 | 19.06 | 19.69 | 17.82 | 20.79 | 19.74 | 19.45 | 19.56 | 19.36 | 19.78 | 20.34 |
| | | U-Net-10 | 18.66 | 18.69 | 20.14 | 19.73 | 18.17 | 20.20 | 20.48 | 20.29 | 17.85 | 18.96 |
| | | Cloud-GAN | 11.26 | 13.82 | 13.34 | 11.33 | / | / | / | / | / | / |
| | | AHF | 21.63 | 13.30 | 17.91 | 21.05 | / | / | / | / | / | / |
| | | MRSCP | 18.63 | 16.08 | 18.27 | 12.90 | / | / | / | / | / | / |
| | SSIM | CR-MSS | 0.62 | 0.57 | 0.66 | 0.68 | 0.59 | 0.63 | 0.65 | 0.65 | 0.55 | 0.67 |
| | | RSC-Net-10 | 0.56 | 0.60 | 0.69 | 0.67 | 0.60 | 0.62 | 0.63 | 0.63 | 0.55 | 0.67 |
| | | U-Net-10 | 0.59 | 0.56 | 0.65 | 0.68 | 0.58 | 0.62 | 0.64 | 0.64 | 0.56 | 0.64 |
| | | Cloud-GAN | 0.34 | 0.33 | 0.42 | 0.31 | / | / | / | / | / | / |
| | | AHF | 0.52 | 0.38 | 0.59 | 0.79 | / | / | / | / | / | / |
| | | MRSCP | 0.52 | 0.43 | 0.61 | 0.40 | / | / | / | / | / | / |

| Index | Method | B2 | B3 | B4 | B8 | B5 | B6 | B7 | B8A | B11 | B12 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| PSNR | CR-MSS | 19.53 | 18.49 | 20.29 | 21.09 | 19.30 | 20.70 | 21.03 | 21.26 | 21.62 | 21.98 |
| | RSC-Net-10 | 18.11 | 17.97 | 19.92 | 20.68 | 20.09 | 20.90 | 21.03 | 21.32 | 21.34 | 21.41 |
| | U-Net-10 | 18.68 | 18.09 | 19.87 | 20.68 | 19.39 | 20.68 | 20.97 | 21.11 | 20.5 | 20.79 |
| | Cloud-GAN | 15.63 | 15.23 | 16.22 | 15.90 | / | / | / | / | / | / |
| | AHF | 14.72 | 14.62 | 16.90 | 19.22 | / | / | / | / | / | / |
| | MRSCP | 15.13 | 14.94 | 16.18 | 13.15 | / | / | / | / | / | / |
| SSIM | CR-MSS | 0.69 | 0.71 | 0.77 | 0.81 | 0.80 | 0.82 | 0.83 | 0.84 | 0.85 | 0.84 |
| | RSC-Net-10 | 0.66 | 0.70 | 0.76 | 0.80 | 0.79 | 0.82 | 0.83 | 0.83 | 0.83 | 0.84 |
| | U-Net-10 | 0.67 | 0.69 | 0.74 | 0.79 | 0.79 | 0.82 | 0.83 | 0.83 | 0.83 | 0.82 |
| | Cloud-GAN | 0.47 | 0.49 | 0.53 | 0.51 | / | / | / | / | / | / |
| | AHF | 0.49 | 0.54 | 0.67 | 0.77 | / | / | / | / | / | / |
| | MRSCP | 0.47 | 0.51 | 0.62 | 0.55 | / | / | / | / | / | / |

| Method | CR-MSS | RSC-Net-10 | U-Net-10 | Cloud-GAN | AHF | MSRCP |
|---|---|---|---|---|---|---|
| Time | 1 min 29 s | 0 min 47 s | 1 min 19 s | 1 min 8 s | 5 min 16 s | 37 min 24 s |

| Landcover | Index | Method | B2 | B3 | B4 | B8 | B5 | B6 | B7 | B8A | B11 | B12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Urban (1st, 2nd, 5th) | PSNR | CR-MSS | 17.23 | 16.93 | 19.08 | 21.02 | 20.74 | 19.02 | 20.84 | 20.90 | 21.20 | 21.65 |
| | | RSC-Net-10 | 15.89 | 16.31 | 18.31 | 20.43 | 17.35 | 19.96 | 20.55 | 20.92 | 20.84 | 20.98 |
| | | U-Net-10 | 16.28 | 16.71 | 18.56 | 20.26 | 18.49 | 20.32 | 20.60 | 20.54 | 20.33 | 20.36 |
| | | Cloud-GAN | 13.58 | 13.64 | 14.37 | 16.60 | / | / | / | / | / | / |
| | | AHF | 13.06 | 13.78 | 15.68 | 18.01 | / | / | / | / | / | / |
| | | MRSCP | 13.00 | 13.83 | 14.30 | 14.55 | / | / | / | / | / | / |
| | SSIM | CR-MSS | 0.67 | 0.69 | 0.76 | 0.84 | 0.80 | 0.81 | 0.83 | 0.84 | 0.86 | 0.86 |
| | | RSC-Net-10 | 0.67 | 0.65 | 0.75 | 0.80 | 0.78 | 0.82 | 0.83 | 0.84 | 0.85 | 0.85 |
| | | U-Net-10 | 0.67 | 0.64 | 0.74 | 0.78 | 0.79 | 0.81 | 0.82 | 0.83 | 0.84 | 0.84 |
| | | Cloud-GAN | 0.45 | 0.48 | 0.52 | 0.50 | / | / | / | / | / | / |
| | | AHF | 0.50 | 0.56 | 0.66 | 0.74 | / | / | / | / | / | / |
| | | MRSCP | 0.46 | 0.53 | 0.63 | 0.64 | / | / | / | / | / | / |
| Vegetation (6th, 7th, 8th) | PSNR | CR-MSS | 21.40 | 19.30 | 21.50 | 21.96 | 22.08 | 19.59 | 21.55 | 22.42 | 22.77 | 23.64 |
| | | RSC-Net-10 | 19.08 | 19.10 | 21.09 | 22.78 | 21.78 | 23.12 | 23.25 | 23.84 | 22.74 | 22.40 |
| | | U-Net-10 | 18.83 | 19.88 | 20.70 | 21.92 | 19.83 | 21.86 | 22.27 | 22.78 | 21.60 | 21.97 |
| | | Cloud-GAN | 16.77 | 15.78 | 17.03 | 16.43 | / | / | / | / | / | / |
| | | AHF | 16.83 | 15.48 | 18.09 | 21.75 | / | / | / | / | / | / |
| | | MRSCP | 17.00 | 15.17 | 17.84 | 11.95 | / | / | / | / | / | / |
| | SSIM | CR-MSS | 0.68 | 0.70 | 0.76 | 0.86 | 0.83 | 0.81 | 0.85 | 0.87 | 0.87 | 0.86 |
| | | RSC-Net-10 | 0.70 | 0.64 | 0.76 | 0.83 | 0.81 | 0.85 | 0.86 | 0.87 | 0.87 | 0.86 |
| | | U-Net-10 | 0.69 | 0.67 | 0.74 | 0.82 | 0.80 | 0.85 | 0.86 | 0.87 | 0.84 | 0.83 |
| | | Cloud-GAN | 0.45 | 0.46 | 0.52 | 0.50 | / | / | / | / | / | / |
| | | AHF | 0.49 | 0.54 | 0.67 | 0.82 | / | / | / | / | / | / |
| | | MRSCP | 0.49 | 0.51 | 0.63 | 0.47 | / | / | / | / | / | / |
| Bare land (3rd, 4th) | PSNR | CR-MSS | 18.50 | 18.45 | 19.10 | 20.07 | 20.08 | 19.32 | 20.01 | 20.35 | 21.10 | 20.49 |
| | | RSC-Net-10 | 18.43 | 17.85 | 19.13 | 17.55 | 19.56 | 18.62 | 18.19 | 17.78 | 20.00 | 20.52 |
| | | U-Net-10 | 18.65 | 18.09 | 19.03 | 19.48 | 19.54 | 20.15 | 19.86 | 19.76 | 19.51 | 19.90 |
| | | Cloud-GAN | 14.37 | 14.94 | 15.32 | 14.71 | / | / | / | / | / | / |
| | | AHF | 12.73 | 13.60 | 14.28 | 15.45 | / | / | / | / | / | / |
| | | MRSCP | 14.75 | 15.42 | 16.06 | 14.31 | / | / | / | / | / | / |
| | SSIM | CR-MSS | 0.71 | 0.73 | 0.75 | 0.75 | 0.74 | 0.76 | 0.75 | 0.76 | 0.80 | 0.78 |
| | | RSC-Net-10 | 0.70 | 0.68 | 0.72 | 0.71 | 0.75 | 0.73 | 0.73 | 0.74 | 0.79 | 0.78 |
| | | U-Net-10 | 0.71 | 0.68 | 0.71 | 0.72 | 0.75 | 0.74 | 0.74 | 0.75 | 0.78 | 0.77 |
| | | Cloud-GAN | 0.52 | 0.54 | 0.56 | 0.52 | / | / | / | / | / | / |
| | | AHF | 0.48 | 0.53 | 0.60 | 0.67 | / | / | / | / | / | / |
| | | MRSCP | 0.50 | 0.54 | 0.60 | 0.59 | / | / | / | / | / | / |

| Image | Index | Method | B2 | B3 | B4 | B8 | B5 | B6 | B7 | B8A | B11 | B12 |
|---|---|---|---|---|---|---|---|---|---|---|---|---|
| Figure 9 | PSNR | CR-MSS | 17.40 | 17.07 | 17.95 | 20.74 | 21.21 | 17.45 | 19.73 | 21.98 | 19.30 | 18.00 |
| | | CR-MSS-10-4 | 15.12 | 13.91 | 16.06 | 19.57 | / | / | / | / | / | / |
| | | CR-MSS-4-4 | 14.11 | 13.72 | 14.80 | 20.16 | / | / | / | / | / | / |
| | SSIM | CR-MSS | 0.67 | 0.69 | 0.75 | 0.88 | 0.81 | 0.83 | 0.86 | 0.89 | 0.87 | 0.84 |
| | | CR-MSS-10-4 | 0.64 | 0.64 | 0.74 | 0.82 | / | / | / | / | / | / |
| | | CR-MSS-4-4 | 0.62 | 0.63 | 0.71 | 0.82 | / | / | / | / | / | / |
| Figure 10 | PSNR | CR-MSS | 21.28 | 19.24 | 21.65 | 22.87 | 22.62 | 19.41 | 22.15 | 23.12 | 26.39 | 25.03 |
| | | CR-MSS-10-4 | 19.83 | 18.71 | 20.18 | 22.46 | / | / | / | / | / | / |
| | | CR-MSS-4-4 | 19.58 | 18.38 | 20.11 | 20.91 | / | / | / | / | / | / |
| | SSIM | CR-MSS | 0.70 | 0.69 | 0.81 | 0.88 | 0.88 | 0.79 | 0.87 | 0.88 | 0.92 | 0.92 |
| | | CR-MSS-10-4 | 0.69 | 0.69 | 0.81 | 0.88 | / | / | / | / | / | / |
| | | CR-MSS-4-4 | 0.69 | 0.69 | 0.79 | 0.87 | / | / | / | / | / | / |
| Figure 11 | PSNR | CR-MSS | 23.56 | 21.75 | 24.84 | 24.24 | 23.54 | 22.89 | 23.15 | 24.72 | 23.08 | 20.41 |
| | | CR-MSS-10-4 | 26.20 | 23.51 | 24.91 | 23.08 | / | / | / | / | / | / |
| | | CR-MSS-4-4 | 27.71 | 25.19 | 22.63 | 23.15 | / | / | / | / | / | / |
| | SSIM | CR-MSS | 0.73 | 0.72 | 0.76 | 0.91 | 0.83 | 0.85 | 0.90 | 0.91 | 0.91 | 0.88 |
| | | CR-MSS-10-4 | 0.75 | 0.73 | 0.78 | 0.81 | / | / | / | / | / | / |
| | | CR-MSS-4-4 | 0.77 | 0.73 | 0.75 | 0.82 | / | / | / | / | / | / |
| Figure 12 | PSNR | CR-MSS | 19.83 | 21.41 | 21.91 | 22.59 | 22.09 | 22.56 | 22.44 | 22.60 | 18.39 | 21.72 |
| | | CR-MSS-10-4 | 21.68 | 21.45 | 20.65 | 22.07 | / | / | / | / | / | / |
| | | CR-MSS-4-4 | 18.52 | 21.31 | 21.63 | 21.11 | / | / | / | / | / | / |
| | SSIM | CR-MSS | 0.65 | 0.67 | 0.78 | 0.78 | 0.75 | 0.73 | 0.77 | 0.78 | 0.79 | 0.84 |
| | | CR-MSS-10-4 | 0.70 | 0.68 | 0.77 | 0.77 | / | / | / | / | / | / |
| | | CR-MSS-4-4 | 0.65 | 0.70 | 0.78 | 0.78 | / | / | / | / | / | / |

| Index | Method | B2 | B3 | B4 | B8 | B5 | B6 | B7 | B8A | B11 | B12 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| PSNR | CR-MSS | 19.85 | 19.83 | 22.18 | 23.50 | 23.29 | 22.22 | 23.93 | 23.56 | 23.36 | 24.77 |
| | CR-MSS-10-4 | 18.87 | 18.58 | 20.42 | 22.87 | / | / | / | / | / | / |
| | CR-MSS-4-4 | 18.89 | 18.93 | 20.23 | 23.23 | / | / | / | / | / | / |
| SSIM | CR-MSS | 0.73 | 0.74 | 0.77 | 0.85 | 0.80 | 0.84 | 0.85 | 0.86 | 0.88 | 0.86 |
| | CR-MSS-10-4 | 0.73 | 0.75 | 0.77 | 0.78 | / | / | / | / | / | / |
| | CR-MSS-4-4 | 0.70 | 0.71 | 0.73 | 0.78 | / | / | / | / | / | / |

| Index | Method | B2 | B3 | B4 | B8 | B5 | B6 | B7 | B8A | B11 | B12 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| PSNR | CR-MSS | 19.53 | 18.49 | 20.29 | 21.09 | 19.30 | 20.70 | 21.03 | 21.26 | 21.62 | 21.98 |
| | CR-MSS-10-4 | 19.50 | 18.47 | 20.34 | 20.86 | / | / | / | / | / | / |
| | CR-MSS-4-4 | 19.43 | 18.30 | 20.03 | 20.56 | / | / | / | / | / | / |
| SSIM | CR-MSS | 0.69 | 0.71 | 0.77 | 0.81 | 0.80 | 0.82 | 0.83 | 0.84 | 0.85 | 0.84 |
| | CR-MSS-10-4 | 0.69 | 0.71 | 0.77 | 0.80 | / | / | / | / | / | / |
| | CR-MSS-4-4 | 0.69 | 0.71 | 0.76 | 0.79 | / | / | / | / | / | / |

| Index | Method | B2 | B3 | B4 | B8 | B5 | B6 | B7 | B8A | B11 | B12 |
|---|---|---|---|---|---|---|---|---|---|---|---|
| NRMSE | CR-MSS | 0.5697 | 0.2830 | 0.2382 | 0.0190 | 0.0767 | 0.0298 | 0.0249 | 0.0161 | 0.0123 | 0.0221 |
| | CR-MSS-10-4 | 0.5873 | 0.2840 | 0.2182 | 0.0194 | / | / | / | / | / | / |
| | CR-MSS-4-4 | 0.4762 | 0.2335 | 0.2408 | 0.0208 | / | / | / | / | / | / |
| | RSC-Net-10 | 0.6244 | 0.3216 | 0.3114 | 0.0177 | 0.1008 | 0.0325 | 0.0239 | 0.0101 | 0.0163 | 0.0337 |
| | U-Net-10 | 0.4279 | 0.2621 | 0.2210 | 0.0249 | 0.0938 | 0.0352 | 0.0316 | 0.0220 | 0.0289 | 0.0594 |
| | Cloud-GAN | 1.3156 | 0.5831 | 1.1778 | 0.0785 | / | / | / | / | / | / |
| | AHF | 0.7123 | 0.2666 | 0.3874 | 0.0952 | / | / | / | / | / | / |
| | MSRCP | 1.1203 | 0.4694 | 0.8262 | 0.0615 | / | / | / | / | / | / |
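
The tables above report PSNR separately for each Sentinel-2A band. As a rough illustration of how such a per-band score can be computed, the following minimal sketch uses the standard definition PSNR = 10 log10(MAX² / MSE). The function name `psnr_per_band`, the dictionary layout of the inputs, and the peak value `max_value=1.0` are assumptions made for this example; they are not taken from the paper, whose exact evaluation settings are not restated here.

```python
import math


def psnr_per_band(reference, restored, max_value=1.0):
    """Return a dict mapping each band name to its PSNR in dB.

    reference, restored: dicts mapping band name -> flat list of pixel values
    (a hypothetical layout chosen for this sketch).
    max_value: assumed peak signal value, e.g. 1.0 for data scaled to [0, 1].
    """
    scores = {}
    for band, ref_pixels in reference.items():
        out_pixels = restored[band]
        if len(ref_pixels) != len(out_pixels):
            raise ValueError(f"pixel count mismatch in band {band}")
        # Mean squared error between the cloud-free reference and the output.
        mse = sum((r - o) ** 2 for r, o in zip(ref_pixels, out_pixels)) / len(ref_pixels)
        # Identical bands give zero error, i.e. an unbounded PSNR.
        scores[band] = math.inf if mse == 0 else 10.0 * math.log10(max_value ** 2 / mse)
    return scores


# Toy data: B2 is off by 0.1 everywhere, B11 is reproduced exactly.
reference = {"B2": [0.10, 0.20, 0.30, 0.40], "B11": [0.50, 0.50, 0.50, 0.50]}
restored = {"B2": [0.20, 0.30, 0.40, 0.50], "B11": [0.50, 0.50, 0.50, 0.50]}
scores = psnr_per_band(reference, restored)
print(scores)
```

Computing the score band by band, rather than once over the stacked image, is what allows a comparison such as the ones tabulated here, where methods limited to the Vis/NIR bands have no entries for the VRE and SWIR columns.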

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Li, J.; Wu, Z.; Hu, Z.; Li, Z.; Wang, Y.; Molinier, M. Deep Learning Based Thin Cloud Removal Fusing Vegetation Red Edge and Short Wave Infrared Spectral Information for Sentinel-2A Imagery. Remote Sens. 2021, 13, 157. https://doi.org/10.3390/rs13010157
