Deep Learning-Based Radar Composite Reflectivity Factor Estimations from Fengyun-4A Geostationary Satellite Observations

Abstract

1. Introduction

2. Data

2.1. Interest Fields from Fengyun-4A AGRI

2.2. Weather Radar Data

2.3. GPM IMERG Data

3. Methodology

3.1. Training and Validation Data

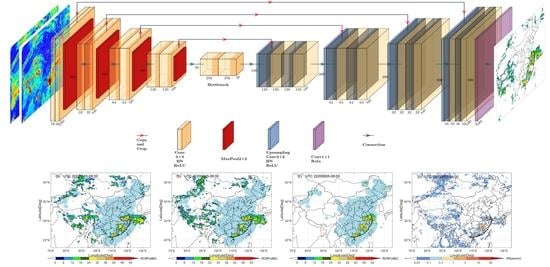

3.2. Network Architecture

3.3. Model Training

4. Validation and Discussions

4.1. Case Studies

4.2. Statistical Results

4.3. Validation Based on Precipitation Observations

5. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest


| No | Interest Fields | Physical Basis | Model Index |
|---|---|---|---|
| 1 | BT 10.8 μm | Cloud-top temperature assessment | Model I |
| 2 | BTD 10.8–6.2 μm | Cloud-top height relative to tropopause | |
| 3 | BTD 12.3+8.6–2×10.8 μm | Cloud-top glaciation/phase | |
| 4 | Modified albedo 0.65 μm | Cloud optical thickness | Model II |
| 5 | Albedo ratio 0.65/1.61 μm | Cloud-top glaciation/phase | |
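
The interest fields listed in the table above are simple band combinations. The following is a minimal sketch of how fields 1, 2, 3, and 5 could be computed for a single pixel, assuming brightness temperatures (in kelvin) and reflectances keyed by band centre wavelength. The function name, the dictionary layout, and the output keys are hypothetical and are not taken from the paper. The modified albedo in row 4 is left out because its modification is not defined in this section.

```python
import math
from typing import Dict


def interest_fields(bt: Dict[str, float], albedo: Dict[str, float]) -> Dict[str, float]:
    """Compute interest fields 1, 2, 3 and 5 of the table for one pixel.

    bt:     brightness temperatures in kelvin, keyed by band centre in micrometres
            (hypothetical layout, e.g. {"10.8": 255.0, ...}).
    albedo: reflectances keyed the same way (hypothetical layout).
    """
    # Guard against division by zero in the albedo ratio (e.g. night-time pixels).
    denom = albedo["1.61"]
    ratio = albedo["0.65"] / denom if denom > 0 else math.nan

    return {
        # Field 1: cloud-top temperature.
        "bt_10.8": bt["10.8"],
        # Field 2: 10.8 minus 6.2 micrometre difference (cloud-top height relative to tropopause).
        "btd_10.8_6.2": bt["10.8"] - bt["6.2"],
        # Field 3: tri-spectral difference 12.3 + 8.6 - 2 x 10.8 (cloud-top glaciation/phase).
        "btd_trispectral": bt["12.3"] + bt["8.6"] - 2.0 * bt["10.8"],
        # Field 5: 0.65 / 1.61 micrometre albedo ratio (cloud-top glaciation/phase).
        "albedo_ratio_0.65_1.61": ratio,
    }


if __name__ == "__main__":
    # Illustrative values only, not observations from the paper.
    sample_bt = {"6.2": 230.0, "8.6": 250.0, "10.8": 255.0, "12.3": 252.0}
    sample_albedo = {"0.65": 0.5, "1.61": 0.25}
    for name, value in interest_fields(sample_bt, sample_albedo).items():
        print(f"{name}: {value}")
```

Applied to full images, the same arithmetic would run per pixel (or on whole arrays) before the fields are stacked as network input channels.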

| Application Month | Model I Training | Model I Validation | Model II Training | Model II Validation |
|---|---|---|---|---|
| May | 4284 | 918 | 1927 | 412 |
| Jun. | 4183 | 896 | 1882 | 403 |
| Jul. | 4257 | 912 | 1916 | 410 |
| Aug. | 4273 | 915 | 1923 | 412 |
| Sept. | 4194 | 898 | 1887 | 404 |
| Oct. | 4217 | 903 | 1898 | 406 |

| Application Month | Model I MAE (mm/h) | Model I RMSE (mm/h) | Model II MAE (mm/h) | Model II RMSE (mm/h) |
|---|---|---|---|---|
| May | 0.259 | 0.543 | 0.251 | 0.552 |
| Jun. | 0.329 | 0.759 | 0.247 | 0.506 |
| Jul. | 0.385 | 0.776 | 0.274 | 0.524 |
| Aug. | 0.452 | 0.991 | 0.339 | 0.701 |
| Sept. | 0.394 | 0.813 | 0.265 | 0.517 |
| Oct. | 0.279 | 0.594 | 0.297 | 0.637 |
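
The MAE and RMSE columns in the table above follow the standard definitions of mean absolute error and root-mean-square error. The sketch below shows those definitions for paired estimated and observed values in the same unit (mm/h in the table). The function names and the sample numbers are illustrative assumptions, not the authors' evaluation code or data.

```python
import math
from typing import Sequence


def mae(estimated: Sequence[float], observed: Sequence[float]) -> float:
    """Mean absolute error: average of |estimate - observation|."""
    if len(estimated) != len(observed) or not observed:
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(e - o) for e, o in zip(estimated, observed)) / len(observed)


def rmse(estimated: Sequence[float], observed: Sequence[float]) -> float:
    """Root-mean-square error: square root of the mean squared difference."""
    if len(estimated) != len(observed) or not observed:
        raise ValueError("inputs must be non-empty and of equal length")
    mean_sq = sum((e - o) ** 2 for e, o in zip(estimated, observed)) / len(observed)
    return math.sqrt(mean_sq)


if __name__ == "__main__":
    # Made-up values for illustration only.
    est = [0.0, 1.0, 2.0, 5.0]
    obs = [0.0, 2.0, 2.0, 1.0]
    print(f"MAE  = {mae(est, obs):.3f}")
    print(f"RMSE = {rmse(est, obs):.3f}")
```

Because RMSE squares each difference before averaging, it is never smaller than MAE and is more sensitive to a few large errors, which is consistent with the RMSE values in the table exceeding the MAE values in every month.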

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Sun, F.; Li, B.; Min, M.; Qin, D. Deep Learning-Based Radar Composite Reflectivity Factor Estimations from Fengyun-4A Geostationary Satellite Observations. Remote Sens. 2021, 13, 2229. https://doi.org/10.3390/rs13112229