HORUS: Multispectral and Multiangle CubeSat Mission Targeting Sub-Kilometer Remote Sensing Applications

Abstract

1. Introduction

2. Scientific Background

Acquisition Methodology

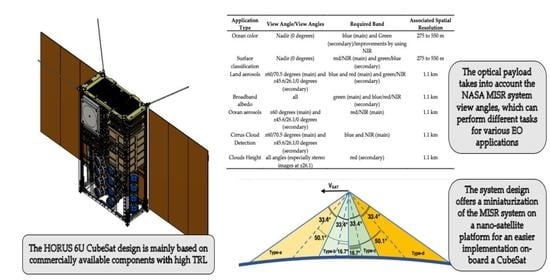

3. HORUS Multiangle and Multispectral Observation Module

3.1. Spatial Resolution

3.2. Signal-to-Noise Ratio

4. Satellite Architecture and System Budgets

4.1. Mass Budget

4.2. Power Budget

4.3. Link Budget

4.4. Data Budget

4.5. Propulsion System Operations and Propellant Budget

5. Implementation, Technical Challenges and Extensions to Multiplatform Missions

6. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Application Type | View Angle(s) | Required Band | Associated Spatial Resolution |
|---|---|---|---|
| Ocean color | Nadir (0 degrees) | Blue (main) and green (secondary)/improvements by using NIR | 275 to 550 m |
| Surface classification | Nadir (0 degrees) | Red/NIR (main) and green/blue (secondary) | 275 to 550 m |
| Land aerosols | ±60/70.5 degrees (main) and ±45.6/26.1/0 degrees (secondary) | Blue and red (main) and green/NIR (secondary) | 1.1 km |
| Broadband albedo | All | Green (main) and blue/red/NIR (secondary) | 1.1 km |
| Ocean aerosols | ±60 degrees (main) and ±45.6/26.1/0 degrees (secondary) | Red/NIR (main) | 1.1 km |
| Cirrus cloud detection | ±60/70.5 degrees (main) and ±45.6/26.1/0 degrees (secondary) | Blue and NIR (main) | 1.1 km |
| Cloud height | All angles (especially stereo images at ±26.1 degrees) | Red (secondary) | 1.1 km |

| Orbital Height | 300 km | 400 km | 500 km | 600 km | 700 km |
|---|---|---|---|---|---|
| | 64.2° | 62.5° | 60.9° | 59.5° | 58.1° |
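The row label of the table above did not survive extraction. As an inference from the numbers only (not a statement from the source), the five angles match the spacecraft off-nadir angle needed to obtain a 70.5 degree view angle at the ground for each orbital height. The minimal sketch below checks this with the law of sines on a spherical Earth; the 6371 km radius and the function name `off_nadir_angle_deg` are assumptions introduced for illustration.

```python
import math

EARTH_RADIUS_KM = 6371.0  # assumed mean spherical Earth radius


def off_nadir_angle_deg(view_angle_deg, altitude_km, earth_radius_km=EARTH_RADIUS_KM):
    """Off-nadir angle at the satellite for a given view (zenith) angle at the ground.

    Law of sines in the Earth-centre / satellite / target triangle:
    sin(off_nadir) = R / (R + h) * sin(view_angle).
    """
    ratio = earth_radius_km / (earth_radius_km + altitude_km)
    return math.degrees(math.asin(ratio * math.sin(math.radians(view_angle_deg))))


if __name__ == "__main__":
    for altitude_km in (300, 400, 500, 600, 700):
        angle = off_nadir_angle_deg(70.5, altitude_km)
        print(f"{altitude_km} km: {angle:.1f} deg")
```

Under these assumptions the computed angles agree with the tabulated ones to within rounding, which supports (but does not prove) the interpretation given in the lead-in.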

| Parameter | Value |
|---|---|
| Pixel size | 5.5 µm (H) × 5.5 µm (V) |
| Resolution | 4 MP, 2048 × 2048 px |
| Maximum frame rate | 90 fps |
| Shutter type | Global |
| Power consumption (typical) | 3.2 W |

| Parameter | Value |
|---|---|
| Focal length | 18.7 mm |
| F# | 1.4 |
| Effective aperture diameter | 13.7 mm |
| HFOV (along-track) | 33.4 degrees |
| VFOV (cross-track) | 33.4 degrees |

| Spectral Band | Central Wavelength (nm) | Spectral Bandwidth (nm) |
|---|---|---|
| Blue | 443 | 30 |
| Green | 555 | 20 |
| Dark red | 670 | 20 |
| NIR | 865 | 60 |

| Type of Camera | HFOV (degrees) | VFOV (degrees) | Optical Axis Boresight Angle (degrees) | Filter's Central Wavelength (nm) |
|---|---|---|---|---|
| a | 33.4 | 33.4 | +50.1 | a1: 443 (blue); a2: 555 (green); a3: 670 (red); a4: 865 (NIR) |
| b | 33.4 | 33.4 | +16.7 | b1: 443 (blue); b2: 555 (green); b3: 670 (red); b4: 865 (NIR) |
| c | 33.4 | 33.4 | −16.7 | c1: 443 (blue); c2: 555 (green); c3: 670 (red); c4: 865 (NIR) |
| d | 33.4 | 33.4 | −50.1 | d1: 443 (blue); d2: 555 (green); d3: 670 (red); d4: 865 (NIR) |

| View Angle (degrees) | Type of Filter | Central Wavelength (nm) | GSD (m) | Diffraction-Limited Resolution (m) |
|---|---|---|---|---|
| 0 | Dark red | 670 | 147.1 | 62.1 |
| | Green | 555 | 147.1 | 50.7 |
| | Blue | 443 | 147.1 | 45.2 |
| | NIR | 865 | 147.1 | 79.0 |
| ±26.1 | Dark red | 670 | 180.8 | 76.4 |
| | Green | 555 | 180.8 | 62.3 |
| | Blue | 443 | 180.8 | 55.6 |
| | NIR | 865 | 180.8 | 97.1 |
| ±45.6 | Dark red | 670 | 289.8 | 122.4 |
| | Green | 555 | 289.8 | 99.9 |
| | Blue | 443 | 289.8 | 89.1 |
| | NIR | 865 | 289.8 | 155.7 |
| ±60 | Dark red | 670 | 534.7 | 226.0 |
| | Green | 555 | 534.7 | 184.4 |
| | Blue | 443 | 534.7 | 164.5 |
| | NIR | 865 | 534.7 | 287.4 |
| ±70.5 | Dark red | 670 | 1065.8 | 450.9 |
| | Green | 555 | 1065.8 | 368.0 |
| | Blue | 443 | 1065.8 | 328.2 |
| | NIR | 865 | 1065.8 | 573.6 |
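The nadir entries of the table above can be cross-checked against the sensor and optics parameters with two textbook relations. This is a minimal sketch under stated assumptions, not the authors' procedure: it takes the 500 km altitude from the data budget, uses GSD = h·p/f, assumes the Rayleigh-type form 2.44·λ·h/D for the diffraction-limited resolution, and assumes an aperture D = f/F#. The function names are hypothetical.

```python
PIXEL_PITCH_M = 5.5e-6    # pixel size from the sensor table
FOCAL_LENGTH_M = 18.7e-3  # focal length from the optics table
F_NUMBER = 1.4            # F# from the optics table
ALTITUDE_M = 500e3        # assumed: orbit altitude from the data budget table


def nadir_gsd_m(altitude_m=ALTITUDE_M, pitch_m=PIXEL_PITCH_M, focal_m=FOCAL_LENGTH_M):
    """Nadir ground sampling distance: pixel pitch projected to the ground."""
    return altitude_m * pitch_m / focal_m


def diffraction_limited_resolution_m(wavelength_m, altitude_m=ALTITUDE_M,
                                     focal_m=FOCAL_LENGTH_M, f_number=F_NUMBER):
    """Airy-disk diameter projected to the ground, 2.44 * lambda * h / D (assumed form)."""
    aperture_m = focal_m / f_number  # assumed aperture: D = f / F#
    return 2.44 * wavelength_m * altitude_m / aperture_m


if __name__ == "__main__":
    print(f"Nadir GSD: {nadir_gsd_m():.1f} m")
    for name, wavelength_nm in (("Green", 555), ("NIR", 865)):
        res = diffraction_limited_resolution_m(wavelength_nm * 1e-9)
        print(f"{name} ({wavelength_nm} nm): {res:.1f} m")
```

With these inputs the nadir GSD and the green and NIR diffraction-limited values agree with the table to within rounding. The check is deliberately limited to those entries; the off-nadir rows additionally depend on viewing geometry that is not modelled here.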

| Parameter | Value |
|---|---|
| Integration time | 17 ms (60 fps) |
| Quantum efficiency | 50% blue and NIR/60% red and green |
| Dark current | 125 e−/s |
| Read noise | 5 e− RMS |
| Full well capacity | ~106 e− |

| Spectral Band | 0° (Nadir) | 26.1° | 45.6° | 60° | 70.5° |
|---|---|---|---|---|---|
| Red | 203 | 191 | 169 | 141 | 116 |
| Green | 177 | 167 | 148 | 123 | 101 |
| Blue | 150 | 142 | 125 | 105 | 86 |
| NIR | 305 | 288 | 254 | 213 | 174 |

| Subsystem | Mass (kg) | Margin | Mass with Margin (kg) |
|---|---|---|---|
| Structures and mechanisms | 1.700 | 10% | 1.870 |
| OBDH | 0.200 | 5% | 0.210 |
| EPS | 2.300 | 10% | 2.530 |
| TT&C | 0.600 | 5% | 0.630 |
| ADCS | 1.300 | 10% | 1.430 |
| ODCS | 1.000 | 10% | 1.100 |
| Payload optical systems | 1.400 | 10% | 1.540 |
| Payload data handling systems | 0.200 | 10% | 0.220 |
| Harness | 0.400 | 20% | 0.480 |
| Total | 9.100 | | 10.010 |
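The totals in the mass budget follow from applying each subsystem margin to its basic mass and summing. The short sketch below reproduces that arithmetic from the tabulated values; the data structure and the function name `mass_totals` are illustrative choices, not part of the source.

```python
# (subsystem, basic mass in kg, fractional margin), copied from the mass budget table
MASS_BUDGET = [
    ("Structures and mechanisms", 1.700, 0.10),
    ("OBDH", 0.200, 0.05),
    ("EPS", 2.300, 0.10),
    ("TT&C", 0.600, 0.05),
    ("ADCS", 1.300, 0.10),
    ("ODCS", 1.000, 0.10),
    ("Payload optical systems", 1.400, 0.10),
    ("Payload data handling systems", 0.200, 0.10),
    ("Harness", 0.400, 0.20),
]


def mass_totals(budget):
    """Return (total basic mass, total mass with per-subsystem margins) in kg."""
    basic = sum(mass for _, mass, _ in budget)
    with_margin = sum(mass * (1.0 + margin) for _, mass, margin in budget)
    return basic, with_margin


if __name__ == "__main__":
    basic, with_margin = mass_totals(MASS_BUDGET)
    print(f"Basic mass: {basic:.3f} kg, with margin: {with_margin:.3f} kg")
```

Keeping the margin per subsystem, rather than applying one global factor, makes it straightforward to revise a single entry as the design matures.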

| Component | Peak Power (W) | Duty Cycle | Average Power (W) |
|---|---|---|---|
| Solar panels' generation | 75.4 | 0.44 | 33.20 |
| Main OBDH | −0.9 | 1 | −0.90 |
| TT&C on board UHF transmitter | −3.0 | 0.01 | −0.03 |
| TT&C on board UHF receiver | −0.4 | 1 | −0.40 |
| X-Band transmitter | −28.5 | 0.08 | −2.30 |
| ADCS + GPS | −4.0 | 1 | −4.00 |
| Thruster | −40.0 | 0.006 | −0.24 |
| HORUS camera system | −51.2 | 0.45 | −23.04 |
| Image acquisition system | −2.0 | 0.45 | −0.90 |
| Margin | | | 2.11 |

| Parameter | UHF (435 MHz): Value (Linear) | UHF (435 MHz): Value (dB) | X-Band (8.0 GHz): Value (Linear) | X-Band (8.0 GHz): Value (dB) |
|---|---|---|---|---|
| RF output (spacecraft) | 1 W | 0 dBW | 3 W | 4.77 dBW |
| Spacecraft line loss | | 0.6 dB | | 0.6 dB |
| Spacecraft antenna gain (and pointing losses) | | −0.5 dB | | 13 dB |
| Free space loss | 5 degrees elevation | 151.6 dB | 5 degrees elevation | 176.9 dB |
| Ionospheric/Atmospheric losses | | 2.5 dB | | 2.4 dB |
| Polarization losses | | 3 dB | | 1 dB |
| Ground station antenna pointing loss | | 0.7 dB | | 1.1 dB |
| Ground station antenna gain | | 14.1 dBi | | 48.3 dBi |
| Effective noise temperature | 510 K | 27.08 dBK | 245.36 K | 23.90 dBK |
| Ground station line losses | | 1 dB | | 1 dB |
| Data rate | 9600 bps | 39.8 dBHz | 300 Mbps | 80.0 dBHz |
| Eb/N0 | | 17.7 dB | | 6.77 dB |
| Eb/N0 threshold | GMSK, BER 10⁻⁵ | 10.6 dB | Band efficient 8PSK, concatenated Viterbi/Reed-Solomon, rate 1/2 | 4.2 dB |
| Link margin | | 5.2 dB | | 3.57 dB |
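The free space loss entries in the link budget can be reproduced from the slant range at 5 degrees elevation. The sketch below is a check under stated assumptions rather than the authors' calculation: it assumes a spherical Earth of radius 6371 km and the 500 km altitude listed in the data budget, and the function names are hypothetical.

```python
import math

SPEED_OF_LIGHT_M_S = 299_792_458.0
EARTH_RADIUS_KM = 6371.0  # assumed mean spherical Earth radius
ALTITUDE_KM = 500.0       # assumed: orbit altitude from the data budget table


def slant_range_km(elevation_deg, altitude_km=ALTITUDE_KM, earth_radius_km=EARTH_RADIUS_KM):
    """Ground-station-to-satellite distance for a given elevation angle."""
    elev = math.radians(elevation_deg)
    r_orbit = earth_radius_km + altitude_km
    return (math.sqrt(r_orbit**2 - (earth_radius_km * math.cos(elev))**2)
            - earth_radius_km * math.sin(elev))


def free_space_loss_db(frequency_hz, distance_km):
    """Free space path loss, 20 * log10(4 * pi * d * f / c)."""
    distance_m = distance_km * 1e3
    return 20.0 * math.log10(4.0 * math.pi * distance_m * frequency_hz / SPEED_OF_LIGHT_M_S)


if __name__ == "__main__":
    d_km = slant_range_km(5.0)
    print(f"Slant range at 5 deg elevation: {d_km:.0f} km")
    print(f"UHF (435 MHz): {free_space_loss_db(435e6, d_km):.1f} dB")
    print(f"X-Band (8.0 GHz): {free_space_loss_db(8.0e9, d_km):.1f} dB")
```

Under these assumptions both losses agree with the tabulated values to within 0.1 dB. The remaining rows of the link budget are not recomputed here.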

| Parameter | Value |
|---|---|
| Pixels per line | 2048 |
| Bits per pixel | 12 |
| Bits per pixel-line | 24,576 |
| Orbit altitude (km) | 500 |
| Orbital speed (km/s) | 7.616 |
| Ground speed (km/s) | 7.062 |
| Ground speed (pixel/s) | 48.04 |
| Required frame rate with 25% margin (fps) | 60 |
| Payload reference duty cycle | 0.45 |
| Average generated data bit rate (Mbps) | 0.664 |
| Total data amount per day (Gbits) | 57.331 |
| Data rate of the transmission system (Mbps) | 300 |
| Daily transmission time per observation angle, per spectral band (50% lossless data compression) | 1 min 36 s |
| Total data amount per day, including nine angles and four spectral bands (Gbits) | 2064 |
| Total daily transmission time, including nine angles and four spectral bands (50% lossless data compression) | 57 min 20 s |
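The data budget figures chain together in a few multiplications, sketched below from the tabulated inputs. One interpretation is assumed and flagged in the code: one 2048-pixel line is stored per frame for each angle and band, so the line rate equals the 60 fps frame rate. The variable and function names are illustrative.

```python
PIXELS_PER_LINE = 2048
BITS_PER_PIXEL = 12
LINE_RATE_HZ = 60          # assumed: one stored pixel line per frame at 60 fps
DUTY_CYCLE = 0.45
DOWNLINK_RATE_BPS = 300e6
COMPRESSION_RATIO = 0.5    # 50% lossless data compression
N_ANGLES = 9
N_BANDS = 4
SECONDS_PER_DAY = 86_400


def daily_data_bits():
    """Raw bits generated per day for one observation angle and one spectral band."""
    bits_per_line = PIXELS_PER_LINE * BITS_PER_PIXEL           # 24,576 bits
    average_rate_bps = bits_per_line * LINE_RATE_HZ * DUTY_CYCLE
    return average_rate_bps * SECONDS_PER_DAY


def transmission_time_s(data_bits):
    """Downlink time for the compressed data at the transmitter data rate."""
    return data_bits * COMPRESSION_RATIO / DOWNLINK_RATE_BPS


if __name__ == "__main__":
    single = daily_data_bits()
    total = single * N_ANGLES * N_BANDS
    for label, bits in (("Per angle and band", single), ("All angles and bands", total)):
        minutes, seconds = divmod(round(transmission_time_s(bits)), 60)
        print(f"{label}: {bits / 1e9:.3f} Gbit, {minutes} min {seconds} s")
```

The totals scale linearly with the number of angles and bands, so reducing either (for example by downlinking only the bands an application needs) shortens the required contact time proportionally.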

Publisher's Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Pellegrino, A.; Pancalli, M.G.; Gianfermo, A.; Marzioli, P.; Curianò, F.; Angeletti, F.; Piergentili, F.; Santoni, F. HORUS: Multispectral and Multiangle CubeSat Mission Targeting Sub-Kilometer Remote Sensing Applications. Remote Sens. 2021, 13, 2399. https://doi.org/10.3390/rs13122399