Wildland Fire Tree Mortality Mapping from Hyperspatial Imagery Using Machine Learning

Abstract

1. Introduction

Background

2. Materials and Methods: Mapping Canopy Cover and Tree Mortality

2.1. Classification of Tree Crowns from Hyperspatial Imagery

2.2. Calculating Canopy Cover

2.2.1. Calculating Five-Centimeter Class Densities for Each 30-Meter Pixel
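The heading describes summarizing a 5 cm classification within each 30 m pixel. Since 30 m / 5 cm = 600, one 30 m pixel spans 600 × 600 = 360,000 five-centimeter pixels. The density of a class is then the fraction of those pixels that carry its label.

A minimal sketch of that aggregation follows. It assumes:

- The classified raster is a 2-D integer array of class labels.
- Its grid is exactly aligned with the 30 m grid.

The function name `class_densities`, the label encoding, and the choice to drop partial edge blocks are hypothetical. They are not taken from the study.

```python
import numpy as np

# 30 m / 5 cm = 600, so one 30 m pixel spans a 600 x 600 block of 5 cm pixels
# (assuming the fine and coarse grids are perfectly aligned).
BLOCK = int(round(30 / 0.05))


def class_densities(labels: np.ndarray, n_classes: int, block: int = BLOCK) -> np.ndarray:
    """Fraction of fine pixels in each class, per coarse cell (hypothetical helper).

    labels: 2-D integer array of per-pixel class labels, e.g. 0 = surface and
    1 = canopy (an assumed encoding). Partial blocks at the right and bottom
    edges are discarded. Returns shape (n_classes, rows, cols); when every
    label lies in range(n_classes), values sum to 1 over the first axis.
    """
    rows, cols = labels.shape[0] // block, labels.shape[1] // block
    trimmed = labels[: rows * block, : cols * block]
    # View each coarse cell as a (block x block) tile: axes (row, i, col, j).
    tiles = trimmed.reshape(rows, block, cols, block)
    # For each class, average the boolean membership over every tile.
    return np.stack([(tiles == k).mean(axis=(1, 3)) for k in range(n_classes)])


# Toy example with 2 x 2 tiles instead of 600 x 600.
toy = np.array([[1, 1, 0, 0], [1, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 0]])
density = class_densities(toy, n_classes=2, block=2)
# density[1] holds the canopy fraction per cell: [[1.0, 0.0], [0.0, 0.25]]
```

Reshaping into tiles avoids an explicit loop over the 30 m cells, which matters when a single orthomosaic holds billions of 5 cm pixels.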

2.2.2. Canopy Cover Analysis

2.3. Canopy Cover: sUAS Derived vs. LANDFIRE

2.3.1. Canopy Cover Comparison Methods
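The comparison procedure itself is not described in this excerpt. As an illustration only, a common way to compare two co-registered canopy cover products is to summarize their per-pixel differences. The sketch below makes three assumptions, none of which the source confirms:

- Both inputs are canopy cover percentages for the same 30 m pixels.
- The difference is taken as sUAS minus LANDFIRE.
- The spread is reported as the sample standard deviation.

```python
from statistics import mean, stdev


def compare_canopy_cover(suas, landfire):
    """Summarize per-pixel canopy cover differences (hypothetical helper).

    suas, landfire: equal-length sequences of canopy cover (percent) for the
    same co-registered 30 m pixels. Returns (mean difference, sample SD),
    using the assumed sign convention sUAS minus LANDFIRE.
    """
    if len(suas) != len(landfire):
        raise ValueError("inputs must cover the same pixels")
    diffs = [s - l for s, l in zip(suas, landfire)]
    return mean(diffs), stdev(diffs)


# Hypothetical values for four pixels, not data from this study.
mean_diff, sd_diff = compare_canopy_cover([45.0, 30.0, 60.0, 10.0], [40.0, 35.0, 50.0, 10.0])
```

With the hypothetical input shown, the mean difference is 2.5 percentage points.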

2.3.2. Canopy Cover Comparison Analysis

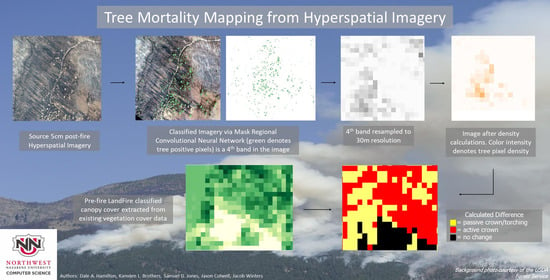

2.4. Mapping Tree Mortality via Canopy Reduction

2.4.1. Canopy Reduction Methods
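One hedged reading of "canopy reduction" is the share of pre-fire canopy cover that is missing after the fire. The sketch below assumes that, for the same 30 m pixel, two values are available:

- a pre-fire canopy cover value (for example, from LANDFIRE);
- a post-fire value derived from the imagery.

The helper name `canopy_reduction` and the choice to treat apparent canopy gains as zero loss are hypothetical.

```python
def canopy_reduction(pre_fire_cc: float, post_fire_cc: float) -> float:
    """Fraction of pre-fire canopy cover lost, from 0.0 to 1.0 (hypothetical helper).

    Both arguments are canopy cover percentages for the same 30 m pixel.
    The result is intended only as a proxy for tree mortality.
    """
    if pre_fire_cc <= 0:
        return 0.0  # no pre-fire canopy, so there is nothing to lose
    loss = max(pre_fire_cc - post_fire_cc, 0.0)  # ignore apparent gains
    return loss / pre_fire_cc


print(canopy_reduction(50.0, 20.0))  # 0.6
```

A relative measure like this lets a pixel that drops from 20% to 10% cover register the same reduction as one that drops from 60% to 30%. An absolute difference in percentage points would treat them differently.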

2.4.2. Canopy Reduction Analysis

3. Results

3.1. Tree Crown Classification Validation

- NewStart: Orthomosaic covered 474 acres. Surface vegetation consisted of brush and herbaceous vegetation in the riparian zone along Grimes Creek. Low canopy cover.

- Grimes Creek: Orthomosaic covered 249 acres. Surface vegetation consisted of brush and herbaceous vegetation both inside and outside the riparian zone along Grimes Creek. Moderate to low canopy cover.

- South Placerville: Orthomosaic covered 262 acres. Surface vegetation consisted of brush and herbaceous vegetation both inside and outside the riparian zone. Low canopy cover.

- East Placerville: Orthomosaic covered 627 acres. Mix of herbaceous vegetation and tree canopy. Moderate to low canopy cover.

- West Placerville: Orthomosaic covered 565 acres. Wide, open area covered in trees, with surface vegetation on the ground around the trees. Moderate to high canopy cover.

- Northwest Placerville: Orthomosaic covered 1268 acres. Flat area with trees and tree-like brush. Moderate canopy cover.

- Edna Creek: Orthomosaic covered 1084 acres. Many unforested areas or areas of dead trees, with patches of forest throughout the orthomosaic. Low canopy cover.

- Belshazzar: Orthomosaic covered 265 acres. Very dense surface vegetation; not in a riparian zone. Some areas had larger-than-normal orthomosaic stitching artifacts, which we hypothesized could hinder object detection. Predominantly high canopy cover with some meadows.

- North Experimental Forest: Orthomosaic covered 800 acres. Herbaceous vegetation around water sources. Sparse forest. Moderate to low canopy cover.

- South Experimental Forest: Orthomosaic covered 642 acres. Little vegetation other than trees; the ground is white in many areas. Moderate canopy cover.

3.2. Canopy Cover Analysis

3.3. Canopy Cover Comparison: Hyperspatial Derived vs. LANDFIRE

3.4. Tree Mortality Mapping Assessment

4. Discussion

5. Conclusions

5.1. Future Work

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A. Accuracy of Calculated Value for Canopy Cover

$$
\begin{aligned}
c\,p(1 - p) + (1 - c)\,q(1 - q) &< c(0.70)(1 - 0.70) + (1 - c)(0.95)(1 - 0.95) \\
&< (0.80)(0.70)(1 - 0.70) + (1 - 0.80)(0.95)(1 - 0.95)
\end{aligned}
$$
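The substitutions in the chained inequality can be checked numerically. The excerpt does not state the conditions, so the following are inferred from the steps themselves:

- The first step holds if p > 0.70 and q > 0.95, because x(1 − x) decreases for x > 0.5.
- The second step holds if c < 0.80, because the coefficient of c, 0.70 × 0.30 − 0.95 × 0.05 = 0.1625, is positive.

A minimal sketch under those assumptions follows; the names `lhs` and `check_bound` are hypothetical.

```python
def lhs(c: float, p: float, q: float) -> float:
    """Left-hand side of the Appendix A inequality: c p(1-p) + (1-c) q(1-q)."""
    return c * p * (1 - p) + (1 - c) * q * (1 - q)


# Right-most bound: (0.80)(0.70)(0.30) + (0.20)(0.95)(0.05) = 0.168 + 0.0095.
BOUND = lhs(0.80, 0.70, 0.95)


def check_bound(steps: int = 50) -> bool:
    """Return True if every grid point with 0 <= c <= 0.80, 0.70 <= p <= 1
    and 0.95 <= q <= 1 stays at or below BOUND (boundaries included, so the
    strict inequality becomes equality only at c=0.80, p=0.70, q=0.95)."""
    for i in range(steps + 1):
        c = 0.80 * i / steps
        for j in range(steps + 1):
            p = 0.70 + 0.30 * j / steps
            for k in range(steps + 1):
                q = 0.95 + 0.05 * k / steps
                if lhs(c, p, q) > BOUND + 1e-12:  # tolerance for float error
                    return False
    return True


print(round(BOUND, 4))  # 0.1775
print(check_bound())    # True
```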


| Site | | Surface | Canopy |
|---|---|---|---|
| South Placerville | Surface | 47.5% | 19.9% |
| | Canopy | 0.6% | 32.0% |
| NW Placerville | Surface | 46.6% | 6.2% |
| | Canopy | 2.3% | 44.9% |
| Edna Creek | Surface | 49.0% | 0.6% |
| | Canopy | 0.0% | 50.3% |
| South Exp. Forest | Surface | 49.1% | 10.6% |
| | Canopy | 0.4% | 40.0% |
| North Exp. Forest | Surface | 50.0% | 10.5% |
| | Canopy | 0.3% | 39.2% |
| East Placerville | Surface | 49.29% | 4.50% |
| | Canopy | 0.00% | 46.21% |
| West Placerville | Surface | 49.98% | 7.29% |
| | Canopy | 0.00% | 42.73% |
| Belshazzar | Surface | 47.1% | 3.6% |
| | Canopy | 3.0% | 46.3% |
| Newstart | Surface | 50.1% | 16.9% |
| | Canopy | 0.2% | 32.8% |
| Grimes Creek | Surface | 51.5% | 2.9% |
| | Canopy | 0.2% | 45.4% |

| Site | Accuracy | Specificity | Sensitivity |
|---|---|---|---|
| South Placerville | 79.5% | 98.2% | 70.5% |
| NW Placerville | 91.5% | 95.1% | 88.3% |
| Edna Creek | 99.3% | 100.0% | 98.8% |
| South Exp. Forest | 89.1% | 99.0% | 82.2% |
| North Exp. Forest | 89.2% | 99.2% | 82.6% |
| East Placerville | 95.5% | 100.0% | 91.6% |
| West Placerville | 92.7% | 100.0% | 87.3% |
| Belshazzar | 93.4% | 93.9% | 92.9% |
| Newstart | 82.9% | 99.4% | 74.8% |
| Grimes Creek | 96.9% | 99.6% | 94.7% |
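The accuracy, specificity, and sensitivity values can be reproduced from the Surface/Canopy confusion-matrix percentages. This reading is inferred from the numbers and is not stated in the text:

- Accuracy is the sum of the two diagonal entries.
- Sensitivity is the diagonal entry of the row labelled Surface divided by that row's total.
- Specificity is the diagonal entry of the row labelled Canopy divided by that row's total.

Whether the rows hold reference or predicted labels is not stated in this excerpt. The function name `summarize` is hypothetical.

```python
def summarize(matrix):
    """Accuracy, specificity and sensitivity (percent) from a 2 x 2 matrix.

    matrix is [[ss, sc], [cs, cc]] in the confusion-matrix table's layout:
    first row labelled Surface, second row labelled Canopy, columns in the
    same Surface, Canopy order. Entries are percentages of sampled pixels.
    """
    (ss, sc), (cs, cc) = matrix
    total = ss + sc + cs + cc
    accuracy = 100 * (ss + cc) / total
    sensitivity = 100 * ss / (ss + sc)  # Surface row: diagonal / row total
    specificity = 100 * cc / (cs + cc)  # Canopy row: diagonal / row total
    return accuracy, specificity, sensitivity


# South Placerville values from the confusion-matrix table.
acc, spec, sens = summarize([[47.5, 19.9], [0.6, 32.0]])
print(f"{acc:.1f} {spec:.1f} {sens:.1f}")  # 79.5 98.2 70.5
```

The same function applied to the NW Placerville matrix returns 91.5, 95.1, and 88.3 after rounding, matching the reported values.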

| Site | Mean | Standard Deviation |
|---|---|---|
| Belshazzar | 17% | 14 |
| New Start | 4% | 13 |
| Grimes Creek | 6% | 15 |
| NW Placerville | 1% | 11 |
| 0530 Placerville | 10% | 9 |
| Edna Creek | 4% | 1 |
| South Experimental Forest | 3% | 12 |
| North Experimental Forest | 7% | 12 |
| East Placerville | 7% | 12 |
| West Placerville | 2% | 13 |


© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Hamilton, D.A.; Brothers, K.L.; Jones, S.D.; Colwell, J.; Winters, J. Wildland Fire Tree Mortality Mapping from Hyperspatial Imagery Using Machine Learning. Remote Sens. 2021, 13, 290. https://doi.org/10.3390/rs13020290
