A Training Sample Migration Method for Wetland Mapping and Monitoring Using Sentinel Data in Google Earth Engine

Abstract

1. Introduction

2. Study Area and Data

2.1. Study Area

2.2. Field and Reference Data

2.3. Satellite Dataset

2.3.1. Sentinel-1 Data

2.3.2. Sentinel-2 Data

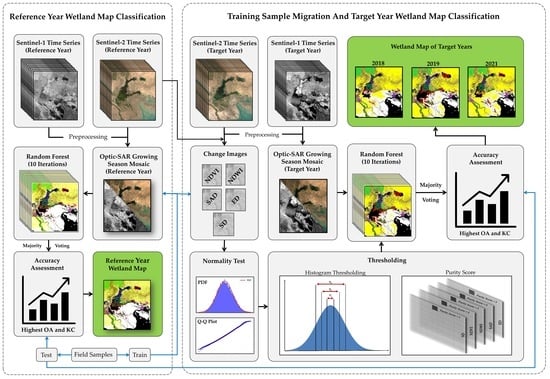

3. Methodology

3.1. Reference Year Wetland Classification

3.1.1. Satellite Data Preprocessing

3.1.2. Classification

3.2. Automatic Wetland Classification for Target Years Using Migrated Samples

3.2.1. Input Indices for the Migration Algorithm

3.2.2. Determination of the Optimum Threshold for Selecting Unchanged Training Samples

1. The pixel values corresponding to the reference-year training samples were extracted independently from each of the five change images, resulting in five sets of pixels.

2. For each set, the mean and standard deviation (SD) were computed independently.

3. A stepwise procedure was performed to extract the potentially unchanged pixels from each set at each step (see Figure 4a).

4. At each step, every pixel was given a purity score between 1 and 5 based on the number of sets in which it was present. A score of 1 indicated that the pixel existed in only one set (i.e., only one of the five purity conditions was satisfied, and the pixel had potentially changed), whereas a score of 5 indicated that the pixel existed in all five sets (i.e., all five purity conditions were satisfied, and the pixel was likely unchanged). Thus, the pixels with a score of 5 were selected at each step (see Figure 4b).

5. The unchanged pixels selected at each step were then used to classify the test samples specified in Section 2.2, and their quality was evaluated using the overall accuracy (OA) and Kappa coefficient (KC) derived from the confusion matrix of the classification.

6. Steps 4 and 5 were repeated until the classification accuracy stopped improving and the OA and KC began to decline. The step with the highest accuracy was then selected as the optimal threshold value.
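The stepwise search described above can be illustrated with a minimal sketch. It is not the authors' implementation: the purity condition is assumed here to be "the change value lies within a given number of SDs of the set mean", the population SD is used, and the function names, the `evaluate` callback (standing in for the classification of the test samples that returns an OA), and the list of candidate thresholds are all hypothetical.

```python
from typing import Callable, Optional, Sequence, Tuple

import numpy as np


def purity_scores(change_values: np.ndarray, threshold_sd: float) -> np.ndarray:
    """Count, per training pixel, how many change images satisfy the purity condition.

    change_values has shape (n_indices, n_pixels): one row per change image,
    holding the change value at each training-sample pixel.
    """
    mean = change_values.mean(axis=1, keepdims=True)
    sd = change_values.std(axis=1, keepdims=True)  # population SD (assumption)
    # Assumed purity condition: value within threshold_sd * SD of the set mean.
    within = np.abs(change_values - mean) <= threshold_sd * sd
    return within.sum(axis=0)


def find_optimum_threshold(
    change_values: np.ndarray,
    evaluate: Callable[[np.ndarray], float],
    thresholds: Sequence[float],
) -> Optional[Tuple[float, float, np.ndarray]]:
    """Return (threshold, accuracy, unchanged_mask) for the best step, or None."""
    n_indices = change_values.shape[0]
    best = None
    for t in thresholds:
        # Keep only pixels that satisfy the condition in every change image
        # (a purity score of 5 when five change images are used).
        unchanged = purity_scores(change_values, t) == n_indices
        if not unchanged.any():
            continue
        oa = evaluate(unchanged)  # hypothetical: classify test samples, return OA
        if best is None or oa > best[1]:
            best = (t, oa, unchanged)
        elif oa < best[1]:
            break  # accuracy began to decline, so stop searching
    return best
```

Passing a boolean mask to `evaluate` keeps the sketch independent of any particular classifier; in practice the callback would train on the selected pixels and score the held-out test samples.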

4. Results

4.1. Wetland Classification for the Reference Year

4.2. Migration of Training Samples

4.2.1. Normality Test

4.2.2. Optimum Threshold Determination

4.3. Wetland Classification for the Target Years

5. Discussion

5.1. Generated Wetland Map for the Reference Year

5.2. The Proposed Sample Migration Method

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A


| ID | Class Type | Class | Training Polygons | Training Area (km²) | Test Polygons | Test Area (km²) | Total Polygons | Total Area (km²) |
|---|---|---|---|---|---|---|---|---|
| 1 | Wetland | Juncus acutus | 44 | 7.65 | 22 | 3.81 | 66 | 11.46 |
| 2 | Wetland | Typha angustifolia | 27 | 6.2 | 12 | 2.4 | 39 | 8.6 |
| 3 | Wetland | Phragmites australis | 38 | 6.92 | 18 | 3.53 | 56 | 10.45 |
| 4 | Non-wetland | Non-wetland vegetation | 54 | 12.43 | 24 | 6.48 | 78 | 18.91 |
| 5 | Non-wetland | Agriculture land | 41 | 7.51 | 19 | 3.73 | 60 | 11.24 |
| 6 | Non-wetland | Water | 53 | 9.3 | 20 | 3.12 | 73 | 12.42 |
| 7 | Non-wetland | Bareland | 44 | 12.1 | 21 | 6.1 | 65 | 18.2 |
| 8 | Non-wetland | Mudflat | 49 | 11.58 | 27 | 6.9 | 76 | 18.48 |
| 9 | Non-wetland | Urban | 51 | 5.72 | 21 | 2.43 | 72 | 8.15 |
| Total | | | 401 | 79.41 | 184 | 38.5 | 585 | 117.91 |

| Year | Sentinel-1 Images | Sentinel-2 Images | Total Images | Acquisition Date |
|---|---|---|---|---|
| 2018 (Target) | 114 | 119 | 233 | 1 April 2018–29 June 2018 |
| 2019 (Target) | 120 | 110 | 230 | 1 April 2019–29 June 2019 |
| 2020 (Reference) | 112 | 111 | 223 | 1 April 2020–29 June 2020 |
| 2021 (Target) | 120 | 97 | 217 | 1 April 2021–29 June 2021 |

| Class | Juncus acutus | Typha angustifolia | Phragmites australis | Water | Non-Wetland Vegetation | Agriculture Land | Bareland | Mudflat | Urban |
|---|---|---|---|---|---|---|---|---|---|
| Juncus acutus | 7352 | 14 | 0 | 0 | 196 | 12 | 0 | 0 | 0 |
| Typha angustifolia | 43 | 7311 | 152 | 0 | 0 | 1 | 0 | 0 | 0 |
| Phragmites australis | 0 | 129 | 7221 | 0 | 16 | 0 | 0 | 0 | 0 |
| Water | 0 | 0 | 0 | 7605 | 0 | 0 | 0 | 11 | 0 |
| Non-wetland vegetation | 152 | 1 | 0 | 0 | 7420 | 21 | 0 | 0 | 0 |
| Agriculture land | 1 | 9 | 0 | 0 | 11 | 7473 | 53 | 0 | 21 |
| Bareland | 0 | 0 | 0 | 0 | 0 | 98 | 7466 | 9 | 108 |
| Mudflat | 0 | 0 | 6 | 13 | 0 | 3 | 23 | 7555 | 31 |
| Urban | 0 | 1 | 5 | 0 | 2 | 68 | 131 | 64 | 7208 |
| PA% | 97.07 | 97.39 | 98.03 | 99.86 | 97.71 | 98.74 | 97.20 | 99 | 96.38 |
| UA% | 97.4 | 97.94 | 97.79 | 99.83 | 97.06 | 97.36 | 97.3 | 98.9 | 97.83 |

OA = 97.93%, KC = 0.97
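As a worked check of how the accuracy figures follow from a confusion matrix, the sketch below recomputes the overall accuracy (OA), the producer's and user's accuracies (PA, UA), and the Kappa coefficient (KC) from the counts in the matrix directly above. The function and variable names are illustrative. The orientation is an inference: it assumes PA divides each diagonal entry by its row total and UA by its column total, which is consistent with the PA% and UA% rows reported above.

```python
import numpy as np


def accuracy_metrics(cm):
    """Return (OA, PA per class, UA per class, Kappa) for a square confusion matrix."""
    cm = np.asarray(cm, dtype=float)
    n = cm.sum()
    diag = np.diag(cm)
    row_totals = cm.sum(axis=1)
    col_totals = cm.sum(axis=0)
    oa = diag.sum() / n                      # overall accuracy
    pa = diag / row_totals                   # producer's accuracy (row-wise, assumed)
    ua = diag / col_totals                   # user's accuracy (column-wise, assumed)
    pe = (row_totals * col_totals).sum() / n ** 2  # expected chance agreement
    kappa = (oa - pe) / (1.0 - pe)
    return oa, pa, ua, kappa


# Counts copied from the confusion matrix above (same class order).
matrix = np.array([
    [7352,   14,    0,    0,  196,   12,    0,    0,    0],
    [  43, 7311,  152,    0,    0,    1,    0,    0,    0],
    [   0,  129, 7221,    0,   16,    0,    0,    0,    0],
    [   0,    0,    0, 7605,    0,    0,    0,   11,    0],
    [ 152,    1,    0,    0, 7420,   21,    0,    0,    0],
    [   1,    9,    0,    0,   11, 7473,   53,    0,   21],
    [   0,    0,    0,    0,    0,   98, 7466,    9,  108],
    [   0,    0,    6,   13,    0,    3,   23, 7555,   31],
    [   0,    1,    5,    0,    2,   68,  131,   64, 7208],
])

oa, pa, ua, kappa = accuracy_metrics(matrix)
print(f"OA = {oa * 100:.2f}%")
print("PA% =", np.round(pa * 100, 2))
print("UA% =", np.round(ua * 100, 2))
print(f"Kappa = {kappa:.4f}")
```

The recomputed Kappa may differ from the reported KC in the last digit depending on whether the published value was rounded or truncated.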

| Year | Optimum Threshold (SD) | Migrated Samples | OA% | KC | Purity Score |
|---|---|---|---|---|---|
| 2021 | 1 | 44,078 | 97.06 | 0.96 | 5 |
| 2019 | 0.9 | 38,762 | 96.83 | 0.96 | 5 |
| 2018 | 1.1 | 43,146 | 95.89 | 0.95 | 5 |

| Class | Juncus acutus | Typha angustifolia | Phragmites australis | Water | Non-Wetland Vegetation | Agriculture Land | Bareland | Mudflat | Urban |
|---|---|---|---|---|---|---|---|---|---|
| Juncus acutus | 7177 | 109 | 0 | 0 | 250 | 38 | 0 | 0 | 0 |
| Typha angustifolia | 192 | 7146 | 164 | 0 | 0 | 3 | 0 | 2 | 0 |
| Phragmites australis | 0 | 135 | 7121 | 0 | 67 | 0 | 0 | 43 | 0 |
| Water | 0 | 0 | 0 | 7598 | 0 | 0 | 0 | 18 | 0 |
| Non-wetland vegetation | 153 | 5 | 41 | 0 | 7318 | 77 | 0 | 0 | 0 |
| Agriculture land | 4 | 34 | 0 | 0 | 21 | 7464 | 3 | 27 | 15 |
| Bareland | 0 | 0 | 0 | 0 | 0 | 2 | 7445 | 114 | 128 |
| Mudflat | 0 | 1 | 68 | 4 | 0 | 0 | 38 | 7520 | 0 |
| Urban | 0 | 0 | 4 | 0 | 2 | 69 | 169 | 11 | 7267 |
| PA% | 94.76 | 95.19 | 96.67 | 99.76 | 97.16 | 98.63 | 96.93 | 98.55 | 96.59 |
| UA% | 95.36 | 96.18 | 96.26 | 99.95 | 95.24 | 97.53 | 97.26 | 97.22 | 98.17 |

OA = 97.06%, KC = 0.96

(a)

| Class | Juncus acutus | Typha angustifolia | Phragmites australis | Water | Non-Wetland Vegetation | Agriculture Land | Bareland | Mudflat | Urban |
|---|---|---|---|---|---|---|---|---|---|
| Juncus acutus | 7192 | 121 | 0 | 0 | 253 | 8 | 0 | 0 | 0 |
| Typha angustifolia | 196 | 7042 | 248 | 0 | 10 | 10 | 0 | 0 | 1 |
| Phragmites australis | 0 | 329 | 7007 | 0 | 0 | 1 | 0 | 28 | 1 |
| Water | 0 | 0 | 2 | 7609 | 0 | 0 | 0 | 5 | 0 |
| Non-wetland vegetation | 213 | 1 | 0 | 0 | 7327 | 53 | 0 | 0 | 0 |
| Agriculture land | 0 | 9 | 0 | 0 | 31 | 7477 | 0 | 5 | 46 |
| Bareland | 0 | 0 | 0 | 0 | 0 | 16 | 7461 | 41 | 163 |
| Mudflat | 0 | 1 | 10 | 2 | 0 | 10 | 41 | 7545 | 22 |
| Urban | 0 | 0 | 5 | 0 | 1 | 67 | 172 | 39 | 7195 |
| PA% | 94.96 | 93.81 | 95.13 | 99.91 | 96.48 | 98.8 | 97.14 | 98.87 | 96.2 |
| UA% | 94.62 | 93.86 | 96.36 | 99.97 | 96.13 | 97.84 | 97.22 | 98.46 | 96.86 |

OA = 96.83%, KC = 0.96

(b)

| Class | Juncus acutus | Typha angustifolia | Phragmites australis | Water | Non-Wetland Vegetation | Agriculture Land | Bareland | Mudflat | Urban |
|---|---|---|---|---|---|---|---|---|---|
| Juncus acutus | 7127 | 107 | 0 | 0 | 314 | 26 | 0 | 0 | 0 |
| Typha angustifolia | 196 | 6999 | 282 | 0 | 29 | 1 | 0 | 0 | 0 |
| Phragmites australis | 0 | 222 | 7045 | 14 | 71 | 0 | 0 | 14 | 0 |
| Water | 0 | 0 | 2 | 7612 | 0 | 0 | 0 | 2 | 0 |
| Non-wetland vegetation | 293 | 42 | 44 | 0 | 7150 | 65 | 0 | 0 | 0 |
| Agriculture land | 0 | 32 | 0 | 0 | 82 | 7381 | 25 | 23 | 25 |
| Bareland | 0 | 0 | 0 | 0 | 0 | 19 | 7291 | 78 | 293 |
| Mudflat | 0 | 3 | 17 | 2 | 0 | 30 | 2 | 7531 | 46 |
| Urban | 0 | 2 | 12 | 0 | 4 | 69 | 257 | 44 | 7091 |
| PA% | 94.1 | 93.27 | 95.64 | 99.95 | 94.15 | 97.53 | 94.92 | 98.69 | 94.81 |
| UA% | 93.59 | 94.49 | 95.2 | 99.79 | 93.46 | 97.23 | 96.25 | 97.91 | 95.12 |

OA = 95.89%, KC = 0.95

(c)

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Fekri, E.; Latifi, H.; Amani, M.; Zobeidinezhad, A. A Training Sample Migration Method for Wetland Mapping and Monitoring Using Sentinel Data in Google Earth Engine. Remote Sens. 2021, 13, 4169. https://doi.org/10.3390/rs13204169
