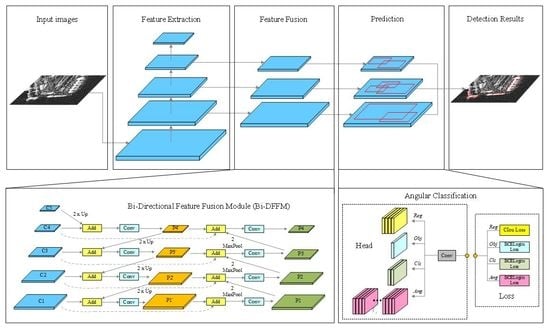

Figure 2.

The overall scheme of the proposed method.

Figure 3.

Random rotation mosaic (RR-Mosaic) data augmentation.

Figure 4.

Structure of Bi-DFFM.

Figure 5.

The oriented bounding box (OBB) of ships in SAR images, where θ is the angle between the long side of the rectangle and the x-axis. (a) θ belongs to [−90°, 0°). (b) θ belongs to [0°, 90°).
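
The long-side angle convention in Figure 5 can be illustrated with a minimal Python sketch. It assumes a box is given as (w, h, θ), with θ in degrees measured between the side of length w and the x-axis; the names `wrap_angle` and `to_long_side` are hypothetical and not taken from the authors' code. Whether a positive θ turns clockwise or counterclockwise depends on the image y-axis direction, so only the swapping and wrapping logic is shown.

```python
from typing import Tuple


def wrap_angle(theta: float) -> float:
    """Wrap an angle in degrees into the half-open range [-90, 90)."""
    return (theta + 90.0) % 180.0 - 90.0


def to_long_side(w: float, h: float, theta: float) -> Tuple[float, float, float]:
    """Convert (w, h, theta) to (long side, short side, long-side angle).

    Hypothetical helper: theta is the angle of the side of length w.
    If h is the longer side, the long side is perpendicular to w,
    so its angle is theta + 90 before wrapping.
    """
    if w >= h:
        return w, h, wrap_angle(theta)
    return h, w, wrap_angle(theta + 90.0)


if __name__ == "__main__":
    print(to_long_side(10.0, 30.0, 30.0))  # long side is h, angle shifted by 90
```

Because every box is reduced to one long side and one angle in [−90°, 90°), the two cases in panels (a) and (b) together cover the full range without duplicates.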

Figure 6.

The distributions of the sizes, aspect ratios, angles, and corresponding errors of the horizontal bounding boxes (HBBs) and oriented bounding boxes (OBBs) in SSDD. (a) Distribution of the bounding boxes' lengths in SSDD. (b) Distribution of the bounding boxes' widths in SSDD. (c) Distribution of the bounding boxes' sizes in SSDD. (d) Distribution of the bounding boxes' aspect ratios in SSDD. (e) Distribution of the corresponding errors of the HBBs and OBBs in SSDD. (f) Distribution of the oriented bounding boxes' angles in SSDD.

Figure 7.

The large-scene images. (a) GF-3 HR SAR image. (b) Corresponding optical image.

Figure 8.

The distributions of the sizes, aspect ratios, and angles of the oriented bounding boxes in the GF-3 dataset. (a) Distribution of the bounding boxes' lengths in the GF-3 dataset. (b) Distribution of the bounding boxes' widths in the GF-3 dataset. (c) Distribution of the bounding boxes' aspect ratios in the GF-3 dataset. (d) Distribution of the oriented bounding boxes' angles in the GF-3 dataset.

Figure 9.

Distributions of the angles on SSDD with different data augmentation methods. (a) Flip. (b) Rotation. (c) Random Rotation. (d) Flip Mosaic. (e) Rotation Mosaic. (f) RR-Mosaic.
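
Why the augmentations in Figure 9 reshape the angle histogram can be sketched under the long-side convention: a horizontal flip maps θ to −θ, and rotating the image by α shifts every box angle by α, both wrapped back into [−90°, 90°). The helpers below are hypothetical, not the authors' RR-Mosaic code, and the sign of α assumes the rotation is measured in the same sense as θ.

```python
import random
from typing import List


def wrap_angle(theta: float) -> float:
    """Wrap an angle in degrees into [-90, 90)."""
    return (theta + 90.0) % 180.0 - 90.0


def hflip_angle(theta: float) -> float:
    """Long-side angle after a horizontal flip (x -> W - x mirrors theta)."""
    return wrap_angle(-theta)


def rotate_angle(theta: float, alpha: float) -> float:
    """Long-side angle after rotating the image by alpha degrees."""
    return wrap_angle(theta + alpha)


def random_rotate_angles(angles: List[float], rng: random.Random) -> List[float]:
    """Rotate all boxes of one image by a single random alpha.

    Sampling alpha in [-90, 90) is enough for the angle itself, because
    alpha and alpha + 180 give the same wrapped long-side angle.
    """
    alpha = rng.uniform(-90.0, 90.0)
    return [rotate_angle(t, alpha) for t in angles]


if __name__ == "__main__":
    print(random_rotate_angles([0.0, 45.0, -60.0], random.Random(0)))
```

A 180° rotation leaves every θ unchanged and a flip only mirrors the existing histogram, whereas a random α moves boxes into angle bins that may be sparse in the original data.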

Figure 10.

Experimental results on SSDD. The blue number represents the number of detected ships.

Figure 11.

Precision-Recall (PR) curves of different models on SSDD. (a) PR curves of YOLOv5s-CSL, YOLOv5m-CSL, YOLOv5l-CSL, YOLOv5x-CSL and BiFA-YOLO. (b) PR curves of YOLOv5s-DCL, YOLOv5m-DCL, YOLOv5l-DCL, YOLOv5x-DCL and BiFA-YOLO.

Figure 12.

Comparison of the PR curves of different methods in inshore and offshore scenes on SSDD. (a) PR curves of different methods in the inshore scene. (b) PR curves of different methods in the offshore scene.

Figure 13.

Detection results of different methods in the inshore scene. (a,k) ground truths. (b,l) results of YOLOv5s-CSL. (c,m) results of YOLOv5m-CSL. (d,n) results of YOLOv5l-CSL. (e,o) results of YOLOv5x-CSL. (f,p) results of YOLOv5s-DCL. (g,q) results of YOLOv5m-DCL. (h,r) results of YOLOv5l-DCL. (i,s) results of YOLOv5x-DCL. (j,t) results of BiFA-YOLO. Note that the red boxes represent true positive targets, the yellow ellipses represent false positive targets, and the green ellipses represent missed targets.

Figure 14.

Detection results of different methods in the offshore scene. (a,k) ground truths. (b,l) results of YOLOv5s-CSL. (c,m) results of YOLOv5m-CSL. (d,n) results of YOLOv5l-CSL. (e,o) results of YOLOv5x-CSL. (f,p) results of YOLOv5s-DCL. (g,q) results of YOLOv5m-DCL. (h,r) results of YOLOv5l-DCL. (i,s) results of YOLOv5x-DCL. (j,t) results of BiFA-YOLO. Note that the red boxes represent true positive targets, the yellow ellipses represent false positive targets, and the green ellipses represent missed targets.

Figure 15.

Detection results of different CNN-based methods on SSDD. (a,g,m) ground truths. (b,h,n) results of DRBox-v1. (c,i,o) results of SDOE. (d,j,p) results of DRBox-v2. (e,k,q) results of improved R-RetinaNet. (f,l,r) results of the proposed BiFA-YOLO. Note that the red boxes represent true positive targets, the yellow ellipses represent false positive targets, and the green ellipses represent missed targets; the blue number represents the number of detected ships.

Figure 16.

Detection results in a large-scene SAR image. Note that the red boxes represent true positive targets, the yellow ellipses represent false positive targets, and the green ellipses represent missed targets; the blue number represents the number of detected ships.

Figure 17.

Feature map visualization results of the feature pyramid with and without Bi-DFFM. (a,c,e,g,i,k) represent results without Bi-DFFM. (b,d,f,h,j,l) represent results with Bi-DFFM.

Figure 18.

Feature map visualization results of the three-scale prediction layers with and without Bi-DFFM. (a,b,e,f,i,j) represent results without Bi-DFFM. (c,d,g,h,k,l) represent results with Bi-DFFM.

Table 1.

Division of the angle categories.

| Range | Interval (°) | Categories |
|---|---|---|
| 0–180° | 1 | 180 |
| 0–180° | 2 | 90 |
| 0–90° | 1 | 90 |
| 0–90° | 2 | 45 |
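
Each row of Table 1 follows from dividing the angle range by the interval (for example, 180°/2° = 90 categories). These categories are what the circular smooth label (CSL) is defined over, so a minimal sketch of a CSL vector with a Gaussian window is given below. The window radius of 6 categories and the [0°, range) angle convention are assumptions for illustration, not values stated in this section, and the function names are hypothetical.

```python
import math
from typing import List


def num_categories(angle_range: float, interval: float) -> int:
    """Number of angle categories, e.g. 180 / 2 -> 90."""
    return int(round(angle_range / interval))


def csl_label(angle: float, angle_range: float = 180.0,
              interval: float = 1.0, radius: float = 6.0) -> List[float]:
    """Circular smooth label: a Gaussian window centred on the angle's category.

    The distance between categories is measured circularly, so the first
    and last categories are treated as neighbours (no boundary jump).
    """
    n = num_categories(angle_range, interval)
    center = int(round(angle / interval)) % n
    label = []
    for i in range(n):
        diff = abs(i - center)
        d = min(diff, n - diff)  # circular distance in categories
        value = math.exp(-(d * d) / (2.0 * radius * radius)) if d <= radius else 0.0
        label.append(value)
    return label


if __name__ == "__main__":
    lab = csl_label(0.0)
    print(len(lab), lab[0], lab[1] == lab[-1])
```

The circular distance is the point of the encoding: an angle near the end of the range produces a label that overlaps the categories at the start, which a plain one-hot label would not.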

Table 2.

The binary coded labels and Gray coded labels corresponding to the angle categories.

| Angle Category | 1 | 45 | 90 | 135 | 179 |
|---|---|---|---|---|---|
| Binary Coded Label | 00000001 | 01000000 | 10000000 | 11000000 | 11111111 |
| Gray Coded Label | 00000001 | 01100000 | 11000000 | 10100000 | 10000000 |
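
The labels in Table 2 are consistent with mapping an angle to one of 2^8 = 256 levels over 180° (index = round(angle × 256 / 180), so 45 → 64 → 01000000) and then applying the standard reflected Gray code g = n XOR (n >> 1). That mapping is inferred from the table values rather than stated in this section, and the helper names below are hypothetical.

```python
from typing import Tuple


def angle_to_index(angle: float, bits: int = 8, angle_range: float = 180.0) -> int:
    """Quantize an angle to one of 2**bits levels (assumed mapping)."""
    levels = 2 ** bits
    return min(int(round(angle * levels / angle_range)), levels - 1)


def to_gray(n: int) -> int:
    """Reflected binary Gray code: adjacent integers differ in one bit."""
    return n ^ (n >> 1)


def from_gray(g: int) -> int:
    """Invert the Gray code by XOR-ing all right shifts of g."""
    n = 0
    while g:
        n ^= g
        g >>= 1
    return n


def encode(angle: float, bits: int = 8) -> Tuple[str, str]:
    """Return (binary label, Gray label) as fixed-width bit strings."""
    idx = angle_to_index(angle, bits)
    return format(idx, f"0{bits}b"), format(to_gray(idx), f"0{bits}b")


if __name__ == "__main__":
    for a in (1, 45, 90, 135, 179):
        print(a, *encode(a))
```

The Gray code is useful here because neighbouring angle categories differ in exactly one bit, so a one-bit prediction error on a nearby angle costs less than it can under plain binary coding.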

Table 3.

Division of different datasets.

| Dataset | Training | Test | All |
|---|---|---|---|
| SSDD | 812 | 348 | 1160 |
| GF-3 Dataset | 10,150 | 4729 | 14,879 |

Table 4.

Experimental environment.

| Item | Model/Version |
|---|---|
| CPU | Intel i7-10875H |
| RAM | 32 GB |
| GPU | NVIDIA RTX 2070 |
| System | Windows 10 |
| Code | Python 3.8 |
| Framework | CUDA 10.1 / cuDNN 7.6.5 / PyTorch 1.6 |

Table 5.

Detection results with different data augmentation methods.

| Method | Precision (%) | Recall (%) | AP (%) | F1 |
|---|---|---|---|---|
| Flip | 93.84 | 93.02 | 92.23 | 0.9343 |
| Rotation | 93.65 | 93.23 | 92.25 | 0.9344 |
| Random Rotation | 94.80 | 93.33 | 92.72 | 0.9408 |
| Flip Mosaic | 93.49 | 94.13 | 93.06 | 0.9380 |
| Rotation Mosaic | 93.25 | 93.46 | 92.49 | 0.9335 |
| RR-Mosaic | 94.85 | 93.97 | 93.90 | 0.9441 |
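
The F1 column is the harmonic mean of precision and recall, F1 = 2PR / (P + R). A quick Python check against the reported values, with precision and recall converted from percentages to fractions (the function name is illustrative):

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (both given on the same scale)."""
    if precision + recall == 0:
        return 0.0
    return 2.0 * precision * recall / (precision + recall)


if __name__ == "__main__":
    # RR-Mosaic row: P = 94.85 %, R = 93.97 %
    print(round(f1_score(0.9485, 0.9397), 4))
```

Because the harmonic mean is pulled toward the smaller of the two values, a method cannot raise F1 much by improving only precision or only recall.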

Table 6.

Comparison of the methods without and with angular classification.

| Method | Precision (%) | Recall (%) | AP (%) | F1 |
|---|---|---|---|---|
| YOLOv5 | 90.30 | 92.00 | 90.80 | 0.9114 |
| BiFA-YOLO + CSL | 94.85 | 93.97 | 93.90 | 0.9441 |
| BiFA-YOLO + DCL | 94.25 | 93.66 | 92.59 | 0.9395 |

Table 7.

Comparison of different models using the CSL on SSDD.

| Method | Precision (%) | Recall (%) | AP (%) | F1 | Time (ms) | Params (M) | Model Size (MB) |
|---|---|---|---|---|---|---|---|
| YOLOv5s-CSL | 91.73 | 88.79 | 86.66 | 0.9024 | 12.1 | 7.38 | 14.9 |
| YOLOv5m-CSL | 91.86 | 93.02 | 90.78 | 0.9244 | 13.2 | 21.19 | 42.6 |
| YOLOv5l-CSL | 93.03 | 93.23 | 92.25 | 0.9313 | 13.8 | 46.13 | 92.6 |
| YOLOv5x-CSL | 94.60 | 93.66 | 92.73 | 0.9422 | 16.2 | 85.50 | 171.0 |
| BiFA-YOLO | 94.85 | 93.97 | 93.90 | 0.9441 | 13.3 | 19.57 | 39.4 |
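
The parameter counts and model sizes in this table are roughly consistent with storing each weight as a 16-bit float (2 bytes per parameter, e.g. 85.50 M × 2 B ≈ 171.0 MB), and the per-image time converts to throughput as 1000 / t. Both relations are assumptions about how the numbers were produced (FP16 storage, decimal megabytes, batch size 1), sketched here only for orientation; the function names are hypothetical.

```python
def fp16_size_mb(params_millions: float) -> float:
    """Approximate weight-file size in MB (1 MB = 1e6 bytes), 2 bytes per parameter.

    Assumption: ignores checkpoint metadata, so real files are slightly larger.
    """
    return params_millions * 1e6 * 2 / 1e6


def fps_from_latency(ms_per_image: float) -> float:
    """Images per second for sequential, batch-size-1 inference."""
    return 1000.0 / ms_per_image


if __name__ == "__main__":
    # BiFA-YOLO row: 19.57 M parameters, 13.3 ms per image
    print(round(fp16_size_mb(19.57), 2), round(fps_from_latency(13.3), 1))
```

The small gap between the estimate (about 39.1 MB for BiFA-YOLO) and the reported 39.4 MB is what one would expect from non-weight data stored alongside the parameters.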

Table 8.

Comparison of different models using the DCL on SSDD.

| Method | Precision (%) | Recall (%) | AP (%) | F1 | Time (ms) | Params (M) | Model Size (MB) |
|---|---|---|---|---|---|---|---|
| YOLOv5s-DCL | 91.93 | 88.69 | 85.75 | 0.9028 | 12.0 | 6.94 | 14.0 |
| YOLOv5m-DCL | 92.20 | 92.49 | 89.69 | 0.9235 | 13.6 | 20.53 | 41.3 |
| YOLOv5l-DCL | 92.23 | 92.92 | 90.63 | 0.9257 | 15.4 | 45.24 | 90.8 |
| YOLOv5x-DCL | 94.69 | 92.39 | 91.29 | 0.9353 | 15.7 | 84.39 | 169.0 |
| BiFA-YOLO | 94.85 | 93.97 | 93.90 | 0.9441 | 13.3 | 19.57 | 39.4 |

Table 9.

Detection results of the proposed method in inshore and offshore scenes on SSDD.

| Scene | Precision (%) | Recall (%) | AP (%) | F1 |
|---|---|---|---|---|
| Inshore | 92.81 | 91.60 | 91.15 | 0.9220 |
| Offshore | 96.16 | 95.55 | 94.81 | 0.9585 |

Table 10.

Detection results of different CNN-based methods on SSDD.

| Method | Bounding Box | Framework | AP (%) | Time (ms) |
|---|---|---|---|---|
| R-FPN [49] | Oriented | Two-Stage | 84.38 | - |
| R-Faster-RCNN [49] | Oriented | Two-Stage | 82.22 | - |
| RRPN [53] | Oriented | Two-Stage | 74.82 | 316.0 |
| R2CNN [53] | Oriented | Two-Stage | 80.26 | 210.8 |
| R-DFPN [53] | Oriented | Two-Stage | 83.44 | 370.5 |
| MSR2N [49] | Oriented | Two-Stage | 93.93 | 103.3 |
| SCRDet [63] | Oriented | Two-Stage | 92.04 | 120.8 |
| Cascade RCNN [48] | Oriented | Multi-Stage | 88.45 | 357.6 |
| R-YOLOv3 [53] | Oriented | One-Stage | 73.15 | 34.2 |
| R-Attention-ResNet [53] | Oriented | One-Stage | 76.40 | 39.6 |
| R-RetinaNet [50] | Oriented | One-Stage | 92.34 | 46.5 |
| R2FA-Det [48] | Oriented | One-Stage | 94.72 | 63.2 |
| DRBox-v1 [51] | Oriented | One-Stage | 86.41 | - |
| DRBox-v2 [51] | Oriented | One-Stage | 92.81 | 55.1 |
| MSARN [53] | Oriented | One-Stage | 76.24 | 35.4 |
| CSAP [54] | Oriented | One-Stage | 90.75 | - |
| SDOE [47] | Oriented | One-Stage | 84.20 | 25.0 |
| BiFA-YOLO | Oriented | One-Stage | 93.90 | 13.3 |