A Robust Vegetation Index Based on Different UAV RGB Images to Estimate SPAD Values of Naked Barley Leaves

Abstract

1. Introduction

2. Materials and Methods

2.1. Experimental Field Design

2.2. Data Collection and Analysis

2.3. Data Processing

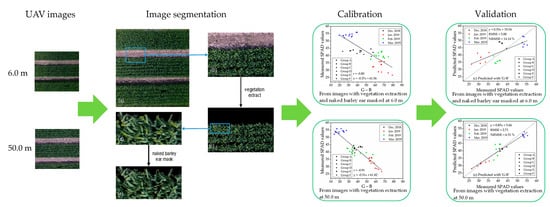

2.3.1. Flowchart of Data Processing

2.3.2. Image Segmentation

2.3.3. Color-Based Vegetation Indices

2.3.4. Data Analysis

3. Results

3.1. SPAD Values for Different Fertilizer Treatments

3.2. Optimal Image Processing for Best Relating SPAD Values with Vegetation Indices

3.3. Relationships between SPAD Values and Vegetation Indices

3.4. Validation of the Linear Regression Models for Predicting SPAD Values

4. Discussion

4.1. The SPAD Value Estimation Improvements by Vegetation Extraction and Naked Barley Ears Mask

4.2. Performance of the Color-Based VIs on Estimating SPAD Values

5. Conclusions

Author Contributions

Funding

Conflicts of Interest

References

- Food and Agriculture Organization of the United Nations. Available online: http://www.fao.org/faostat/zh/#data/QC/visualize (accessed on 22 December 2020).

- Vinesh, B.; Prasad, L.; Prasad, R.; Madhukar, K. Association studies of yield and it’s attributing traits in indigenous and exotic Barley (Hordeum vulgare L.) germplasm. J. Pharmacogn. Phytochem. 2018, 7, 1500–1502.

- Agegnehu, G.; Nelson, P.N.; Bird, M.I. Crop yield, plant nutrient uptake and soil physicochemical properties under organic soil amendments and nitrogen fertilization on Nitisols. Soil Tillage Res. 2016, 160, 1–13.

- Spaner, D.; Todd, A.G.; Navabi, A.; McKenzie, D.B.; Goonewardene, L.A. Can leaf chlorophyll measures at differing growth stages be used as an indicator of winter wheat and spring barley nitrogen requirements in eastern Canada? J. Agron. Crop Sci. 2005, 191, 393–399.

- Houles, V.; Guerif, M.; Mary, B. Elaboration of a nitrogen nutrition indicator for winter wheat based on leaf area index and chlorophyll content for making nitrogen recommendations. Eur. J. Agron. 2007, 27, 1–11.

- Clevers, J.G.P.W.; Kooistra, L. Using hyperspectral remote sensing data for retrieving canopy chlorophyll and nitrogen content. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2011, 5, 574–583.

- Yu, K.; Lenz-Wiedemann, V.; Chen, X.; Bareth, G. Estimating leaf chlorophyll of barley at different growth stages using spectral indices to reduce soil background and canopy structure effects. ISPRS J. Photogramm. Remote Sens. 2014, 97, 58–77.

- Shah, S.H.; Houborg, R.; McCabe, M.F. Response of chlorophyll, carotenoid and SPAD-502 measurement to salinity and nutrient stress in wheat (Triticum aestivum L.). Agronomy 2017, 7, 61.

- Follett, R.; Follett, R.; Halvorson, A. Use of a chlorophyll meter to evaluate the nitrogen status of dryland winter wheat. Commun. Soil Sci. Plant Anal. 1992, 23, 687–697.

- Li, G.; Ding, Y.; Xue, L.; Wang, S. Research progress on diagnosis of nitrogen nutrition and fertilization recommendation for rice by use chlorophyll meter. Plant Nutr. Fert. Sci. 2005, 11, 412–416.

- Lu, X.; Lu, S. Effects of adaxial and abaxial surface on the estimation of leaf chlorophyll content using hyperspectral vegetation indices. Int. J. Remote Sens. 2015, 36, 1447–1469.

- Jiang, C.; Johkan, M.; Hohjo, M.; Tsukagoshi, S.; Maruo, T. A correlation analysis on chlorophyll content and SPAD value in tomato leaves. HortResearch 2017, 71, 37–42.

- Minolta, C. Manual for Chlorophyll Meter SPAD-502 Plus; Minolta Camera Co.: Osaka, Japan, 2013.

- Pagola, M.; Ortiz, R.; Irigoyen, I.; Bustince, H.; Barrenechea, E.; Aparicio-Tejo, P.; Lamsfus, C.; Lasa, B. New method to assess barley nitrogen nutrition status based on image colour analysis: Comparison with SPAD-502. Comput. Electron. Agr. 2009, 65, 213–218.

- Golzarian, M.R.; Frick, R.A. Classification of images of wheat, ryegrass and brome grass species at early growth stages using principal component analysis. Plant Methods 2011, 7, 28.

- Rorie, R.L.; Purcell, L.C.; Mozaffari, M.; Karcher, D.E.; King, C.A.; Marsh, M.C.; Longer, D.E. Association of “greenness” in corn with yield and leaf nitrogen concentration. Agron. J. 2011, 103, 529–535.

- Wang, Y.; Wang, D.; Shi, P.; Omasa, K. Estimating rice chlorophyll content and leaf nitrogen concentration with a digital still color camera under natural light. Plant Methods 2014, 10, 36.

- Peñuelas, J.; Gamon, A.; Fredeen, A.; Merino, J.; Field, C. Reflectance indices associated with physiological changes in nitrogen-and water-limited sunflower leaves. Remote Sens. Environ. 1994, 48, 135–146.

- Woebbecke, D.M.; Meyer, G.E.; Von Bargen, K.; Mortensen, D.A. Color indices for weed identification under various soil, residue, and lighting conditions. Trans. ASAE 1995, 38, 259–269.

- Kawashima, S.; Nakatani, M. An algorithm for estimating chlorophyll content in leaves using a video camera. Ann. Bot. 1998, 81, 49–54.

- Wang, F.; Wang, K.; Li, S.; Chen, B.; Chen, J. Estimation of chlorophyll and nitrogen contents in cotton leaves using digital camera and imaging spectrometer. Acta Agron. Sin. 2010, 36, 1981–1989.

- Wei, Q.; Li, L.; Ren, T.; Wang, Z.; Wang, S.; Li, X.; Cong, R.; Lu, J. Diagnosing nitrogen nutrition status of winter rapeseed via digital image processing technique. Sci. Agric. Sin. 2015, 48, 3877–3886.

- Graeff, S.; Pfenning, J.; Claupein, W.; Liebig, H.-P. Evaluation of image analysis to determine the N-fertilizer demand of broccoli plants (Brassica oleracea convar. botrytis var. italica). Adv. Opt. Technol. 2008, 2008, 359760.

- Wiwart, M.; Fordoński, G.; Żuk-Gołaszewska, K.; Suchowilska, E. Early diagnostics of macronutrient deficiencies in three legume species by color image analysis. Comput. Electron. Agr. 2009, 65, 125–132.

- Sulistyo, S.B.; Woo, W.L.; Dlay, S.S. Regularized neural networks fusion and genetic algorithm based on-field nitrogen status estimation of wheat plants. IEEE Trans. Ind. Informat. 2016, 13, 103–114.

- Kanning, M.; Kühling, I.; Trautz, D.; Jarmer, T. High-resolution UAV-based hyperspectral imagery for LAI and chlorophyll estimations from wheat for yield prediction. Remote Sens. 2018, 10, 2000.

- Hunt Jr, E.R.; Daughtry, C.S. What good are unmanned aircraft systems for agricultural remote sensing and precision agriculture? Int. J. Remote Sens. 2018, 39, 5345–5376.

- Guo, Y.; Senthilnath, J.; Wu, W.; Zhang, X.; Zeng, Z.; Huang, H. Radiometric calibration for multispectral camera of different imaging conditions mounted on a UAV platform. Sustainability 2019, 11, 978.

- Han-Ya, I.; Ishii, K.; Noguchi, N. Satellite and aerial remote sensing for production estimates and crop assessment. Environ. Control. Biol. 2010, 48, 51–58.

- Gevaert, C.M.; Suomalainen, J.; Tang, J.; Kooistra, L. Generation of spectral–temporal response surfaces by combining multispectral satellite and hyperspectral UAV imagery for precision agriculture applications. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 8, 3140–3146.

- Yue, J.; Yang, G.; Li, C.; Li, Z.; Wang, Y.; Feng, H.; Xu, B. Estimation of winter wheat above-ground biomass using unmanned aerial vehicle-based snapshot hyperspectral sensor and crop height improved models. Remote Sens. 2017, 9, 708.

- Zheng, H.; Cheng, T.; Li, D.; Zhou, X.; Yao, X.; Tian, Y.; Cao, W.; Zhu, Y. Evaluation of RGB, color-infrared and multispectral images acquired from unmanned aerial systems for the estimation of nitrogen accumulation in rice. Remote Sens. 2018, 10, 824.

- Teng, P.; Zhang, Y.; Shimizu, Y.; Hosoi, F.; Omasa, K. Accuracy assessment in 3D remote sensing of rice plants in paddy field using a small UAV. Eco-Engineering 2016, 28, 107–112.

- Teng, P.; Ono, E.; Zhang, Y.; Aono, M.; Shimizu, Y.; Hosoi, F.; Omasa, K. Estimation of ground surface and accuracy assessments of growth parameters for a sweet potato community in ridge cultivation. Remote Sens. 2019, 11, 1487.

- Liebisch, F.; Kirchgessner, N.; Schneider, D.; Walter, A.; Hund, A. Remote, aerial phenotyping of maize traits with a mobile multi-sensor approach. Plant Methods 2015, 11, 9.

- Candiago, S.; Remondino, F.; De Giglio, M.; Dubbini, M.; Gattelli, M. Evaluating multispectral images and vegetation indices for precision farming applications from UAV images. Remote Sens. 2015, 7, 4026–4047.

- Lu, N.; Zhou, J.; Han, Z.; Li, D.; Cao, Q.; Yao, X.; Tian, Y.; Zhu, Y.; Cao, W.; Cheng, T. Improved estimation of aboveground biomass in wheat from RGB imagery and point cloud data acquired with a low-cost unmanned aerial vehicle system. Plant Methods 2019, 15, 17.

- Shimojima, K.; Ogawa, S.; Naito, H.; Valencia, M.O.; Shimizu, Y.; Hosoi, F.; Uga, Y.; Ishitani, M.; Selvaraj, M.G.; Omasa, K. Comparison between rice plant traits and color indices calculated from UAV remote sensing images. Eco-Engineering 2017, 29, 11–16.

- Escalante, H.; Rodríguez-Sánchez, S.; Jiménez-Lizárraga, M.; Morales-Reyes, A.; De La Calleja, J.; Vazquez, R. Barley yield and fertilization analysis from UAV imagery: A deep learning approach. Int. J. Remote Sens. 2019, 40, 2493–2516.

- Bendig, J.; Bolten, A.; Bennertz, S.; Broscheit, J.; Eichfuss, S.; Bareth, G. Estimating biomass of barley using crop surface models (CSMs) derived from UAV-based RGB imaging. Remote Sens. 2014, 6, 10395–10412.

- Bendig, J.; Yu, K.; Aasen, H.; Bolten, A.; Bennertz, S.; Broscheit, J.; Gnyp, M.L.; Bareth, G. Combining UAV-based plant height from crop surface models, visible, and near infrared vegetation indices for biomass monitoring in barley. Int. J. Appl. Earth Obs. 2015, 39, 79–87.

- Holman, F.H.; Riche, A.B.; Michalski, A.; Castle, M.; Wooster, M.J.; Hawkesford, M.J. High throughput field phenotyping of wheat plant height and growth rate in field plot trials using UAV based remote sensing. Remote Sens. 2016, 8, 1031.

- Li, J.; Shi, Y.; Veeranampalayam-Sivakumar, A.-N.; Schachtman, D.P. Elucidating sorghum biomass, nitrogen and chlorophyll contents with spectral and morphological traits derived from unmanned aircraft system. Front. Plant Sci. 2018, 9, 1406.

- Wang, Y.; Zhang, K.; Tang, C.; Cao, Q.; Tian, Y.; Zhu, Y.; Cao, W.; Liu, X. Estimation of rice growth parameters based on linear mixed-effect model using multispectral images from fixed-wing unmanned aerial vehicles. Remote Sens. 2019, 11, 1371–1392.

- Mincato, R.L.; Parreiras, T.C.; Lense, G.H.E.; Moreira, R.S.; Santana, D.B. Using unmanned aerial vehicle and machine learning algorithm to monitor leaf nitrogen in coffee. Coffee Sci. 2020, 15, 1–9.

- Mesas-Carrascosa, F.-J.; Torres-Sánchez, J.; Clavero-Rumbao, I.; García-Ferrer, A.; Peña, J.-M.; Borra-Serrano, I.; López-Granados, F. Assessing optimal flight parameters for generating accurate multispectral orthomosaicks by UAV to support site-specific crop management. Remote Sens. 2015, 7, 12793–12814.

- Avtar, R.; Suab, S.A.; Syukur, M.S.; Korom, A.; Umarhadi, D.A.; Yunus, A.P. Assessing the influence of UAV altitude on extracted biophysical parameters of young oil palm. Remote Sens. 2020, 12, 3030.

- Meier, U. Growth stages of mono-and dicotyledonous plants. In BCH-Monograph, Federal Biological Research Centre for Agriculture and Forestry; Blackwell Science: Berlin, Germany, 2001.

- Lu, S.; Oki, K.; Shimizu, Y.; Omasa, K. Comparison between several feature extraction/classification methods for mapping complicated agricultural land use patches using airborne hyperspectral data. Int. J. Remote Sens. 2007, 28, 963–984.

- Robertson, A.R. The CIE 1976 color-difference formulae. Color Res. Appl. 1977, 2, 7–11.

- Pearson, R.L.; Miller, L.D.; Tucker, C.J. Hand-held spectral radiometer to estimate gramineous biomass. Appl. Opt. 1976, 15, 416–418.

- Gamon, J.; Surfus, J. Assessing leaf pigment content and activity with a reflectometer. New Phytol. 1999, 143, 105–117.

- Sellaro, R.; Crepy, M.; Trupkin, S.A.; Karayekov, E.; Buchovsky, A.S.; Rossi, C.; Casal, J.J. Cryptochrome as a sensor of the blue/green ratio of natural radiation in Arabidopsis. Plant Physiol. 2010, 154, 401–409.

- Hunt, E.R.; Cavigelli, M.; Daughtry, C.S.; McMurtrey, J.E.; Walthall, C.L. Evaluation of digital photography from model aircraft for remote sensing of crop biomass and nitrogen status. Precis. Agric. 2005, 6, 359–378.

- Gitelson, A.A.; Kaufman, Y.J.; Stark, R.; Rundquist, D. Novel algorithms for remote estimation of vegetation fraction. Remote Sens. Environ. 2002, 80, 76–87.

- Wang, X.; Wang, M.; Wang, S.; Wu, Y. Extraction of vegetation information from visible unmanned aerial vehicle images. Trans. Chin. Soc. Agric. Eng. 2015, 31, 152–159.

- Kirillova, N.; Vodyanitskii, Y.N.; Sileva, T. Conversion of soil color parameters from the Munsell system to the CIE-L* a* b* system. Eurasian Soil Sci. 2015, 48, 468–475.

- Moghaddam, P.A.; Derafshi, M.H.; Shirzad, V. Estimation of single leaf chlorophyll content in sugar beet using machine vision. Turk. J. Agric. For. 2011, 35, 563–568.

- El-Mageed, A.; Ibrahim, M.; Elbeltagi, A. The effect of water stress on nitrogen status as well as water use efficiency of potato crop under drip irrigation system. Misr J. Ag. Eng. 2017, 34, 1351–1374.

- Huete, A.; Post, D.; Jackson, R. Soil spectral effects on 4-space vegetation discrimination. Remote Sens. Environ. 1984, 15, 155–165.

- Zhang, X.; Zhang, F.; Qi, Y.; Deng, L.; Wang, X.; Yang, S. New research methods for vegetation information extraction based on visible light remote sensing images from an unmanned aerial vehicle (UAV). Int. J. Appl. Earth Obs. 2019, 78, 215–226.

- Hanan, N.; Prince, S.; Hiernaux, P.H. Spectral modelling of multicomponent landscapes in the Sahel. Int. J. Remote Sens. 1991, 12, 1243–1258.

- Jiang, Z.; Huete, A.R.; Chen, J.; Chen, Y.; Li, J.; Yan, G.; Zhang, X. Analysis of NDVI and scaled difference vegetation index retrievals of vegetation fraction. Remote Sens. Environ. 2006, 101, 366–378.

- Du, M.; Noguchi, N. Monitoring of wheat growth status and mapping of wheat yield’s within-field spatial variations using color images acquired from UAV-camera system. Remote Sens. 2017, 9, 289.

- Omasa, K. Image instrumentation methods of plant analysis. In Physical Methods in Plant Sciences; Springer: Berlin/Heidelberg, Germany, 1990; Volume 11, pp. 203–243.

- Chavez Jr, P.S. An improved dark-object subtraction technique for atmospheric scattering correction of multispectral data. Remote Sens. Environ. 1988, 24, 459–479.

- Yang, G.; Liu, J.; Zhao, C.; Li, Z.; Huang, Y.; Yu, H.; Xu, B.; Yang, X.; Zhu, D.; Zhang, X. Unmanned aerial vehicle remote sensing for field-based crop phenotyping: Current status and perspectives. Front. Plant Sci. 2017, 8, 1111.

| Experimental Group | Nitrogen in Basic Fertilizer (kg/10a) | Nitrogen in Additional Fertilizer 1 (kg/10a) | Nitrogen in Additional Fertilizer 2 (kg/10a) | Nitrogen in Earing Fertilizer (kg/10a) | Total Nitrogen (kg/10a) |
|---|---|---|---|---|---|
| A | 7 | 0 | 2 | 3 | 12 |
| B | 7 | 2 | 2 | 3 | 14 |
| C | 3 | 0 | 2 | 3 | 8 |
| D | 3 | 3 | 3 | 3 | 12 |
| E | 9 | 0 | 2 | 3 | 14 |
| F | 9 | 2 | 2 | 3 | 16 |

| Vegetation Indices | Reference |
|---|---|
| R | Pearson et al. [51] |
| G | Pearson et al. [51] |
| B | Pearson et al. [51] |
| L* | Graeff et al. [23] |
| a* | Graeff et al. [23] |
| b* | Graeff et al. [23] |
| G/R | Gamon & Surfus [52] |
| B/G | Sellaro et al. [53] |
| B/R | Wei et al. [22] |
| b*/a* | Wang et al. [17] |
| G − B | Kawashima & Nakatani [20] |
| R − B | Wang et al. [21] |
| 2G − R − B | Woebbecke et al. [19] |
| (R + G + B)/3 | Wang et al. [17] |
| R/(R + G + B) | Kawashima & Nakatani [20] |
| G/(R + G + B) | Kawashima & Nakatani [20] |
| B/(R + G + B) | Kawashima & Nakatani [20] |
| (G − R)/(G + R) | Gitelson et al. [55] |
| (R − B)/(R + B) | Peñuelas et al. [18] |
| (G − B)/(G + B) | Hunt et al. [54] |
| (2G − R − B)/(2G + R + B) | Wang et al. [56] |
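
The RGB-based entries in the table above are simple arithmetic combinations of the three channel values, so they can be sketched in a few lines. The snippet below is only an illustration, not the authors' processing code: the function name `color_indices` is hypothetical, the inputs are assumed to be mean R, G, and B values of one plot after any masking, and the L*, a*, b* indices are left out because they need a color-space conversion that this section does not describe. Dictionary keys use ASCII hyphens in place of the table's minus signs.

```python
def color_indices(r, g, b):
    """Compute the RGB-based indices from the table for one set of channel values.

    r, g, b are assumed to be positive mean channel values of a plot
    (hypothetical input convention, not taken from the paper).
    """
    total = r + g + b
    return {
        "G/R": g / r,
        "B/G": b / g,
        "B/R": b / r,
        "G - B": g - b,
        "R - B": r - b,
        "2G - R - B": 2 * g - r - b,
        "(R + G + B)/3": total / 3,
        "R/(R + G + B)": r / total,
        "G/(R + G + B)": g / total,
        "B/(R + G + B)": b / total,
        "(G - R)/(G + R)": (g - r) / (g + r),
        "(R - B)/(R + B)": (r - b) / (r + b),
        "(G - B)/(G + B)": (g - b) / (g + b),
        "(2G - R - B)/(2G + R + B)": (2 * g - r - b) / (2 * g + r + b),
    }


# Illustrative values only; they are not measurements from the study.
indices = color_indices(100.0, 150.0, 50.0)
for name, value in indices.items():
    print(f"{name}: {value:.3f}")
```

The three normalized indices R/(R + G + B), G/(R + G + B), and B/(R + G + B) always sum to 1, which is a quick sanity check on any implementation.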

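| Comparison | Date or Group | F-Value | p-Value |
|---|---|---|---|
| Different fertilizer treatments | Dec. 2018 | 0.47 | 0.79 |
| Different fertilizer treatments | Jan. 2019 | 9.54 | 0.00 |
| Different fertilizer treatments | Feb. 2019 | 0.89 | 0.52 |
| Different fertilizer treatments | Mar. 2019 | 1.87 | 0.17 |
| Different growth stages | Group A | 140.35 | 0.00 |
| Different growth stages | Group B | 84.29 | 0.00 |
| Different growth stages | Group C | 196.53 | 0.00 |
| Different growth stages | Group D | 43.03 | 0.00 |
| Different growth stages | Group E | 185.91 | 0.00 |
| Different growth stages | Group F | 139.15 | 0.00 |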

| Vegetation Indices | 6.0 m: Original Image | 6.0 m: Vegetation Extraction (Soil and Shadow Masked), No Naked Barley Ears Masked | 6.0 m: Vegetation Extraction (Soil and Shadow Masked), Naked Barley Ears Masked | 50.0 m: Original Image | 50.0 m: Vegetation Extraction (Soil and Shadow Masked) |
|---|---|---|---|---|---|
| R | −0.94 ** | −0.96 ** | −0.97 ** | −0.29 | −0.41 * |
| G | −0.93 ** | −0.95 ** | −0.95 ** | −0.61 * | −0.70 * |
| B | −0.80 ** | −0.79 ** | −0.80 ** | 0.20 | 0.33 |
| L* | −0.90 ** | −0.93 ** | −0.92 ** | −0.72 ** | −0.76 ** |
| a* | −0.28 | −0.15 | −0.12 | 0.56 * | 0.59 * |
| b* | −0.51 * | −0.67 * | −0.71 ** | −0.87 ** | −0.90 ** |
| G/R | 0.59 * | 0.58 * | 0.63 * | −0.20 | −0.20 |
| B/G | 0.02 | 0.22 | 0.26 | 0.77 ** | 0.86 ** |
| B/R | 0.35 | 0.47 * | 0.53 * | 0.52 * | 0.57 * |
| b*/a* | 0.19 | 0.80 ** | 0.82 ** | −0.15 | 0.60 * |
| G − B | −0.61 * | −0.78 ** | −0.80 ** | −0.87 ** | −0.91 ** |
| R − B | 0.62 * | 0.73 ** | 0.77 ** | 0.57 * | 0.61 * |
| 2G − R − B | −0.58 * | −0.72 ** | −0.77 ** | −0.74 ** | −0.82 ** |
| (R + G + B)/3 | −0.93 ** | −0.95 ** | −0.96 ** | −0.28 | −0.40 |
| R/(R + G + B) | −0.68 * | −0.68 * | −0.75 ** | −0.18 | −0.20 |
| G/(R + G + B) | 0.21 | 0.12 | 0.12 | −0.66 * | −0.70 * |
| B/(R + G + B) | 0.16 | 0.32 | 0.37 | 0.71 ** | 0.78 ** |
| (G − R)/(G + R) | 0.57 * | 0.59 * | 0.63 * | −0.22 | −0.22 |
| (R − B)/(R + B) | −0.35 | −0.46 * | −0.52 * | −0.55 * | −0.59 * |
| (G − B)/(G + B) | −0.05 | −0.21 | −0.26 | −0.77 ** | −0.84 ** |
| (2G − R − B)/(2G + R + B) | 0.22 | 0.12 | −0.04 | −0.65 * | −0.75 ** |

| Vegetation Indices | 6.0 m: Original Image | 6.0 m: Vegetation Extraction (Soil and Shadow Masked), No Naked Barley Ears Masked | 6.0 m: Vegetation Extraction (Soil and Shadow Masked), Naked Barley Ears Masked | 50.0 m: Original Image | 50.0 m: Vegetation Extraction (Soil and Shadow Masked) |
|---|---|---|---|---|---|
| R | 2.61 (6.28 %) | 2.40 (5.78 %) | 2.03 (4.89 %) | 8.55 (20.58 %) | 8.81 (21.20 %) |
| G | 3.24 (7.78 %) | 3.18 (7.64 %) | 2.88 (6.93 %) | 8.29 (19.94 %) | 7.87 (18.94 %) |
| B | 5.24 (12.61 %) | 5.44 (13.10 %) | 5.50 (13.22 %) | 8.38 (20.15 %) | 8.84 (21.27 %) |
| L* | 3.95 (9.49 %) | 3.64 (8.76 %) | 3.65 (8.79 %) | 7.56 (18.18 %) | 7.39 (17.77 %) |
| a* | 8.20 (19.72 %) | 8.20 (19.74 %) | 8.28 (19.91 %) | 7.06 (16.99 %) | 7.20 (17.33 %) |
| b* | 7.61 (18.30 %) | 6.94 (16.68 %) | 6.68 (16.06 %) | 3.59 (8.63 %) | 3.67 (8.82 %) |
| G/R | 5.76 (13.86 %) | 27.81 (66.91 %) | 4.71 (11.32 %) | 8.31 (19.99 %) | 8.32 (20.02 %) |
| B/G | 8.45 (20.33 %) | 8.66 (20.84 %) | 8.68 (20.89 %) | 5.08 (12.23 %) | 5.37 (12.93 %) |
| B/R | 8.11 (19.51 %) | 7.71 (18.54 %) | 7.56 (18.18 %) | 7.06 (16.98 %) | 6.88 (16.55 %) |
| b*/a* | 8.62 (20.73 %) | 4.77 (11.47 %) | 4.63 (11.14 %) | 8.33 (20.05 %) | 6.82 (16.40 %) |
| G − B | 7.26 (17.46 %) | 6.06 (14.59 %) | 5.88 (14.14 %) | 2.87 (6.91 %) | 2.71 (6.51 %) |
| R − B | 6.86 (16.50 %) | 5.93 (14.27 %) | 5.67 (13.65 %) | 6.65 (15.99 %) | 6.36 (15.31 %) |
| 2G − R − B | 7.47 (17.97 %) | 6.49 (15.61 %) | 6.09 (14.64 %) | 5.16 (12.42 %) | 4.32 (10.40 %) |
| (R + G + B)/3 | 3.05 (7.33 %) | 2.85 (6.85 %) | 2.89 (6.95 %) | 8.57 (20.62 %) | 9.02 (21.69 %) |
| R/(R + G + B) | 5.04 (12.12 %) | 4.72 (11.35 %) | 4.32 (10.39 %) | 8.25 (19.85 %) | 8.20 (19.73 %) |
| G/(R + G + B) | 7.98 (19.19 %) | 8.06 (19.39 %) | 8.06 (19.39 %) | 6.74 (16.20 %) | 6.92 (16.65 %) |
| B/(R + G + B) | 8.50 (20.44 %) | 8.50 (20.45 %) | 8.47 (20.39 %) | 5.75 (13.84 %) | 5.59 (13.46 %) |
| (G − R)/(G + R) | 6.11 (14.70 %) | 5.01 (12.04 %) | 4.76 (11.46 %) | 8.29 (19.94 %) | 8.30 (19.98 %) |
| (R − B)/(R + B) | 8.13 (19.56 %) | 7.83 (18.85 %) | 7.69 (18.49 %) | 6.96 (16.74 %) | 6.79 (16.33 %) |
| (G − B)/(G + B) | 8.47 (20.39 %) | 8.66 (20.83 %) | 8.68 (20.88 %) | 5.42 (13.05 %) | 5.63 (13.55 %) |
| (2G − R − B)/(2G + R + B) | 7.91 (19.03 %) | 8.08 (19.45 %) | 8.55 (20.57 %) | 6.69 (16.10 %) | 6.36 (15.31 %) |
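
This table does not say what each value and its percentage represent. One plausible reading, offered here only as an assumption suggested by the title of Section 3.4, is that each cell gives a prediction error in SPAD units followed by the same error as a percentage of a reference SPAD value. The sketch below back-calculates the reference implied by each cell of the first row; the helper name `implied_reference` is hypothetical.

```python
def implied_reference(value, percent):
    """Return the reference for which `value` equals `percent` percent of it."""
    return 100.0 * value / percent


# (value, percent) pairs copied from the first row (index R) of the table.
row_r = [(2.61, 6.28), (2.40, 5.78), (2.03, 4.89), (8.55, 20.58), (8.81, 21.20)]

references = [implied_reference(value, percent) for value, percent in row_r]
for (value, percent), ref in zip(row_r, references):
    print(f"{value:5.2f} ({percent:5.2f} %) -> implied reference {ref:.2f}")
```

All five cells imply a reference between 41.5 and 41.6, which is consistent with every percentage being computed against one common value; whether that value is a mean measured SPAD is not stated in this section.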

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2021 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Share and Cite

Liu, Y.; Hatou, K.; Aihara, T.; Kurose, S.; Akiyama, T.; Kohno, Y.; Lu, S.; Omasa, K. A Robust Vegetation Index Based on Different UAV RGB Images to Estimate SPAD Values of Naked Barley Leaves. Remote Sens. 2021, 13, 686. https://doi.org/10.3390/rs13040686
