FPS: Fast Path Planner Algorithm Based on Sparse Visibility Graph and Bidirectional Breadth-First Search

Abstract

1. Introduction

- In contrast to existing methods for storing a laser pointcloud, this paper proposes a complementary holed structure for iteratively updating the local grid, so that only half of the pointcloud data needs to be processed in each frame to update the map. After planar mapping, the pointcloud data are blurred as an image, and key vertices are then extracted from the blurred image.

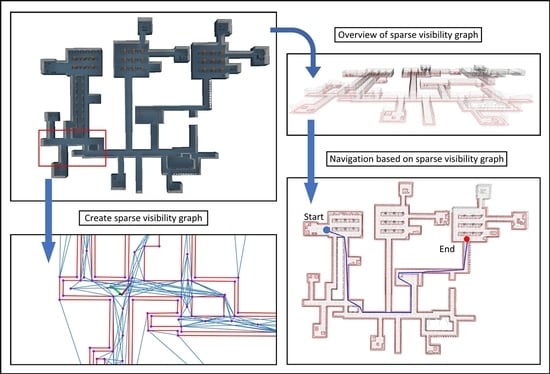

- For obstacles with complex contours, this paper proposes a filtering method based on the edge length and the number of vertices of the obstacle contour. By filtering the vertices of large, complex obstacles, the method reduces both the number of vertices in the visibility graph (v-graph) and the cost of maintaining it. Because the v-graph and the path search algorithm are tightly coupled, the efficiency of the path search also improves.

- A bidirectional breadth-first search algorithm is introduced because exploring unknown territory requires a large search space. In this paper, the edges between the goal point and the existing vertices in the v-graph are established by geometric checking, so the search can also be expanded from the goal side. The bidirectional breadth-first search thereby reduces the exploration space wasted during navigation.

2. Related Work

3. Sparse Visibility Graph-Based Path Planner

3.1. Pointcloud Extraction Structure

Algorithm 1 Module.

Input: Sensor data.
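The half-frame update described in the Introduction can be sketched roughly as follows. This is only an illustration under a strong assumption: the "complementary holed structure" is modelled here as the two colours of a checkerboard over the local grid, with each frame refreshing one colour. The checkerboard layout, the cell resolution, and the names `cell_of` and `update_holed_grid` are hypothetical and are not taken from the paper.

```python
from typing import Iterable, Set, Tuple

Cell = Tuple[int, int]


def cell_of(x: float, y: float, resolution: float) -> Cell:
    """Map a planar point to integer grid indices (floor division)."""
    return (int(x // resolution), int(y // resolution))


def update_holed_grid(
    occupied: Set[Cell],
    points: Iterable[Tuple[float, float]],
    frame_index: int,
    resolution: float = 0.5,
) -> int:
    """Refresh only the cells whose parity matches this frame.

    Assumption: the two complementary patterns are the two colours of a
    checkerboard, selected by (ix + iy) % 2. Returns the number of points
    that were actually processed in this frame.
    """
    active_parity = frame_index % 2
    processed = 0
    fresh: Set[Cell] = set()
    for x, y in points:
        cell = cell_of(x, y, resolution)
        if (cell[0] + cell[1]) % 2 != active_parity:
            continue  # cell belongs to the other pattern; skip this point
        processed += 1
        fresh.add(cell)
    # Replace the stale cells of the active pattern with fresh observations.
    stale = {c for c in occupied if (c[0] + c[1]) % 2 == active_parity}
    occupied -= stale
    occupied |= fresh
    return processed
```

With points spread evenly over the grid, each call touches about half of them, and two consecutive frames together cover every cell.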

Algorithm 2 Polygon Extraction.
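The Introduction states that the planar-mapped pointcloud is blurred before key vertices are extracted. A minimal sketch of the blurring step is given below, assuming a simple mean (box) filter followed by thresholding. The filter type, the radius, the threshold, and the function names `box_blur` and `binarize` are illustrative assumptions. The contour-tracing and vertex-extraction steps that would follow are omitted.

```python
from typing import List

Image = List[List[float]]


def box_blur(image: Image, radius: int = 1) -> Image:
    """Mean filter with a (2*radius+1)^2 window; borders use the cells that exist."""
    rows, cols = len(image), len(image[0])
    out = [[0.0] * cols for _ in range(rows)]
    for r in range(rows):
        for c in range(cols):
            total, count = 0.0, 0
            for rr in range(max(0, r - radius), min(rows, r + radius + 1)):
                for cc in range(max(0, c - radius), min(cols, c + radius + 1)):
                    total += image[rr][cc]
                    count += 1
            out[r][c] = total / count
    return out


def binarize(image: Image, threshold: float = 0.0) -> List[List[int]]:
    """Mark every cell whose blurred value exceeds the threshold as obstacle."""
    return [[1 if v > threshold else 0 for v in row] for row in image]


# Usage: a single occupied cell grows into a 3x3 block after blurring.
grid = [[0.0] * 5 for _ in range(5)]
grid[2][2] = 1.0
mask = binarize(box_blur(grid))
```

Blurring merges isolated returns into connected regions, which is one plausible reason to apply it before contour extraction.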

3.2. Simplified Complex Contours Algorithm

Algorithm 3 Visibility Graph Update.
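The contour filtering described in the Introduction depends on two quantities: the number of vertices of an obstacle contour and the length of its edges. The sketch below shows one possible rule of that kind, not the paper's exact procedure. The rule itself, the parameters `max_vertices` and `min_edge`, and the function name `filter_contour` are assumptions made for illustration.

```python
import math
from typing import List, Tuple

Point = Tuple[float, float]


def filter_contour(
    contour: List[Point], max_vertices: int, min_edge: float
) -> List[Point]:
    """Thin out a closed contour only when it counts as 'complex'.

    Assumed rule: a contour with more than max_vertices vertices drops any
    vertex that lies closer than min_edge to the last vertex that was kept.
    """
    if len(contour) <= max_vertices:
        return list(contour)  # simple obstacle: keep every vertex
    kept = [contour[0]]
    for p in contour[1:]:
        if math.dist(kept[-1], p) >= min_edge:
            kept.append(p)
    # Closing edge: drop the last vertex if it nearly coincides with the first.
    if len(kept) > 3 and math.dist(kept[-1], kept[0]) < min_edge:
        kept.pop()
    return kept
```

Small obstacles keep every vertex, so only large contours with many short edges lose detail. Fewer vertices means fewer candidate edges to check when the v-graph is updated.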

3.3. Path-Planning Based on Bidirectional Breadth-First Search

Algorithm 4 Bidirectional BFS.
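For orientation, a textbook bidirectional breadth-first search over an unweighted, undirected adjacency list is sketched below. It is a generic version, not the paper's Algorithm 4: the graph representation, the rule of expanding the smaller frontier first, and the name `bidirectional_bfs` are assumptions.

```python
from collections import deque
from typing import Dict, Hashable, List, Optional

Graph = Dict[Hashable, List[Hashable]]


def bidirectional_bfs(
    graph: Graph, start: Hashable, goal: Hashable
) -> Optional[List[Hashable]]:
    """Return a start-to-goal path, or None if the two are disconnected.

    Assumes an undirected graph (every edge listed in both directions).
    """
    if start == goal:
        return [start]
    parents_fwd = {start: None}
    parents_bwd = {goal: None}
    frontier_fwd, frontier_bwd = deque([start]), deque([goal])

    def expand(frontier, parents, other_parents):
        """Expand one full BFS level; return a vertex where the searches meet."""
        for _ in range(len(frontier)):
            node = frontier.popleft()
            for nxt in graph.get(node, []):
                if nxt in parents:
                    continue
                parents[nxt] = node
                if nxt in other_parents:
                    return nxt
                frontier.append(nxt)
        return None

    while frontier_fwd and frontier_bwd:
        # Grow the smaller frontier first to keep the explored set small.
        if len(frontier_fwd) <= len(frontier_bwd):
            meet = expand(frontier_fwd, parents_fwd, parents_bwd)
        else:
            meet = expand(frontier_bwd, parents_bwd, parents_fwd)
        if meet is not None:
            path = []
            node = meet
            while node is not None:  # walk back from the meeting vertex to start
                path.append(node)
                node = parents_fwd[node]
            path.reverse()
            node = parents_bwd[meet]
            while node is not None:  # continue from the meeting vertex to goal
                path.append(node)
                node = parents_bwd[node]
            return path
    return None
```

Because each side only needs to reach about half the path depth, the two searches together usually visit far fewer vertices than a single search from the start.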

Algorithm 5 STEP.

Algorithm 6 Way-point generation.

Algorithm 7 Check_Collision.

Input: P.
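The Introduction mentions that edges between the goal point and existing v-graph vertices are established by geometric checking. One common form of such a check is a segment-versus-polygon-edge intersection test based on orientation signs, sketched below. This is an assumed stand-in, not the paper's Check_Collision routine, and the names `segments_cross` and `edge_is_free` are hypothetical. The test only detects proper crossings, so touching at a vertex and collinear overlap are not reported as collisions.

```python
from typing import List, Tuple

Point = Tuple[float, float]


def _orient(a: Point, b: Point, c: Point) -> float:
    """Twice the signed area of triangle abc; positive means counter-clockwise."""
    return (b[0] - a[0]) * (c[1] - a[1]) - (b[1] - a[1]) * (c[0] - a[0])


def segments_cross(p1: Point, p2: Point, q1: Point, q2: Point) -> bool:
    """True when segments p1p2 and q1q2 cross at a single interior point."""
    d1 = _orient(q1, q2, p1)
    d2 = _orient(q1, q2, p2)
    d3 = _orient(p1, p2, q1)
    d4 = _orient(p1, p2, q2)
    return (d1 * d2 < 0) and (d3 * d4 < 0)


def edge_is_free(a: Point, b: Point, polygons: List[List[Point]]) -> bool:
    """Accept the edge a-b only if it crosses no obstacle boundary segment."""
    for poly in polygons:
        for i in range(len(poly)):
            if segments_cross(a, b, poly[i], poly[(i + 1) % len(poly)]):
                return False
    return True


# Usage: a unit-wide box blocks the straight line along y = 0 but not y = 2.
box = [(1.0, -1.0), (2.0, -1.0), (2.0, 1.0), (1.0, 1.0)]
blocked = not edge_is_free((0.0, 0.0), (3.0, 0.0), [box])
clear = edge_is_free((0.0, 2.0), (3.0, 2.0), [box])
```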

4. Experiments and Results

4.1. Simulation Experiment

4.1.1. Laser Process Simulation Experiment

4.1.2. Visibility Graph Update Simulation Experiment

4.1.3. Path Planning Simulation Experiment

4.2. Physical Experiment

5. Discussion

5.1. Grid in Mapping

5.2. The Reduced Visibility Graph

5.3. Uncertainties in the Path Planning

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

Abbreviations

| Abbreviation | Definition |
|---|---|
| FAR Planner | Fast, Attemptable Route Planner using Dynamic Visibility Update [19] |
| SLAM | Simultaneous Localization And Mapping |
| IMU | Inertial Measurement Unit |
| GPU | Graphics Processing Unit |

References

- Hess, W.; Kohler, D.; Rapp, H.; Andor, D. Real-time loop closure in 2D LIDAR SLAM. In Proceedings of the 2016 IEEE International Conference on Robotics and Automation (ICRA), Stockholm, Sweden, 16–21 May 2016; pp. 1271–1278.

- Grisetti, G.; Kümmerle, R.; Stachniss, C.; Burgard, W. A Tutorial on Graph-Based SLAM. IEEE Intell. Transp. Syst. Mag. 2010, 2, 31–43.

- Li, T.; Pei, L.; Xiang, Y.; Wu, Q.; Xia, S.; Tao, L.; Guan, X.; Yu, W. P3-LOAM: PPP/LiDAR Loosely Coupled SLAM With Accurate Covariance Estimation and Robust RAIM in Urban Canyon Environment. IEEE Sens. J. 2021, 21, 6660–6671.

- Shan, T.; Englot, B. Lego-loam: Lightweight and ground-optimized lidar odometry and mapping on variable terrain. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 4758–4765.

- Li, J.; Pei, L.; Zou, D.; Xia, S.; Wu, Q.; Li, T.; Sun, Z.; Yu, W. Attention-SLAM: A Visual Monocular SLAM Learning From Human Gaze. IEEE Sens. J. 2021, 21, 6408–6420.

- Mur-Artal, R.; Montiel, J.M.M.; Tardós, J.D. ORB-SLAM: A Versatile and Accurate Monocular SLAM System. IEEE Trans. Robot. 2015, 31, 1147–1163.

- Mur-Artal, R.; Tardós, J.D. ORB-SLAM2: An Open-Source SLAM System for Monocular, Stereo, and RGB-D Cameras. IEEE Trans. Robot. 2017, 33, 1255–1262.

- Qin, T.; Li, P.; Shen, S. VINS-Mono: A Robust and Versatile Monocular Visual-Inertial State Estimator. IEEE Trans. Robot. 2018, 34, 1004–1020.

- Chan, S.H.; Wu, P.T.; Fu, L.C. Robust 2D Indoor Localization Through Laser SLAM and Visual SLAM Fusion. In Proceedings of the 2018 IEEE International Conference on Systems, Man, and Cybernetics (SMC), Miyazaki, Japan, 7–10 October 2018; pp. 1263–1268.

- Debeunne, C.; Vivet, D. A Review of Visual-LiDAR Fusion based Simultaneous Localization and Mapping. Sensors 2020, 20, 2068.

- Nguyen, T.M.; Cao, M.; Yuan, S.; Lyu, Y.; Nguyen, T.H.; Xie, L. VIRAL-Fusion: A Visual-Inertial-Ranging-Lidar Sensor Fusion Approach. IEEE Trans. Robot. 2022, 38, 958–977.

- Thrun, S. Learning Occupancy Grid Maps with Forward Sensor Models. Auton. Robot. 2003, 15, 111–127.

- Lozano-Pérez, T.; Wesley, M.A. An algorithm for planning collision-free paths among polyhedral obstacles. Commun. ACM 1979, 22, 560–570.

- Kitzinger, J.; Moret, B. The Visibility Graph among Polygonal Obstacles: A Comparison of Algorithms. Ph.D. Thesis, University of New Mexico, Albuquerque, NM, USA, 2003.

- Alt, H.; Welzl, E. Visibility graphs and obstacle-avoiding shortest paths. Z. Für Oper. Res. 1988, 32, 145–164.

- Shen, X.; Edelsbrunner, H. A tight lower bound on the size of visibility graphs. Inf. Process. Lett. 1987, 26, 61–64.

- Sridharan, K.; Priya, T.K. An Efficient Algorithm to Construct Reduced Visibility Graph and Its FPGA Implementation. In Proceedings of the VLSI Design, International Conference, Mumbai, India, 9 January 2004; IEEE Computer Society: Los Alamitos, CA, USA, 2004; p. 1057.

- Wu, G.; Atilla, I.; Tahsin, T.; Terziev, M.; Wang, L. Long-voyage route planning method based on multi-scale visibility graph for autonomous ships. Ocean Eng. 2021, 219, 108242.

- Yang, F.; Cao, C.; Zhu, H.; Oh, J.; Zhang, J. FAR Planner: Fast, Attemptable Route Planner using Dynamic Visibility Update. arXiv 2021, arXiv:2110.09460.

- Pradhan, S.; Mandava, R.K.; Vundavilli, P.R. Development of path planning algorithm for biped robot using combined multi-point RRT and visibility graph. Int. J. Inf. Technol. 2021, 13, 1513–1519.

- D’Amato, E.; Nardi, V.A.; Notaro, I.; Scordamaglia, V. A Visibility Graph approach for path planning and real-time collision avoidance on maritime unmanned systems. In Proceedings of the 2021 International Workshop on Metrology for the Sea; Learning to Measure Sea Health Parameters (MetroSea), Reggio Calabria, Italy, 4–6 October 2021; pp. 400–405.

- Dijkstra, E.W. A note on two problems in connexion with graphs. Numer. Math. 1959, 1, 269–271.

- Hart, P.E.; Nilsson, N.J.; Raphael, B. A formal basis for the heuristic determination of minimum cost paths. IEEE Trans. Syst. Sci. Cybern. 1968, 4, 100–107.

- Stentz, A. Optimal and efficient path planning for partially-known environments. In Proceedings of the 1994 IEEE International Conference on Robotics and Automation, San Diego, CA, USA, 8–13 May 1994; Volume 4, pp. 3310–3317.

- Koenig, S.; Likhachev, M. Fast replanning for navigation in unknown terrain. IEEE Trans. Robot. 2005, 21, 354–363.

- XiangRong, T.; Yukun, Z.; XinXin, J. Improved A-star algorithm for robot path planning in static environment. J. Phys. Conf. Ser. 2021, 1792, 012067.

- Zhang, L.; Li, Y. Mobile Robot Path Planning Algorithm Based on Improved A Star. J. Phys. Conf. Ser. 2021, 1848, 012013.

- LaValle, S.M.; Kuffner, J.J.; Donald, B. Rapidly-exploring random trees: Progress and prospects. Algorithmic Comput. Robot. New Dir. 2001, 5, 293–308.

- Karaman, S.; Frazzoli, E. Sampling-based algorithms for optimal motion planning. Int. J. Robot. Res. 2011, 30, 846–894.

- Gammell, J.D.; Srinivasa, S.S.; Barfoot, T.D. Informed RRT*: Optimal sampling-based path planning focused via direct sampling of an admissible ellipsoidal heuristic. In Proceedings of the 2014 IEEE/RSJ International Conference on Intelligent Robots and Systems, Chicago, IL, USA, 14–18 September 2014; pp. 2997–3004.

- Kuffner, J.J.; LaValle, S.M. RRT-connect: An efficient approach to single-query path planning. In Proceedings of the 2000 ICRA, Millennium Conference, IEEE International Conference on Robotics and Automation, Symposia Proceedings (Cat. No. 00CH37065), San Francisco, CA, USA, 24–28 April 2000; Volume 2, pp. 995–1001.

- Tu, J.; Yang, S. Genetic algorithm based path planning for a mobile robot. In Proceedings of the 2003 IEEE International Conference on Robotics and Automation (Cat. No.03CH37422), Taipei, Taiwan, 14–19 September 2003; Volume 1, pp. 1221–1226.

- Chen, J.; Ye, F.; Li, Y. Travelling salesman problem for UAV path planning with two parallel optimization algorithms. In Proceedings of the 2017 Progress in Electromagnetics Research Symposium—Fall (PIERS - FALL), Singapore, 19–22 November 2017; pp. 832–837.

- Pan, Y.; Yang, Y.; Li, W. A Deep Learning Trained by Genetic Algorithm to Improve the Efficiency of Path Planning for Data Collection With Multi-UAV. IEEE Access 2021, 9, 7994–8005.

- Hao, K.; Zhao, J.; Wang, B.; Liu, Y.; Wang, C. The application of an adaptive genetic algorithm based on collision detection in path planning of mobile robots. Comput. Intell. Neurosci. 2021, 2021, 5536574.

- Rahmaniar, W.; Rakhmania, A.E. Mobile Robot Path Planning in a Trajectory with Multiple Obstacles Using Genetic Algorithms. J. Robot. Control (JRC) 2022, 3, 1–7.

- Zhang, T.W.; Xu, G.H.; Zhan, X.S.; Han, T. A new hybrid algorithm for path planning of mobile robot. J. Supercomput. 2022, 78, 4158–4181.

- Richter, C.; Vega-Brown, W.; Roy, N. Bayesian Learning for Safe High-Speed Navigation in Unknown Environments. In Robotics Research: Volume 2; Bicchi, A., Burgard, W., Eds.; Springer: Cham, Switzerland, 2018; pp. 325–341.

- Zeng, J.; Ju, R.; Qin, L.; Hu, Y.; Yin, Q.; Hu, C. Navigation in unknown dynamic environments based on deep reinforcement learning. Sensors 2019, 19, 3837.

- Guo, X.; Fang, Y. Learning to Navigate in Unknown Environments Based on GMRP-N. In Proceedings of the 2019 IEEE 9th Annual International Conference on CYBER Technology in Automation, Control, and Intelligent Systems (CYBER), Suzhou, China, 29 July–2 August 2019; pp. 1453–1458.

- Lin, G.; Zhu, L.; Li, J.; Zou, X.; Tang, Y. Collision-free path planning for a guava-harvesting robot based on recurrent deep reinforcement learning. Comput. Electron. Agric. 2021, 188, 106350.

- Tutsoy, O.; Brown, M. Reinforcement learning analysis for a minimum time balance problem. Trans. Inst. Meas. Control 2016, 38, 1186–1200.

- Tutsoy, O.; Barkana, D.E.; Balikci, K. A novel exploration-exploitation-based adaptive law for intelligent model-free control approaches. IEEE Trans. Cybern. 2021, 1–9.

- Oommen, B.; Iyengar, S.; Rao, N.; Kashyap, R. Robot navigation in unknown terrains using learned visibility graphs. Part I: The disjoint convex obstacle case. IEEE J. Robot. Autom. 1987, 3, 672–681.

- Wooden, D.; Egerstedt, M. Oriented visibility graphs: Low-complexity planning in real-time environments. In Proceedings of the 2006 IEEE International Conference on Robotics and Automation, Orlando, FL, USA, 15–19 May 2006; pp. 2354–2359.

- Rao, N.S. Robot navigation in unknown generalized polygonal terrains using vision sensors. IEEE Trans. Syst. Man Cybern. 1995, 25, 947–962.

- Cao, C.; Zhu, H.; Yang, F.; Xia, Y.; Choset, H.; Oh, J.; Zhang, J. Autonomous Exploration Development Environment and the Planning Algorithms. In Proceedings of the 2022 International Conference on Robotics and Automation (ICRA), Philadelphia, PA, USA, 23–27 May 2022.

- Chang, A.; Dai, A.; Funkhouser, T.; Halber, M.; Niessner, M.; Savva, M.; Song, S.; Zeng, A.; Zhang, Y. Matterport3D: Learning from RGB-D Data in Indoor Environments. arXiv 2017, arXiv:1709.06158.

- Wei, Y.; Xiao, H.; Shi, H.; Jie, Z.; Feng, J.; Huang, T.S. Revisiting dilated convolution: A simple approach for weakly-and semi-supervised semantic segmentation. In Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, Salt Lake City, UT, USA, 18–23 June 2018; pp. 7268–7277.

- Suzuki, S. Topological structural analysis of digitized binary images by border following. Comput. Vision Graph. Image Process. 1985, 30, 32–46.

- Teh, C.H.; Chin, R.T. On the detection of dominant points on digital curves. IEEE Trans. Pattern Anal. Mach. Intell. 1989, 11, 859–872.

- Lee, D.T. Proximity and Reachability in the Plane. Ph.D. Thesis, University of Illinois at Urbana-Champaign, Champaign, IL, USA, 1978.

- Quigley, M.; Conley, K.; Gerkey, B.; Faust, J.; Foote, T.; Leibs, J.; Wheeler, R.; Ng, A.Y. ROS: An open-source Robot Operating System. In Proceedings of the ICRA Workshop on Open Source Software, Kobe, Japan, 12–17 May 2009; Volume 3, p. 5.

- Will, P.; Pennington, K. Grid coding: A preprocessing technique for robot and machine vision. Artif. Intell. 1971, 2, 319–329.

- Agarwal, P.K.; Arge, L.; Danner, A. From Point Cloud to Grid DEM: A Scalable Approach. In Progress in Spatial Data Handling: 12th International Symposium on Spatial Data Handling; Riedl, A., Kainz, W., Elmes, G.A., Eds.; Springer: Berlin/Heidelberg, Germany, 2006; pp. 771–788.

- Birk, A.; Carpin, S. Merging Occupancy Grid Maps From Multiple Robots. Proc. IEEE 2006, 94, 1384–1397.

- Meyer-Delius, D.; Beinhofer, M.; Burgard, W. Occupancy grid models for robot mapping in changing environments. In Proceedings of the Twenty-Sixth AAAI Conference on Artificial Intelligence, Toronto, ON, Canada, 22–26 July 2012.

- Lau, B.; Sprunk, C.; Burgard, W. Efficient grid-based spatial representations for robot navigation in dynamic environments. Robot. Auton. Syst. 2013, 61, 1116–1130.

- Kim, B.; Kang, C.M.; Kim, J.; Lee, S.H.; Chung, C.C.; Choi, J.W. Probabilistic vehicle trajectory prediction over occupancy grid map via recurrent neural network. In Proceedings of the 2017 IEEE 20th International Conference on Intelligent Transportation Systems (ITSC), Yokohama, Japan, 16–19 October 2017; pp. 399–404.

- Elfes, A. Using occupancy grids for mobile robot perception and navigation. Computer 1989, 22, 46–57.

- Homm, F.; Kaempchen, N.; Ota, J.; Burschka, D. Efficient occupancy grid computation on the GPU with lidar and radar for road boundary detection. In Proceedings of the 2010 IEEE Intelligent Vehicles Symposium, La Jolla, CA, USA, 21–24 June 2010; pp. 1006–1013.

- Li, H.; Tsukada, M.; Nashashibi, F.; Parent, M. Multivehicle Cooperative Local Mapping: A Methodology Based on Occupancy Grid Map Merging. IEEE Trans. Intell. Transp. Syst. 2014, 15, 2089–2100.

- Li, Y.; Ruichek, Y. Occupancy Grid Mapping in Urban Environments from a Moving On-Board Stereo-Vision System. Sensors 2014, 14, 10454–10478.

- Kneidl, A.; Borrmann, A.; Hartmann, D. Generation and use of sparse navigation graphs for microscopic pedestrian simulation models. Adv. Eng. Inform. 2012, 26, 669–680.

- Welzl, E. Constructing the visibility graph for n-line segments in O(n²) time. Inf. Process. Lett. 1985, 20, 167–171.

- Majeed, A.; Lee, S. A Fast Global Flight Path Planning Algorithm Based on Space Circumscription and Sparse Visibility Graph for Unmanned Aerial Vehicle. Electronics 2018, 7, 375.

- Oleynikova, H.; Taylor, Z.; Siegwart, R.; Nieto, J. Sparse 3D Topological Graphs for Micro-Aerial Vehicle Planning. In Proceedings of the 2018 IEEE/RSJ International Conference on Intelligent Robots and Systems (IROS), Madrid, Spain, 1–5 October 2018; pp. 1–9.

- Himmel, A.S.; Hoffmann, C.; Kunz, P.; Froese, V.; Sorge, M. Computational complexity aspects of point visibility graphs. Discret. Appl. Math. 2019, 254, 283–290.

- Nguyet, T.T.N.; Hoai, T.V.; Thi, N.A. Some Advanced Techniques in Reducing Time for Path Planning Based on Visibility Graph. In Proceedings of the 2011 Third International Conference on Knowledge and Systems Engineering, Hanoi, Vietnam, 14–17 October 2011; pp. 190–194.

- Ben-Moshe, B.; Hall-Holt, O.; Katz, M.J.; Mitchell, J.S.B. Computing the Visibility Graph of Points within a Polygon. In Proceedings of the Twentieth Annual Symposium on Computational Geometry, Brooklyn, NY, USA, 8–11 June 2004; Association for Computing Machinery: New York, NY, USA, 2004; pp. 27–35.

| Test | Without | = 25 | = 20 | = 15 | = 10 | = 5 |
|---|---|---|---|---|---|---|
| total vertices (Indoor) | 904 | 873 | 725 | 714 | 695 | 630 |
| v-graph update (Indoor) | 21.48 ms | 20.64 ms | 14.13 ms | 13.88 ms | 13.82 ms | 12.81 ms |
| total vertices (Forest) | 3976 | 3589 | 2893 | 2838 | 2743 | 2334 |
| v-graph update (Forest) | 71.25 ms | 54.23 ms | 39.12 ms | 38.97 ms | 36.82 ms | 35.64 ms |

| Test | Overall Time [s] | Overall Distance [m] | Average Search Time [ms] |
|---|---|---|---|
| FAR (baseline) | 455.31 | 824.1 | 1.43 |
| A* | 486.4 | 906.3 | 44.21 |
| Compared to FAR | +6.7% | +9% | +2991% |
| D* Lite | 498.1 | 896.6 | 29.6 |
| Compared to FAR | +9.4% | +8.8% | +1967% |
| OURS | 357.2 | 633.1 | 0.81 |
| Compared to FAR | −21.5% | −19.5% | −43.3% |

| Test | Overall Time [s] | Overall Distance [m] | Average Search Time [ms] |
|---|---|---|---|
| FAR (baseline) | 1038.2 | 1914.4 | 2.05 |
| A* | 833.6 | 1543.8 | 332.95 |
| Compared to FAR | −19.7% | −19.3% | +16,141% |
| D* Lite | 1119.8 | 2109.8 | 162.3 |
| Compared to FAR | +7.8% | +10.2% | +7817% |
| OURS | 763.8 | 1420.4 | 1.44 |
| Compared to FAR | −26.4% | −25.8% | −29.7% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Li, Q.; Xie, F.; Zhao, J.; Xu, B.; Yang, J.; Liu, X.; Suo, H. FPS: Fast Path Planner Algorithm Based on Sparse Visibility Graph and Bidirectional Breadth-First Search. Remote Sens. 2022, 14, 3720. https://doi.org/10.3390/rs14153720