1. Introduction

Fractional vegetation cover (FVC) usually refers to the percentage of the ground area covered by the vertical projection of vegetation (including leaves, stems and branches). It is a comprehensive ecological index used to describe the surface conditions of plant communities [1,2]. Information on FVC is required for assessing land degradation, salinization and desertification, as well as for environmental monitoring [3]. Deserts are characterized by simple plant communities formed by perennial shrubs. These sparse plant species exhibit unique adaptation strategies to the harsh conditions of desert ecosystems and play an important role in stabilizing them [4]. Therefore, studying FVC and its changes over time is of considerable significance.
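Because FVC is the fraction of ground area covered by the vertical projection of vegetation, it can be computed from a classified nadir image by counting pixels, provided each pixel covers the same ground area. The minimal sketch below assumes a hypothetical label grid in which 0 denotes bare ground and other integers denote plant species; the grid and the species codes are illustrative only.

```python
from collections import Counter

# Hypothetical classified map: 0 = bare soil, other codes = species labels.
# Assumes an orthorectified nadir image, so every pixel covers equal ground area.
BARE = 0

def fvc_by_class(label_map):
    """Return (total FVC, per-class FVC) as fractions of all pixels."""
    counts = Counter(label for row in label_map for label in row)
    n_pixels = sum(counts.values())
    per_class = {
        label: count / n_pixels
        for label, count in counts.items()
        if label != BARE
    }
    total = sum(per_class.values())  # total FVC is the sum over vegetated classes
    return total, per_class

label_map = [
    [0, 0, 1, 1],
    [0, 2, 2, 1],
    [0, 0, 0, 0],
    [3, 0, 0, 0],
]
total, per_class = fvc_by_class(label_map)
print(f"total FVC = {total:.4f}")  # total FVC = 0.3750
for label in sorted(per_class):
    print(f"class {label}: {per_class[label]:.4f}")
```

Species-level FVC values sum to the total FVC, which is what makes a per-pixel species classification a direct route to species-level cover.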

Traditional desert vegetation surveys require frequent periodic ground surveys involving the collection of large numbers of samples, which can consume considerable resources [5]. Alternatively, remote sensing data, which have become increasingly prevalent in recent years, can be used to estimate FVC [6]. Such data offer multiple resolutions, flexible acquisition times and large-area coverage, providing rich spatiotemporal information on FVC [7]. Using radiative transfer models and machine learning algorithms, Landsat data can be used to map vegetation coverage quantitatively in space and time and to estimate FVC with satisfactory accuracy [8]. Random forest regression combined with FengYun-3 reflectance data produced FVC estimates with reliable spatiotemporal continuity and high accuracy [9]. Although satellite data are suitable for estimating vegetation cover over large areas, it is generally difficult to derive coverage estimates for individual species, especially in sparsely vegetated areas such as deserts. The spatial resolution of satellite imagery makes it difficult to obtain information on individual species in deserts, where plants are small and sparsely but widely distributed [10,11,12]. Given these limitations, higher-resolution images are needed to estimate the coverage of different species in desert regions.
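The resolution problem can be illustrated by aggregating a fine-resolution shrub mask to coarser pixels. In the sketch below (synthetic data and a hypothetical 4 x 4 aggregation factor), each coarse pixel keeps only the shrub fraction inside it, so individual shrubs, and any species identity, are no longer resolved; a majority-rule hard classification at the coarse scale records no shrubs at all, even though the mean cover fraction is preserved.

```python
def aggregate(mask, block):
    """Average a binary mask over non-overlapping block x block windows."""
    rows, cols = len(mask), len(mask[0])
    assert rows % block == 0 and cols % block == 0, "grid must tile evenly"
    coarse = []
    for r0 in range(0, rows, block):
        coarse_row = []
        for c0 in range(0, cols, block):
            window = [mask[r][c]
                      for r in range(r0, r0 + block)
                      for c in range(c0, c0 + block)]
            coarse_row.append(sum(window) / len(window))  # shrub fraction
        coarse.append(coarse_row)
    return coarse

# Synthetic 8 x 8 fine-resolution mask (1 = shrub canopy) with two small shrubs.
fine = [[0] * 8 for _ in range(8)]
for r, c in [(1, 1), (1, 2), (2, 1), (5, 6)]:
    fine[r][c] = 1

coarse = aggregate(fine, block=4)  # pixels 4x coarser in each direction
majority = [[1 if f >= 0.5 else 0 for f in row] for row in coarse]
print(coarse)    # [[0.1875, 0.0], [0.0, 0.0625]]
print(majority)  # [[0, 0], [0, 0]]
```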

Unmanned aerial vehicles (UAVs), which are light, low-cost, low-altitude, ground-operated flight platforms capable of carrying a variety of equipment and performing a variety of missions, are becoming increasingly popular in scientific research [13]. UAVs give scientists a remarkable opportunity to match the observation scale to a variety of ecological variables and to monitor environments responsively at high spatial and temporal resolutions. UAV-based vegetation classification is also cost-effective [14]. High-resolution UAV images have been used to extract the structural and functional attributes of vegetation and to explore the mechanisms of biodiversity [15,16]. UAVs have also proven effective for measuring the leaf angles of hardwood tree species [17]. If the flight altitude is low or the sensor resolution is sufficiently high, previously undetected object details can be spatially resolved, which is the main advantage of using UAVs to study vegetation dynamics [18]. UAVs provide large amounts of data that can be used to study spatial ecology and monitor environmental phenomena, and they represent an important step toward more effective and efficient monitoring and management of natural resources [14,19].

Sensor quality is steadily improving, and a variety of sensors are now widely used [20,21,22]. RGB images may become an important data source for investigating FVC in desert regions [10,11,12,23], yet few studies have explored such applications. Guo et al. used UAV RGB images and remote sensing indices to obtain FVC in the Mu Us Sandy Land [24]. Li et al. proposed a mean-based spectral decomposition method to estimate FVC from RGB photos taken by a UAV [25]. These studies confirmed that FVC can be estimated from RGB data with high accuracy.
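One commonly used index-based route from RGB imagery to FVC (shown here as an assumption, not as the specific procedure of the studies cited above) is to compute a visible-band index such as the visible-band difference vegetation index, VDVI = (2G - R - B) / (2G + R + B), and feed it to the dimidiate pixel model FVC = (VI - VI_soil) / (VI_veg - VI_soil). The pixel values and the soil and vegetation endmembers below are hypothetical; in practice the endmembers are often taken from low and high percentiles of the index histogram.

```python
def vdvi(r, g, b):
    """Visible-band difference vegetation index for one pixel."""
    denom = 2 * g + r + b
    return 0.0 if denom == 0 else (2 * g - r - b) / denom

def dimidiate_fvc(vi, vi_soil, vi_veg):
    """Dimidiate pixel model: linear mixing between soil and vegetation, clipped to [0, 1]."""
    f = (vi - vi_soil) / (vi_veg - vi_soil)
    return min(1.0, max(0.0, f))

# Hypothetical 8-bit RGB pixels: bare sand, shrub canopy and a mixed pixel.
pixels = {"sand": (200, 180, 150), "shrub": (60, 120, 50), "mixed": (130, 150, 100)}
VI_SOIL, VI_VEG = 0.0, 0.3  # assumed endmember index values

for name, (r, g, b) in pixels.items():
    vi = vdvi(r, g, b)
    print(f"{name}: VDVI = {vi:.3f}, FVC = {dimidiate_fvc(vi, VI_SOIL, VI_VEG):.2f}")
```

This yields one cover fraction per pixel without separating species, which is why species-level FVC instead calls for a classification step.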

Recently, machine learning methods have been applied to estimate FVC and other vegetation parameters because of their robust performance and nonlinear fitting capability [8,26,27]. For example, backpropagation neural networks (BPNNs) and random forests (RFs) have been used to estimate FVC [26,27,28]. Image pattern recognition generally involves four steps: image acquisition, image preprocessing, feature extraction and classification [29]. It has been used in studies of phenology [30], plant phenotype analysis [31], crop yield estimation [32] and biodiversity assessment [18]. However, few FVC studies have combined machine learning with image pattern recognition, especially for sparse vegetation in desert regions.
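The four steps of image pattern recognition can be sketched as a minimal pixel-based classification pipeline. To keep the example self-contained, a nearest-centroid classifier stands in for RF or BPNN, and the image, training pixels and class names (`bare`, `shrub_A`, `shrub_B`) are hypothetical; a real workflow would read an orthomosaic and use a library classifier trained on ground-surveyed samples.

```python
import math

# 1. Image acquisition (hypothetical): a tiny "image" of 8-bit RGB pixels.
image = [
    [(205, 185, 150), (60, 125, 45), (70, 110, 90)],
    [(198, 178, 146), (65, 130, 50), (75, 105, 95)],
]

# 2. Preprocessing: scale to [0, 1] (radiometric correction would go here).
def preprocess(pixel):
    return tuple(v / 255.0 for v in pixel)

# 3. Feature extraction: chromatic coordinates plus VDVI.
def features(pixel):
    r, g, b = pixel
    s = r + g + b or 1e-9
    vdvi = (2 * g - r - b) / (2 * g + r + b or 1e-9)
    return (r / s, g / s, b / s, vdvi)

# 4. Classification: nearest centroid of labelled training pixels
#    (a simple stand-in for the RF/BPNN classifiers discussed in the text).
training = {
    "bare": [(200, 180, 150), (210, 190, 160)],
    "shrub_A": [(60, 120, 50), (62, 128, 48)],
    "shrub_B": [(72, 108, 92), (78, 104, 96)],
}

def centroid(vectors):
    return tuple(sum(col) / len(vectors) for col in zip(*vectors))

centroids = {
    label: centroid([features(preprocess(p)) for p in pixels])
    for label, pixels in training.items()
}

def classify(pixel):
    f = features(preprocess(pixel))
    return min(centroids, key=lambda lbl: math.dist(f, centroids[lbl]))

label_map = [[classify(p) for p in row] for row in image]
print(label_map)
```

The resulting label map is exactly the kind of input from which species-level FVC can be computed by pixel counting.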

The desert ecosystem in northwest China is fragile and unstable, characterized mainly by an arid climate, scarce precipitation, high evaporation rates, sparse vegetation and barren land. Under the influence of human land use, global climate change and geographic factors, these areas have become some of the fastest-desertifying areas in China. Desertification not only affects regional drought conditions but also releases large amounts of carbon into the atmosphere through the degradation of vegetation and soil, which may contribute to regional or global climate change. Prevention and control of desertification has become an important part of China’s ecological civilization construction policy, and monitoring sparse vegetation cover is integral to managing desert ecosystems [25]. FVC derived from high-spatial-resolution UAV imagery could be highly effective for mapping desert vegetation cover and assessing degradation, salinization and desertification [23].

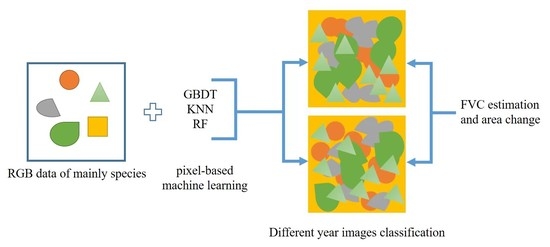

In this study, we used high-resolution RGB data and pixel-based machine learning classification methods to estimate the vegetation coverage of the major species in the study area. Our objective was to assess the reliability of this approach for extracting vegetation coverage. The methods and results of this study provide insights into techniques for extracting species-level vegetation coverage of desert plants from RGB data.

4. Discussion

In previous studies, FVC estimation methods based on remote sensing data were mainly applied at global or other large scales [45]. To explore the FVC of each species within a small area, we evaluated the ability of high-resolution RGB data to estimate FVC in desert regions. We combined ground surveys of the location of each species with the images to build more accurate training and validation samples, and we applied a pixel-based machine learning method to RGB data from two periods. Judging by the satisfactory overall accuracy in validation, high-resolution RGB data achieved reliable performance in FVC estimation, even at the species level, in line with results reported in previous studies. Zhou et al. used the RF method to classify desert vegetation based on RGB data [33] and proposed a method for mapping rare desert vegetation that combines a pixel-based RF classifier with VDVI–texture features, which achieved high accuracy. Olariu et al. found that RGB drone sensors were capable of providing highly accurate classifications of woody plant species in semiarid landscapes [46]. However, not all species in this study were classified with good accuracy, which indicates that some species may be difficult to distinguish in RGB data. For example, the accuracy for H (Haloxylon ammodendron (C. A. Mey.) Bunge) was the lowest, indicating that it was misclassified more often than the other species. This may be due to a lack of samples of this species or to the RGB characteristics of H not differing significantly from those of other vegetation types, resulting in poor classification of H. Oddi et al. combined high-resolution RGB images provided by UAVs with pixel-based classification workflows to map vegetation in recently eroded subalpine grasslands at the level of life forms (i.e., trees, shrubs and herbaceous species) [47].
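Per-species accuracy of the kind discussed above is usually read from a confusion matrix of validation pixels. The sketch below computes overall accuracy, producer's accuracy (1 minus omission error) and user's accuracy (1 minus commission error). The class names and counts are invented for illustration and are not results from this study; a class whose reference pixels are often assigned to other classes shows up as a low producer's accuracy.

```python
# Rows = reference (ground survey) class, columns = predicted class.
# Hypothetical validation counts, NOT results from this study.
classes = ["bare", "sp_1", "sp_2", "sp_3"]
confusion = [
    [95, 2, 2, 1],
    [3, 88, 6, 3],
    [2, 5, 90, 3],
    [4, 10, 8, 28],
]

def accuracy_report(matrix):
    """Return overall accuracy and per-class producer's and user's accuracy."""
    n = len(matrix)
    total = sum(sum(row) for row in matrix)
    correct = sum(matrix[i][i] for i in range(n))
    row_sums = [sum(row) for row in matrix]                           # reference totals
    col_sums = [sum(matrix[r][c] for r in range(n)) for c in range(n)]  # predicted totals
    producers = [matrix[i][i] / row_sums[i] for i in range(n)]
    users = [matrix[i][i] / col_sums[i] for i in range(n)]
    return correct / total, producers, users

oa, producers, users = accuracy_report(confusion)
print(f"overall accuracy = {oa:.3f}")
for name, pa, ua in zip(classes, producers, users):
    print(f"{name}: producer's = {pa:.3f}, user's = {ua:.3f}")
```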

Geng et al. used a boosted regression tree (BRT) model that effectively enhanced the vegetation information in RGB images and improved the accuracy of FVC estimation [48]. Wang et al. proposed a new method to classify woody and herbaceous vegetation and calculated FVC from high-resolution RGB images using a classification and regression tree (CART) machine learning algorithm [49]. Guo et al. obtained FVC and biomass information using an object-based classification method, a single-shrub canopy biomass model and a vegetation index-based method [24]. These studies provide guidance for future work aimed at improving species-level estimation of vegetation coverage in deserts using RGB data. Measures such as expanding the color space, extracting additional vegetation indices, combining multiple models and optimizing algorithms may play a considerable role in applying RGB data to estimate vegetation coverage in desert regions. Other approaches are also worth exploring. Egli et al. presented a novel convolutional neural network (CNN) approach for tree species classification based on low-cost UAV RGB image data, achieving a validation accuracy of 92% on spatially and temporally independent data [50]. Zhou et al. evaluated the feasibility of using UAV-based RGB imagery for species-level wetland vegetation classification and employed object-based image analysis (OBIA) to overcome the limitations of traditional pixel-based classification methods [51]. These methods may also improve accuracy when applied to desert vegetation, although further studies are required to verify this hypothesis.
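Expanding the color space and extracting additional vegetation indices amounts to enriching the per-pixel feature vector passed to the classifier. A minimal sketch, assuming 8-bit RGB input: it uses the standard library's `colorsys` module for HSV and two widely used visible-band indices, the excess green index ExG = 2g - r - b on chromatic coordinates and VDVI. The choice of features is illustrative; which ones actually help separate desert species would have to be tested.

```python
import colorsys

def pixel_features(r8, g8, b8):
    """Return an expanded feature dict for one 8-bit RGB pixel."""
    r, g, b = r8 / 255.0, g8 / 255.0, b8 / 255.0
    h, s, v = colorsys.rgb_to_hsv(r, g, b)  # expanded color space (HSV)
    total = r + g + b
    rn, gn, bn = (r / total, g / total, b / total) if total else (0.0, 0.0, 0.0)
    exg = 2 * gn - rn - bn                   # excess green index on chromatic coordinates
    denom = 2 * g + r + b
    vdvi = (2 * g - r - b) / denom if denom else 0.0
    return {"R": r, "G": g, "B": b, "H": h, "S": s, "V": v,
            "ExG": exg, "VDVI": vdvi}

print(pixel_features(60, 120, 50))
```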

Our study showed that the main desert vegetation in the study area changed slightly between 2006 and 2019, which suggests that the desert environment may have affected the study area over this period. In addition, crops that were absent in 2006 appeared in the study area in 2019, which indicates that the study area was disturbed by human activity. China has been working to prevent desertification and to improve desert areas through various measures [52,53]. With respect to FVC estimation and desertification control in desert areas, improvements can be made in the following aspects.
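Change between two acquisition dates, such as the 2006 and 2019 images, can be summarized per class as the difference in FVC in percentage points, with a class absent on one date (for example, newly appearing crops) treated as zero cover on that date. The class names and values below are placeholders, not the study's results.

```python
def fvc_change(fvc_t1, fvc_t2):
    """Per-class FVC change (t2 - t1) in percentage points.

    Classes missing from one date are treated as 0 cover on that date.
    """
    classes = sorted(set(fvc_t1) | set(fvc_t2))
    return {c: round(100 * (fvc_t2.get(c, 0.0) - fvc_t1.get(c, 0.0)), 2)
            for c in classes}

# Placeholder per-class FVC fractions (NOT the study's results).
fvc_2006 = {"sp_1": 0.12, "sp_2": 0.05}
fvc_2019 = {"sp_1": 0.11, "sp_2": 0.06, "crop": 0.03}

print(fvc_change(fvc_2006, fvc_2019))
```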

Although UAV RGB data achieved notable performance in vegetation classification and coverage extraction, the present study has some limitations. Compared with other image types (e.g., multispectral and hyperspectral), RGB data contain less spectral band information [54,55]. The near-infrared band is particularly important in vegetation studies [56,57,58,59]. Cameras can be modified to capture near-infrared information, which could be used to improve the classification accuracy of desert vegetation and quantify vegetation coverage more accurately.
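Why a near-infrared band helps can be seen from NDVI = (NIR - R) / (NIR + R): green canopy reflects strongly in the near infrared and absorbs red light, whereas bare sand does not. The sketch compares the contrast between sand and a shrub under NDVI and under the RGB-only VDVI. The reflectance values are hypothetical and chosen only to illustrate the idea; real values depend on species, sensor and illumination, and a larger index gap does not by itself guarantee better classification.

```python
def ndvi(nir, red):
    """Normalized difference vegetation index from NIR and red reflectance."""
    return (nir - red) / (nir + red) if nir + red else 0.0

def vdvi(red, green, blue):
    """Visible-band difference vegetation index (RGB only)."""
    denom = 2 * green + red + blue
    return (2 * green - red - blue) / denom if denom else 0.0

# Hypothetical surface reflectances (0-1) for bare sand and a green shrub.
sand = {"R": 0.30, "G": 0.27, "B": 0.22, "NIR": 0.35}
shrub = {"R": 0.08, "G": 0.12, "B": 0.05, "NIR": 0.40}

for name, px in (("sand", sand), ("shrub", shrub)):
    print(name,
          f"NDVI = {ndvi(px['NIR'], px['R']):.3f}",
          f"VDVI = {vdvi(px['R'], px['G'], px['B']):.3f}")

# Contrast between the two surfaces under each index.
ndvi_gap = ndvi(shrub["NIR"], shrub["R"]) - ndvi(sand["NIR"], sand["R"])
vdvi_gap = vdvi(shrub["R"], shrub["G"], shrub["B"]) - vdvi(sand["R"], sand["G"], sand["B"])
print(f"NDVI gap = {ndvi_gap:.3f}, VDVI gap = {vdvi_gap:.3f}")
```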

In addition, the optimal spatial resolution depends on the research object and must be taken into account. Rather than always pursuing the highest possible resolution, the most suitable spatial resolution should be selected. It is therefore important to explore the optimal spatial resolution for different vegetation features and to determine the most appropriate UAV flight altitudes.
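Selecting a flight altitude for a target resolution follows from the standard ground sampling distance (GSD) relation for a nadir frame camera, GSD = (sensor width × altitude) / (focal length × image width in pixels). The camera parameters below are hypothetical placeholders, not those of the UAV used in this study.

```python
def gsd_cm(altitude_m, sensor_width_mm, focal_length_mm, image_width_px):
    """Ground sampling distance (cm/pixel) for a nadir frame camera."""
    # mm / mm cancels, leaving metres of ground per pixel.
    gsd_m = (sensor_width_mm * altitude_m) / (focal_length_mm * image_width_px)
    return gsd_m * 100.0

def altitude_for_gsd(target_gsd_cm, sensor_width_mm, focal_length_mm, image_width_px):
    """Flight altitude (m) that yields the requested GSD."""
    return (target_gsd_cm / 100.0) * focal_length_mm * image_width_px / sensor_width_mm

# Hypothetical camera: 13.2 mm sensor width, 8.8 mm lens, 5472 px image width.
CAMERA = dict(sensor_width_mm=13.2, focal_length_mm=8.8, image_width_px=5472)

for h in (30, 60, 100):
    print(f"{h:>3} m -> {gsd_cm(h, **CAMERA):.2f} cm/px")
print(f"2 cm/px needs about {altitude_for_gsd(2.0, **CAMERA):.1f} m")
```

GSD grows linearly with altitude, while the ground footprint of each image grows with the square of altitude, so a lower flight resolves smaller shrubs at the cost of covering less ground per image.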

We obtained image data for only a specific period in the study area; therefore, our results reflect the status of desert vegetation only during that period and do not take full advantage of UAV capabilities, such as the acquisition of time-series data. In future work, UAVs should be used to photograph desert vegetation at various times within a given year to track its growth status [36].