Reference Data Accuracy Impacts Burned Area Product Validation: The Role of the Expert Analyst

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

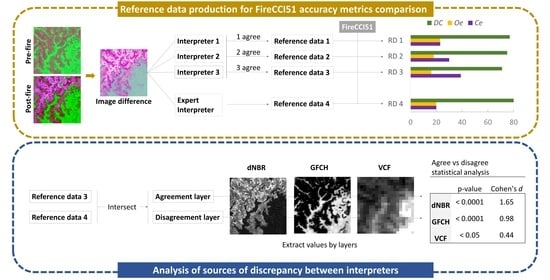

2.2. Reference Burned Area Data Production

2.3. Accuracy Metrics Comparison

2.4. Analysis of Sources of Discrepancy between Interpreters

3. Results

3.1. Accuracy Metrics Comparison

3.2. Analysis of the Sources of Discrepancy between Interpreters

4. Discussion

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | RDc1 | RDc2 | RDc3 | External RD |
|---|---|---|---|---|
| Ce | 23 | 30 ** | 39 **** | 20 |
| Oe | 23 | 18 | 16 | 20 |
| DC | 77 | 75 | 71 ** | 80 |
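
The Ce, Oe and DC rows above are consistent with the usual definitions of commission error, omission error and Dice coefficient expressed as percentages. The following is a minimal sketch under that assumption; the function names and the pixel counts are hypothetical and are not taken from the article. It computes the three metrics from counts of true positives, false positives and false negatives, and shows that DC can be recovered from Ce and Oe alone.

```python
def accuracy_metrics(tp, fp, fn):
    """Return (Ce, Oe, DC) in percent from burned-class agreement counts.

    tp: burned in both product and reference
    fp: burned in product only (commission)
    fn: burned in reference only (omission)
    """
    ce = 100 * fp / (tp + fp)            # commission error
    oe = 100 * fn / (tp + fn)            # omission error
    dc = 100 * 2 * tp / (2 * tp + fp + fn)  # Dice coefficient
    return ce, oe, dc


def dice_from_errors(ce_pct, oe_pct):
    """Dice coefficient (percent) implied by Ce and Oe (percent).

    Uses DC = 2PR / (P + R) with P = 1 - Ce and R = 1 - Oe.
    """
    precision = 1 - ce_pct / 100
    recall = 1 - oe_pct / 100
    return 100 * 2 * precision * recall / (precision + recall)


# Hypothetical counts chosen only to illustrate the formulas.
print(accuracy_metrics(tp=77, fp=23, fn=23))

# (Ce, Oe, reported DC) for each column of the table above.
table = {
    "RDc1": (23, 23, 77),
    "RDc2": (30, 18, 75),
    "RDc3": (39, 16, 71),
    "External RD": (20, 20, 80),
}
for name, (ce, oe, dc_reported) in table.items():
    print(name, round(dice_from_errors(ce, oe), 1), dc_reported)
```

Because the tabulated Ce and Oe values are themselves rounded, the recomputed DC should only be expected to match the reported DC to within about one percentage point.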

| | Agree (mean) | Disagree (mean) | p-Value (agreement vs. disagreement layers) | Cohen’s d (agreement vs. disagreement layers) |
|---|---|---|---|---|
| Severity (dNBR) | 0.3 | 0.2 | <0.0001 | 1.65 |
| Vegetation Continuous Field (VCF) | 25.4 | 30.7 | <0.05 | 0.44 |
| Global Forest Canopy Height (GFCH) | 2.5 | 5.1 | <0.0001 | 0.98 |
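
Cohen's d in the table above is an effect size for the difference between the agreement and disagreement layers. The following is a minimal sketch of the classic pooled-standard-deviation form of Cohen's d. It is an assumption that this variant matches the one used for the table, and the sample values below are hypothetical, not data from the study.

```python
from math import sqrt
from statistics import mean, variance


def cohens_d(group_a, group_b):
    """Cohen's d using the pooled sample standard deviation.

    d = (mean_a - mean_b) / s_pooled, where s_pooled combines the
    unbiased sample variances weighted by their degrees of freedom.
    """
    n_a, n_b = len(group_a), len(group_b)
    pooled_var = (
        (n_a - 1) * variance(group_a) + (n_b - 1) * variance(group_b)
    ) / (n_a + n_b - 2)
    return (mean(group_a) - mean(group_b)) / sqrt(pooled_var)


# Hypothetical dNBR samples for pixels where interpreters agree/disagree.
agree_dnbr = [0.28, 0.35, 0.31, 0.26, 0.30]
disagree_dnbr = [0.22, 0.18, 0.25, 0.15, 0.20]
print(round(cohens_d(agree_dnbr, disagree_dnbr), 2))
```

A positive d here means the first group has the larger mean. By the common rule of thumb, values near 0.5 are read as medium effects and values above 0.8 as large effects.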


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Franquesa, M.; Rodriguez-Montellano, A.M.; Chuvieco, E.; Aguado, I. Reference Data Accuracy Impacts Burned Area Product Validation: The Role of the Expert Analyst. Remote Sens. 2022, 14, 4354. https://doi.org/10.3390/rs14174354
