SAR Image Fusion Classification Based on the Decision-Level Combination of Multi-Band Information

Abstract

1. Introduction

2. Single-Band SAR Image Classification Based on Convolutional Neural Networks

2.1. Convolutional Neural Networks (CNN)

2.1.1. Convolutional Layers
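
The sketch below is a generic illustration of what a convolutional layer computes. It is not the network used in this paper. It slides one kernel over a single-channel image with stride 1 and no padding, then applies a ReLU. As in most deep-learning libraries, the "convolution" is implemented as cross-correlation, so the kernel is not flipped. The names `conv2d_valid` and `relu` are hypothetical helpers.

```python
from typing import List

Matrix = List[List[float]]


def conv2d_valid(image: Matrix, kernel: Matrix, bias: float = 0.0) -> Matrix:
    """Single-channel 2D convolution as used in CNNs (cross-correlation,
    no kernel flip), stride 1, no padding ("valid" output size)."""
    ih, iw = len(image), len(image[0])
    kh, kw = len(kernel), len(kernel[0])
    out = []
    for r in range(ih - kh + 1):
        row = []
        for c in range(iw - kw + 1):
            # Dot product between the kernel and the image patch under it.
            s = sum(image[r + i][c + j] * kernel[i][j]
                    for i in range(kh) for j in range(kw))
            row.append(s + bias)
        out.append(row)
    return out


def relu(fmap: Matrix) -> Matrix:
    """Element-wise ReLU activation applied to a feature map."""
    return [[max(0.0, v) for v in row] for row in fmap]


if __name__ == "__main__":
    img = [[1, 2, 0],
           [0, 1, 3],
           [2, 1, 0]]
    k = [[1, 0],
         [0, -1]]
    print(relu(conv2d_valid(img, k)))  # [[0.0, 0.0], [0.0, 1.0]]
```

A 3x3 input and a 2x2 kernel give a 2x2 feature map. In a real layer this is repeated for every input channel and every learned kernel.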

2.1.2. Pooling Layers
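
The following is a minimal sketch of max pooling with a 2x2 window and stride 2, the most common pooling configuration. The window size and stride of the paper's own network are not given here, so both are parameters. `max_pool2d` is a hypothetical helper name.

```python
from typing import List

Matrix = List[List[float]]


def max_pool2d(fmap: Matrix, size: int = 2, stride: int = 2) -> Matrix:
    """Max pooling over size x size windows; windows that would run past
    the border are dropped (no padding)."""
    h, w = len(fmap), len(fmap[0])
    out = []
    for r in range(0, h - size + 1, stride):
        row = []
        for c in range(0, w - size + 1, stride):
            # Keep only the strongest response inside each window.
            row.append(max(fmap[r + i][c + j]
                           for i in range(size) for j in range(size)))
        out.append(row)
    return out


if __name__ == "__main__":
    fmap = [[1, 3, 2, 0],
            [4, 2, 1, 5],
            [0, 1, 7, 2],
            [3, 2, 1, 1]]
    print(max_pool2d(fmap))  # [[4, 5], [3, 7]]
```

Pooling halves each spatial dimension here. This reduces computation in later layers and makes the features less sensitive to small shifts in the input.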

2.1.3. Fully Connected Layer
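
A fully connected layer maps the flattened feature vector to one score per class. A softmax then turns those scores into class probabilities. The sketch below is generic: the layer sizes and weights are made-up toy values, and ending the classifier with a softmax is an assumption rather than a detail taken from this paper. `dense` and `softmax` are hypothetical helper names.

```python
import math
from typing import List


def dense(x: List[float], weights: List[List[float]], bias: List[float]) -> List[float]:
    """Fully connected layer: z_k = sum_i W[k][i] * x[i] + b[k]."""
    return [sum(w_ki * x_i for w_ki, x_i in zip(w_k, x)) + b_k
            for w_k, b_k in zip(weights, bias)]


def softmax(z: List[float]) -> List[float]:
    """Numerically stable softmax; outputs are non-negative and sum to 1."""
    m = max(z)  # subtract the max so exp() cannot overflow
    exps = [math.exp(v - m) for v in z]
    total = sum(exps)
    return [e / total for e in exps]


if __name__ == "__main__":
    features = [1.0, 2.0]                     # flattened feature vector (toy values)
    W = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]  # 3 classes x 2 features (toy values)
    b = [0.0, 0.0, 0.0]
    probs = softmax(dense(features, W, b))
    print(probs.index(max(probs)))  # predicted class index: 2
```

The per-class probability vector produced for each pixel or patch is the type of output that a decision-level fusion stage can consume.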

2.2. Single-Band SAR Image Classification

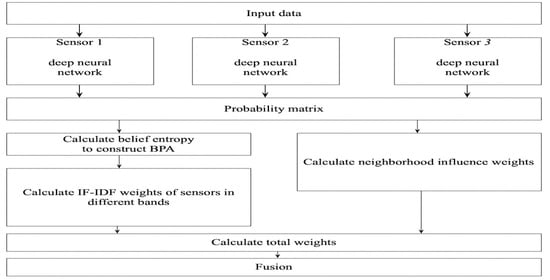

3. SAR Image Classification Method Based on the Decision-Level Combination of Multi-Band Information

3.1. Construction of Basic Probability Assignment (BPA) Functions of the Sensors’ Classification

3.1.1. Construct the Probability Matrix

3.1.2. Construct the BPAs

3.2. Calculate the Weights of Sensors in Different Bands

3.2.1. Calculate the Conflict Coefficient between the BPA of Sensors in Different Bands
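
In Dempster-Shafer theory, the classical measure of conflict between two BPAs is Dempster's coefficient K. It is the total product mass that the two BPAs assign to pairs of focal elements with an empty intersection. The sketch below computes K. The coefficient defined in this subsection may be a different or modified measure. Representing a BPA as a dict from `frozenset` of class labels to mass is an assumption made for illustration.

```python
from itertools import product
from typing import Dict, FrozenSet

BPA = Dict[FrozenSet[str], float]


def conflict_coefficient(m1: BPA, m2: BPA) -> float:
    """Classical Dempster conflict K: sum of m1(B) * m2(C) over all pairs
    of focal elements B, C whose intersection is empty."""
    return sum(v1 * v2
               for (b, v1), (c, v2) in product(m1.items(), m2.items())
               if not (b & c))


if __name__ == "__main__":
    A, B = frozenset({"A"}), frozenset({"B"})  # hypothetical class labels
    AB = A | B
    m1 = {A: 0.6, B: 0.3, AB: 0.1}
    m2 = {A: 0.2, B: 0.7, AB: 0.1}
    # 0.6 * 0.7 + 0.3 * 0.2, approximately 0.48
    print(conflict_coefficient(m1, m2))
```

K ranges from 0 (no conflict) to 1 (the two BPAs share no compatible focal elements). Values near 1 signal that the two sensors strongly disagree.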

3.2.2. Calculate the TF-IDF Weights of Sensors in Different Bands
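
For reference, the classical TF-IDF weight from text retrieval is given below. How the method maps terms and documents onto sensors and bands is not assumed here.

$$
w_{t,d} = \mathrm{tf}_{t,d} \cdot \log \frac{N}{\mathrm{df}_t}, \qquad \mathrm{tf}_{t,d} = \frac{n_{t,d}}{\sum_{t'} n_{t',d}}
$$

Here $n_{t,d}$ is the number of occurrences of term $t$ in document $d$. $N$ is the number of documents in the collection, and $\mathrm{df}_t$ is the number of documents that contain $t$. A term receives a high weight when it is frequent in one document but rare across the collection.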

3.3. Calculate the Neighborhood Influence Weight and Total Weight

3.4. Fusion
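
The following is a minimal sketch of one common weighted evidence-combination scheme from the Dempster-Shafer literature. The BPAs are first averaged with per-sensor weights, and the averaged BPA is then combined with itself n - 1 times by Dempster's rule. This is an illustration of that general scheme and is not necessarily the exact fusion rule of this paper. The weights are placeholders for the total weights named in Section 3.3. The dict-of-`frozenset` BPA format and all function names are hypothetical.

```python
from functools import reduce
from itertools import product
from typing import Dict, FrozenSet, List

BPA = Dict[FrozenSet[str], float]


def dempster_combine(m1: BPA, m2: BPA) -> BPA:
    """Dempster's rule: conjunctive combination normalized by 1 - K."""
    combined: BPA = {}
    conflict = 0.0
    for (b, v1), (c, v2) in product(m1.items(), m2.items()):
        inter = b & c
        if inter:
            combined[inter] = combined.get(inter, 0.0) + v1 * v2
        else:
            conflict += v1 * v2  # mass on empty intersections (K)
    if conflict >= 1.0 - 1e-12:
        raise ValueError("Total conflict (K = 1): Dempster's rule is undefined")
    return {a: v / (1.0 - conflict) for a, v in combined.items()}


def weighted_average_bpa(bpas: List[BPA], weights: List[float]) -> BPA:
    """Weighted mean of BPAs; weights are normalized to sum to 1."""
    total = sum(weights)
    avg: BPA = {}
    for m, w in zip(bpas, weights):
        for a, v in m.items():
            avg[a] = avg.get(a, 0.0) + (w / total) * v
    return avg


def fuse(bpas: List[BPA], weights: List[float]) -> BPA:
    """Average the evidence with the given weights, then combine the
    averaged BPA with itself n - 1 times using Dempster's rule."""
    avg = weighted_average_bpa(bpas, weights)
    return reduce(dempster_combine, [avg] * (len(bpas) - 1), avg)


if __name__ == "__main__":
    A, B, C = (frozenset({x}) for x in "ABC")  # hypothetical class labels
    # Toy BPAs from three hypothetical band classifiers; the third disagrees.
    bpas = [{A: 0.7, B: 0.2, C: 0.1},
            {A: 0.6, B: 0.3, C: 0.1},
            {A: 0.1, B: 0.8, C: 0.1}]
    fused = fuse(bpas, weights=[1.0, 1.0, 1.0])  # equal weights as a placeholder
    print(max(fused, key=fused.get))  # frozenset({'A'})
```

Averaging before combining keeps a single conflicting sensor from dominating the result, which Dempster's rule alone is prone to. Unequal weights shift the averaged BPA toward the sensors judged more reliable.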

4. Results

4.1. Preprocessing

4.2. Experiments with the Dongying City Dataset

4.3. Experiments with the Baotou City Dataset

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest


| | C-Band SAR Classification | L-Band SAR Classification | P-Band SAR Classification | Modified Average Approach | Our Method |
|---|---|---|---|---|---|
| Accuracy | 66.32% | 64.08% | 63.17% | 68.67% | 70.34% |

| | C-Band SAR Classification | L-Band SAR Classification | P-Band SAR Classification | X-Band SAR Classification | Modified Average Approach | Fusion Result |
|---|---|---|---|---|---|---|
| Accuracy | 59.85% | 62.39% | 62.07% | 67.75% | 68.97% | 69.26% |

Publisher’s Note: MDPI stays neutral with regard to jurisdictional claims in published maps and institutional affiliations.

© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Zhu, J.; Pan, J.; Jiang, W.; Yue, X.; Yin, P. SAR Image Fusion Classification Based on the Decision-Level Combination of Multi-Band Information. Remote Sens. 2022, 14, 2243. https://doi.org/10.3390/rs14092243

Zhu J, Pan J, Jiang W, Yue X, Yin P. SAR Image Fusion Classification Based on the Decision-Level Combination of Multi-Band Information. Remote Sensing. 2022; 14(9):2243. https://doi.org/10.3390/rs14092243

Chicago/Turabian Style: Zhu, Jinbiao, Jie Pan, Wen Jiang, Xijuan Yue, and Pengyu Yin. 2022. "SAR Image Fusion Classification Based on the Decision-Level Combination of Multi-Band Information" Remote Sensing 14, no. 9: 2243. https://doi.org/10.3390/rs14092243

APA Style: Zhu, J., Pan, J., Jiang, W., Yue, X., & Yin, P. (2022). SAR Image Fusion Classification Based on the Decision-Level Combination of Multi-Band Information. Remote Sensing, 14(9), 2243. https://doi.org/10.3390/rs14092243