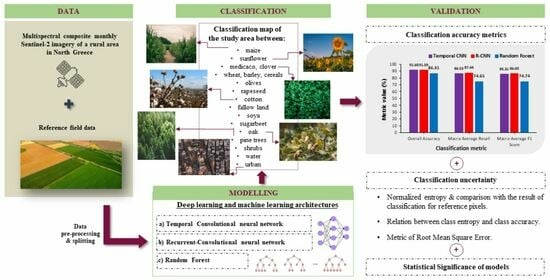

Agricultural Land Cover Mapping through Two Deep Learning Models in the Framework of EU’s CAP Activities Using Sentinel-2 Multitemporal Imagery

Abstract

1. Introduction

2. Feedforward and Recurrent Neural Networks

2.1. Feedforward Neural Networks and Temporal Convolutions

2.2. Recurrent Layers and 2D Convolutions

3. Data and Methods

3.1. Study Area

| Class Name | Percent of Pixels |
|---|---|
| wheat, barley, cereals | 28.68% |
| fallow land | 10.84% |
| cotton | 9.15% |
| olives | 8.76% |
| maize | 8.73% |
| sunflower/rapeseed/soya | 6.86% |
| nuts | 3.23% |
| horticultural | 2.62% |
| rice | 1.02% |
| vineyards | 1.02% |

3.2. Image Data

3.3. Reference Instances

3.4. Data Pre-Processing

3.5. Temporal CNN Classification Model

3.6. R-CNN Classification Model

3.7. Random Forests Benchmark Classification

3.8. Validation of the Results

3.8.1. Classification Accuracy Metrics

3.8.2. Classification Uncertainty Evaluation

4. Results

4.1. Classification Accuracy

4.2. Classification Uncertainty

4.3. Statistical Assessment

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Acknowledgments

Conflicts of Interest

References

| Band Id | Band Number | Band Name | Sentinel-2A Central Wavelength (nm) | Sentinel-2A Bandwidth (nm) | Sentinel-2B Central Wavelength (nm) | Sentinel-2B Bandwidth (nm) |
|---|---|---|---|---|---|---|
| 1 | B2 | Blue | 492.4 | 66 | 492.1 | 66 |
| 2 | B3 | Green | 559.8 | 36 | 559 | 36 |
| 3 | B4 | Red | 664.6 | 31 | 664.9 | 31 |
| 4 | B5 | Vegetation Red Edge 1 | 704.1 | 15 | 703.8 | 16 |
| 5 | B6 | Vegetation Red Edge 2 | 740.5 | 15 | 739.1 | 15 |
| 6 | B7 | Vegetation Red Edge 3 | 782.8 | 20 | 779.7 | 20 |
| 7 | B8 | NIR-1 | 832.8 | 106 | 832.9 | 106 |
| 8 | B8A | NIR-2 | 864.7 | 21 | 864.0 | 21 |
| 9 | B11 | SWIR 1 | 1613.7 | 91 | 1610.4 | 94 |
| 10 | B12 | SWIR 2 | 2202.4 | 175 | 2185.7 | 185 |

| Class Name | Class Id | Number of Parcels in Training Set | Number of Pixels in Training Set | Number of Parcels in Test Set | Number of Pixels in Test Set |
|---|---|---|---|---|---|
| maize | 0 | 178 | 16,720 | 32 | 2783 |
| sunflower | 1 | 132 | 9421 | 23 | 1918 |
| medicago, clover | 2 | 140 | 12,348 | 25 | 2230 |
| wheat, barley, cereals | 3 | 149 | 14,124 | 26 | 2506 |
| olives | 4 | 68 | 4271 | 12 | 1061 |
| rapeseed | 5 | 58 | 5805 | 10 | 857 |
| cotton | 6 | 149 | 21,758 | 26 | 4881 |
| fallow land | 7 | 72 | 5232 | 13 | 641 |
| soya | 8 | 49 | 4381 | 9 | 980 |
| sugarbeet | 9 | 40 | 3180 | 7 | 1233 |
| oak | 10 | 47 | 5635 | 9 | 887 |
| pine trees | 11 | 31 | 2135 | 6 | 341 |
| shrubs | 12 | 40 | 11,731 | 7 | 1090 |
| water | 13 | 13 | 18,394 | 2 | 12,147 |
| urban | 14 | 18 | 3230 | 3 | 824 |
| Total | - | 1184 | 138,365 | 210 | 34,379 |

| Class Name | Temporal CNN F1 Score | Temporal CNN Precision | Temporal CNN Recall | R-CNN F1 Score | R-CNN Precision | R-CNN Recall | Random Forest F1 Score | Random Forest Precision | Random Forest Recall |
|---|---|---|---|---|---|---|---|---|---|
| maize | 83.87 | 88.59 | 79.86 | 84.87 | 86.19 | 83.91 | 76.02 | 70.43 | 82.89 |
| sunflower | 79.04 | 71.65 | 89.02 | 78.25 | 73.15 | 85.17 | 63.41 | 58.22 | 70.49 |
| medicago, clover | 90.72 | 91.77 | 89.93 | 92.00 | 93.22 | 91.03 | 87.51 | 88.55 | 86.78 |
| wheat, barley, cereals | 93.09 | 92.08 | 94.25 | 92.61 | 91.18 | 94.22 | 81.92 | 72.51 | 94.53 |
| olives | 79.73 | 81.12 | 79.44 | 76.86 | 81.30 | 73.69 | 55.68 | 80.78 | 43.19 |
| rapeseed | 94.85 | 97.16 | 93.11 | 95.95 | 96.64 | 95.38 | 89.76 | 95.05 | 85.94 |
| cotton | 91.95 | 92.50 | 91.61 | 90.28 | 91.49 | 89.30 | 82.73 | 82.04 | 83.77 |
| fallow land | 59.30 | 67.27 | 54.79 | 59.38 | 64.88 | 56.59 | 14.51 | 61.40 | 8.81 |
| soya | 87.05 | 83.64 | 92.20 | 90.11 | 89.64 | 91.70 | 69.59 | 88.57 | 59.29 |
| sugarbeet | 81.34 | 83.54 | 80.98 | 84.50 | 87.29 | 83.19 | 66.83 | 92.58 | 53.73 |
| oak | 98.65 | 97.71 | 99.63 | 98.92 | 98.09 | 99.78 | 97.66 | 97.44 | 97.97 |
| pine trees | 91.61 | 96.84 | 87.81 | 92.58 | 95.45 | 90.85 | 77.01 | 97.07 | 64.94 |
| shrubs | 97.64 | 96.52 | 98.83 | 97.70 | 97.19 | 98.31 | 94.87 | 91.94 | 98.10 |
| water | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 100.00 | 99.49 | 99.06 | 99.97 |
| urban | 94.30 | 92.86 | 96.30 | 94.42 | 93.87 | 95.50 | 78.42 | 76.07 | 84.68 |
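As a reading aid for the per-class metrics table above, the following minimal sketch shows the standard relationship between the three reported quantities: the F1 score is the harmonic mean of precision and recall. The function name `f1_score` is hypothetical and is not taken from the paper's code.

```python
def f1_score(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall (same scale as the inputs)."""
    if precision + recall == 0:
        return 0.0
    return 2 * precision * recall / (precision + recall)


# Temporal CNN, maize row of the table above (values in percent).
maize_f1 = f1_score(88.59, 79.86)
print(f"maize F1 from tabulated precision/recall: {maize_f1:.2f}")
```

For the maize row this gives roughly 84.0, close to but not identical with the tabulated 83.87. One possible explanation, stated here only as an assumption, is that the tabulated metrics were each averaged over several runs or folds, in which case the averaged F1 need not equal the harmonic mean of the averaged precision and recall.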

| Class Name | Temporal CNN Entropy (%) | Temporal CNN Accuracy (%) | R-CNN Entropy (%) | R-CNN Accuracy (%) | RF Entropy (%) | RF Accuracy (%) |
|---|---|---|---|---|---|---|
| maize | 11.97 | 81.17 | 11.16 | 87.75 | 45.11 | 81.67 |
| sunflower | 16.61 | 92.70 | 20.92 | 87.96 | 59.00 | 78.78 |
| medicago, clover | 6.52 | 93.77 | 8.53 | 94.39 | 32.61 | 92.65 |
| wheat, barley, cereals | 10.09 | 89.82 | 10.28 | 88.23 | 47.48 | 94.45 |
| olives | 23.95 | 64.47 | 25.07 | 75.31 | 71.25 | 29.31 |
| rapeseed | 1.30 | 100.00 | 1.56 | 99.88 | 32.11 | 98.37 |
| cotton | 5.85 | 95.19 | 10.14 | 88.34 | 39.45 | 88.08 |
| fallow land | 28.10 | 61.00 | 29.90 | 51.64 | 72.70 | 3.12 |
| soya | 7.10 | 98.57 | 4.42 | 100.00 | 43.92 | 72.96 |
| sugarbeet | 30.53 | 24.17 | 29.32 | 40.63 | 69.32 | 11.44 |
| oak | 0.26 | 100.00 | 0.72 | 100.00 | 14.40 | 100.00 |
| pine trees | 3.26 | 99.12 | 4.95 | 100.00 | 22.44 | 80.65 |
| shrubs | 1.91 | 99.82 | 5.85 | 98.26 | 18.60 | 100.00 |
| water | 0.00136 | 100.00 | 0.14 | 100.00 | 0.05 | 100.00 |
| urban | 2.29 | 98.18 | 4.16 | 99.15 | 63.74 | 88.35 |
| Average | 6.63 | 91.6 | 7.76 | 91.59 | 28.94 | 86.31 |

RMSE of Uncertainty Assessment: Temporal CNN 22.16; R-CNN 22.78; RF 34.00.
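The entropy columns above are reported in percent, which suggests a normalized measure. The sketch below shows one common way to obtain such a value, assuming that the uncertainty is the Shannon entropy of a pixel's predicted class probabilities divided by its maximum, log K for K classes. This definition and the function name `normalized_entropy_percent` are assumptions for illustration; the section text that defines the paper's measure is not included here.

```python
import math
from typing import Sequence


def normalized_entropy_percent(probs: Sequence[float]) -> float:
    """Shannon entropy of a class-probability vector, scaled to 0-100.

    Assumption: normalization by log(K), where K is the number of classes,
    so a uniform prediction maps to 100 and a one-hot prediction to 0.
    """
    k = len(probs)
    if k < 2:
        raise ValueError("need at least two classes")
    # Terms with p == 0 contribute nothing (limit of p * log p is 0).
    entropy = -sum(p * math.log(p) for p in probs if p > 0)
    return 100.0 * entropy / math.log(k)


# Hypothetical three-class example: a confident and an ambiguous prediction.
print(normalized_entropy_percent([0.98, 0.01, 0.01]))
print(normalized_entropy_percent([0.40, 0.35, 0.25]))
```

Under this reading, a low class-level entropy paired with high accuracy (for example the water and oak rows) indicates confident and correct predictions, while high entropy paired with low accuracy (fallow land, sugarbeet) flags classes the model is both unsure about and often wrong on.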

| Classifier 1 | Classifier 2 | Chi-Square | p-Value |
|---|---|---|---|
| Temporal CNN | R-CNN | 0.00294 | 0.96 |
| Temporal CNN | RF | 1244.94 | 1.04 × 10⁻²⁷² |
| R-CNN | RF | 1146.88 | 2.13 × 10⁻²⁵¹ |
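The p-values in the table above can be related to the chi-square statistics with a short calculation. The sketch below assumes the statistic is referred to a chi-square distribution with one degree of freedom, as in a McNemar-type paired comparison of two classifiers; the test name is an assumption, since the statistical assessment text is not included here. The function name `chi2_sf_1dof` is hypothetical.

```python
import math


def chi2_sf_1dof(statistic: float) -> float:
    """Upper-tail probability of a chi-square variable with 1 degree of freedom.

    Uses the identity P(X > x) = erfc(sqrt(x / 2)), which follows from
    X being the square of a standard normal variable.
    """
    if statistic < 0:
        raise ValueError("chi-square statistic must be non-negative")
    return math.erfc(math.sqrt(statistic / 2.0))


# Statistics taken from the table above.
for pair, stat in [
    ("Temporal CNN vs. R-CNN", 0.00294),
    ("Temporal CNN vs. RF", 1244.94),
    ("R-CNN vs. RF", 1146.88),
]:
    print(f"{pair}: p = {chi2_sf_1dof(stat):.3g}")
```

Under this assumption, the first comparison gives a p-value near 0.96, so the two deep learning models are not distinguishable at conventional significance levels, whereas both comparisons against RF give p-values that are vanishingly small.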

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Share and Cite

Papadopoulou, E.; Mallinis, G.; Siachalou, S.; Koutsias, N.; Thanopoulos, A.C.; Tsaklidis, G. Agricultural Land Cover Mapping through Two Deep Learning Models in the Framework of EU’s CAP Activities Using Sentinel-2 Multitemporal Imagery. Remote Sens. 2023, 15, 4657. https://doi.org/10.3390/rs15194657
