Implementation Method of Automotive Video SAR (ViSAR) Based on Sub-Aperture Spectrum Fusion

Abstract

1. Introduction

- Compared with airborne and spaceborne platforms, the automotive platform is characterized by low speed, low altitude, and short range. Because motion errors can significantly affect the instantaneous slant range, motion compensation is a key issue in automotive SAR imaging.

- Achieving a high-resolution 2-D image requires a large transmitted bandwidth and a long integration time, which conflicts with the real-time imaging requirements of driving scenarios. Therefore, real-time automotive SAR imaging is another problem that needs to be solved.

- In automotive SAR imaging scenes, the range-azimuth coupling of near targets is much more severe than that of distant targets, which means the problem is strongly space-variant. Some conventional imaging algorithms that ignore this space variance defocus and distort the targets. Therefore, the space-variant problem must be analyzed and handled by a suitable imaging algorithm.
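The resolution and space-variance points above can be illustrated with standard first-order SAR relations: range resolution c/(2B), the broadside small-angle approximation rho_a ≈ λR/(2vT_a) for azimuth resolution, and the range migration sqrt(R² + (L/2)²) − R across a straight aperture of length L. The sketch below is an illustration under these textbook approximations (straight-line motion, no squint), not the processing proposed in this paper; the function names are hypothetical, and the example values are taken from the simulation parameter table (77 GHz, 3600 MHz, 10 m/s, 20 m).

```python
import math

C = 299_792_458.0  # speed of light, m/s


def range_resolution(bandwidth_hz: float) -> float:
    """Slant-range resolution c / (2B) for a transmitted bandwidth B."""
    return C / (2.0 * bandwidth_hz)


def integration_time(fc_hz: float, slant_range_m: float,
                     speed_mps: float, azimuth_res_m: float) -> float:
    """Integration time T_a needed for a target azimuth resolution.

    Broadside, small-angle approximation: rho_a ~ lambda * R / (2 * v * T_a).
    """
    wavelength = C / fc_hz
    return wavelength * slant_range_m / (2.0 * speed_mps * azimuth_res_m)


def range_migration(slant_range_m: float, aperture_m: float) -> float:
    """Extra range sqrt(R^2 + (L/2)^2) - R at the ends of a straight aperture L."""
    return math.hypot(slant_range_m, aperture_m / 2.0) - slant_range_m


if __name__ == "__main__":
    fc, bw, v, r_ref = 77e9, 3600e6, 10.0, 20.0  # values from the simulation table
    rho_r = range_resolution(bw)
    # Integration time that makes the azimuth resolution at r_ref equal rho_r.
    t_a = integration_time(fc, r_ref, v, rho_r)
    aperture = v * t_a
    print(f"range res {rho_r * 100:.2f} cm, T_a {t_a * 1e3:.1f} ms, L {aperture:.3f} m")
    # Same aperture, different target ranges: near targets migrate far more.
    for r in (2.0, 5.0, 20.0):
        print(f"R = {r:4.1f} m -> migration {range_migration(r, aperture) * 100:.2f} cm")
```

With these values the range resolution is about 4.2 cm and the required integration time is roughly 94 ms (about 0.94 m of travel, or some 1800 sweeps of 51.2 μs). Over that same aperture, a target at 2 m migrates by about 5.4 cm, more than one range resolution cell, while a target at 20 m migrates by only about 0.55 cm.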

2. Modeling

2.1. Geometric Model

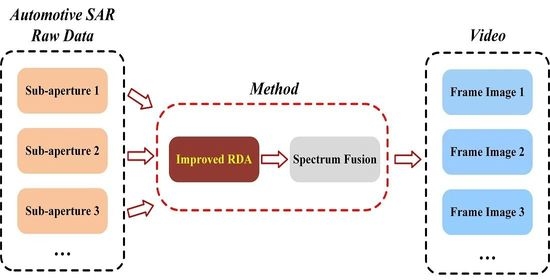

2.2. Basic Implementation Methods of Automotive ViSAR

2.3. The Frame Resolution of Automotive ViSAR Images

2.4. The Frame Rate of Automotive ViSAR Images

3. Imaging Approach

3.1. Sub-Aperture Signal Focusing Processing

3.1.1. Range Dimension Preprocessing

3.1.2. Azimuth Dimension Processing

3.2. Sub-Aperture Data Stitching

3.2.1. The First Frame Image Stitching

3.2.2. The i-th Frame Image Stitching

- (1) Processing of overlapping sub-aperture data

- (2) Processing of new sub-aperture data
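The two processing steps listed for the i-th frame suggest the usual sliding-window bookkeeping of ViSAR: consecutive frames share most of their sub-apertures, so only the new ones need to be focused. The sketch below shows that bookkeeping only, assuming each frame spans a fixed number of equal-length sub-apertures and advances by a fixed number of new ones. The `process` callback is a hypothetical stand-in for sub-aperture focusing, reused results are passed on unchanged (a simplification), and the paper's own treatment of the overlapping data before spectrum fusion is not reproduced. All names are hypothetical.

```python
from collections import deque
from typing import Callable, Deque, Iterable, Iterator, List, TypeVar

Raw = TypeVar("Raw")
Img = TypeVar("Img")


def sliding_subaperture_frames(
    sub_apertures: Iterable[Raw],
    subs_per_frame: int,
    new_per_frame: int,
    process: Callable[[Raw], Img],
) -> Iterator[List[Img]]:
    """Yield frames as lists of processed sub-apertures, oldest first.

    Hypothetical bookkeeping: the first frame processes `subs_per_frame`
    sub-apertures; each later frame keeps the most recent
    `subs_per_frame - new_per_frame` results and processes only
    `new_per_frame` new sub-apertures. A trailing partial update is dropped.
    """
    if not 0 < new_per_frame <= subs_per_frame:
        raise ValueError("require 0 < new_per_frame <= subs_per_frame")
    window: Deque[Img] = deque(maxlen=subs_per_frame)  # drops oldest automatically
    stream = iter(sub_apertures)

    # First frame: every sub-aperture in the window is new.
    for raw in stream:
        window.append(process(raw))
        if len(window) == subs_per_frame:
            break
    if len(window) < subs_per_frame:
        return  # not enough data for a single frame
    yield list(window)

    # i-th frame: reuse the overlapping results, process only the new data.
    fresh = 0
    for raw in stream:
        window.append(process(raw))
        fresh += 1
        if fresh == new_per_frame:
            yield list(window)
            fresh = 0


if __name__ == "__main__":
    # Toy run: 4 sub-apertures per frame, 2 new per frame (50 % overlap).
    for frame in sliding_subaperture_frames(range(8), 4, 2, lambda k: f"sub{k}"):
        print(frame)
```

Under these assumptions each sub-aperture is focused exactly once, and with a sub-aperture duration T_sub a new frame appears every `new_per_frame` × T_sub seconds, so the overlap ratio directly sets the frame rate.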

4. Simulation Results

4.1. Point Targets Simulation

4.2. Automotive SAR Real Data Experiments

5. Discussion

5.1. Size of the Range Blocks

5.2. Computational Complexity

6. Conclusions

Author Contributions

Funding

Conflicts of Interest


| Parameter | Speed | Carrier frequency | Bandwidth | Frequency sweep period | |
|---|---|---|---|---|---|
| Value | 1–20 m/s | 77 GHz | 3 GHz | 51.2 μs | −4° to 4° |

| Parameter | Value |
|---|---|
| Carrier Frequency | 77 GHz |
| Sampling Frequency | 10 MHz |
| Frequency Sweep Period | 51.2 μs |
| Bandwidth | 3600 MHz |
| Speed | 10 m/s |
| Reference slant range | 20 m |
| Height | 1.5 m |
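The FMCW quantities implied by the simulation parameters above follow from elementary relations: chirp rate K = B/T, dechirped beat frequency f_b = 2KR/c for a target at slant range R, and N = f_s·T samples per sweep. The sketch below evaluates them for the tabulated values. It assumes complex (I/Q) sampling, so beat frequencies up to f_s are unambiguous, and one sweep per azimuth sample under the stop-and-go approximation; neither assumption is stated in the table, and the helper name is hypothetical.

```python
C = 299_792_458.0  # speed of light, m/s


def fmcw_budget(fc_hz, fs_hz, sweep_s, bandwidth_hz, speed_mps, ref_range_m):
    """Derived FMCW-SAR quantities (assumes I/Q sampling and stop-and-go)."""
    slope = bandwidth_hz / sweep_s  # chirp rate K = B / T, in Hz/s
    return {
        "wavelength_m": C / fc_hz,
        "samples_per_sweep": fs_hz * sweep_s,
        "chirp_rate_hz_per_s": slope,
        # Dechirped beat frequency of the reference target: f_b = 2 K R / c.
        "ref_beat_freq_hz": 2.0 * slope * ref_range_m / C,
        # With complex sampling, beat frequencies up to fs are unambiguous.
        "max_range_iq_m": fs_hz * C / (2.0 * slope),
        # Platform displacement between consecutive sweeps (azimuth spacing).
        "azimuth_spacing_m": speed_mps * sweep_s,
        "sweep_rate_hz": 1.0 / sweep_s,
    }


if __name__ == "__main__":
    budget = fmcw_budget(77e9, 10e6, 51.2e-6, 3600e6, 10.0, 20.0)
    for name, value in budget.items():
        print(f"{name:>20s}: {value:.6g}")
```

Under the I/Q assumption this gives 512 samples per sweep, a reference beat frequency of about 9.38 MHz, and an unambiguous range of about 21.3 m, which covers the 20 m reference range; with real-valued sampling the limit would halve to about 10.7 m.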

| Method | Target | IRW (cm) | PSLR (dB) | ISLR (dB) |
|---|---|---|---|---|
| Traditional RDA | PT3 | 3.86 | −10.35 | −7.98 |
| | PT4 | 3.66 | −10.34 | −7.93 |
| | PT5 | 3.70 | −10.33 | −8.03 |
| Proposed | PT3 | 3.19 | −13.21 | −10.37 |
| | PT4 | 3.25 | −13.24 | −10.36 |
| | PT5 | 3.19 | −13.23 | −10.33 |
| FFBPA | PT3 | 3.19 | −13.34 | −10.32 |
| | PT4 | 3.19 | −13.24 | −10.29 |
| | PT5 | 3.31 | −13.27 | −10.34 |
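IRW, PSLR, and ISLR in the table above are standard point-target quality measures. The sketch below shows one way to measure them on a finely oversampled 1-D cut (range or azimuth) through a focused point target. It assumes the mainlobe is bounded by the first minima on either side of the peak and takes IRW as the −3 dB width; these conventions may differ from those used to produce the table, and the function name is hypothetical.

```python
import numpy as np


def point_target_metrics(profile: np.ndarray, spacing: float):
    """Return (IRW, PSLR_dB, ISLR_dB) of a finely sampled 1-D impulse response.

    Assumed conventions: the mainlobe runs between the first local minima on
    each side of the peak, and IRW is the width of the region within 3 dB of
    the peak, in the same units as `spacing`.
    """
    power = np.abs(np.asarray(profile)) ** 2
    peak = int(np.argmax(power))
    p_max = power[peak]

    # Walk outward from the peak until the power stops decreasing (first nulls).
    left = peak
    while left > 0 and power[left - 1] < power[left]:
        left -= 1
    right = peak
    while right < power.size - 1 and power[right + 1] < power[right]:
        right += 1

    mainlobe = power[left:right + 1]
    sidelobes = np.concatenate((power[:left], power[right + 1:]))

    pslr_db = 10.0 * np.log10(sidelobes.max() / p_max)
    islr_db = 10.0 * np.log10(sidelobes.sum() / mainlobe.sum())

    # Half-power (-3 dB) samples inside the mainlobe give the IRW.
    half = np.nonzero(mainlobe >= 0.5 * p_max)[0]
    irw = (half[-1] - half[0]) * spacing
    return irw, pslr_db, islr_db


if __name__ == "__main__":
    x = np.linspace(-20.0, 20.0, 40001)  # 0.001 sample spacing, nulls at +/-1
    irw, pslr, islr = point_target_metrics(np.sinc(x), x[1] - x[0])
    print(f"IRW = {irw:.3f}, PSLR = {pslr:.2f} dB, ISLR = {islr:.2f} dB")
```

For an unweighted sinc the sketch returns a PSLR near the theoretical −13.26 dB; the Proposed and FFBPA rows lie close to that value, while the traditional RDA rows are about 3 dB higher.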

| Size of Sub-Aperture | 64 | 128 | 256 | 512 | 1024 |
|---|---|---|---|---|---|
| FFBPA/Proposed | 6.088 | 8.244 | 12.543 | 17.727 | 25.013 |
| RMA/Proposed | 2.863 | 3.019 | 3.904 | 4.689 | 6.675 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Guo, P.; Wu, F.; Tang, S.; Jiang, C.; Liu, C. Implementation Method of Automotive Video SAR (ViSAR) Based on Sub-Aperture Spectrum Fusion. Remote Sens. 2023, 15, 476. https://doi.org/10.3390/rs15020476
