Classification of Land Cover in Complex Terrain Using Gaofen-3 SAR Ascending and Descending Orbit Data

Abstract

1. Introduction

2. Materials and Methods

2.1. Study Area

2.2. Data and Preprocessing

2.2.1. Gaofen-3 Data and Preprocessing

2.2.2. Auxiliary Data

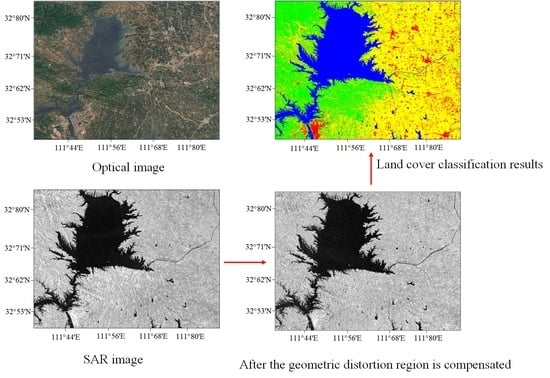

2.3. Geometric Distortion Region Analysis

2.4. Land-Cover Classification Method for Complex Terrain Area

2.4.1. Geometric Distortion Area Detection

- Dilation (expansion) operation:

- Erosion (corrosion) operation:
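The two morphological operations listed above (dilation, called expansion here, and erosion, called corrosion) can be sketched on a binary distortion mask as follows. This is a minimal NumPy sketch, not the authors' implementation: the square 3 × 3 structuring element, the function names `dilate` and `erode`, and the toy mask are all assumptions made for illustration.

```python
import numpy as np


def dilate(mask: np.ndarray, size: int = 3) -> np.ndarray:
    """Binary dilation with a size x size square structuring element (assumed shape)."""
    pad = size // 2
    rows, cols = mask.shape
    # Pixels outside the image are treated as background (False).
    padded = np.pad(mask.astype(bool), pad, mode="constant", constant_values=False)
    out = np.zeros(mask.shape, dtype=bool)
    for dy in range(size):
        for dx in range(size):
            # A pixel becomes True if ANY neighbour in the window is True.
            out |= padded[dy:dy + rows, dx:dx + cols]
    return out


def erode(mask: np.ndarray, size: int = 3) -> np.ndarray:
    """Binary erosion with a size x size square structuring element (assumed shape)."""
    pad = size // 2
    rows, cols = mask.shape
    padded = np.pad(mask.astype(bool), pad, mode="constant", constant_values=False)
    out = np.ones(mask.shape, dtype=bool)
    for dy in range(size):
        for dx in range(size):
            # A pixel stays True only if ALL neighbours in the window are True.
            out &= padded[dy:dy + rows, dx:dx + cols]
    return out


# Toy mask (hypothetical): a 3 x 3 distorted block with a one-pixel hole.
mask = np.zeros((7, 7), dtype=bool)
mask[2:5, 2:5] = True
mask[3, 3] = False

dilated = dilate(mask)
closed = erode(dilated)  # dilation followed by erosion is a morphological closing
```

Applying the operations in the listed order fills small holes inside a detected region while restoring its outer boundary, which is the usual motivation for a closing step on a detection mask.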

2.4.2. Geometric Distortion Area Compensation

{[M(i,j)∈AN]} →R(i,j) = M(i,j)
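The rule above can be illustrated with a short sketch. It rests on two assumptions that the text here does not confirm: that A_N denotes the non-distorted pixels of the master image M, and that pixels outside A_N are filled from the co-registered slave image wherever the slave image is itself undistorted. The function name `compensate` and all array names are hypothetical.

```python
import numpy as np


def compensate(master, slave, master_distorted, slave_distorted, fill_value=np.nan):
    """Build the compensated image R from two co-registered images.

    master_distorted / slave_distorted are boolean masks of geometric distortion.
    """
    master = np.asarray(master, dtype=float)
    slave = np.asarray(slave, dtype=float)
    master_ok = ~np.asarray(master_distorted, dtype=bool)
    slave_ok = ~np.asarray(slave_distorted, dtype=bool)

    result = np.full(master.shape, fill_value, dtype=float)
    # Stated rule: where M(i, j) lies in A_N, R(i, j) = M(i, j).
    result[master_ok] = master[master_ok]
    # Assumed complement: distorted master pixels take the undistorted slave value.
    filled = ~master_ok & slave_ok
    result[filled] = slave[filled]
    # Pixels distorted in both images keep fill_value (left uncompensated).
    return result, filled


# Toy example with hypothetical values.
m = np.array([[1.0, 2.0], [3.0, 4.0]])
s = np.array([[10.0, 20.0], [30.0, 40.0]])
m_bad = np.array([[False, True], [True, False]])
s_bad = np.array([[False, False], [True, False]])
r, filled = compensate(m, s, m_bad, s_bad)
```

Returning the `filled` mask alongside the image makes it easy to count how many distorted pixels were actually compensated.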

2.4.3. Feature Extraction

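- Entropy ($H$) indicates the randomness of target scattering: $H = -\sum_{i=1}^{2} p_i \log_2 p_i$, with $p_i = \lambda_i / (\lambda_1 + \lambda_2)$, where $\lambda_i$ ($i = 1, 2$) are the eigenvalues of the polarimetric covariance matrix $C_2$;

- Alpha ($\alpha$) is the mean scattering mechanism, ranging from surface scattering through volume scattering to dihedral scattering: $\alpha = \sum_{i=1}^{2} p_i \alpha_i$, where $\alpha_i$ is the internal degree of freedom of the scatterer, with a value ranging from 0° to 90°;

- Anisotropy ($A$) indicates the degree of anisotropy of target scattering: $A = (\lambda_1 - \lambda_2) / (\lambda_1 + \lambda_2)$.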
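The three descriptors above can be computed for a single pixel from the eigen-decomposition of its 2 × 2 matrix C2. The sketch below uses the standard dual-polarization definitions (entropy with a base-2 logarithm, alpha as the probability-weighted mean of the angles derived from the first eigenvector components); these definitions and the function name `h_a_alpha` are assumptions, not a transcription of the authors' code.

```python
import numpy as np


def h_a_alpha(c2):
    """Return (entropy, anisotropy, mean alpha in degrees) for a 2 x 2 Hermitian C2."""
    c2 = np.asarray(c2)
    # eigh returns eigenvalues in ascending order; reorder so lambda_1 >= lambda_2.
    eigvals, eigvecs = np.linalg.eigh(c2)
    eigvals = np.clip(eigvals[::-1], 0.0, None)
    eigvecs = eigvecs[:, ::-1]

    total = eigvals.sum()
    if total == 0:
        return 0.0, 0.0, 0.0

    p = eigvals / total                      # pseudo-probabilities p_i
    nonzero = p > 0                          # skip 0 * log2(0) terms
    entropy = float(-(p[nonzero] * np.log2(p[nonzero])).sum())
    anisotropy = float((eigvals[0] - eigvals[1]) / total)

    # alpha_i = arccos(|first component of eigenvector i|), in [0, 90] degrees.
    first = np.clip(np.abs(eigvecs[0, :]), 0.0, 1.0)
    alpha_i = np.degrees(np.arccos(first))
    alpha = float((p * alpha_i).sum())
    return entropy, anisotropy, alpha


# Two limiting cases (synthetic matrices, not Gaofen-3 data).
h_rand, a_rand, alpha_rand = h_a_alpha(np.eye(2))            # fully random scattering
h_det, a_det, alpha_det = h_a_alpha(np.diag([1.0, 0.0]))     # single deterministic mechanism
```

In practice C2 would be estimated per pixel by spatially averaging the HH and HV products; that averaging step is omitted here.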

2.4.4. J-M Distance
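As a point of reference for this subsection, the Jeffries-Matusita (J-M) distance between two classes is commonly computed from the Bhattacharyya distance under a Gaussian assumption, giving a separability score between 0 and 2. The sketch below follows that common formulation; the Gaussian assumption, the function name `jm_distance`, and the synthetic samples are assumptions rather than details taken from this paper.

```python
import numpy as np


def jm_distance(samples_a, samples_b):
    """J-M distance between two classes; inputs have shape (n_samples, n_features)."""
    a = np.asarray(samples_a, dtype=float)
    b = np.asarray(samples_b, dtype=float)
    mean_a, mean_b = a.mean(axis=0), b.mean(axis=0)
    cov_a = np.atleast_2d(np.cov(a, rowvar=False))
    cov_b = np.atleast_2d(np.cov(b, rowvar=False))
    cov_m = 0.5 * (cov_a + cov_b)

    diff = mean_a - mean_b
    # Bhattacharyya distance: mean-separation term plus covariance-mismatch term.
    term_mean = 0.125 * diff @ np.linalg.solve(cov_m, diff)
    _, logdet_m = np.linalg.slogdet(cov_m)
    _, logdet_a = np.linalg.slogdet(cov_a)
    _, logdet_b = np.linalg.slogdet(cov_b)
    term_cov = 0.5 * (logdet_m - 0.5 * (logdet_a + logdet_b))
    bhattacharyya = term_mean + term_cov

    # J-M distance saturates at 2 for fully separable classes.
    return float(2.0 * (1.0 - np.exp(-bhattacharyya)))


# Synthetic two-feature samples (hypothetical, for illustration only).
rng = np.random.default_rng(0)
class_a = rng.normal(0.0, 1.0, size=(500, 2))
class_b = class_a + 10.0          # same spread, strongly shifted mean

jm_same = jm_distance(class_a, class_a)
jm_far = jm_distance(class_a, class_b)
```

Values near 2 indicate that a feature combination separates the two classes well, while values near 0 indicate heavy overlap.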

2.4.5. 2D-CNN Classifier

- Uncompensated_DOPC_DpRVI: The feature combination of DOPC (backscattering coefficient, SPAN, DI, PR, H, A, α) and DpRVI based on the uncompensated HH and HV polarization images of Gaofen-3.

- Compensated_DOPC: The feature combination of DOPC based on compensated HH and HV polarization images of Gaofen-3.

- Compensated_DOPC_DpRVI: The feature combination of DOPC and DpRVI based on the compensated HH and HV polarization images of Gaofen-3.

2.4.6. Quantitative Analysis

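- Precision: $\mathrm{Precision} = \dfrac{TP}{TP + FP}$

- Recall: $\mathrm{Recall} = \dfrac{TP}{TP + FN}$

- F1_Score: $\mathrm{F1\_Score} = \dfrac{2 \times \mathrm{Precision} \times \mathrm{Recall}}{\mathrm{Precision} + \mathrm{Recall}}$

- OA: $\mathrm{OA} = \dfrac{TP + TN}{TP + TN + FP + FN}$

- Kappa coefficient: $\mathrm{Kappa} = \dfrac{\mathrm{OA} - p_e}{1 - p_e}$, where $p_e$ is the agreement expected by chance, TP is the number of positive samples predicted as positive, TN is the number of negative samples predicted as negative, FP is the number of negative samples predicted as positive, and FN is the number of positive samples predicted as negative.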
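The metrics listed above can all be derived from a confusion matrix. The following is a minimal sketch using the standard multi-class definitions, with per-class TP, FP, and FN read from the matrix and the chance agreement for Kappa taken from the row and column totals; the function name `classification_metrics` and the toy matrix are hypothetical and do not reproduce the paper's data.

```python
import numpy as np


def classification_metrics(confusion):
    """Per-class precision/recall/F1 plus OA and Kappa.

    confusion[i, j] = number of samples of reference class i predicted as class j.
    """
    cm = np.asarray(confusion, dtype=float)
    tp = np.diag(cm)
    fp = cm.sum(axis=0) - tp      # predicted as the class but belonging to another
    fn = cm.sum(axis=1) - tp      # belonging to the class but predicted as another

    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)

    total = cm.sum()
    oa = tp.sum() / total
    # Chance agreement from the marginal totals of the confusion matrix.
    p_e = (cm.sum(axis=0) * cm.sum(axis=1)).sum() / total**2
    kappa = (oa - p_e) / (1 - p_e)
    return {"precision": precision, "recall": recall, "f1": f1,
            "oa": float(oa), "kappa": float(kappa)}


# Toy two-class confusion matrix (hypothetical counts).
metrics = classification_metrics([[8, 2],
                                  [1, 9]])
```

For this toy matrix the overall accuracy is 0.85 and Kappa is 0.70, which shows how Kappa discounts the 0.5 agreement that would be expected by chance.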

3. Results and Discussion

3.1. Geometric Distortion Region Analysis

3.2. Geometric Distortion Area Detection

3.3. Geometric Distortion Area Compensation

3.4. J-M Distance Analysis of Feature Combinations

3.5. Quantitative Analysis and Discussion

3.5.1. Quantitative Evaluation

3.5.2. Qualitative Evaluation

4. Discussion

5. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Gaofen-3 Parameter | Master Image | Slave Image |
|---|---|---|
| Product | SLC | SLC |
| Imaging mode | Fine stripe mode II | Fine stripe mode II |
| Incidence angle (°) | 31.597256 | 31.783311 |
| Polarization | HH, HV | HH, HV |
| Pixel spacing | 10 × 10 m | 10 × 10 m |
| Band | C | C |
| Pass direction | Ascending | Descending |
| Date | 9 July 2017 | 9 July 2017 |

| Parameter | Formula |
|---|---|
| XS | |
| YS | |
| ZS | |
| XV | |
| YV | |
| ZV | |

| Feature combination | Metric | Building | Farmland | Woodland | Water | OA | Kappa |
|---|---|---|---|---|---|---|---|
| Uncompensated_DOPC_DpRVI | Precision | 0.83 | 0.86 | 0.84 | 0.98 | 0.88 | 0.86 |
| | Recall | 0.73 | 0.94 | 0.86 | 0.99 | | |
| | F1_Score | 0.78 | 0.90 | 0.85 | 0.99 | | |
| Compensated_DOPC | Precision | 0.90 | 0.86 | 0.76 | 0.99 | 0.89 | 0.87 |
| | Recall | 0.88 | 0.92 | 0.80 | 0.98 | | |
| | F1_Score | 0.89 | 0.88 | 0.78 | 0.99 | | |
| Compensated_DOPC_DpRVI | Precision | 0.93 | 0.89 | 0.90 | 0.99 | 0.93 | 0.92 |
| | Recall | 0.91 | 0.95 | 0.93 | 0.98 | | |
| | F1_Score | 0.92 | 0.92 | 0.91 | 0.98 | | |

| Region | Number of pixels | Area (km²) |
|---|---|---|
| Geometric distortion region | 3,460,644 | 346.1 |
| Compensation area | 2,986,287 | 298.6 |
| Study area | 14,792,088 | 1479.2 |
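The areas in this table follow directly from the pixel counts and the 10 × 10 m pixel size given in the Gaofen-3 parameter table. The short sketch below reproduces that conversion; the function name `pixels_to_km2` is hypothetical.

```python
def pixels_to_km2(n_pixels: int, spacing_m: float = 10.0) -> float:
    """Convert a pixel count to area in square kilometres for square pixels."""
    # Each pixel covers spacing_m * spacing_m square metres; 1 km^2 = 1e6 m^2.
    return n_pixels * spacing_m ** 2 / 1e6


# Pixel counts taken from the table above.
pixel_counts = {
    "Geometric distortion region": 3_460_644,
    "Compensation area": 2_986_287,
    "Study area": 14_792_088,
}

areas_km2 = {name: round(pixels_to_km2(n), 1) for name, n in pixel_counts.items()}
```

The rounded results match the tabulated values of 346.1, 298.6, and 1479.2 km².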

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Wang, H.; Yang, H.; Huang, Y.; Wu, L.; Guo, Z.; Li, N. Classification of Land Cover in Complex Terrain Using Gaofen-3 SAR Ascending and Descending Orbit Data. Remote Sens. 2023, 15, 2177. https://doi.org/10.3390/rs15082177
