FEPVNet: A Network with Adaptive Strategies for Cross-Scale Mapping of Photovoltaic Panels from Multi-Source Images

Abstract

1. Introduction

2. Datasets

3. Methodology

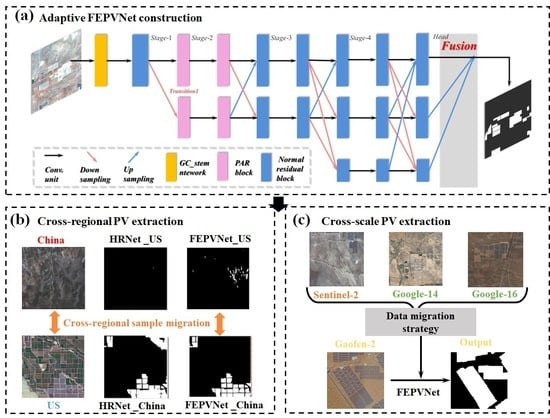

3.1. Proposal of a Filter-Embedded Neural Network

3.1.1. Stem Network Embedded Filtering

Edge detection follows the four steps of the Canny algorithm:

1. Smooth the image. The image is convolved with a two-dimensional Gaussian kernel, so that each output pixel is a weighted average of its neighborhood. This filters out high-frequency noise in the image. The kernel is

   $$G(x, y) = \frac{1}{2\pi\sigma^{2}} \exp\left(-\frac{x^{2} + y^{2}}{2\sigma^{2}}\right)$$

   where $(x, y)$ is the offset from the kernel center and $\sigma$ is the standard deviation.

2. Compute the gradient. Edges are located from the gradient magnitude and gradient direction of the smoothed image $I$, both calculated with the Sobel operator:

   $$G_x = \begin{bmatrix} +1 & 0 & -1 \\ +2 & 0 & -2 \\ +1 & 0 & -1 \end{bmatrix} * I, \qquad G_y = \begin{bmatrix} +1 & +2 & +1 \\ 0 & 0 & 0 \\ -1 & -2 & -1 \end{bmatrix} * I$$

   $$G = \sqrt{G_x^{2} + G_y^{2}}, \qquad \theta = \arctan\left(\frac{G_y}{G_x}\right)$$

   where $*$ denotes two-dimensional convolution.

3. Suppress non-maxima. To remove non-boundary points, non-maximum suppression is applied to the entire image: the gradient magnitude of each pixel is compared with the magnitudes of its two neighbors along the gradient direction. The pixel is retained only if its magnitude is the largest of the three; otherwise it is suppressed (set to 0).

4. Apply the double threshold. A weak (low) and a strong (high) threshold are defined. Pixels whose gradient magnitude is below the weak threshold are set to 0, and those above the strong threshold are set to 255. A pixel whose magnitude lies between the two thresholds is set to 255 if at least one pixel in its eight-neighborhood exceeds the strong threshold, and to 0 otherwise. The retained pixels are then connected to form the object's edges.
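The four Canny steps can be sketched in NumPy as follows. This is an illustrative, unoptimized implementation under stated assumptions, not the FEPVNet code: a 5×5 Gaussian kernel with σ = 1, edge-replicated image borders, gradient directions quantized to 0°, 45°, 90° and 135° for non-maximum suppression, and the single-pass eight-neighbor check of step 4 (full hysteresis would instead follow chains of weak pixels). The function names and threshold values are hypothetical, and `sliding_window_view` requires NumPy 1.20 or later.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def gaussian_kernel(size: int = 5, sigma: float = 1.0) -> np.ndarray:
    """Sampled 2-D Gaussian, normalized so the weights sum to 1 (step 1)."""
    ax = np.arange(size) - (size - 1) / 2.0
    xx, yy = np.meshgrid(ax, ax)
    k = np.exp(-(xx**2 + yy**2) / (2.0 * sigma**2))
    return k / k.sum()


def filter2d(img: np.ndarray, kernel: np.ndarray) -> np.ndarray:
    """Weighted sum over a sliding window (cross-correlation), edge-replicated borders."""
    ph, pw = kernel.shape[0] // 2, kernel.shape[1] // 2
    padded = np.pad(img, ((ph, ph), (pw, pw)), mode="edge")
    windows = sliding_window_view(padded, kernel.shape)
    return np.einsum("ijkl,kl->ij", windows, kernel)


# Sobel kernels written for cross-correlation: positive response when intensity
# increases to the right (x) or downwards (y).
SOBEL_X = np.array([[-1, 0, 1], [-2, 0, 2], [-1, 0, 1]], dtype=float)
SOBEL_Y = SOBEL_X.T


def canny(img: np.ndarray, low: float, high: float, sigma: float = 1.0) -> np.ndarray:
    # Step 1: Gaussian smoothing.
    smoothed = filter2d(img.astype(float), gaussian_kernel(5, sigma))
    # Step 2: Sobel gradient magnitude and direction, folded into [0, 180) degrees.
    gx = filter2d(smoothed, SOBEL_X)
    gy = filter2d(smoothed, SOBEL_Y)
    mag = np.hypot(gx, gy)
    angle = np.rad2deg(np.arctan2(gy, gx)) % 180.0

    # Step 3: non-maximum suppression along the quantized gradient direction.
    h, w = mag.shape
    nms = np.zeros_like(mag)
    for i in range(1, h - 1):
        for j in range(1, w - 1):
            a = angle[i, j]
            if a < 22.5 or a >= 157.5:  # horizontal gradient: compare left/right
                n1, n2 = mag[i, j - 1], mag[i, j + 1]
            elif a < 67.5:  # down-right diagonal (row index grows downwards)
                n1, n2 = mag[i - 1, j - 1], mag[i + 1, j + 1]
            elif a < 112.5:  # vertical gradient: compare up/down
                n1, n2 = mag[i - 1, j], mag[i + 1, j]
            else:  # down-left diagonal
                n1, n2 = mag[i - 1, j + 1], mag[i + 1, j - 1]
            if mag[i, j] >= n1 and mag[i, j] >= n2:
                nms[i, j] = mag[i, j]

    # Step 4: double threshold. Strong pixels are kept; weak pixels are kept
    # only if at least one of their eight neighbors is strong.
    strong = nms > high
    weak = (nms >= low) & ~strong
    padded = np.pad(strong, 1)
    strong_neighbor = np.zeros_like(strong)
    for di in (-1, 0, 1):
        for dj in (-1, 0, 1):
            if di or dj:
                strong_neighbor |= padded[1 + di:1 + di + h, 1 + dj:1 + dj + w]
    edges = strong | (weak & strong_neighbor)
    return np.where(edges, 255, 0).astype(np.uint8)
```

The explicit loops keep each step readable; for real imagery, OpenCV's `cv2.Canny(image, threshold1, threshold2)` provides an optimized implementation with full hysteresis.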

Median filtering is carried out as follows:

1. Slide the filter window across the image so that the center of the window lies on each pixel in turn.
2. Collect the gray values of all pixels inside the window.
3. Sort these gray values in ascending order and take the middle element of the sorted list as the median.
4. Assign the median value to the pixel at the window's center.
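A direct translation of these four steps into NumPy is sketched below. It assumes a 2-D grayscale image, a square window of odd size, and edge-replicated borders (the text does not say how borders are handled); `median_filter` is a hypothetical helper name, not the authors' code.

```python
import numpy as np


def median_filter(img: np.ndarray, size: int = 3) -> np.ndarray:
    """Replace each pixel by the median gray value of the size x size window around it."""
    if size % 2 == 0:
        raise ValueError("window size must be odd so the window has a center pixel")
    r = size // 2
    # Assumption: borders are handled by replicating the edge pixels.
    padded = np.pad(img, r, mode="edge")
    out = np.empty_like(img)
    h, w = img.shape
    for i in range(h):
        for j in range(w):
            # Steps 1-2: window centered on pixel (i, j); collect its gray values.
            window = padded[i:i + size, j:j + size]
            # Step 3: flatten, sort ascending and take the middle element.
            values = np.sort(window, axis=None)
            # Step 4: write the median to the center pixel.
            out[i, j] = values[values.size // 2]
    return out
```

Because the median ignores isolated extreme values, a single bright "salt" pixel in a uniform region is removed entirely rather than blurred. Optimized equivalents exist in `scipy.ndimage.median_filter` and `cv2.medianBlur`, each with its own default border handling.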

3.1.2. The Main Body Network Adaptability Improvements

3.2. Evaluation of the Model Adaptability

3.3. Evaluation Metrics
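The result tables report recall, precision, F1-score and IoU for the PV-panel class. A minimal sketch of the standard pixel-wise definitions follows, assuming binary prediction and ground-truth masks (1 = PV panel). Whether the authors pool pixel counts over the whole test set or average per image is not stated here, and all function names are hypothetical.

```python
import numpy as np


def _ratio(num: float, den: float) -> float:
    # Guard against empty masks (no positives predicted or present).
    return num / den if den else 0.0


def segmentation_metrics(pred, truth) -> dict:
    """Pixel-wise recall, precision, F1 and IoU for the positive (PV) class."""
    pred = np.asarray(pred, dtype=bool)
    truth = np.asarray(truth, dtype=bool)
    tp = np.count_nonzero(pred & truth)   # PV pixels predicted as PV
    fp = np.count_nonzero(pred & ~truth)  # background predicted as PV
    fn = np.count_nonzero(~pred & truth)  # PV pixels missed
    recall = _ratio(tp, tp + fn)
    precision = _ratio(tp, tp + fp)
    f1 = _ratio(2 * precision * recall, precision + recall)
    iou = _ratio(tp, tp + fp + fn)
    return {"recall": recall, "precision": precision, "f1": f1, "iou": iou}


def f1_from_pr(precision: float, recall: float) -> float:
    """Harmonic mean of precision and recall."""
    return _ratio(2 * precision * recall, precision + recall)


def iou_from_f1(f1: float) -> float:
    # IoU = TP/(TP+FP+FN) and F1 = 2TP/(2TP+FP+FN) together imply IoU = F1 / (2 - F1).
    return f1 / (2.0 - f1)


m = segmentation_metrics([[1, 1, 0], [0, 1, 0]], [[1, 0, 0], [0, 1, 1]])
# TP = 2, FP = 1, FN = 1, so recall = precision = F1 = 2/3 and IoU = 0.5
```

The identity IoU = F1 / (2 − F1) offers a quick consistency check on the tables: for the China HRNet row (precision 0.9489, recall 0.9052), `f1_from_pr` gives F1 ≈ 0.9265 and `iou_from_f1` gives IoU ≈ 0.8631, matching the reported values.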

4. Results

4.1. Ablation Experiment of Filter-Embedded Neural Network

4.1.1. The Results of Different Stem Models

4.1.2. The Results of Models in Different Stages

4.2. The Filter-Embedded Neural Network for PV Panel Mapping

4.3. The Adaptability of the Model under Different Regions

4.4. The Adaptability of the Model under Multi-Source Images

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Dataset Name | Resolution | Region | Training Samples | Validation Samples | Test Samples |
|---|---|---|---|---|---|
| Sentinel-2 | 10 m | China | 1037 | 49 | 177 |
| Sentinel-2 | 10 m | US | 426 | 51 | 98 |
| Google-14 | 10 m | China | 1000 | 0 | 0 |
| Google-16 | 2 m | China | 1000 | 0 | 0 |
| Gaofen-2 | 2 m | China | 0 | 52 | 118 |

| Region | Model | Recall | Precision | F1-Score | IoU |
|---|---|---|---|---|---|
| China | stem | 0.9052 | 0.9489 | 0.9265 | 0.8631 |
| | LG_stem | 0.8965 | 0.9336 | 0.9147 | 0.8428 |
| | SG_stem | 0.8830 | 0.9057 | 0.8942 | 0.8087 |
| | CG_stem | 0.9065 | 0.9226 | 0.9145 | 0.8425 |
| | CM_stem | 0.9315 | 0.9472 | 0.9393 | 0.8856 |
| US | stem | 0.9521 | 0.9595 | 0.9558 | 0.9153 |
| | LG_stem | 0.9498 | 0.9564 | 0.9531 | 0.9105 |
| | SG_stem | 0.9444 | 0.9443 | 0.9444 | 0.8946 |
| | CG_stem | 0.9541 | 0.9700 | 0.9620 | 0.9268 |
| | CM_stem | 0.9619 | 0.9691 | 0.9655 | 0.9333 |

| Region | Model | Recall | Precision | F1-Score | IoU | Params | FLOPs |
|---|---|---|---|---|---|---|---|
| China | PAR_stage2 | 0.9306 | 0.9423 | 0.9364 | 0.8805 | 65,939,858 | 376.34 G |
| | PAR_stage3 | 0.9241 | 0.9372 | 0.9306 | 0.8702 | 67,400,786 | 385.47 G |
| | PAR_stage4 | 0.9281 | 0.9481 | 0.9380 | 0.8833 | 70,555,922 | 385.45 G |
| | DDS_stage2 | 0.9202 | 0.9369 | 0.9285 | 0.8665 | 65,120,210 | 355.52 G |
| | DDS_stage3 | 0.9247 | 0.9149 | 0.9198 | 0.8515 | 53,557,586 | 260.13 G |
| | DDS_stage4 | 0.9217 | 0.9265 | 0.9241 | 0.8590 | 28,401,362 | 259.82 G |
| | SDS_stage2 | 0.9215 | 0.9366 | 0.9290 | 0.8675 | 65,068,946 | 354.14 G |
| | SDS_stage3 | 0.9147 | 0.9388 | 0.9266 | 0.8633 | 52,735,058 | 252.09 G |
| | SDS_stage4 | 0.9277 | 0.9380 | 0.9328 | 0.8742 | 25,973,522 | 251.93 G |
| US | PAR_stage2 | 0.9422 | 0.9655 | 0.9537 | 0.9116 | 65,939,858 | 376.34 G |
| | PAR_stage3 | 0.9318 | 0.9605 | 0.9459 | 0.8975 | 67,400,786 | 385.47 G |
| | PAR_stage4 | 0.9467 | 0.9615 | 0.9540 | 0.9122 | 70,555,922 | 385.45 G |
| | DDS_stage2 | 0.9409 | 0.9650 | 0.9528 | 0.9099 | 65,120,210 | 355.52 G |
| | DDS_stage3 | 0.9361 | 0.9617 | 0.9487 | 0.9025 | 53,557,586 | 260.13 G |
| | DDS_stage4 | 0.9410 | 0.9582 | 0.9495 | 0.9039 | 28,401,362 | 259.82 G |
| | SDS_stage2 | 0.9439 | 0.9609 | 0.9523 | 0.9090 | 65,068,946 | 354.14 G |
| | SDS_stage3 | 0.9417 | 0.9690 | 0.9552 | 0.9142 | 52,735,058 | 252.09 G |
| | SDS_stage4 | 0.9412 | 0.9633 | 0.9521 | 0.9087 | 25,973,522 | 251.93 G |

| Region | Model | Recall | Precision | F1-Score | IoU | Params | FLOPs |
|---|---|---|---|---|---|---|---|
| China | U-Net | 0.4174 | 0.5316 | 0.4676 | 0.3052 | 31,054,344 | 64,914,029 |
| | HRNet | 0.9052 | 0.9489 | 0.9265 | 0.8631 | 65,847,122 | 374.51 G |
| | FEPVNet | 0.9309 | 0.9493 | 0.9400 | 0.8868 | 65,939,858 | 376.34 G |
| | SwinTransformer | 0.9309 | 0.9460 | 0.9384 | 0.8840 | 59,830,000 | 936.71 G |
| | FESPVNet | 0.9246 | 0.9503 | 0.9373 | 0.8820 | 26,066,258 | 253.77 G |
| US | U-Net | 0.8717 | 0.6224 | 0.7262 | 0.5702 | 31,054,344 | 64,914,029 |
| | HRNet | 0.9521 | 0.9595 | 0.9558 | 0.9153 | 65,847,122 | 374.51 G |
| | FEPVNet | 0.9641 | 0.9695 | 0.9668 | 0.9358 | 65,939,858 | 376.34 G |
| | SwinTransformer | 0.9591 | 0.9726 | 0.9658 | 0.9339 | 59,830,000 | 936.71 G |
| | FESPVNet | 0.9567 | 0.9679 | 0.9623 | 0.9273 | 26,066,258 | 253.77 G |

| Region | Model | Recall | Precision | F1-Score | IoU |
|---|---|---|---|---|---|
| China | HRNet_US | 0.3755 | 0.9372 | 0.5362 | 0.3663 |
| | FEPVNet_US | 0.4645 | 0.9539 | 0.6248 | 0.4544 |
| US | HRNet_China | 0.8288 | 0.4869 | 0.6134 | 0.4424 |
| | FEPVNet_China | 0.6872 | 0.6221 | 0.6530 | 0.4848 |

| Model | Strategy | Recall | Precision | F1-Score | IoU |
|---|---|---|---|---|---|
| HRNet | Sentinel-2 | 0.2620 | 0.9216 | 0.4084 | 0.2563 |
| | Sentinel-2 + Google-14 | 0.3346 | 0.9036 | 0.4884 | 0.3231 |
| | Sentinel-2 + Google-16 | 0.8940 | 0.9162 | 0.9050 | 0.8265 |
| | Sentinel-2 + Google-14 + Google-16 | 0.8889 | 0.9269 | 0.9075 | 0.8308 |
| FEPVNet | Sentinel-2 | 0.2883 | 0.9083 | 0.4377 | 0.2801 |
| | Sentinel-2 + Google-14 | 0.6681 | 0.8724 | 0.7567 | 0.6086 |
| | Sentinel-2 + Google-16 | 0.8864 | 0.9437 | 0.9142 | 0.8419 |
| | Sentinel-2 + Google-14 + Google-16 | 0.9084 | 0.9192 | 0.9138 | 0.8413 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2023 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Su, B.; Du, X.; Mu, H.; Xu, C.; Li, X.; Chen, F.; Luo, X. FEPVNet: A Network with Adaptive Strategies for Cross-Scale Mapping of Photovoltaic Panels from Multi-Source Images. Remote Sens. 2023, 15, 2469. https://doi.org/10.3390/rs15092469