Compound Jamming Recognition Based on a Dual-Channel Neural Network and Feature Fusion

Abstract

1. Introduction

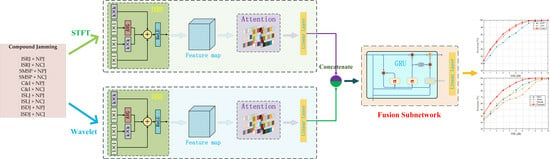

- Compound jamming signals that combine noise suppression jamming with deception jamming are considered. To enrich the feature space and strengthen the representation of compound jamming, features obtained from the time-frequency transform and the wavelet transform are fed in parallel to the two channels of the designed network.

- To improve the extraction and learning of task-relevant features, the diverse branch block (DBB) structure and a parameter-free attention module are incorporated into the proposed network. A gated recurrent unit (GRU)-based subnetwork is then designed for feature fusion to further improve recognition performance.

- Compared with three existing recognition methods, the proposed method achieves higher recognition accuracy with lower time complexity across different jamming-to-noise ratios (JNRs). In addition, semi-measured jamming signals are used to validate the feasibility and generalization ability of the proposed method.
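To make the idea of a parameter-free attention module concrete, the following is a minimal NumPy sketch. It assumes a SimAM-style energy weighting; the text above does not say which parameter-free module is used, so this choice is an assumption. The function names `simam_weights` and `simam_attention` and the regularizer `lam` are hypothetical names introduced only for illustration.

```python
import numpy as np


def simam_weights(x, lam=1e-4):
    """Per-element attention weights for a feature map of shape (C, H, W).

    Assumed SimAM-style rule: elements that deviate strongly from their
    channel mean receive larger weights. No learnable parameters are used.
    """
    _, h, w = x.shape
    n = h * w - 1                                  # neighbours per element
    mu = x.mean(axis=(1, 2), keepdims=True)        # per-channel mean
    d = (x - mu) ** 2                              # squared deviation
    var = d.sum(axis=(1, 2), keepdims=True) / n    # per-channel variance
    e_inv = d / (4.0 * (var + lam)) + 0.5          # inverse energy
    return 1.0 / (1.0 + np.exp(-e_inv))            # sigmoid gate


def simam_attention(x, lam=1e-4):
    """Reweight the feature map with the weights above."""
    return x * simam_weights(x, lam)


if __name__ == "__main__":
    # Hypothetical feature map sized like the 56 x 56 x 64 stage.
    feat = np.random.default_rng(0).standard_normal((64, 56, 56))
    print(simam_attention(feat).shape)
```

Because the weights are computed from channel statistics alone, a module of this kind adds no trainable parameters to the backbone, which is the property the contribution list emphasizes.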

2. Materials

2.1. Jamming Models

2.1.1. Intermittent Sampling and Forwarding Jamming (ISFJ)

2.1.2. Chopping and Interleaving Jamming

2.1.3. Smeared Spectrum Jamming

2.1.4. Noise Convolutional Jamming (NCJ)

2.1.5. Noise Productive Jamming (NPJ)

2.1.6. Compound Jamming Models

2.2. Feature Extraction

2.2.1. The Short-Time Fourier Transform

2.2.2. The Continuous Wavelet Transform (CWT)

3. Approach

3.1. The Structure of the Proposed Network

3.2. The Subnetwork for Feature Fusion

3.3. Simulation and Training Configurations

4. Results

4.1. Recognition Performance of the Proposed Method

4.2. Comparisons with Existing Methods

4.2.1. Recognition Performance Comparison

4.2.2. Fusion Strategy Comparison

4.2.3. The Computational Complexity

5. Discussion

6. Conclusions

Author Contributions

Funding

Data Availability Statement

Conflicts of Interest


| Input Size | Output Size | Layers/Modules | Kernel, Stride, Padding |
|---|---|---|---|
| 224 × 224 × 3 | 112 × 112 × 64 | Conv-1 | 7, 2, 3 |
| 112 × 112 × 64 | 56 × 56 × 64 | Max pool | 3, 2, 1 |
| 56 × 56 × 64 | 56 × 56 × 64 | Module-1 × 2 | DBB 3, 1, 1 + Attention |
| 56 × 56 × 64 | 28 × 28 × 128 | Module-2 × 2 | DBB 3, 1, 1 + Attention |
| 28 × 28 × 128 | 14 × 14 × 256 | Module-3 × 2 | DBB 3, 1, 1 + Attention |
| 14 × 14 × 256 | 7 × 7 × 512 | Module-4 × 2 | DBB 3, 1, 1 + Attention |
| 7 × 7 × 512 | 1 × 1 × 512 | Average pool | 7, 1, 0 |
| 512 | 10 | Linear | - |
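
The spatial sizes in the table above can be checked with the standard output-size formula for convolution and pooling layers, floor((n + 2p - k) / s) + 1. The short sketch below applies it to the rows whose kernel, stride, and padding are fully listed. The helper name `out_size` is hypothetical.

```python
def out_size(n, kernel, stride, padding):
    """Spatial output size of a conv/pool layer along one dimension."""
    return (n + 2 * padding - kernel) // stride + 1


conv1 = out_size(224, kernel=7, stride=2, padding=3)      # Conv-1
pooled = out_size(conv1, kernel=3, stride=2, padding=1)   # Max pool
module1 = out_size(pooled, kernel=3, stride=1, padding=1) # 3, 1, 1 conv keeps size
head = out_size(7, kernel=7, stride=1, padding=0)         # Average pool

print(conv1, pooled, module1, head)  # 112 56 56 1
```

A 3, 1, 1 convolution preserves the spatial size, so the halving between modules (for example 56 to 28) must come from a downsampling step the table does not spell out. Under the assumption of a stride of 2 in that step, `out_size(56, 3, 2, 1)` gives 28, which matches the listed output size.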

| Compound Jamming | Proposed Method (%) | MBv2 (%) | JRNet (%) | IResNet (%) |
|---|---|---|---|---|
| ISRJ + NPJ | 88.69 | 86.20 | 82.81 | 82.14 |
| ISRJ + NCJ | 72.59 | 54.14 | 47.04 | 77.02 |
| SMSP + NPJ | 93.98 | 82.18 | 89.23 | 87.83 |
| SMSP + NCJ | 99.14 | 99.59 | 71.23 | 98.37 |
| C&I + NPJ | 86.07 | 77.02 | 89.14 | 82.86 |
| C&I + NCJ | 79.10 | 45.77 | 90.95 | 36.23 |
| ISLJ + NPJ | 91.81 | 82.41 | 75.40 | 83.94 |
| ISLJ + NCJ | 74.90 | 71.28 | 28.90 | 43.19 |
| ISDJ + NPJ | 93.22 | 92.63 | 89.60 | 90.64 |
| ISDJ + NCJ | 67.80 | 65.90 | 37.99 | 75.58 |
| mOA | 84.73 | 75.71 | 70.23 | 75.78 |
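
The mOA row can be reproduced from the per-class rows. The sketch below reads mOA as the unweighted mean of the ten per-class accuracies; that reading is an assumption, although the tabulated values are consistent with it. The names `per_class` and `mean_overall_accuracy` are hypothetical.

```python
# Per-class accuracies (%) copied from the table above, in row order.
per_class = {
    "Proposed": [88.69, 72.59, 93.98, 99.14, 86.07,
                 79.10, 91.81, 74.90, 93.22, 67.80],
    "MBv2":     [86.20, 54.14, 82.18, 99.59, 77.02,
                 45.77, 82.41, 71.28, 92.63, 65.90],
    "JRNet":    [82.81, 47.04, 89.23, 71.23, 89.14,
                 90.95, 75.40, 28.90, 89.60, 37.99],
    "IResNet":  [82.14, 77.02, 87.83, 98.37, 82.86,
                 36.23, 83.94, 43.19, 90.64, 75.58],
}


def mean_overall_accuracy(values):
    """Unweighted mean of per-class accuracies (assumed definition of mOA)."""
    return sum(values) / len(values)


moa = {name: mean_overall_accuracy(v) for name, v in per_class.items()}
for name, value in moa.items():
    print(f"{name}: {value:.2f}")
```

Averaging this way weights every compound jamming type equally, so a method that is strong on most classes but weak on a few (for example the NCJ combinations) is penalized in proportion to how many classes it misses.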

| Compound Jamming | Proposed Method | MBv2 | JRNet | IResNet |
|---|---|---|---|---|
| ISRJ + NPJ | 0.8915 | 0.845 | 0.8389 | 0.8405 |
| ISRJ + NCJ | 0.7231 | 0.6401 | 0.5588 | 0.5856 |
| SMSP + NPJ | 0.9475 | 0.8885 | 0.8239 | 0.9186 |
| SMSP + NCJ | 0.9956 | 0.9979 | 0.8319 | 0.9917 |
| C&I + NPJ | 0.8989 | 0.8453 | 0.8015 | 0.8454 |
| C&I + NCJ | 0.8093 | 0.6163 | 0.5344 | 0.5257 |
| ISLJ + NPJ | 0.9262 | 0.8361 | 0.8432 | 0.8652 |
| ISLJ + NCJ | 0.7409 | 0.5848 | 0.4479 | 0.5691 |
| ISDJ + NPJ | 0.8755 | 0.7993 | 0.8021 | 0.8118 |
| ISDJ + NCJ | 0.6698 | 0.5532 | 0.4998 | 0.6071 |
| Average | 0.8478 | 0.7603 | 0.6981 | 0.7561 |

| Metric | Proposed Method | MBv2 | JRNet | IResNet |
|---|---|---|---|---|
| Time (ms) | 11.38 | 24.23 | 24.56 | 11.71 |
| LPs (M) | 11.4 | 2 | 11.69 | 4.04 |
| FLOPs (G) | 1.82 | 0.82 | 1.82 | 0.398 |

Disclaimer/Publisher’s Note: The statements, opinions and data contained in all publications are solely those of the individual author(s) and contributor(s) and not of MDPI and/or the editor(s). MDPI and/or the editor(s) disclaim responsibility for any injury to people or property resulting from any ideas, methods, instructions or products referred to in the content.

© 2024 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).

Citation

Chen, H.; Chen, H.; Lei, Z.; Zhang, L.; Li, B.; Zhang, J.; Wang, Y. Compound Jamming Recognition Based on a Dual-Channel Neural Network and Feature Fusion. Remote Sens. 2024, 16, 1325. https://doi.org/10.3390/rs16081325
