Learning a Transferable Change Rule from a Recurrent Neural Network for Land Cover Change Detection

Abstract

1. Introduction

- (1) Image algebra: Image differencing and image ratioing are widely used to detect changes directly between multi-temporal images. Image differencing (the subtraction rule) is a robust and efficient method, and Change Vector Analysis (CVA) [8] is its conceptual extension, with an integrated theoretical framework and good performance.

- (2) Post-classification: The multi-temporal images are classified independently, and land cover changes are then identified by comparing the resulting maps. Numerous classification methods [9,10] have therefore been proposed to improve change detection accuracy. In particular, a change-detection-driven transfer learning approach [11] was proposed to update land cover maps via the classification of image time series.

- (3) Feature learning and transformation: In this category, learned (transformed) or selected features are used to distinguish changes, typically with a distance metric. Both physically meaningful features and learned change features perform well and have been applied in various domains. As physically meaningful features, vegetation indices, forest canopy variables and water indices are often extracted to identify changes in specific ground-object types [12,13]. For learned features and transformations, a feature space is learned that highlights the change information, so that changed regions are detected more easily than with the original spectral information of the multi-temporal images. Examples include Principal Component Analysis (PCA) [14], Multivariate Alteration Detection (MAD) [15], subspace learning [16,17], sparse learning [18] and slow features [19].

- (4) Other advanced methods: Change detection can be formulated as a statistical hypothesis test using physical models [20]. Metric learning [21] is also effective, detecting changes with well-learned distances. In addition, canonical correlation analysis [22,23] and clustering methods [24,25] have been proposed and perform well in unsupervised change detection tasks.
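As a minimal sketch of the image algebra category, the snippet below applies the subtraction rule to two co-registered images and takes the per-pixel magnitude of the spectral change vector, which is the quantity a CVA-style detector thresholds. The array shapes, the function names `change_magnitude` and `detect_changes`, and the fixed threshold are illustrative assumptions, not details taken from the cited methods.

```python
import numpy as np


def change_magnitude(img_t1, img_t2):
    """Per-pixel Euclidean norm of the spectral difference vector.

    Both inputs are assumed to be co-registered arrays of shape
    (rows, cols, bands).
    """
    diff = img_t2.astype(np.float64) - img_t1.astype(np.float64)
    return np.sqrt((diff ** 2).sum(axis=-1))


def detect_changes(img_t1, img_t2, threshold):
    """Binary change map: True where the magnitude exceeds the threshold."""
    return change_magnitude(img_t1, img_t2) > threshold


# Toy 2x2 scene with 3 bands (hypothetical values); only the top-left pixel changes.
t1 = np.zeros((2, 2, 3))
t2 = np.zeros((2, 2, 3))
t2[0, 0] = [3.0, 4.0, 0.0]

magnitude = change_magnitude(t1, t2)
change_map = detect_changes(t1, t2, threshold=1.0)
```

In practice the threshold would be chosen from the magnitude distribution rather than fixed by hand; the constant here only keeps the example short.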

2. Image Preparation

3. Method

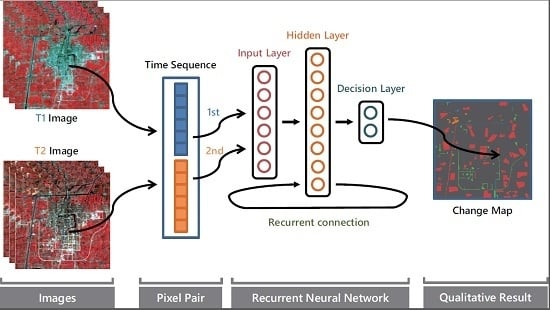

3.1. Our Proposed REFEREE Model

3.2. LSTM Hidden Unit and Forward Pass of the REFEREE Model

3.3. Optimization

4. Experimental Setup and Design

4.1. Competitors

4.2. Setup of Parameters

4.3. Experimental Design

5. Results and Discussion

5.1. Results and Discussion of the Binary Experiments

5.2. Results and Discussion of the Transfer Experiments

5.3. Results and Discussion of the Multi-Class Change Experiments

6. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Hermosilla, T.; Wulder, M.A.; White, J.C.; Coops, N.C.; Hobart, G.W. Regional detection, characterization, and attribution of annual forest change from 1984 to 2012 using Landsat-derived time series metrics. Remote Sens. Environ. 2015, 170, 121–132.

- Singh, A. Digital change detection techniques using remotely-sensed data. Int. J. Remote Sens. 1989, 10, 989–1003.

- Yuan, Y.; Meng, Y.; Lin, L.; Sahli, H.; Yue, A.; Chen, J.B.; Zhao, Z.M.; Kong, Y.L.; He, D.X. Continuous Change Detection and Classification Using Hidden Markov Model: A Case Study for Monitoring Urban Encroachment onto Farmland in Beijing. Remote Sens. 2015, 7, 15318–15339.

- Koltunov, A.; Ustin, S.L. Early fire detection using non-linear multitemporal prediction of thermal imagery. Remote Sens. Environ. 2007, 110, 18–28.

- Wen, D.; Huang, X.; Zhang, L.; Benediktsson, J.A. A novel automatic change detection method for urban high-resolution remotely sensed imagery based on multiindex scene representation. IEEE Trans. Geosci. Remote Sens. 2016, 54, 609–625.

- Shapiro, A.C.; Trettin, C.C.; Küchly, H.; Alavinapanah, S.; Bandeira, S. The Mangroves of the Zambezi Delta: Increase in Extent Observed via Satellite from 1994 to 2013. Remote Sens. 2015, 7, 16504–16518.

- Robson, B.A.; Hölbling, D.; Nuth, C.; Strozzi, T.; Dahl, S.O. Decadal Scale Changes in Glacier Area in the Hohe Tauern National Park (Austria) Determined by Object-Based Image Analysis. Remote Sens. 2016, 8.

- Bovolo, F.; Bruzzone, L. A theoretical framework for unsupervised change detection based on change vector analysis in the polar domain. IEEE Trans. Geosci. Remote Sens. 2007, 45, 218–236.

- Sinha, P.; Kumar, L.; Reid, N. Rank-Based Methods for Selection of Landscape Metrics for Land Cover Pattern Change Detection. Remote Sens. 2016, 8, 107.

- Basnet, B.; Vodacek, A. Tracking Land Use/Land Cover Dynamics in Cloud Prone Areas Using Moderate Resolution Satellite Data: A Case Study in Central Africa. Remote Sens. 2015, 7, 6683–6709.

- Demir, B.; Bovolo, F.; Bruzzone, L. Updating land-cover maps by classification of image time series: A novel change-detection-driven transfer learning approach. IEEE Trans. Geosci. Remote Sens. 2013, 51, 300–312.

- Ganchev, T.D.; Jahn, O.; Marques, M.I.; Figueiredo, J.M.; Schuchmann, K.L. Automated acoustic detection of Vanellus chilensis lampronotus. Expert Syst. Appl. 2015, 42, 6098–6111.

- Morsier, F.; Tuia, D.; Borgeaud, M.; Gass, V.; Thiran, J.P. Semi-supervised novelty detection using SVM entire solution path. IEEE Trans. Geosci. Remote Sens. 2013, 51, 1939–1950.

- Parmentier, B. Characterization of Land Transitions Patterns from Multivariate Time Series Using Seasonal Trend Analysis and Principal Component Analysis. Remote Sens. 2014, 6, 12639–12665.

- Nielsen, A.A. The regularized iteratively reweighted MAD method for change detection in multi- and hyperspectral data. IEEE Trans. Image Process. 2007, 16, 463–478.

- Wu, C.; Du, B.; Zhang, L. A subspace-based change detection method for hyperspectral images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2013, 6, 815–830.

- Bouaraba, A.; Aissa, A.B.; Borghys, D.; Acheroy, M.; Closson, D. InSAR phase filtering via joint subspace projection method: Application in change detection. IEEE Geosci. Remote Sens. Lett. 2014, 11, 1817–1820.

- Erturk, A.; Iordache, M.D.; Plaza, A. Sparse unmixing-based change detection for multitemporal hyperspectral images. IEEE J. Sel. Top. Appl. Earth Obs. Remote Sens. 2015, 9, 708–719.

- Wu, C.; Du, B.; Zhang, L. Slow feature analysis for change detection in multispectral imagery. IEEE Trans. Geosci. Remote Sens. 2014, 52, 2858–2874.

- Meola, J.; Eismann, M.T.; Moses, R.L.; Ash, J.N. Application of model-based change detection to airborne VNIR/SWIR hyperspectral imagery. IEEE Trans. Geosci. Remote Sens. 2012, 50, 3693–3706.

- Huang, X.; Friedl, M.A. Distance metric-based forest cover change detection using MODIS time series. Int. J. Appl. Earth Obs. Geoinf. 2014, 29, 78–92.

- Byun, Y.; Han, Y.; Chae, T. Image Fusion-Based Change Detection for Flood Extent Extraction Using Bi-Temporal Very High-Resolution Satellite Images. Remote Sens. 2015, 7, 10347–10363.

- Liu, S.; Bruzzone, L. Hierarchical unsupervised change detection in multitemporal hyperspectral images. IEEE Trans. Geosci. Remote Sens. 2015, 53, 244–260.

- Shah-Hosseini, R.; Homayouni, S.; Safari, A. A Hybrid Kernel-Based Change Detection Method for Remotely Sensed Data in a Similarity Space. Remote Sens. 2015, 7, 12829–12858.

- Ding, K.; Huo, C. Sparse hierarchical clustering for VHR image change detection. IEEE Geosci. Remote Sens. Lett. 2015, 12, 577–581.

- Fu, X.; Li, S.; Fairbank, M.; Wunsch, D.C.; Alonso, E. Training Recurrent Neural Networks with the Levenberg-Marquardt Algorithm for Optimal Control of a Grid-Connected Converter. IEEE Trans. Neural Netw. Learn. Syst. 2014, 26, 1900–1912.

- Ordóñez, F.J.; Roggen, D. Deep Convolutional and LSTM Recurrent Neural Networks for Multimodal Wearable Activity Recognition. Sensors 2016, 16, 115.

- Übeyli, E. Recurrent neural networks with composite features for detection of electrocardiographic changes in partial epileptic patients. Comput. Biol. Med. 2008, 38, 401–410.

- Pacella, M.; Semeraro, Q. Using recurrent neural networks to detect changes in autocorrelated processes for quality monitoring. Comput. Ind. Eng. 2007, 52, 502–520.

- Chren, W. One-hot residue coding for high-speed non-uniform pseudo-random test pattern generation. In Proceedings of the 1995 IEEE International Symposium on Circuits and Systems (ISCAS ’95), Seattle, WA, USA, 30 April–3 May 1995; Volume 1, pp. 401–404.

- Brillante, C.; Mannarino, A. Improvement of aeroelastic vehicles performance through recurrent neural network controllers. Nonlinear Dyn. 2016, 84, 1479–1495.

- Yuan, Y.; Mou, L.; Lu, X. Scene Recognition by Manifold Regularized Deep Learning Architecture. IEEE Trans. Neural Netw. Learn. Syst. 2015, 26, 2222–2233.

- Pascanu, R.; Mikolov, T.; Bengio, Y. On the difficulty of training recurrent neural networks. In Proceedings of the International Conference on Machine Learning, Atlanta, GA, USA, 16–21 June 2013; pp. 1310–1318.

- Deng, J.; Wang, K.; Deng, Y.; Qi, G. PCA-based land-use change detection and analysis using multitemporal and multisensor satellite data. Int. J. Remote Sens. 2008, 29, 4823–4838.

- Chang, C.; Lin, C. LIBSVM: A Library for Support Vector Machines. ACM Trans. Intell. Syst. Technol. 2011, 2, 1–27.

- Pal, M.; Mather, P. An assessment of the effectiveness of decision tree methods for land cover classification. Remote Sens. Environ. 2003, 86, 554–565.

- Hu, F.; Xia, G.-S.; Hu, J.; Zhang, L. Transferring Deep Convolutional Neural Networks for the Scene Classification of High-Resolution Remote Sensing Imagery. Remote Sens. 2015, 7, 14680–14707.

- Dauphin, Y.N.; Vries, H.; Chung, J.; Bengio, Y. RMSProp and equilibrated adaptive learning rates for non-convex optimization. 2015.

- Celik, T. Unsupervised change detection in satellite images using principal component analysis and k-means clustering. IEEE Geosci. Remote Sens. Lett. 2009, 6, 772–776.

- Rumelhart, D.E.; Hinton, G.E.; Williams, R.J. Learning representations by back-propagating errors. Nature 1986, 323, 533–536.

- Congalton, R.G. A review of assessing the accuracy of classifications of remotely sensed data. Remote Sens. Environ. 1991, 37, 35–46.

| Dataset | Experiment | Labeled Samples | Training Samples | Testing Samples |
|---|---|---|---|---|
| Taizhou | Binary experiment | 21,116 (16,890 un, 4226 c) | 700 (500 un, 200 c) | 20,416 |
| Taizhou | Transfer experiment (T-K, T-Y) | 21,116 (16,890 un, 4226 c) | 200 (150 un, 50 c); 400 (300 un, 100 c); 600 (450 un, 150 c); 800 (600 un, 200 c); 1000 (650 un, 250 c) | 64,183 (Kunshan); 63,000 (Yancheng) |
| Taizhou | Multi-class experiment | 3172 (city change) | 200 | 2922 |
| | | 564 (soil change) | 50 | 514 |
| | | 490 (water change) | 50 | 440 |
| | | 16,890 (unchanged) | 50 | 11,890 |
| Kunshan | Binary experiment | 64,183 (48,119 un, 16,064 c) | 1000 (500 un, 500 c) | 63,183 |
| Kunshan | Transfer experiment (K-T, K-Y) | 64,183 (48,119 un, 16,064 c) | 200 (100 un, 100 c); 400 (200 un, 200 c); 600 (300 un, 300 c); 800 (400 un, 400 c); 1000 (500 un, 500 c) | 21,116 (Taizhou); 63,000 (Yancheng) |
| Kunshan | Multi-class experiment | 9958 (city change) | 500 | 9458 |
| | | 6506 (farmland change) | 500 | 6006 |
| | | 48,119 (unchanged) | 500 | 43,119 |
| Yancheng | Binary experiment | 63,000 (44,723 un, 18,277 c) | 500 (250 un, 250 c) | 63,000 |
| Yancheng | Transfer experiment (Y-T, Y-K) | 63,000 (44,723 un, 18,277 c) | 200 (100 un, 100 c); 400 (200 un, 200 c); 600 (300 un, 300 c); 800 (400 un, 400 c); 1000 (500 un, 500 c) | 21,116 (Taizhou); 64,183 (Kunshan) |

un: unchanged samples; c: changed samples.

| Method | Taizhou Kappa | Taizhou OA | Kunshan Kappa | Kunshan OA | Yancheng Kappa | Yancheng OA |
|---|---|---|---|---|---|---|
| CVA | 0.3755 | 0.6982 | 0.4011 | 0.7160 | 0.7907 | 0.8722 |
| PCA | 0.5413 | 0.7419 | 0.633 | 0.7741 | 0.8174 | 0.9025 |
| IRMAD | 0.7942 | 0.9133 | 0.87 | 0.9397 | 0.6973 | 0.8352 |
| SSFA | 0.8229 | 0.9454 | 0.9361 | 0.9763 | 0.9032 | 0.9516 |
| REFEREE | 0.9477 | 0.9777 | 0.9573 | 0.9837 | 0.9563 | 0.9828 |
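The Kappa and OA columns above are standard agreement scores derived from a confusion matrix. The sketch below uses the textbook definitions (overall accuracy as the trace fraction, Cohen's Kappa from the row and column marginals); the function name `oa_and_kappa` and the toy counts are assumptions for illustration and are not the paper's data.

```python
import numpy as np


def oa_and_kappa(confusion):
    """Return (overall accuracy, Cohen's kappa) for a square confusion matrix.

    confusion[i, j] counts samples of reference class i predicted as class j.
    """
    confusion = np.asarray(confusion, dtype=np.float64)
    total = confusion.sum()
    observed = np.trace(confusion) / total  # overall accuracy
    # Chance agreement computed from the row and column marginals.
    expected = (confusion.sum(axis=0) * confusion.sum(axis=1)).sum() / total ** 2
    kappa = (observed - expected) / (1.0 - expected)
    return observed, kappa


# Hypothetical binary counts: rows are reference, columns are predicted.
toy = [[40, 10],
       [5, 45]]
oa, kappa = oa_and_kappa(toy)
```

Kappa discounts the agreement expected by chance, which is why it can sit well below OA when one class dominates the test samples.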

| Transfer | N | OA | Kappa | FP | FN | OE |
|---|---|---|---|---|---|---|
| T-K | 200 | 0.8652 | 0.5870 | 326 | 8613 | 8939 |
| T-K | 400 | 0.8579 | 0.5574 | 202 | 9223 | 9425 |
| T-K | 600 | 0.8717 | 0.6122 | 428 | 8083 | 8511 |
| T-K | 800 | 0.8652 | 0.6028 | 1236 | 7705 | 8941 |
| T-K | 1000 | 0.8693 | 0.6009 | 270 | 8398 | 8668 |
| T-Y | 200 | 0.9508 | 0.8852 | 286 | 2816 | 3102 |
| T-Y | 400 | 0.9694 | 0.9265 | 651 | 1275 | 1926 |
| T-Y | 600 | 0.9653 | 0.9178 | 356 | 1828 | 2184 |
| T-Y | 800 | 0.9724 | 0.9335 | 629 | 1110 | 1739 |
| T-Y | 1000 | 0.9721 | 0.9334 | 323 | 1437 | 1760 |
| K-T | 200 | 0.9243 | 0.7116 | 15 | 1772 | 1787 |
| K-T | 400 | 0.9506 | 0.8428 | 279 | 808 | 1087 |
| K-T | 600 | 0.9536 | 0.7931 | 387 | 1030 | 1417 |
| K-T | 800 | 0.9481 | 0.8341 | 279 | 863 | 1141 |
| K-T | 1000 | 0.9508 | 0.8363 | 23 | 1060 | 1083 |
| K-Y | 200 | 0.9592 | 0.9032 | 251 | 2052 | 2303 |
| K-Y | 400 | 0.9701 | 0.9227 | 824 | 1058 | 1882 |
| K-Y | 600 | 0.9675 | 0.9227 | 364 | 1684 | 2048 |
| K-Y | 800 | 0.9712 | 0.9310 | 481 | 1334 | 1815 |
| K-Y | 1000 | 0.9741 | 0.9379 | 383 | 1251 | 1634 |
| Y-T | 200 | 0.7637 | 0.4326 | 1223 | 2982 | 3205 |
| Y-T | 400 | 0.7984 | 0.4638 | 1210 | 1815 | 3025 |
| Y-T | 600 | 0.8894 | 0.6559 | 1094 | 1342 | 2436 |
| Y-T | 800 | 0.8942 | 0.7100 | 1230 | 918 | 2148 |
| Y-T | 1000 | 0.8939 | 0.6993 | 1113 | 1223 | 2336 |
| Y-K | 200 | 0.7189 | 0.2760 | 9164 | 9479 | 18,643 |
| Y-K | 400 | 0.7689 | 0.3125 | 4240 | 9706 | 13,946 |
| Y-K | 600 | 0.7932 | 0.5152 | 9607 | 4245 | 13,852 |
| Y-K | 800 | 0.8336 | 0.4883 | 1301 | 9737 | 11,038 |
| Y-K | 1000 | 0.8428 | 0.5571 | 606 | 8823 | 9429 |
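The error columns above can be read under the common convention that FP counts unchanged pixels labeled as changed, FN counts changed pixels labeled as unchanged, and OE (overall error) is their sum; for example, the T-K row with N = 200 gives 326 + 8613 = 8939. The sketch below counts these quantities for a pair of binary maps. The function name `binary_change_errors` and the eight toy labels are hypothetical, and the convention itself is an assumption, since this section does not define the columns.

```python
import numpy as np


def binary_change_errors(reference, predicted):
    """Count false positives, false negatives and overall error.

    Both inputs are boolean sequences where True marks a changed pixel.
    """
    reference = np.asarray(reference, dtype=bool)
    predicted = np.asarray(predicted, dtype=bool)
    fp = int(np.sum(~reference & predicted))  # unchanged labeled as changed
    fn = int(np.sum(reference & ~predicted))  # changed labeled as unchanged
    return {"FP": fp, "FN": fn, "OE": fp + fn}


# Hypothetical labels for eight test pixels.
ref = [True, True, True, False, False, False, False, False]
pred = [True, False, True, True, False, False, False, False]
errors = binary_change_errors(ref, pred)
```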

| Dataset | Method | OA | Kappa | F-score: Unchanged | F-score: City (C) | F-score: Water (C) | F-score: Soil (C) | F-score: Farmland (C) |
|---|---|---|---|---|---|---|---|---|
| Taizhou | REFEREE | 0.95 | 0.8689 | 0.9788 | 0.7887 | 0.8749 | 0.7524 | / |
| Taizhou | CNN | 0.9235 | 0.8063 | 0.9675 | 0.6679 | 0.8721 | 0.5521 | / |
| Taizhou | SVM | 0.8391 | 0.6758 | 0.8717 | 0.5203 | 0.8326 | 0.3558 | / |
| Taizhou | Decision tree | 0.7113 | 0.5221 | 0.8701 | 0.6403 | 0.7496 | 0.3558 | / |
| Kunshan | REFEREE | 0.9587 | 0.8988 | 0.9432 | 0.9735 | / | / | 0.8750 |
| Kunshan | CNN | 0.9336 | 0.8413 | 0.8844 | 0.9559 | / | / | 0.8491 |
| Kunshan | SVM | 0.8024 | 0.6654 | 0.6830 | 0.8762 | / | / | 0.3743 |
| Kunshan | Decision tree | 0.6979 | 0.4844 | 0.6642 | 0.7913 | / | / | 0.1542 |
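The per-class F-score columns above can be illustrated with the usual F1 definition, the harmonic mean of precision and recall computed per class from a multi-class confusion matrix. This section does not state which F-score variant was used, so F1 is an assumption here, as are the function name `per_class_f1` and the toy counts.

```python
import numpy as np


def per_class_f1(confusion):
    """F1 score for each class of a square confusion matrix.

    confusion[i, j] counts samples of reference class i predicted as class j.
    """
    confusion = np.asarray(confusion, dtype=np.float64)
    true_pos = np.diag(confusion)
    predicted_totals = confusion.sum(axis=0)  # TP + FP per class
    reference_totals = confusion.sum(axis=1)  # TP + FN per class
    denom = predicted_totals + reference_totals
    # F1 = 2TP / (2TP + FP + FN); classes with no samples at all get 0.
    return np.divide(2.0 * true_pos, denom,
                     out=np.zeros_like(true_pos), where=denom > 0)


# Hypothetical 3-class counts (e.g. unchanged, city change, water change).
toy = [[8, 1, 1],
       [2, 6, 0],
       [0, 0, 4]]
f1 = per_class_f1(toy)
```

Reporting F1 per class alongside OA shows how a method handles small change classes that barely move the overall accuracy.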

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Lyu, H.; Lu, H.; Mou, L. Learning a Transferable Change Rule from a Recurrent Neural Network for Land Cover Change Detection. Remote Sens. 2016, 8, 506. https://doi.org/10.3390/rs8060506
