Signal Processing for a Multiple-Input, Multiple-Output (MIMO) Video Synthetic Aperture Radar (SAR) with Beat Frequency Division Frequency-Modulated Continuous Wave (FMCW)

Abstract

1. Introduction

2. Theory of Video SAR

2.1. Overview

2.2. Frame Rate

2.3. Image Size

- (a) Scene size (diameter) is limited by range curvature as follows:

- (b) Scene size is limited by the residual video phase (RVP) [36] (Equation (B.26)) when the chirp rate in range is very high, as follows:
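For dechirp-on-receive FMCW, the RVP term in (b) can be derived directly. The sketch below assumes a transmitted chirp s(t) = exp(j2π(f0·t + K·t²/2)) with chirp rate K in Hz/s, a dechirp reference at the scene-center delay τ0, and a target at range offset ΔR (delay offset Δτ = 2ΔR/c). Mixing gives the phase −2πf0Δτ − 2πK(t − τ0)Δτ + πKΔτ², and the last term, 4πKΔR²/c², is the RVP. The π/2 tolerance on the RVP at the scene edge is an assumed criterion, the function names are hypothetical, and the result need not coincide with Equation (B.26) of [36].

```python
import math

C = 299_792_458.0  # speed of light (m/s)


def dechirp_phase(t, tau, tau_ref, f0, chirp_rate):
    """Unwrapped phase (rad) of s(t - tau) * conj(s(t - tau_ref)) for the
    linear chirp s(t) = exp(j*2*pi*(f0*t + chirp_rate*t**2/2))."""
    def chirp_phase(x):
        return 2.0 * math.pi * (f0 * x + 0.5 * chirp_rate * x * x)
    return chirp_phase(t - tau) - chirp_phase(t - tau_ref)


def rvp_phase(delta_r, chirp_rate):
    """Residual video phase (rad): pi*K*dtau**2 with dtau = 2*dR/c."""
    dtau = 2.0 * delta_r / C
    return math.pi * chirp_rate * dtau ** 2


def rvp_limited_half_extent(chirp_rate, max_phase=math.pi / 2):
    """Largest |dR| (m) whose RVP stays within max_phase (assumed tolerance)."""
    return (C / 2.0) * math.sqrt(max_phase / (math.pi * chirp_rate))


if __name__ == "__main__":
    K = 1e9 / 1e-3                  # 1 GHz swept in 1 ms -> about 1e12 Hz/s
    tau_ref = 2.0 * 1000.0 / C      # dechirp reference at 1000 m (scene center)
    dR = 20.0                       # target offset from scene center (m)
    dtau = 2.0 * dR / C
    t = tau_ref + 0.4e-3            # one fast-time sample inside the sweep
    total = dechirp_phase(t, tau_ref + dtau, tau_ref, 94e9, K)
    # Remove the constant term and the beat-frequency term (linear in t)
    residual = total + 2 * math.pi * 94e9 * dtau + 2 * math.pi * K * (t - tau_ref) * dtau
    print(f"RVP at {dR} m: {rvp_phase(dR, K):.4f} rad (numerical check {residual:.4f} rad)")
    print(f"RVP-limited scene size: {2 * rvp_limited_half_extent(K):.1f} m")
```

With K = 1 GHz / 1 ms = 10¹² Hz/s, this assumed criterion allows |ΔR| of about 106 m, i.e., a scene of roughly 212 m, which exceeds both scene sizes listed in the parameter table.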

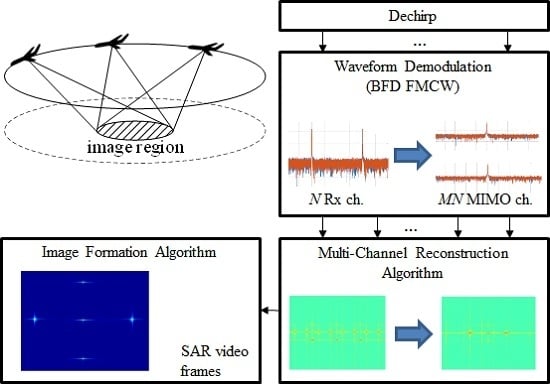

3. Signal Processing

3.1. Geometric Models

3.2. Signal Model

- Signal model in the fast time–slow time domain.

- Signal model in the fast time–azimuth angle domain.

3.3. Signal Processing Procedure

4. Simulation Results

4.1. System Parameters

4.2. Point Target Simulation

5. Conclusions

Supplementary Materials

Supplementary File 1

Acknowledgments

Author Contributions

Conflicts of Interest

References

- Curlander, J.C.; McDonough, R.N. Synthetic Aperture Radar: Systems and Signal Processing; John Wiley & Sons: New York, NY, USA, 1991; ISBN 978-0-471-85770-9.

- Carrara, W.G.; Goodman, R.S.; Majewski, R.M. Spotlight Synthetic Aperture Radar: Signal Processing Algorithms; Artech House: Norwood, MA, USA, 1995; ISBN 0890067287.

- Melvin, W.; Scheer, J. Principles of Modern Radar, Vol. II: Advanced Techniques; SciTech Publishing: Edison, NJ, USA, 2013; ISBN 978-1-891121-53-1.

- Wiley, C. Synthetic aperture radars: A paradigm for technology evolution. IEEE Trans. Aerosp. Electron. Syst. 1985, 21, 440–443.

- Currie, A.; Brown, M.A. Wide-swath SAR. IEE Proc. F Radar Signal Process. 1992, 139, 122–135.

- Krieger, G.; Gebert, N.; Moreira, A. Unambiguous SAR signal reconstruction from nonuniform displaced phase center sampling. IEEE Geosci. Remote Sens. Lett. 2004, 1, 260–264.

- Gebert, N.; Krieger, G.; Moreira, A. Digital beamforming on receive: Techniques and optimization strategies for high-resolution wide-swath SAR imaging. IEEE Trans. Aerosp. Electron. Syst. 2009, 45.

- Gebert, N. Multi-Channel Azimuth Processing for High-Resolution Wide-Swath SAR Imaging; DLR (German Aerospace Center): Köln, Germany, 2009.

- Cerutti-Maori, D.; Sikaneta, I.; Klare, J.; Gierull, C.H. MIMO SAR processing for multichannel high-resolution wide-swath radars. IEEE Trans. Geosci. Remote Sens. 2014, 52, 5034–5055.

- Liu, B.; He, Y. Improved DBF algorithm for multichannel high-resolution wide-swath SAR. IEEE Trans. Geosci. Remote Sens. 2016, 54, 1209–1225.

- Meta, A.; Hoogeboom, P.; Ligthart, L.P. Signal processing for FMCW SAR. IEEE Trans. Geosci. Remote Sens. 2007, 45, 3519–3532.

- Kim, J.H.; Younis, M.; Moreira, A.; Wiesbeck, W. Spaceborne MIMO synthetic aperture radar for multimodal operation. IEEE Trans. Geosci. Remote Sens. 2015, 53, 2453–2466.

- Damini, A.; Balaji, B.; Parry, C.; Mantle, V. A videoSAR mode for the X-band wideband experimental airborne radar. Proc. SPIE Def. Secur. Sens. Int. Soc. Opt. Photonics 2010, 7699, 76990E.

- Wallace, H.B. Development of a video SAR for FMV through clouds. Proc. SPIE Def. Secur. Sens. Int. Soc. Opt. Photonics 2015, 9479, 94790L.

- Miller, J.; Bishop, E.; Doerry, A. An application of backprojection for video SAR image formation exploiting a subaperture circular shift register. Proc. SPIE Def. Secur. Sens. Int. Soc. Opt. Photonics 2013, 8746, 874609.

- Wallace, H.; Gorman, J.; Maloney, P. Video Synthetic Aperture Radar (ViSAR); Defense Advanced Research Projects Agency: Arlington, VA, USA, 2012.

- Baumgartner, S.V.; Krieger, G. Simultaneous high-resolution wide-swath SAR imaging and ground moving target indication: Processing approaches and system concepts. IEEE J. Sel. Top. App. Earth Obs. Remote Sens. 2015, 8, 5015–5029.

- De Wit, J.; Meta, A.; Hoogeboom, P. Modified range-Doppler processing for FM-CW synthetic aperture radar. IEEE Geosci. Remote Sens. Lett. 2006, 3, 83–87.

- Jiang, Z.H.; Huang-Fu, K. Squint LFMCW SAR data processing using Doppler-centroid-dependent frequency scaling algorithm. IEEE Trans. Geosci. Remote Sens. 2008, 46, 3535–3543.

- Xin, Q.; Jiang, Z.; Cheng, P.; He, M. Signal processing for digital beamforming FMCW SAR. Math. Probl. Eng. 2014, 2014, 859890.

- Paulraj, A.J.; Kailath, T. Increasing Capacity in Wireless Broadcast Systems Using Distributed Transmission/Directional Reception (DTDR). U.S. Patent 5,345,599, 6 September 1994.

- Fishler, E.; Haimovich, A.; Blum, R.; Chizhik, D.; Cimini, L.; Valenzuela, R. MIMO radar: An idea whose time has come. In Proceedings of the 2004 IEEE Radar Conference, Philadelphia, PA, USA, 26–29 April 2004; pp. 71–78.

- Ponce, O.; Rommel, T.; Younis, M.; Prats, P.; Moreira, A. Multiple-input multiple-output circular SAR. In Proceedings of the 2014 IEEE 15th International Radar Symposium (IRS), Gdańsk, Poland, 16–18 June 2014; pp. 1–5.

- Kim, J.H.; Younis, M.; Moreira, A.; Wiesbeck, W. A novel OFDM chirp waveform scheme for use of multiple transmitters in SAR. IEEE Geosci. Remote Sens. Lett. 2013, 10, 568–572.

- Kim, J.H. Multiple-Input Multiple-Output Synthetic Aperture Radar for Multimodal Operation. Ph.D. Thesis, Karlsruher Institut für Technologie (KIT), Karlsruhe, Germany, 2011.

- Krieger, G. MIMO-SAR: Opportunities and pitfalls. IEEE Trans. Geosci. Remote Sens. 2014, 52, 2628–2645.

- Wang, W.Q. Multi-Antenna Synthetic Aperture Radar; CRC Press: Nottingham, UK, 2013; ISBN 978-1-4665-1051-7.

- De Wit, J.; Van Rossum, W.; De Jong, A. Orthogonal waveforms for FMCW MIMO radar. In Proceedings of the 2011 IEEE Radar Conference (RADAR), Kansas City, MO, USA, 23–27 May 2011; pp. 686–691.

- Cheng, P.; Wang, Z.; Xin, Q.; He, M. Imaging of FMCW MIMO radar with interleaved OFDM waveform. In Proceedings of the 2014 IEEE 12th International Conference on Signal Processing (ICSP), Hangzhou, China, 19–23 October 2014; pp. 1944–1948.

- Sikaneta, I.; Gierull, C.H.; Cerutti-Maori, D. Optimum signal processing for multichannel SAR: With application to high-resolution wide-swath imaging. IEEE Trans. Geosci. Remote Sens. 2014, 52, 6095–6109.

- Li, X.; Xing, M.; Xia, X.G.; Sun, G.C.; Liang, Y.; Bao, Z. Simultaneous stationary scene imaging and ground moving target indication for high-resolution wide-swath SAR system. IEEE Trans. Geosci. Remote Sens. 2016, 54, 4224–4239.

- Walker, J.L. Range-Doppler imaging of rotating objects. IEEE Trans. Aerosp. Electron. Syst. 1980, 16, 23–52.

- Doren, N.; Jakowatz, C.; Wahl, D.E.; Thompson, P.A. General formulation for wavefront curvature correction in polar-formatted spotlight-mode SAR images using space-variant post-filtering. In Proceedings of the IEEE International Conference on Image Processing, Santa Barbara, CA, USA, 26–29 October 1997; Volume 1, pp. 861–864.

- Jakowatz, C.V., Jr.; Wahl, D.E.; Thompson, P.A.; Doren, N.E. Space-variant filtering for correction of wavefront curvature effects in spotlight-mode SAR imagery formed via polar formatting. In Proceedings of the International Society for Optics and Photonics (AeroSense’97), Orlando, FL, USA, 21 July 1997; pp. 33–42.

- Doren, N.E. Space-Variant Post-Filtering for Wavefront Curvature Correction in Polar-Formatted Spotlight-Mode SAR Imagery; Technical Report; Sandia National Labs.: Albuquerque, NM, USA; Livermore, CA, USA, 1999.

- Jakowatz, C.V.; Wahl, D.E.; Eichel, P.H.; Ghiglia, D.C.; Thompson, P.A. Spotlight-Mode Synthetic Aperture Radar: A Signal Processing Approach; Springer Science & Business Media: Berlin, Germany, 2012; ISBN 978-0-7923-9677-2.

- Johannes, W.; Essen, H.; Stanko, S.; Sommer, R.; Wahlen, A.; Wilcke, J.; Wagner, C.; Schlechtweg, M.; Tessmann, A. Miniaturized high resolution Synthetic Aperture Radar at 94 GHz for microlite aircraft or UAV. In Proceedings of the 2011 IEEE Sensors, Limerick, Ireland, 28–31 October 2011; pp. 2022–2025.

- Cheng, S.W. Rapid deployment UAV. In Proceedings of the 2008 IEEE Aerospace Conference, Big Sky, MT, USA, 1–8 March 2008; pp. 1–8.

| Parameters | MIMO ViSAR | Single-channel ViSAR |
|---|---|---|
| Transmitted frequency | 94 GHz | 94 GHz |
| Bandwidth | 1 GHz | 1 GHz |
| Slant range to scene center | 1000 m | 1000 m |
| Platform velocity | 40 m/s | 20 m/s |
| Scene size | 80 m | 40 m |
| Resolution (range × azimuth) | 0.15 m × 0.08 m | 0.15 m × 0.08 m |
| Radar losses | 10 dB | 10 dB |
| Number of transmit apertures | 2 | 1 |
| Number of receive apertures | 2 | 1 |
| Transmit antenna gain | 15 dB | 15 dB |
| Receive antenna gain | 15 dB | 15 dB |
| Sweep duration | 1 ms | 1 ms |
| Sampling frequency | 4 MHz | 2 MHz |
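Several quantities implied by the parameter table can be cross-checked with standard relations: wavelength λ = c/f0, range resolution c/(2B), chirp rate K = B/T for sweep duration T, and, in the straight-line small-angle approximation, the aperture time λR0/(2ρa·v) needed for azimuth resolution ρa at platform velocity v. The minimal sketch below also computes the beat-frequency span 2K·D/c occupied by a dechirped scene of size D. It assumes non-overlapping apertures, so that the frame rate is simply the reciprocal of the aperture time (video SAR processors often overlap subapertures, which raises the frame rate); the class and function names are hypothetical.

```python
import math
from dataclasses import dataclass

C = 299_792_458.0  # speed of light (m/s)


@dataclass
class ViSARParams:
    f0_hz: float          # transmitted (carrier) frequency
    bandwidth_hz: float   # swept bandwidth
    sweep_s: float        # sweep duration
    range_m: float        # slant range to scene center
    velocity_mps: float   # platform velocity
    azimuth_res_m: float  # azimuth resolution
    scene_m: float        # scene size (diameter)


def derived(p: ViSARParams) -> dict:
    wavelength = C / p.f0_hz
    chirp_rate = p.bandwidth_hz / p.sweep_s          # Hz/s
    range_res = C / (2.0 * p.bandwidth_hz)           # m
    # Straight-line, small-angle approximation of the synthetic aperture
    aperture_len = wavelength * p.range_m / (2.0 * p.azimuth_res_m)
    aperture_time = aperture_len / p.velocity_mps
    # Beat frequencies after dechirp span 2*K*D/c for a scene of size D
    beat_span = 2.0 * chirp_rate * p.scene_m / C
    return {
        "wavelength_m": wavelength,
        "chirp_rate_hz_per_s": chirp_rate,
        "range_res_m": range_res,
        "aperture_time_s": aperture_time,
        "frame_rate_hz": 1.0 / aperture_time,        # non-overlapping apertures
        "beat_span_hz": beat_span,
    }


mimo = ViSARParams(94e9, 1e9, 1e-3, 1000.0, 40.0, 0.08, 80.0)
single = ViSARParams(94e9, 1e9, 1e-3, 1000.0, 20.0, 0.08, 40.0)

if __name__ == "__main__":
    for name, p in (("MIMO", mimo), ("single channel", single)):
        d = derived(p)
        print(name, {k: round(v, 4) for k, v in d.items()})
```

The computed range resolution (about 0.150 m) agrees with the 0.15 m in the table. At fixed azimuth resolution, doubling the platform velocity halves the aperture time, which under the non-overlap assumption doubles the frame rate.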

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Kim, S.; Yu, J.; Jeon, S.-Y.; Dewantari, A.; Ka, M.-H. Signal Processing for a Multiple-Input, Multiple-Output (MIMO) Video Synthetic Aperture Radar (SAR) with Beat Frequency Division Frequency-Modulated Continuous Wave (FMCW). Remote Sens. 2017, 9, 491. https://doi.org/10.3390/rs9050491
