1. Introduction

In recent years, unmanned aerial vehicles (UAVs) have emerged as attractive data acquisition platforms for a wide range of photogrammetry and remote sensing tasks [1,2]. Compared with conventional aerial and satellite platforms, UAV platforms offer rapid data acquisition, low cost and ease of use. Even with a non-metric camera, a UAV-based photogrammetric system can efficiently capture images with extremely high spatial resolution because of its relatively low flight height. Consequently, diverse applications across different fields of science have been documented [1].

At the same time, oblique imaging is another commonly adopted technique, aimed at acquiring data from side-looking directions. Compared with traditional vertical imaging systems, it records both the footprints and the facades of targets, especially buildings in urban environments. The technique is now developing rapidly and becoming increasingly important in the photogrammetric community, and its capabilities for civil applications are increasingly reported [3,4]. It is reasonable to expect that combining UAV platforms with oblique imaging systems can exploit the strengths of both. Several applications and experimental tests using oblique UAV images have been reported, including but not limited to urban forestry and urban greening [5] and 3D model reconstruction [6].

To use oblique UAV images successfully in different scenarios, accurate camera poses are required. In conventional aerial photogrammetry, direct positioning and orientation of images can be achieved with combined, very precise GNSS/IMU (Global Navigation Satellite System/Inertial Measurement Unit) devices. However, most commercially available UAVs are equipped only with low-cost GNSS/IMU sensors, whose orientation data often cannot satisfy the accuracy requirements of direct orientation. Although some attempts have been made at direct georeferencing of UAV images [7,8], almost all studies have focused on precise positioning, while accurate orientation cannot be determined because of the high cost of accurate miniature IMU sensors and the limited payload capacity of most commercial UAVs [7]. Therefore, combined bundle adjustment (BA) involving both vertical and oblique images has become an established standard step in almost all data processing pipelines; it minimizes the total difference between predicted and observed image points. Before the combined BA, tie-points must be extracted and matched to set up the optimization problem.
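To make the BA objective concrete: it is usually the sum of squared reprojection errors, i.e. the differences between image points predicted from the current camera poses and 3D points and the observed tie-points. The sketch below evaluates that cost for one distortion-free pinhole camera in Python with NumPy; `project` and `reprojection_cost` are hypothetical illustrations, not part of the software used in this study. A full BA would minimize this cost jointly over all camera poses and points, typically with a Levenberg-Marquardt solver.

```python
import numpy as np

def project(K, R, C, X):
    """Project world points X (N x 3) to pixel coordinates (N x 2).

    Assumed pinhole model without lens distortion: x ~ K R (X - C), where R
    rotates world axes into camera axes and C is the projection centre.
    """
    Xc = (X - C) @ R.T              # points expressed in the camera frame
    uvw = Xc @ K.T                  # homogeneous pixel coordinates
    return uvw[:, :2] / uvw[:, 2:3]

def reprojection_cost(K, R, C, X, observed):
    """Sum of squared differences between predicted and observed image points."""
    residuals = project(K, R, C, X) - observed
    return float(np.sum(residuals ** 2))

# Example: a camera at the origin looking along +Z.
K = np.array([[1000.0, 0.0, 500.0], [0.0, 1000.0, 400.0], [0.0, 0.0, 1.0]])
X = np.array([[0.0, 0.0, 10.0], [1.0, 2.0, 10.0]])
predicted = project(K, np.eye(3), np.zeros(3), X)   # pixels (500, 400) and (600, 600)
```

A one-pixel error in a single observation contributes exactly 1 to the cost, which is what the least-squares solver drives down.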

In both digital photogrammetry and computer vision, feature extraction and matching is a fundamental problem underpinning accurate information extraction, such as dense point cloud generation and 3D building model reconstruction. Consequently, many studies have pushed this technique towards higher precision and automation, from the earliest corner detectors [9] to more recent invariant detectors [10]. Unlike classical corner detectors, where matching is usually performed by comparing pixel gray values within a fixed-size window, invariant detectors describe the local region around each interest point with a feature vector, called the descriptor of that point. Feature matching can then be achieved by searching, for each descriptor, the nearest neighbour with the smallest Euclidean distance in the other descriptor set. Among the invariant detectors, SIFT (Scale Invariant Feature Transform) has shown the best performance [10]. However, reliable matching can only be achieved when the viewpoint change does not exceed 50 degrees [11], which has motivated the development of affine-invariant descriptors [12]. In addition, other attempts have been made to improve matching efficiency, including dimension reduction [13], binary descriptors [14] and hardware acceleration [15]. In short, dozens of algorithms and free open-source software packages are available for tie-point extraction and matching of oblique UAV images.
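The descriptor matching step described above can be sketched as a brute-force nearest-neighbour search. This minimal illustration assumes descriptors are stored as rows of a floating-point array (for example 128-D SIFT vectors); `nearest_neighbour_matches` is a hypothetical name. Practical tools replace the O(N·M) search with GPU or approximate k-d tree search and add further filtering.

```python
import numpy as np

def nearest_neighbour_matches(desc_a, desc_b):
    """Brute-force nearest-neighbour matching of two descriptor sets.

    For every row of desc_a (N x D) returns the index of the row of desc_b
    (M x D) with the smallest Euclidean distance, and that distance.
    """
    # Pairwise squared distances: ||a||^2 + ||b||^2 - 2 a.b
    d2 = (np.sum(desc_a ** 2, axis=1)[:, None]
          + np.sum(desc_b ** 2, axis=1)[None, :]
          - 2.0 * desc_a @ desc_b.T)
    d2 = np.maximum(d2, 0.0)                 # clip small negatives from round-off
    idx = np.argmin(d2, axis=1)
    return idx, np.sqrt(d2[np.arange(len(desc_a)), idx])
```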

Unfortunately, tie-point extraction and matching for oblique UAV images face new challenges. The major issues are as follows: (1) establishing correspondences between vertical and oblique images is extraordinarily difficult because of appearance differences caused mainly by occlusions and perspective deformations, which are much more severe in urban environments with dense buildings; (2) the down-sampling commonly adopted to fit limited memory capacity when extracting features from high-resolution images causes a loss of location accuracy and a reduction in the number of tie-points; and (3) because of the relatively small footprints of UAV images and the use of oblique cameras, many more images are collected for a given survey area than with traditional aerial images, which increases the combinatorial complexity of match pair selection. Therefore, extra care is needed to obtain sufficient and precise tie-points efficiently. This study mainly focuses on the first and second issues.

In the literature, affine-invariant regions have been extensively exploited to compute affine-invariant descriptors that relieve perspective deformations [12]; however, these methods can either reduce the number of extracted features or lose performance under slight affine deformations [11]. Unlike internet photos, images captured by photogrammetric systems usually come with rough or precise positioning and orientation data, obtained from non-professional or professional GNSS/IMU devices. Therefore, perspective deformations can be relieved globally for each image rather than for each interest point. Hu et al. [16] incorporated this strategy into the tie-point matching pipeline for oblique aerial images as a pre-processing step prior to tie-point extraction. Using the FAST corner detector [17] and the BRIEF descriptor [18], they extracted sufficient and well-distributed tie-points. Similarly, on-board GNSS/IMU data can be used for geometric rectification of oblique UAV images, which is a potential solution to the first issue.

To limit the loss of location accuracy and the reduction in tie-point number caused by image down-sampling, the divide-and-conquer (tiling) strategy may be the most obvious and reliable solution. In general, most carefully designed detectors and descriptors, such as SIFT-based algorithms, tolerate moderate changes in scale, rotation and affine distortion, but they target low-resolution and low-accuracy tasks [19]. Several solutions have been proposed for high-precision surveying and mapping with high-resolution images in photogrammetry. Novák et al. [19] combined a recursive tiling technique with existing tie-point extraction and matching algorithms to process images with high spatial resolution. The tiling strategy can be regarded as a semi-global solution: it is more global than affine-invariant detectors and more local than methods based on global geometric rectification. To reduce the high computational cost, Sun et al. [20] proposed a similar algorithm to extract and match tie-points in large images for large-scale aerial photogrammetry, in which a 2D rigid transformation was used to relate images and a single recursion level was used to tile them. In these studies, the divide-and-conquer strategy not only increased the number of extracted points but also reduced the risk of losing location accuracy, because it operates on images at their original spatial resolution. Therefore, in this paper, we examine the effectiveness of the tiling strategy for feature extraction and matching of oblique UAV images, and also verify whether on-board GNSS/IMU data can be used to predict corresponding tiles. This could be an alternative solution to the second issue mentioned above.
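The tiling idea can be sketched as follows: cut each image into fixed-size blocks and, given a transformation between two images, predict which block of the second image corresponds to each block of the first. This minimal sketch assumes the transformation is a 3x3 homography `H` (a 2D rigid transformation, as used by Sun et al. [20], is a special case) and a block size of 1024 pixels; `tile_grid` and `predict_tile` are hypothetical names. Mapping only the tile centre is a simplification: a tile can straddle block boundaries, so a practical implementation would usually also consider neighbouring blocks.

```python
import numpy as np

def tile_grid(width, height, block=1024):
    """Split a width x height image into blocks, returned as (x0, y0, x1, y1)."""
    return [(x, y, min(x + block, width), min(y + block, height))
            for y in range(0, height, block)
            for x in range(0, width, block)]

def predict_tile(H, box, width_b, height_b, block=1024):
    """Map the centre of a tile of image A through the 3x3 transform H (A -> B)
    and return the (column, row) index of the block of image B containing it,
    or None if the mapped centre falls outside image B."""
    cx, cy = (box[0] + box[2]) / 2.0, (box[1] + box[3]) / 2.0
    u, v, w = H @ np.array([cx, cy, 1.0])
    if abs(w) < 1e-12:
        return None                          # point mapped to infinity
    x, y = u / w, v / w
    if not (0 <= x < width_b and 0 <= y < height_b):
        return None
    return int(x // block), int(y // block)
```

Features would then be extracted per block, and only features from a tile and its predicted counterpart would be compared during matching.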

In this paper, we exploit on-board GNSS/IMU data to assist feature extraction and matching of oblique UAV images. Because the original GNSS/IMU data are recorded in the navigation frame, an orientation angle transformation is first applied to convert them into the coordinate system used in this study. Second, two possible strategies, global geometric rectification and the tiling strategy, are comprehensively evaluated in bundle adjustment tests in terms of efficiency, completeness and accuracy. Finally, an optimal solution is recommended for a GNSS/IMU-assisted feature extraction and matching workflow.
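The orientation angle transformation mentioned above can be illustrated by composing rotations. Because this part of the text does not state the conventions, the sketch assumes that the IMU reports body-to-NED (north-east-down) attitude as roll, pitch and yaw in the Z-Y-X convention, that the camera installation angles follow the same convention, and that the target frame is east-north-up (ENU). Real IMUs and photogrammetric packages differ in axis and angle conventions (for example omega-phi-kappa angles, or a camera z-axis pointing backwards), so these matrices are illustrative only and all function names are hypothetical.

```python
import numpy as np

def rot_x(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[1.0, 0.0, 0.0], [0.0, c, -s], [0.0, s, c]])

def rot_y(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, 0.0, s], [0.0, 1.0, 0.0], [-s, 0.0, c]])

def rot_z(a):
    c, s = np.cos(a), np.sin(a)
    return np.array([[c, -s, 0.0], [s, c, 0.0], [0.0, 0.0, 1.0]])

def ypr_matrix(roll, pitch, yaw):
    """Z-Y-X (yaw-pitch-roll) rotation, angles in radians: Rz(yaw) Ry(pitch) Rx(roll)."""
    return rot_z(yaw) @ rot_y(pitch) @ rot_x(roll)

# Axis permutation from NED (north, east, down) to ENU (east, north, up).
NED_TO_ENU = np.array([[0.0, 1.0, 0.0], [1.0, 0.0, 0.0], [0.0, 0.0, -1.0]])

def camera_to_enu(roll, pitch, yaw, mount=(0.0, 0.0, 0.0)):
    """Rotation taking camera-frame vectors to the local ENU frame.

    Assumptions: the IMU gives body-to-NED attitude as (roll, pitch, yaw), and
    the camera-to-body mounting rotation uses the same Z-Y-X convention.
    """
    r_body_to_ned = ypr_matrix(roll, pitch, yaw)
    r_cam_to_body = ypr_matrix(*mount)
    return NED_TO_ENU @ r_body_to_ned @ r_cam_to_body
```

For an oblique camera, `mount` would carry the roughly 45° installation pitch; the nadir camera would use near-zero mounting angles.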

The remainder of this paper is organized as follows. Section 2 describes the study site and the test dataset, and then introduces the methodology applied in this study, including orientation angle transformation, feature extraction and feature matching. In Section 3, the performance of the two strategies for feature extraction and matching is evaluated and compared through BA experiments. Section 4 discusses several aspects of the two strategies in relation to previous research. Finally, Section 5 presents the conclusions and future work.

3. Experimental Results

In the experiments, we evaluate the performance of two potential solutions, global geometric rectification and the tiling strategy, for feature extraction and matching of oblique UAV images. First, the rough POS of each image is calculated from on-board GNSS/IMU data and camera installation angles, which enables image pair selection and geometric rectification. Second, the effect of each strategy on feature matching is evaluated separately in terms of the number and distribution of correspondences. Finally, various combinations of these methods are compared in terms of efficiency, completeness and accuracy, and the best solution for feature extraction and matching of oblique UAV images is proposed. All experiments are executed on a Windows PC (manufactured by Micro-Star Corporation, Shenzhen, China) with an Intel Core i7-4770 3.4 GHz CPU and a GeForce GTX 770M graphics card with 2 GB of memory.

3.1. Orientation Angle Transformation

Two separate flight campaigns are carried out for outdoor data acquisition in this study. The first campaign captures nadir images, with the camera installed at near-zero roll and pitch angles. To collect oblique images, the camera is mounted at a pitch angle of approximately 45° in the second campaign. Using the on-board GNSS/IMU data and the camera installation angles, exterior orientation (EO) parameters, consisting of position and orientation angles, are computed for each image with respect to an object coordinate system, defined as a local tangent plane (LTP) coordinate system with its origin at the site center.
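GNSS positions are typically geodetic (latitude, longitude, ellipsoidal height). A minimal sketch of converting them into a local tangent plane frame, assuming WGS84 coordinates and an east-north-up (ENU) LTP with its origin at the site centre; the function names are hypothetical. The conversion passes through Earth-centred, Earth-fixed (ECEF) coordinates and then rotates the offset from the origin into local east, north and up axes.

```python
import numpy as np

WGS84_A = 6378137.0                    # semi-major axis (m)
WGS84_F = 1.0 / 298.257223563          # flattening
WGS84_E2 = WGS84_F * (2.0 - WGS84_F)   # first eccentricity squared

def geodetic_to_ecef(lat, lon, h):
    """Latitude/longitude in degrees, ellipsoidal height in metres -> ECEF (m)."""
    lat, lon = np.radians(lat), np.radians(lon)
    n = WGS84_A / np.sqrt(1.0 - WGS84_E2 * np.sin(lat) ** 2)  # prime vertical radius
    return np.array([(n + h) * np.cos(lat) * np.cos(lon),
                     (n + h) * np.cos(lat) * np.sin(lon),
                     (n * (1.0 - WGS84_E2) + h) * np.sin(lat)])

def ecef_to_enu(p, lat0, lon0, h0):
    """Express ECEF point p in an ENU frame whose origin is (lat0, lon0, h0)."""
    d = p - geodetic_to_ecef(lat0, lon0, h0)
    la, lo = np.radians(lat0), np.radians(lon0)
    r = np.array([[-np.sin(lo), np.cos(lo), 0.0],                                  # east
                  [-np.sin(la) * np.cos(lo), -np.sin(la) * np.sin(lo), np.cos(la)],  # north
                  [np.cos(la) * np.cos(lo), np.cos(la) * np.sin(lo), np.sin(la)]])   # up
    return r @ d
```

Over a small UAV site the resulting ENU coordinates are metric and nearly Cartesian, which is why an LTP frame is a convenient object coordinate system.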

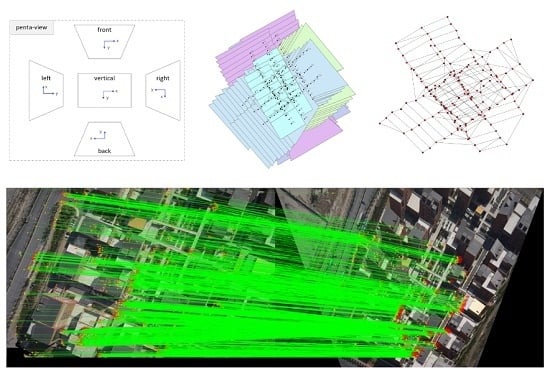

The rough EO parameters enable the computation of image footprints, as shown in Figure 7. Two flight strips are arranged for the vertical (nadir) direction, and three strips each for the east, west, south and north oblique directions. In addition, the footprints overlap heavily, especially for oblique images. If image pairs were selected purely by direct overlap, too many redundant pairs would be retained and fed into feature matching, making processing time-consuming even for UAV images collected over small areas.
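Footprints such as those in Figure 7 can be computed by intersecting the viewing rays through the four image corners with the ground. A minimal sketch, assuming flat terrain at a known height, a distortion-free pinhole camera and the projection convention x ~ K R (X - C); `footprint` is a hypothetical name. Over rough terrain a DEM would replace the plane.

```python
import numpy as np

def footprint(K, R, C, width, height, ground_z=0.0):
    """Intersect the rays through the four image corners with the plane Z = ground_z.

    Assumed convention: x ~ K R (X - C), i.e. R maps world axes to camera axes.
    Returns a 4 x 3 array of ground points (NaN where a ray misses the plane).
    """
    corners = np.array([[0, 0, 1], [width, 0, 1],
                        [width, height, 1], [0, height, 1]], dtype=float)
    rays = (R.T @ np.linalg.inv(K) @ corners.T).T    # ray directions in the world frame
    out = np.full((4, 3), np.nan)
    for i, d in enumerate(rays):
        if abs(d[2]) < 1e-12:
            continue                                 # ray parallel to the ground
        t = (ground_z - C[2]) / d[2]
        if t > 0:                                    # keep only points in front of the camera
            out[i] = C + t * d
    return out
```

For an oblique camera, the upper corners may point above the horizon and return NaN, which signals that the footprint must be clipped.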

Figure 8a presents the result of image pair selection using only the overlap principle, under which an image pair is retained only if the overlap exceeds half of the footprint size. A total of 4430 image pairs are found with this principle, and redundant pairs are clearly visible, especially near the center of the study site. Instead, this study uses a maximum spanning tree expansion strategy to remove these redundant pairs. The strategy is a two-stage algorithm, named MST-Expansion, that further simplifies the initial image pairs; the resulting pairs are shown in Figure 8b. Compared with the direct overlap principle, MST-Expansion takes into account the topological connections between image footprints and dramatically reduces the number of image pairs while preserving most of the essential ones. In the end, 309 image pairs are retained for the test dataset, and these pairs are used in all subsequent processing and analysis in this study.
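For context, exhaustive matching of the 157 images in this dataset would involve 157 × 156 / 2 = 12,246 pairs. The sketch below illustrates the two ingredients named above: an overlap filter and a maximum spanning tree over overlap-weighted pairs (Kruskal's algorithm). It is not the MST-Expansion algorithm itself, whose expansion stage is not detailed here. Footprints are approximated by axis-aligned boxes, "overlap exceeds half of the footprint size" is interpreted as overlap area divided by the smaller footprint area exceeding 0.5, and all function names are hypothetical.

```python
from itertools import combinations

def overlap_ratio(a, b):
    """Overlap area of two axis-aligned boxes (xmin, ymin, xmax, ymax)
    divided by the area of the smaller box."""
    w = min(a[2], b[2]) - max(a[0], b[0])
    h = min(a[3], b[3]) - max(a[1], b[1])
    if w <= 0 or h <= 0:
        return 0.0
    area_a = (a[2] - a[0]) * (a[3] - a[1])
    area_b = (b[2] - b[0]) * (b[3] - b[1])
    return w * h / min(area_a, area_b)

def overlap_pairs(boxes, min_ratio=0.5):
    """Overlap principle: keep every pair whose overlap ratio exceeds min_ratio."""
    pairs = []
    for i, j in combinations(range(len(boxes)), 2):
        r = overlap_ratio(boxes[i], boxes[j])
        if r > min_ratio:
            pairs.append((r, i, j))
    return pairs

def maximum_spanning_tree(n, weighted_pairs):
    """Kruskal's algorithm on descending weights; returns the tree edges (i, j)."""
    parent = list(range(n))

    def find(x):
        while parent[x] != x:
            parent[x] = parent[parent[x]]   # path halving
            x = parent[x]
        return x

    tree = []
    for _, i, j in sorted(weighted_pairs, reverse=True):
        ri, rj = find(i), find(j)
        if ri != rj:                        # edge joins two components: keep it
            parent[ri] = rj
            tree.append((i, j))
    return tree
```

A spanning tree alone keeps only n - 1 pairs, the minimum needed to connect all images; an expansion stage such as the one the paper describes would add back further essential pairs to strengthen the network.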

3.2. Performance Evaluation of Geometric Rectification

In this study, global geometric rectification is the process of simulating vertical imaging when the EO parameters of images are known. Using the calculated POS data, rectification can be applied to the original oblique images to relieve perspective deformations. To evaluate the effect of rectification on feature matching, two image pairs, covering a built-up area and a bare-land area, are selected; the matching results are shown in Figure 9 and Figure 10, where the oblique images are rectified. Because of severe occlusions by buildings and homogeneous roof textures, most correspondences are extracted on the ground, such as roads and parterres, in the test without image rectification (Figure 9a). The same situation occurs with image rectification (Figure 9b). Although the number of matches increases from 35 to 58, the distribution of correspondences is not obviously improved, because almost all newly matched points are concentrated in the regions marked by red ellipses. In contrast, geometric rectification improves both the number and the distribution of correspondences in the bare-land test, as shown in Figure 10b: the number of matches increases from 108 to 209, and the correspondences are more uniformly distributed than in Figure 10a. Therefore, with the aid of rough POS data, global geometric rectification can relieve perspective deformations to some extent and increase the number of matches in both built-up and bare-land areas. However, it cannot improve the distribution of correspondences for images with dense buildings.
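The text does not give the rectification model, so the following is one standard way to simulate vertical imaging from known EO parameters, offered as an assumption: render the oblique image from a virtual nadir camera that shares the projection centre and intrinsics of the real camera. Because only the rotation changes, the mapping is the depth-independent homography H = K R_v R^T K^-1. The function names are hypothetical. In practice the output canvas must be enlarged and shifted to hold the rectified image, and pixels would be resampled with a routine such as OpenCV's `cv2.warpPerspective`.

```python
import numpy as np

def rectifying_homography(K, R, R_virtual):
    """Homography from a real camera (rotation R) to a virtual camera that shares
    its projection centre and intrinsics K but has rotation R_virtual.

    With x ~ K R (X - C) for both cameras, x_virtual ~ K R_virtual R^T K^-1 x,
    which holds for every scene point regardless of depth.
    """
    return K @ R_virtual @ R.T @ np.linalg.inv(K)

def apply_homography(H, pts):
    """Map N x 2 pixel coordinates through H."""
    p = np.hstack([pts, np.ones((len(pts), 1))]) @ H.T
    return p[:, :2] / p[:, 2:3]
```

Choosing a virtual camera that looks straight down removes the perspective tilt but not relief displacement or occlusions, which is consistent with the limited gains observed above in the built-up area.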

Geometric rectification is achieved through image resampling. The parts of an oblique image near the photo nadir point are strongly compressed, which is equivalent to local down-sampling and a reduction of image dimension. Therefore, geometric rectification also affects the number and distribution of extracted features. Figure 11 shows how geometric rectification influences feature extraction, where Figure 11a,b show the features extracted from the original and rectified images, respectively. Note that the bottom part of the oblique image is near the photo nadir point and is compressed relative to the top part. The rectified image yields 11,424 features, whereas the original image yields 9681. Further analysis of the four sub-images reveals that: (1) more features are extracted from the top part of the rectified image than from that of the original image (sub-images 1 and 3); and (2) features near the bottom part of the rectified image are sparser than in the original image (sub-images 2 and 4). Therefore, global geometric rectification increases the total number of features but makes their distribution on the image plane non-uniform.
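The compression described above can be quantified by the local area scale of the rectifying mapping: values below 1 mean that a neighbourhood shrinks, i.e. is effectively down-sampled. A minimal sketch using the closed-form Jacobian determinant of a homography; `local_area_scale` is a hypothetical helper, and `H` would be the rectifying homography of an image.

```python
import numpy as np

def local_area_scale(H, x, y):
    """Area magnification of the homography H at pixel (x, y).

    For u = (h1.p)/(h3.p), v = (h2.p)/(h3.p) with p = (x, y, 1), the Jacobian
    determinant is det(H) / w^3, where w = h3.p. Values below 1 mean the
    neighbourhood of (x, y) is compressed (down-sampled) by the mapping.
    """
    w = H[2, 0] * x + H[2, 1] * y + H[2, 2]
    return abs(np.linalg.det(H)) / abs(w) ** 3
```

Evaluating this at the top and bottom rows of an oblique image shows directly where the rectified image gains or loses pixels, and hence where feature density is expected to rise or fall.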

3.3. Performance Evaluation of Tiling Strategy

Searching for corresponding blocks is the primary task in the tiling strategy, and it can be implemented in two ways. Without POS data, seed points are first extracted from down-sampled images, and a homography is then estimated to compute the transformation parameters. This solution, named the ‘HE’ method, incurs the computational cost of generating seed points but does not depend on the accuracy of auxiliary data. The other solution predicts corresponding blocks from the images’ POS data and is referred to as the ‘POS’ method. In addition, depending on whether the tiling strategy is also used for feature extraction, two further cases exist: ‘NE’ and ‘TE’ denote feature extraction without and with the tiling strategy, respectively. Therefore, a total of four combinations are compared and analyzed in this subsection.
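In the HE method, seed matches from the down-sampled images yield a homography between the two images. A minimal sketch of the estimation step with the normalised direct linear transform (DLT); the paper does not specify its estimator, so this choice is an assumption. With real seed points it would be wrapped in a robust scheme such as RANSAC (OpenCV provides this through `cv2.findHomography` with the `cv2.RANSAC` flag), and seed coordinates would first be scaled back to full resolution. Function names are hypothetical.

```python
import numpy as np

def _normalize(pts):
    """Hartley normalisation: move the centroid to the origin and scale so the
    mean distance is sqrt(2). Returns homogeneous points and the 3x3 transform."""
    c = pts.mean(axis=0)
    s = np.sqrt(2.0) / np.mean(np.linalg.norm(pts - c, axis=1))
    T = np.array([[s, 0.0, -s * c[0]], [0.0, s, -s * c[1]], [0.0, 0.0, 1.0]])
    return np.hstack([pts, np.ones((len(pts), 1))]) @ T.T, T

def estimate_homography(src, dst):
    """Normalised DLT estimate of H with dst ~ H src from >= 4 point pairs (N x 2)."""
    a, Ta = _normalize(src)
    b, Tb = _normalize(dst)
    rows = []
    for (x, y, _), (u, v, _) in zip(a, b):
        # Two linear equations per correspondence from u = h1.p / h3.p, v = h2.p / h3.p
        rows.append([-x, -y, -1.0, 0.0, 0.0, 0.0, u * x, u * y, u])
        rows.append([0.0, 0.0, 0.0, -x, -y, -1.0, v * x, v * y, v])
    _, _, vt = np.linalg.svd(np.asarray(rows))
    Hn = vt[-1].reshape(3, 3)               # null vector = normalised homography
    H = np.linalg.inv(Tb) @ Hn @ Ta         # undo both normalisations
    return H / H[2, 2]
```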

To compare these four combinations, four feature extraction and matching tests are conducted, with the results shown in Figure 12. Because no matches are retrieved with the POS-NE method, the correspondences of the HE-NE, HE-TE and POS-TE methods are presented in Figure 12a–c, respectively, with 53, 2728 and 15 correspondences. Visual inspection and statistical analysis of the matching results show that: (1) although the HE-NE method yields almost the same number of correspondences as the method without the tiling strategy (Figure 9b), its correspondences are well distributed (Figure 12a); (2) when the tiling strategy is also used in feature extraction, the number of matches increases dramatically and their distribution is noticeably improved (Figure 12b); and (3) the POS-TE method retrieves the fewest matches (Figure 12c), which is probably caused mainly by the inaccurate POS data. Because of the inaccurate POS data for corresponding block prediction and the smaller number of features extracted without tiling, no correspondences are matched by the POS-NE method. Thus, to improve both the number and the distribution of correspondences, tile-based feature extraction should be used together with tile-based matching. In addition, homography estimation is the preferred way to retrieve corresponding blocks when accurate POS data are unavailable.

3.4. Comparison of Various Combined Solutions

3.4.1. Solution

In this subsection, the main purpose is to find an optimal solution for feature extraction and matching of oblique UAV images. Various combined solutions built from the strategies above are therefore designed and comprehensively compared in terms of efficiency, completeness and accuracy. The details of these four solutions are listed in Table 3. In a solution that uses the tiling strategy, the strategy is applied in both feature extraction and matching, because this noticeably increases the number of correspondences, as verified in Section 3.3. In addition, the homography estimation method is used for corresponding block searching, because the rough POS data cannot support accurate prediction. The four solutions are designed according to the following criteria: (1) the first solution uses neither strategy; (2) the second and third solutions assess the performance of geometric rectification and of the tiling strategy; and (3) the fourth solution evaluates their combined performance.

3.4.2. Efficiency

In this study, the SiftGPU software package [15], a GPU (graphics processing unit) accelerated implementation of the SIFT algorithm, is used for feature extraction and matching. The default parameters are used: a maximum image dimension of 3200 pixels, a ratio test threshold of 0.8 and a distance threshold of 0.7. Thus, when the tiling strategy is not used, images are down-sampled so that their dimension does not exceed 3200 pixels, and a match is removed when either its ratio test value or its Euclidean distance exceeds the corresponding threshold. In the geometric verification based on fundamental matrix estimation, the maximum epipolar error is set to one pixel to guarantee a high inlier ratio among all matches. In addition, for the tiling strategy, the block size is set to 1024 pixels for both feature extraction and matching, which is a compromise between computational efficiency and feature number, as discussed in [20].
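The two match filters described above can be sketched as follows. SiftGPU's internal distance definition and any additional checks (such as mutual best matching) are not described in the text, so this sketch assumes Euclidean distances between unit-normalised descriptors, which lie in [0, 2]; only the threshold values 0.8 and 0.7 come from the text, and `filter_matches` is a hypothetical name.

```python
import numpy as np

def filter_matches(desc_a, desc_b, ratio_max=0.8, dist_max=0.7):
    """Nearest-neighbour matching with a ratio test and an absolute distance
    threshold. Descriptors are unit-normalised first; desc_b needs >= 2 rows.

    Returns a list of (i, j) index pairs with i in desc_a and j in desc_b.
    """
    a = desc_a / np.linalg.norm(desc_a, axis=1, keepdims=True)
    b = desc_b / np.linalg.norm(desc_b, axis=1, keepdims=True)
    d = np.sqrt(np.maximum(2.0 - 2.0 * a @ b.T, 0.0))  # Euclidean distance of unit vectors
    matches = []
    for i, row in enumerate(d):
        j1, j2 = np.argsort(row)[:2]                   # best and second-best neighbours
        if row[j1] < dist_max and row[j1] < ratio_max * row[j2]:
            matches.append((i, int(j1)))
    return matches
```

The ratio test rejects ambiguous matches whose best and second-best candidates are similarly close, while the distance threshold rejects matches that are unique but still poor.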

Feature extraction and matching are executed on 157 images and 309 image pairs, respectively. Time consumption is illustrated in Figure 13. Feature extraction in the NR-NT solution requires the least computation, whereas feature extraction in the R-NT and R-T solutions takes the most time, with nearly identical costs. For feature extraction, the ratio of time consumption between solutions with and without geometric rectification is approximately 7.67, which means that image rectification costs about 6.67 times as much as feature extraction itself. Similarly, the ratio between solutions with and without the tiling strategy is about 1.40, which indicates that tiling adds very little time compared with geometric rectification.
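The two extraction-time ratios can be decomposed as below, assuming that rectification and extraction times simply add; T_ext is the extraction time on unrectified, untiled images, T_rect the rectification time and T_ext^tile the tile-based extraction time:

```latex
\begin{aligned}
T_{\text{rect}} + T_{\text{ext}} &\approx 7.67\,T_{\text{ext}}
  &&\Rightarrow\quad T_{\text{rect}} \approx 6.67\,T_{\text{ext}},\\
T_{\text{ext}}^{\text{tile}} &\approx 1.40\,T_{\text{ext}}
  &&\Rightarrow\quad T_{\text{ext}}^{\text{tile}} - T_{\text{ext}} \approx 0.40\,T_{\text{ext}}.
\end{aligned}
```

So, during feature extraction, the overhead of geometric rectification is roughly 6.67 / 0.40 ≈ 17 times that of tiling.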

For feature matching, the time costs of the NR-NT and R-NT solutions are almost identical, because geometric rectification does not dramatically increase the number of extracted features. The solutions with the tiling strategy, NR-T and R-T, detect many more features, which leads to higher matching costs; the ratio of matching time between solutions with and without the tiling strategy is about 3.13. Finally, the statistics of time consumption are shown in Table 4. The solutions with geometric rectification have the highest total time costs, while those without geometric rectification have the lowest.

3.4.3. Completeness

For the completeness analysis, bundle adjustment experiments are conducted and the resulting sparse 3D models are compared in terms of the number of connected images and 3D points. An incremental structure from motion (SfM) software package developed in-house, described in detail in [30], is used for the BA tests. Because it uses an incremental procedure for camera pose estimation and 3D point triangulation, its only inputs are the correspondences generated by feature matching.

The statistics of reconstructed images and points are listed in Table 5. We can see that: (1) all images are successfully connected in all four solutions; (2) the NR-T solution reconstructs the largest number of points, and the NR-NT solution the fewest; and (3) although geometric rectification is applied in the R-T solution, it recovers fewer points than the NR-T solution, which can be explained by the non-uniform distribution of feature points caused by image rectification, as shown in Section 3.2. In conclusion, the tiling strategy increases the number of reconstructed points far more than geometric rectification does. The sparse 3D models of the four solutions are presented in Figure 14.

3.4.4. Accuracy

Accuracy assessment is also conducted through BA experiments. In this subsection, the free and open-source photogrammetric software package MicMac [31] is used in addition to the SfM software package. MicMac provides a complete set of tools to compute and reconstruct 3D models from overlapping images. Among its functions, Tapioca performs feature extraction and matching using multi-core parallel computation, and Apero performs image orientation with or without camera self-calibration. Because of differences in how camera calibration parameters are defined, a small subset of images with a high degree of overlap is first chosen. Feature extraction and matching are then executed on this subset. Finally, image orientation with self-calibration is performed to estimate an initial set of camera parameters, which is used in the later orientation of the whole image set.

Because correspondences have already been extracted by the proposed solutions, only the orientation tool Apero is used to assess orientation accuracy for the whole image set. To guarantee high precision, self-calibration is also enabled in the orientation of the whole dataset. The RMSEs (root mean square errors) of the BA tests in both MicMac and SfM are listed in Table 6, where SfM denotes the in-house BA software. From the orientation results, the following conclusions can be drawn: (1) geometric rectification does not improve orientation accuracy, as shown by the RMSEs of the NR-NT and R-NT solutions; (2) the tiling strategy noticeably improves orientation accuracy, as shown by the RMSEs of the NR-NT and NR-T solutions; and (3) even when both strategies are combined in the R-T solution, the MicMac RMSEs show only negligible improvement in orientation accuracy. In addition, the RMSEs estimated by SfM are smaller than those of MicMac. The main reason is that SfM also removes outliers during image orientation, whereas MicMac treats all input matches as true observations and uses them in bundle adjustment. Based on the comparison of the BA tests, the NR-T solution is proposed for reliable feature extraction and matching of oblique UAV images.
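The RMSEs in Table 6 summarize image residuals after BA. A minimal sketch of one common definition, plus a variant that discards large residuals first, illustrating why a BA that removes outliers (as the in-house SfM does) can report a smaller RMSE than one that keeps all matches (as MicMac does here). Whether the two packages report per-point or per-coordinate RMSE is not stated, so the per-point definition is an assumption; the functions and the `max_error` threshold are hypothetical.

```python
import numpy as np

def rmse(residuals):
    """RMSE of image residuals given as an N x 2 array of (dx, dy) in pixels,
    using the per-point error sqrt(dx^2 + dy^2)."""
    r = np.asarray(residuals, dtype=float)
    return float(np.sqrt(np.mean(np.sum(r ** 2, axis=1))))

def rmse_without_outliers(residuals, max_error):
    """RMSE after discarding points whose residual norm exceeds max_error,
    mimicking a BA that rejects outliers instead of keeping all matches."""
    r = np.asarray(residuals, dtype=float)
    keep = np.linalg.norm(r, axis=1) <= max_error
    return rmse(r[keep])
```

A single gross mismatch can dominate the first value, so RMSEs from pipelines with and without outlier rejection are not directly comparable.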

4. Discussion

This research evaluates the performance of global geometric rectification and the tiling strategy for feature extraction and matching of oblique UAV images. To implement these two strategies, a rough POS for each image is calculated from on-board GNSS/IMU data and camera installation angles. In addition, four combined solutions are comprehensively compared and analyzed in terms of efficiency, completeness and accuracy in BA tests. The experimental results show that the solution integrating the tiling strategy is superior to the other solutions.

Although global geometric rectification can relieve perspective deformations between oblique images to some extent, it does not noticeably increase the number of correspondences or improve their distribution. In the experiments reported in this paper, geometric rectification was evaluated using one image pair of a built-up area and one of a bare-land area, as presented in Figure 9 and Figure 10, respectively. The results show that this strategy increases the number of correspondences in both areas, but the distribution of correspondences is not noticeably improved, especially in the built-up area. In the study of [16], the same operation was applied to aerial oblique images of a built-up area, and satisfactory results were obtained even with the BRIEF descriptor, which is neither scale invariant nor rotation invariant [14]. Several reasons can explain this difference. First, aerial oblique images are usually captured from higher flight altitudes; for the same camera tilt angle, occlusions are therefore more severe in UAV images, especially in built-up areas, which leaves fewer correspondences on ground points. Second, metric cameras with long focal lengths are standard instruments in aerial photogrammetry, whereas UAV platforms carry non-metric, low-cost digital cameras with relatively short focal lengths, so perspective deformations are more severe in UAV images. Finally, because UAV platforms are less stable, mainly owing to wind, the actual oblique angles of images can deviate from the designed values, so residual deformations remain in images rectified with the rough POS data. In conclusion, unlike in aerial oblique imagery, geometric rectification is not suitable as a pre-processing step for feature extraction and matching of oblique UAV images.

In contrast, promising results are obtained when the tiling strategy is used in both feature extraction and matching. In the comparison tests, the NR-T solution, which uses the tiling strategy, outperforms the other solutions in terms of efficiency, completeness and accuracy. Its total time for feature extraction and matching is about one third of that of the solutions with geometric rectification. In addition, it achieves the lowest BA RMSEs and the largest number of reconstructed points. Compared with geometric rectification, this strategy not only increases the number of matches but also improves their distribution. The number of correspondences increases noticeably because, when feature matching is restricted to corresponding tiles, the ratio of the smallest to the second-smallest Euclidean distance between descriptors is more likely to fall below the specified threshold [20]. Moreover, when original images are tiled, feature extraction can be applied at full resolution. The tiling strategy also improves the distribution of correspondences because matches are found within each tile region. It can be regarded as a semi-global solution, which is more local than methods based on global geometric rectification [19].

To apply the tiling strategy in feature extraction and matching, corresponding block searching was achieved in this study through homography estimation between image pairs, because the on-board GNSS/IMU data are not precise enough for corresponding block prediction. Most commercially available UAVs are currently equipped with non-professional GNSS/IMU devices with low positioning and orientation accuracy. Other approaches could improve this accuracy, such as GNSS multipath mitigation and visual localization. In urban environments, GNSS multipath is one of the main factors degrading positioning precision because of the relatively low flight height of UAV platforms, and methods for GNSS multipath mitigation [32] could be applied. In addition, visual localization is an important cue for the direct positioning of UAVs and can handle situations without GNSS signals. With the aid of existing aerial images or urban structure data [33], visual localization could further reduce the positioning errors, which are several meters for most commercially available UAVs. In the future, as the positioning and orientation accuracy of UAV platforms improves, corresponding blocks could be predicted without homography estimation.

In addition, the combination of the two strategies, corresponding to the R-T solution, is also analyzed in this study. The experimental results show that this solution achieves competitive BA accuracy but incurs extra computational cost for image rectification. Meanwhile, image rectification makes the distribution of feature points non-uniform and does not increase the number of reconstructed points. Therefore, the NR-T solution is proposed in this study for feature extraction and matching of oblique UAV images.