Reflectance Intensity Assisted Automatic and Accurate Extrinsic Calibration of 3D LiDAR and Panoramic Camera Using a Printed Chessboard

Abstract

1. Introduction

2. Related Works

2.1. Multiple Views on a Planar Checkerboard

2.2. Multiple Geometry Elements

2.3. Correlation of Mutual Information

2.4. Our Approach

3. Overview and Notations

3.1. Overview

3.2. Notations

- : coordinates of a 3D point.

- : set of n 3D points.

- : rotation angle vector whose elements correspond to the rotation angles about the x-, y-, and z-axes, respectively.

- : the translation vector.

- : rotation matrix.

- : function that transforms the 3D point with the angle vector and translation vector .

- : transformed point of .

- : set of estimated 3D corner points of the chessboard from the point cloud. N is the number of the corners in the chessboard.

- : coordinates of 2D pixel.

- : set of detected 2D corner pixels of the chessboard from the image.
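The transform function in the notation list above can be illustrated with a minimal sketch. The names `rotation_matrix` and `transform` are hypothetical, and the composition order of the three axis rotations (here z after y after x) is an assumption, since the notation list does not state which convention is used.

```python
import numpy as np


def rotation_matrix(theta):
    """Build a 3x3 rotation matrix from angles (radians) about the x-, y-, z-axes.

    ASSUMPTION: the rotations are composed as R = Rz @ Ry @ Rx; the source
    does not specify the order, so this is only one possible convention.
    """
    ax, ay, az = theta
    cx, sx = np.cos(ax), np.sin(ax)
    cy, sy = np.cos(ay), np.sin(ay)
    cz, sz = np.cos(az), np.sin(az)
    rx = np.array([[1.0, 0.0, 0.0], [0.0, cx, -sx], [0.0, sx, cx]])
    ry = np.array([[cy, 0.0, sy], [0.0, 1.0, 0.0], [-sy, 0.0, cy]])
    rz = np.array([[cz, -sz, 0.0], [sz, cz, 0.0], [0.0, 0.0, 1.0]])
    return rz @ ry @ rx


def transform(points, theta, t):
    """Apply p' = R(theta) p + t to an (n, 3) array of 3D points."""
    points = np.asarray(points, dtype=float)
    # Points are stored as rows, so multiply by the transpose of R.
    return points @ rotation_matrix(theta).T + np.asarray(t, dtype=float)


# Example: rotate one point by 90 degrees about z, then translate it.
moved = transform([[1.0, 0.0, 0.0]], (0.0, 0.0, np.pi / 2), (1.0, 2.0, 3.0))
```

Storing points as rows keeps the set of n points in a single array, so the same call handles one point or the whole set.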

4. Corner Estimation from the Point Cloud

4.1. Automatic Detection of the Chessboard

4.1.1. Segmentation of the Point Cloud

4.1.2. Finding the Chessboard from the Segments

4.2. Corner Estimation

4.2.1. Model Formulation

- directions of are defined to obey the right-hand rule.

- direction of (the normal of the chessboard) is defined to point toward the side of the origin of the LiDAR coordinate system.

- angle between and x axis of the LiDAR coordinate system is not more than

4.2.2. Correspondence of Intensity and Color

4.2.3. Cost Function and Optimization

5. Extrinsic Calibration Estimation

5.1. Corner Estimation from the Image

5.2. Correspondence of the 3D-2D Corners

5.3. Initial Value by PnP

5.4. Refinement with Nonlinear Optimization

6. Experimental Results and Error Evaluation

6.1. Setup

6.2. Simulation for Corner Detection Error in the Point Cloud

6.2.1. Simulation of the Point Cloud

6.2.2. Error Results from the Simulation

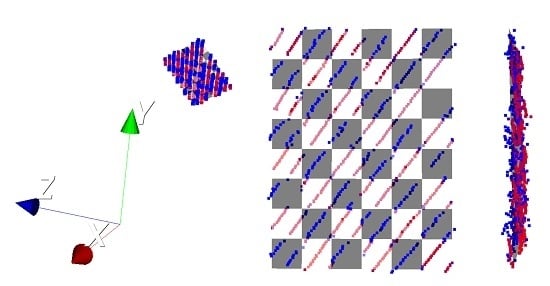

6.3. Detected Corners

6.3.1. From the Image

6.3.2. From the Point Cloud

6.4. Estimated Extrinsic Parameters

6.5. Re-Projection Error

6.6. Re-Projection Results

7. Discussions

- Automatic segmentation. As the first step of the proposed method, automatic segmentation is performed. The current segmentation method relies only on distance information, so the chessboard must be spatially separated from the surrounding objects. Even so, slight under-segmentation caused by the stand of the chessboard, or over-segmentation caused by measurement noise, may still occur. Experiments show that the degree of mis-segmentation produced by the segmentation method used in this work has a negligible effect on corner estimation, given the overall optimization of the proposed method.

- Simulation. To evaluate corner estimation with the proposed method, we approximately simulated the points by modeling the measured distance with a Gaussian distribution. However, the noise model for reflectance intensity, which also affects corner estimation, is not considered. Because of this unmodeled intensity noise, the real corner estimation error is expected to be higher than the simulated results in this work. This is one of the reasons why the relative error for corner estimation is about 0.2%, as shown in Figure 13b, whereas the final re-projection error increased to 0.8% in Section 6.5. A more precise simulation would require formulating a probability model of the reflectance value as a function of the incidence angle, the distance, and the divergence of the laser beam.

- Chessboard. As shown in Figure 11, both the horizontal and vertical intervals between points increase as the distance increases. To gather enough information for corner estimation, the side length of one grid in the chessboard is suggested to be greater than 1.5 times the theoretical vertical interval at the farthest placement. In addition, the intersection angle between the diagonal line of the chessboard and the z-axis of the LiDAR is suggested to be less than to enable the scanning of as many patterns as possible. Because we use the panoramic image for calibration, it is better to place the chessboard in the center of the field of view of each camera so that the result is unaffected by stitching error.

- Correspondence of 3D and 2D corners. In this work, a chessboard with 6∼8 patterns is used, and the counting order is defined as starting from the lower left of the chessboard for automatic correspondence. For the “lower left” to be identified correctly, the chessboard should be captured during data acquisition so that the “lower left” of the real chessboard is the same as that of the chessboard in the image. Also, the directions of the z-axes of the two sensors should be almost consistent, as shown in Figure 9b. However, these restrictions can be relaxed in practical use by introducing asymmetrical patterns.
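Two points from the discussion above lend themselves to a short numerical sketch: the grid-size rule (side length greater than 1.5 times the vertical interval at the farthest placement) and the Gaussian model of the measured distance used in the simulation. The function names, the small-angle spacing model for a board facing the sensor, the 1.33° vertical resolution, and the noise level are all assumptions made for illustration; they are not taken from the source.

```python
import math

import numpy as np


def vertical_interval(distance_m, vertical_res_deg):
    """Approximate spacing between adjacent scan lines on a board facing the sensor.

    ASSUMPTION: spacing ~ distance * tan(angular resolution); the source only
    refers to a theoretical interval without giving its formula here.
    """
    return distance_m * math.tan(math.radians(vertical_res_deg))


def min_grid_side(farthest_distance_m, vertical_res_deg, factor=1.5):
    """Suggested lower bound on the grid side length (factor 1.5 from the text)."""
    return factor * vertical_interval(farthest_distance_m, vertical_res_deg)


def add_range_noise(points, sigma_m, rng):
    """Perturb each point along its ray from the sensor origin with Gaussian range noise.

    Points must not lie at the origin. Reflectance intensity noise is not
    modeled, matching the limitation described in the text.
    """
    points = np.asarray(points, dtype=float)
    ranges = np.linalg.norm(points, axis=1, keepdims=True)
    directions = points / ranges
    noisy_ranges = ranges + rng.normal(0.0, sigma_m, size=ranges.shape)
    return directions * noisy_ranges


# Example values (assumed): board at most 2 m away, 1.33 degree vertical resolution.
side = min_grid_side(2.0, 1.33)

# Example values (assumed): two points on a board plane, 2 cm range noise.
rng = np.random.default_rng(0)
board = np.array([[2.0, 0.1, 0.2], [2.0, -0.1, 0.0]])
noisy = add_range_noise(board, 0.02, rng)
```

With these assumed values the suggested minimum side length comes out at roughly 0.07 m. Applying the noise along the ray, rather than independently per axis, reflects that the simulated uncertainty is in the measured distance only.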

8. Conclusions and Future Works

Acknowledgments

Author Contributions

Conflicts of Interest


| Camera Index | 0 | 1 | 2 | 3 | 4 |
|---|---|---|---|---|---|
| Frame Index | 1, 6, 11, 16 | 2, 7, 12, 17 | 3, 8, 13, 18 | 4, 9, 14, 19 | 5, 10, 15, 20 |

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).


Wang, W.; Sakurada, K.; Kawaguchi, N. Reflectance Intensity Assisted Automatic and Accurate Extrinsic Calibration of 3D LiDAR and Panoramic Camera Using a Printed Chessboard. Remote Sens. 2017, 9, 851. https://doi.org/10.3390/rs9080851
