3.1. Calibration and Validation Results

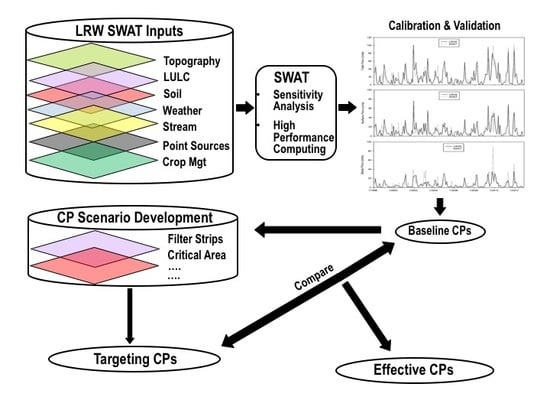

Water balance is the major driving force behind all of the processes in SWAT, as it impacts plant growth as well as the movement of sediments, nutrients, pesticides, and pathogens. SWAT divides the simulation of watershed hydrology into two phases: the land phase, which controls the amount of water, sediment, nutrient, and pesticide loadings to the main channel in each sub-watershed, and the in-stream phase, which controls the movement of water, sediments, etc., through the channel network of the watershed to the outlet. More details of these processes can be found in the SWAT theoretical documentation [52]. To evaluate the initial model performance, an overall annual water balance was calculated at the watershed scale using the basin level output (output.std). The water balance was calculated with the help of Equation (2).

To evaluate water balance, both sides of Equation (2) were expected to be similar over a long simulation period. This was verified using simulations from 1998 to 2005 (calibration time period) at an annual scale. The sum of surface runoff (343 mm), groundwater discharge (165 mm), evapotranspiration (512 mm), and aquifer recharge (122 mm) accounted for ~100% of the precipitation (1145 mm), with the small difference being the change in the soil water content; hence, the water balance of the watershed was considered satisfactory. The sum of simulated groundwater (165 mm) and lateral flow (10 mm) contributed 37.8% of the total water yield (463 mm), which is similar to the base flow estimated at the USGS gage at Colt (38%). Precipitation in the watershed ranged from 1105 mm to 1275 mm per year during the modeling period against an average annual precipitation of 1141 mm.
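The closure check described above can be reproduced with a few lines of arithmetic. The sketch below uses only the annual averages quoted in the text; the form of the balance (precipitation compared with the sum of the four listed components) is an assumption inferred from the wording, and the variable names are illustrative.

```python
# Annual averages (mm) quoted in the text for the 1998-2005 period.
precipitation = 1145.0
components = {
    "surface_runoff": 343.0,
    "groundwater_discharge": 165.0,
    "evapotranspiration": 512.0,
    "aquifer_recharge": 122.0,
}

# Assumed form of the balance: precipitation ~ sum of the four components,
# with the residual attributed to the change in soil water content.
accounted = sum(components.values())
residual = precipitation - accounted
closure_pct = 100.0 * accounted / precipitation

# Base-flow share of water yield: (groundwater + lateral flow) / water yield.
lateral_flow = 10.0
water_yield = 463.0
baseflow_pct = 100.0 * (components["groundwater_discharge"] + lateral_flow) / water_yield

print(f"accounted = {accounted:.0f} mm, residual = {residual:.0f} mm")
print(f"closure = {closure_pct:.1f}% of precipitation")
print(f"base-flow share = {baseflow_pct:.1f}% of water yield")
```

The four components sum to 1142 mm, leaving a residual of 3 mm, and the base-flow share rounds to the 37.8% reported.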

SWAT-Check warnings, as well as their potential resolutions, are reported in Table 5. The warnings were related to the hydrology, N-cycle, plant growth, and point sources sections of the SWAT-Check tool. Once warning issues were resolved, the sensitivity analysis was conducted to identify the parameters that could be used for model calibration. The five most sensitive parameters for flow, sediment, TP, and NO3-N are listed in Table 6. Some parameters (not identified as sensitive parameters) were selected to make a better fit for the measured and simulated data [33].

CN2 affects the overland flow and was ranked as the most sensitive parameter for flow. The soil evaporation compensation factor (ESCO) was another sensitive parameter for flow, mainly because water balance is sensitive to ESCO. USLE_P was the most sensitive parameter for sediment, which indicated that changes in the land-use practice factor would affect sediment losses. USLE_C was another sensitive parameter affecting sediment, indicating that a change in crop management factors would affect sediment losses. The SPCON, SPEXP, and CH_N2 parameters that impact the channel processes also affected sediment losses, indicating that sediment losses in the LRW were affected by both overland (surface) and channel processes.

PHOSKD was the most sensitive parameter affecting TP. Similar to CN2, PHOSKD was also related to the overland process. As a result, it was observed that the overland processes mostly impacted flow, sediment, and TP in the LRW. RCHRG_DP was the most sensitive parameter for NO3-N, which was expected, as the movement of NO3-N is mainly an underground process.

Parameters adjusted for calibration and validation are shown in Table 7. A detailed description of the parameters is reported in the SWAT2012 Input/Output File Documentation available at http://swat.tamu.edu/documentation/2012-io/. The parameters were adjusted such that their values were kept within the ranges recommended by SWAT modelers/developers. The statistical calibration and validation results at the Colt and Palestine sites are shown in Table 8, and the temporal results at both sites are shown in Figure 3, Figure 4, and Figure 5. The R², NSE, and PBIAS were satisfactory or better, indicating a satisfactory goodness of fit; however, RSR was unsatisfactory during the calibration period for sediment, TP, and NO3-N. Since both PBIAS and RSR are error indexes, and PBIAS was satisfactory or better for sediment, TP, and NO3-N, the calibration results were considered satisfactory.
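For readers unfamiliar with these statistics, the sketch below computes them using their commonly used definitions. The equations used in this study are not reproduced in this section, so the formulas here are an assumption; the PBIAS sign convention (negative values indicate overprediction) matches the usage in the text. The observed and simulated series are hypothetical values chosen only for illustration.

```python
import math

def _sums(obs, sim):
    """Return (sum of squared errors, sum of squared deviations of obs from its mean)."""
    mean_obs = sum(obs) / len(obs)
    sse = sum((o - s) ** 2 for o, s in zip(obs, sim))
    ssd = sum((o - mean_obs) ** 2 for o in obs)
    return sse, ssd

def nse(obs, sim):
    """Nash-Sutcliffe efficiency: 1 is a perfect fit."""
    sse, ssd = _sums(obs, sim)
    return 1.0 - sse / ssd

def rsr(obs, sim):
    """RMSE divided by the standard deviation of the observations: 0 is a perfect fit."""
    sse, ssd = _sums(obs, sim)
    return math.sqrt(sse) / math.sqrt(ssd)

def pbias(obs, sim):
    """Percent bias: negative means the model overpredicts, positive means it underpredicts."""
    return 100.0 * sum(o - s for o, s in zip(obs, sim)) / sum(obs)

def r_squared(obs, sim):
    """Square of the Pearson correlation coefficient between obs and sim."""
    mo, ms = sum(obs) / len(obs), sum(sim) / len(sim)
    cov = sum((o - mo) * (s - ms) for o, s in zip(obs, sim))
    var_o = sum((o - mo) ** 2 for o in obs)
    var_s = sum((s - ms) ** 2 for s in sim)
    return cov ** 2 / (var_o * var_s)

# Hypothetical monthly values, not data from this study.
observed = [10.0, 20.0, 30.0, 40.0]
simulated = [12.0, 18.0, 33.0, 41.0]

print(f"R2    = {r_squared(observed, simulated):.3f}")
print(f"NSE   = {nse(observed, simulated):.3f}")
print(f"PBIAS = {pbias(observed, simulated):.1f}%")
print(f"RSR   = {rsr(observed, simulated):.3f}")
```

In this hypothetical series the simulated total exceeds the observed total, so PBIAS is negative, which corresponds to overprediction.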

The PBIAS statistics indicated an overprediction of total flow during the calibration period (negative biases) and underprediction during the validation period (positive biases) at the Colt site (Figure 3). A high underprediction of total flow occurred during March 2002, whereas high overprediction was observed during October 1998 and 1999, June 2000, and May 2004 (Figure 3). In contrast, total, surface, and base flow were overpredicted during both the calibration and validation periods (negative biases) at the Palestine site. The only exception, an underprediction of total and base flow at the Palestine site, was in November 2009 (Figure 4). The underprediction and overprediction are attributed to the SWAT model's inability to simulate individual storm events, as it is designed for long-term simulation. It has been reported that the inability of the input rainfall data to completely reflect the actual spatial variability of rain could lead to errors in predicting flow [33,54]. Other sources that could lead to the overprediction or underprediction of flow comprise a combination of measurement error, systematic error, model uncertainty, subjective judgment, and inherent randomness [55,56,57].

Sediment was underpredicted by SWAT for the calibration and validation periods at the Colt site. Sediment underprediction during March 2002 is tied to flow underprediction for the same month (Figure 5). As P binds with sediment and is transported with it, TP was also underpredicted for the calibration and validation periods, similar to sediment. Overpredicted peaks were observed for NO3-N (October 1999 and November 2004) during the calibration and validation periods, while NO3-N was underpredicted for February 2002. In general, the calibration of NO3-N is often difficult, resulting in poor simulations [58]. Errors in baseflow predictions can also be expected to propagate to NO3-N. Overall, the model was considered satisfactory due to the robustness of the multisite, multivariable, and multiobjective calibration and validation strategy.

3.2. Comparison of Pre-CP and Post-CP Scenarios

Since water quality losses in the near future are of interest, simulations were run for five years (2013 to 2017) past the model calibration and validation period. The statistical weather calculator available in SWAT was used for preparing the projected weather data files. Paired t-tests were performed at a monthly scale to test differences between the predicted losses (i.e., sediment, TP, and TN) at each HRU comparing pre-CP and post-CP datasets, as well as each pair of CPs.
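As an illustration of the paired comparison described above, the sketch below computes a paired t statistic from matched pre-CP and post-CP values using only the Python standard library. The monthly losses are hypothetical and are not results from this study, and the function name `paired_t` is illustrative. The critical value 2.571 is the two-sided 5% value of Student's t for 5 degrees of freedom.

```python
import math
from statistics import mean, stdev

def paired_t(before, after):
    """Return (t statistic, degrees of freedom) for paired samples."""
    if len(before) != len(after):
        raise ValueError("paired samples must have the same length")
    diffs = [b - a for b, a in zip(before, after)]
    n = len(diffs)
    # Standard error of the mean difference uses the sample standard deviation.
    t_stat = mean(diffs) / (stdev(diffs) / math.sqrt(n))
    return t_stat, n - 1

# Hypothetical monthly losses for one HRU (same months, same units).
pre_cp = [5.0, 6.0, 7.0, 8.0, 9.0, 10.0]
post_cp = [4.0, 4.5, 5.5, 6.0, 7.5, 7.5]

t_stat, dof = paired_t(pre_cp, post_cp)
T_CRIT_5PCT_DF5 = 2.571  # two-sided critical value for alpha = 0.05, df = 5

print(f"t = {t_stat:.3f}, df = {dof}")
print("significant at 0.05" if abs(t_stat) > T_CRIT_5PCT_DF5 else "not significant at 0.05")
```

In practice a library routine such as `scipy.stats.ttest_rel` returns the p-value directly; the manual version is shown only to make the calculation explicit.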

It should be noted that the pre-CP scenario reflects no CP implementation in the LRW. For the post-CP scenario, each CP was simulated separately in SWAT. The simulated average annual sediment and nutrient losses exiting the HRUs under each CP (post-CP) were compared with the losses under the pre-CP scenario. The relative percentage load reduction associated with the selected CPs compared to the pre-CP scenario is shown in Figure 6. The authors used a relative comparison criterion in which a CP with a greater total percentage reduction (the sum of the percentage reductions of sediment, total phosphorus, and total nitrogen) receives a higher ranking.

In general, filter strips are used to reduce nutrient losses from upland areas of the watershed. The filter strip was the most effective CP in reducing predicted TN loss (40%) and the second most effective in reducing predicted TP loss (43%; Figure 6). The filter strip scenario results reported in this study fall within the ranges of sediment, TP, and TN reduction from 22 published studies reported by White and Arnold [59]. Parajuli, Mankin, and Barnes [60] reported 63% sediment reduction with the target filter strip scenario, which is a little higher than what was observed in this study. Critical area planting was the most effective CP at reducing predicted sediment (80%) and predicted TP loss (58%), and the second most effective at reducing predicted TN (16%; Figure 6). Santhi, Srinivasan, Arnold, and Williams [42] and Tuppad, Santhi, Srinivasan, and Williams [61] also reported high reductions in predicted sediment, TP, and TN losses (98–100% sediment reduction, 90–99% TP reduction, 82–99% TN reduction) with the critical area planting scenario.

As grade stabilization structures work to increase deposition in upstream channel reaches, these structures were most effective where channel degradation causes erosion and deteriorates water quality. Grade stabilization structures decreased predicted sediment loss (36%) to a relatively greater extent than predicted TP (5%) and predicted TN (2%; Figure 6). Tuppad, Santhi, Srinivasan, and Williams [61] and Arabi, Frankenberger, Engel, and Arnold [62] also reported high reductions in sediment (71–74%) with a grade stabilization structure scenario.

Irrigation land leveling resulted in a decrease in the HRU slope and slope length, which in turn influenced surface transport processes. As sediment and P are affected the most by surface processes, with N transport mainly a subsurface flow process, irrigation land leveling was more effective in reducing predicted sediment (52%; second most effective among CPs) and predicted TP (37%) loss compared with predicted TN (7%) loss. Kannan, Omani, and Miranda [63] also found a higher percentage of sediment reduction (42.4%) compared with TP and TN reduction, with the simulation of irrigation land leveling CP.

Irrigation pipeline, irrigation water management, and nutrient reduction CPs were found to be less effective in decreasing nutrient and sediment loading in the LRW compared to the other selected CPs. Irrigation pipeline CPs reduced sediment, TP, and TN by 4%, 3%, and 8%, respectively, and irrigation water management reduced them by 3%, 2%, and 5%, respectively. Kannan, Omani, and Miranda [63] also reported similar results: a reduction of 3% in sediment, 4.3% in TP, and 11.9% in TN with the simulation of irrigation CPs.

Nutrient reduction decreased TP and TN loss by 4% and 14%, respectively, but increased sediment loss slightly (5%; Figure 6) compared with pre-CP losses. This reduction in N and P losses with the adoption of nutrient reduction is consistent with the function of this CP, which is to decrease the rate of nutrients applied and to apply them at a time when there is a lower risk of runoff or leaching. The slight increase in sediment loss (5%; Figure 6) with nutrient reduction compared with the pre-CP scenario may have resulted from lower crop yields as a consequence of lower N and P applications. The lower crop yields (overall 8% reduced yield) likely resulted in less vegetative cover, and thus more erodible exposed soil surfaces. This yield reduction was contrary to the expected impact of properly applied nutrient reduction, which is intended to eliminate excess nutrient application, not to reduce yield [64].

In summary, the variability in CP performance in this study supports previous reports that CP effectiveness is dependent on watershed characteristics, placement strategy, and CP type [61,65,66].

In decreasing order of overall effectiveness, the CPs were: critical area planting, filter strip, irrigation land leveling, grade stabilization structure, irrigation pipeline, nutrient reduction, and irrigation water management. A paired t-test performed at a monthly scale to compare predicted losses at each HRU between the pre-CP and post-CP datasets indicated significant differences (p < 0.05) in sediment and nutrient loadings. In addition, paired t-tests between losses from each pair of CPs also revealed significant differences (p < 0.05).
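The ranking criterion (the sum of the percentage reductions in sediment, TP, and TN) can be checked against the reductions quoted in the text. The sketch below is illustrative only: the filter strip is omitted because its sediment reduction is not stated in the text (only TP, 43%, and TN, 40%), and the sediment increase under nutrient reduction is entered as a negative reduction.

```python
# Percentage reductions (sediment, TP, TN) as quoted in the text.
# A negative value denotes an increase relative to the pre-CP scenario.
reductions = {
    "critical area planting": (80, 58, 16),
    "irrigation land leveling": (52, 37, 7),
    "grade stabilization structure": (36, 5, 2),
    "irrigation pipeline": (4, 3, 8),
    "nutrient reduction": (-5, 4, 14),
    "irrigation water management": (3, 2, 5),
}

# Ranking criterion: larger total percentage reduction ranks higher.
totals = {cp: sum(values) for cp, values in reductions.items()}
ranking = sorted(totals, key=totals.get, reverse=True)

for rank, cp in enumerate(ranking, start=1):
    print(f"{rank}. {cp}: total reduction = {totals[cp]}%")
```

The resulting order of these six CPs agrees with the order given in the text once the filter strip is set aside.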

Cumulative nutrient and sediment loss curves were developed at the HRU level to visualize the effectiveness of CPs (Figure 7). Only one CP, i.e., irrigation land leveling, is shown in Figure 7 for sediment, TP, and TN. White, Storm, Busteed, Stoodley, and Phillips [67] presented several such curves to show the variation of sediment and nutrient losses with respect to the HRU area. The cumulative nutrient and sediment curves were sorted with respect to the contributing watershed area (HRU area). The contributing watershed area (%) was arranged in ascending order, and sediment and nutrient losses (tons or kg) were plotted with respect to the contributing watershed area. This allowed the comparison of pre-CP and post-CP losses from the same HRU (Figure 7). It is evident that cumulative sediment, TP, and TN loss with CPs in place was less than that with no CPs for the same contributing watershed area. For instance, the post-CP sediment losses were approximately 35,000 tons compared to the pre-CP sediment losses of approximately 75,000 tons. As the contributing watershed area increased, the difference in loadings between the scenarios with and without the CP became larger for sediment than for TP and TN (Figure 7). The trend for TP was similar to that of sediment because of the ability of P to bind to and be transported with sediments. Moreover, sediment and TP are dominated by surface processes, as opposed to TN, which is dominated by underground processes. The reductions obtained were higher for sediments (52%), followed by TP (37%) and TN (7%). Kannan, Omani, and Miranda [63] also reported that the sediment reduction from irrigation land leveling CP (42%) was higher than that obtained for TN (35%).
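One way to construct cumulative loss curves of this kind is sketched below. It is an interpretation of the procedure described above rather than the authors' script: HRUs are sorted by area in ascending order, and the running percentage of area is paired with the running loss. The HRU areas and losses are hypothetical, and `cumulative_curve` is an illustrative name.

```python
from itertools import accumulate

def cumulative_curve(hrus):
    """hrus: list of (area, loss) tuples. Returns [(cumulative area %, cumulative loss), ...]."""
    ordered = sorted(hrus, key=lambda h: h[0])  # ascending HRU area
    total_area = sum(area for area, _ in ordered)
    cum_area = accumulate(area for area, _ in ordered)
    cum_loss = accumulate(loss for _, loss in ordered)
    return [(100.0 * a / total_area, l) for a, l in zip(cum_area, cum_loss)]

# Hypothetical HRUs: (area in ha, sediment loss in tons); same HRUs in both scenarios.
pre_cp = [(30.0, 9.0), (10.0, 2.0), (60.0, 20.0)]
post_cp = [(30.0, 5.0), (10.0, 1.0), (60.0, 9.0)]

pre_curve = cumulative_curve(pre_cp)
post_curve = cumulative_curve(post_cp)

for (pct, pre_loss), (_, post_loss) in zip(pre_curve, post_curve):
    print(f"{pct:5.1f}% of area: pre-CP = {pre_loss:.1f} t, post-CP = {post_loss:.1f} t")

# Reduction implied by the approximate totals quoted in the text (75,000 vs 35,000 tons).
quoted_reduction = 100.0 * (75_000 - 35_000) / 75_000
print(f"reduction from quoted totals = {quoted_reduction:.1f}%")
```

Because both scenarios contain the same HRUs, sorting by area aligns the two curves point by point, so the vertical gap at any cumulative area is the loss avoided by the CP. The approximate totals quoted in the text imply a reduction of about 53%, close to the 52% reported for sediment.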