1. Introduction

In 1991, The Welding Institute (TWI) of the United Kingdom invented friction stir welding (FSW), a solid-state joining technique in which the material being welded does not melt or recast [1]. FSW is considered to be the most significant advance in metal joining processes of the past decade. FSW was initially applied to aluminum alloys [2]. In the FSW process, heat is generated by friction between the plate surface and the contact surface of a specially designed tool. The tool has two fundamental components, the shoulder and the pin. The shoulder is responsible for generating heat and keeping the plasticized material within the weld zone. The quality of a welded joint depends on its ultimate tensile strength (UTS), so the relevant process parameters must be selected and controlled carefully to achieve the required strength. Mathematical models that characterize the link between input parameters and output variables can be combined with a number of prediction techniques to estimate the intended output variables.

Reference [3] introduced a new algorithm for determining the optimal friction stir welding parameters to enhance the tensile strength of a weld seam formed of semisolid material (SSM) ADC 12 aluminum. The welding parameters included rotational speed, welding speed, tool tilt, tool pin profile, and rotational direction. The method is a variable neighborhood strategy adaptive search (VaNSAS) technique. Using the optical spectrum and an extreme gradient boosting (XGBoost) decision tree, Reference [4] investigated the regression prediction of the laser welding seam strength of an aluminum–lithium alloy used in rocket fuel tanks. Reference [5] reported the effects of extrusion settings and heat treatment on the microstructures and mechanical properties of the weld seam. According to [6], weld performance depends on the direction of the weld, and transverse and diagonal welds are frequently employed. Reference [7] observed abnormal grain growth (AGG) at the longitudinal welds of profiles during extrusion and solution treatment. Fine equiaxed grains with copper orientation were generated in the welding area when extruding at low temperature and low extrusion speed, and some of these grains changed into recrystallization textures as the deformation temperature increased. As described in Reference [8], the LTT effect was obtained by in situ alloying of dissimilar conventional filler wires with the base material, obviating the need for special manufacture of an LTT wire. Two distinct material combinations produced the LTT effect. These studies make clear that the mechanical properties, particularly the ultimate tensile strength, depend on a number of parameters that are prone to error when real welding is performed in industry. After FSW has been performed at the welding site, the question arises as to whether the welding quality matches the quality expected when the welding parameters were established.

The question is whether the weld seam produced by FSW can indicate the welding quality, especially the UTS. Using digital image correlation (DIC), Reference [9] conducted a tensile test with the loading direction perpendicular to the weld seam to characterize the local strain distribution. For the recrystallized alloy, the weld seam region was mechanically stronger than the surrounding region, while the opposite was true for the unrecrystallized alloy. The link between the variation in the weld seam and the tensile shear strength of laser-welded galvanized steel lap joints has also been examined in order to determine whether weld seam variation can serve as an estimate of joining quality. By training various neural networks, Reference [10] proposed a model to estimate the joint strength (quality) of continuously ultrasonic welded TCs. Reference [11] examined various quality parameters for longitudinal seam welding in aluminum profiles extruded using porthole dies.

The analysis of prior studies indicates that the UTS or other mechanical properties of the FSW weld seam can be predicted from features of the weld seam. Extraction of features from the weld seam for various welding types and aspects has been the subject of research. The paper [12] describes a deep learning method for extracting weld seam features. The proposed approach is able to deal with images with substantial noise. Reference [13] describes a detection model for weld seam features with an extraction method based on the improved target detection model CenterNet. A Faster R-CNN network model was presented by [14] to identify surface imperfections of the weld seam. By incorporating the feature pyramid network (FPN) structure, a variable convolution network, and a background suppression function into the classic Faster R-CNN network, the path between the lower layer and the upper layer is significantly shortened, and location information is retained more effectively. Reference [15] presents an inline weld depth control system based on OCT keyhole depth monitoring. The system can autonomously execute inline control of the deep penetration welding process based solely on the set weld depth target. Its performance was demonstrated on various aluminum alloys and at varying penetration depths. In addition, the control’s responsiveness to unanticipated external disturbances was evaluated.

Despite an extensive review of previous literature, we were unable to find research that directly predicts the UTS from the weld seam or a mechanism for classifying the quality of the UTS without harming the weld seam. Given the advancement of image processing tools and techniques, we believe that the weld seam, which is the direct result of FSW, should be able to indicate the UTS. In this study, we created a deep learning model to categorize the quality of the welding. Adapted from Mishra [16], this study classifies the quality of welding into three distinct groups, defined by the UTS of the weld seam as a percentage of the UTS of the base material: (1) UTS less than 70%, (2) UTS between 70% and 85%, and (3) UTS greater than 85% of the base material.
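The three-class rule can be written down directly. The following is a minimal sketch, assuming the class is decided purely by the ratio of weld UTS to base-material UTS; the function name, the class labels, and the handling of values that fall exactly on 70% or 85% are illustrative assumptions, not details given in the text.

```python
def classify_uts(weld_uts_mpa: float, base_uts_mpa: float) -> str:
    """Map a weld's UTS to a quality class relative to the base material.

    Assumption: a ratio of exactly 0.70 or 0.85 is assigned to the
    "moderate" class; the text does not say how boundaries are handled.
    """
    if base_uts_mpa <= 0:
        raise ValueError("base_uts_mpa must be positive")
    ratio = weld_uts_mpa / base_uts_mpa
    if ratio < 0.70:
        return "low"       # class (1): UTS below 70% of the base material
    if ratio <= 0.85:
        return "moderate"  # class (2): UTS between 70% and 85%
    return "high"          # class (3): UTS above 85%


# Hypothetical values, chosen only to exercise each branch.
for weld_uts in (60.0, 80.0, 90.0):
    print(weld_uts, classify_uts(weld_uts, base_uts_mpa=100.0))
```

Because the rule depends only on the ratio, the same function applies to any base material once its UTS is known.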

Setting the parameters in FSW can introduce errors, and quality can vary. Verifying the quality of FSW can be costly, and the weld is damaged during UTS testing. Analyzing a photograph to predict the UTS class can save cost and time when classifying FSW UTS.

Deep learning models [17,18,19,20,21,22,23,24] have recently made significant strides in computer vision, particularly in medical image interpretation, as a result of their ability to automatically learn complex, high-level features from images. Consequently, a number of studies have used these models to classify histopathological images of breast cancer [17]. Because of their capacity to share information across multiple levels within one deep learning model, convolutional neural networks (CNNs) [25] are extensively employed for image-related tasks. A range of CNN-based architectures have been proposed in recent years; AlexNet [20] was one of the first deep CNNs to achieve strong performance in the 2012 ImageNet Large Scale Visual Recognition Challenge (ILSVRC). Subsequently, the VGG architecture pioneered the idea of employing deeper networks with smaller convolution filters and ranked second in the 2014 ILSVRC competition. Multiple stacked smaller convolution layers can form an effective receptive field and are used in more recently proposed pre-trained models, including the Inception network and the residual neural network (ResNet) [18].
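The benefit of stacking smaller convolution layers can be checked with simple arithmetic. The sketch below assumes stride-1 convolutions with identical square kernels and no pooling in between; the function names are illustrative.

```python
def stacked_receptive_field(kernel_size: int, num_layers: int) -> int:
    """Receptive field (in pixels, one dimension) of stacked stride-1 convolutions."""
    return 1 + num_layers * (kernel_size - 1)


def weights_per_channel_pair(kernel_size: int, num_layers: int) -> int:
    """Kernel weights per input/output channel pair, ignoring biases."""
    return num_layers * kernel_size * kernel_size


# Two stacked 3x3 layers cover the same 5x5 window as one 5x5 layer,
# but use 18 weights per channel pair instead of 25.
print(stacked_receptive_field(3, 2), weights_per_channel_pair(3, 2))
print(stacked_receptive_field(5, 1), weights_per_channel_pair(5, 1))
```

The stacked variant also inserts an extra nonlinearity between the layers, which a single larger kernel does not provide.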

Many studies have attempted to predict or improve FSW UTS with welding parameters. Srichok et al. [26] introduced a revamped version of the differential evolution algorithm to determine optimal friction stir welding parameters. Dutta et al. [27] studied the process of gas tungsten arc welding using both conventional regression analysis and neural network-based techniques. They discovered that the performance of ANN was superior to that of regression analysis. Okuyucu et al. [28] investigated the feasibility of using neural networks to determine the mechanical behavior of FSW aluminum plates by evaluating process data such as speed of rotation and welding rate. Mishra [16] studied two supervised machine learning algorithms for predicting the ultimate tensile strength (MPa) and weld joint efficiency of friction stir-welded joints. Several techniques, including thermography processing [29], contour plots of image intensity [30], and support vector machines for image reconstruction and blob detection [31], have been used to detect the failures of FSW.

To the best of our knowledge, ensemble deep learning has not been used to categorize the quality of FSW weld seams, even though such a model would make it possible to evaluate the UTS of weld seams without resorting to destructive testing. This study therefore makes the following contributions:

- (1) An approach outlining the first ensemble deep learning model capable of classifying weld seam quality without destroying the tested material;
- (2) A determination of UTS quality using the proposed model and a single weld seam image;
- (3) A weld seam dataset was created so that an effective UTS prediction model could be designed.

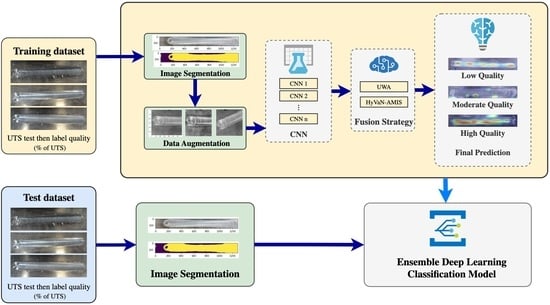

Figure 1 shows a comparison of the proposed method with the traditional weld seam UTS testing method. In the traditional method, the specimen is cut out and then tested with the UTS testing machine, while the proposed model uses an image of the weld seam to determine UTS quality.

The remaining sections of the study are organized as follows. Section 2 addresses comparable research in sentiment feature engineering and classification algorithms. In Section 3, we describe two feature set strategies and offer a framework for ensemble classification. The results of the experiments are presented and analyzed in Section 4. Section 5 discusses the findings, and in Section 6 we draw conclusions and suggest research subjects for the future.

5. Discussion

According to Section 4.1 and Section 4.2, the proposed model components contributed to an improvement in classification accuracy. Image segmentation improves classification accuracy by 17.18% compared to models without image segmentation. The results of [61] indicate that threshold segmentation can improve breast cancer image classification quality. Segmentation improves the solution because it extracts only the components that are essential for grouping the images into categories. Classification accuracy therefore improves as a result of a greater emphasis on the images’ essential characteristics. This is further supported by [62,63,64].
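To make the idea of threshold segmentation concrete, the following is a minimal sketch of a global intensity threshold. It is not the segmentation procedure used in this study: the mean-intensity default, the function name, and the use of plain nested lists (chosen to keep the example dependency-free) are all assumptions for illustration.

```python
from typing import List, Optional

Image = List[List[int]]  # grayscale image as rows of pixel intensities (0-255)


def threshold_segment(image: Image, threshold: Optional[float] = None) -> Image:
    """Keep pixels brighter than the threshold and set all others to 0."""
    if threshold is None:
        # Assumption: fall back to the mean intensity of the image.
        pixels = [p for row in image for p in row]
        threshold = sum(pixels) / len(pixels)
    return [[p if p > threshold else 0 for p in row] for row in image]


# Hypothetical 2x2 image: the mean is 115, so only the bright column survives.
seam = [[10, 200], [30, 220]]
print(threshold_segment(seam))
```

Pixels that are suppressed no longer contribute to the features a classifier learns, which is the mechanism the paragraph above describes.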

Image augmentation is a crucial component that can increase the accuracy of UTS classification from a weld seam image. According to the results presented earlier, image augmentation improves the quality of the solution by 2.17% compared to a model that does not use it. Augmentation increases the size of the training dataset, and in general the accuracy of a model increases as the number of images used to train it grows. This conclusion is supported by [65,66], which demonstrate that image augmentation is an efficient strategy for improving solution quality.
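The way augmentation enlarges a training set can be sketched with a few geometric transforms. The specific transforms below (flips and a 180-degree rotation) are assumptions for illustration; the text does not state which augmentation operations were applied, and whether a given transform preserves the UTS class label would need to be checked for real weld seam images.

```python
from typing import List

Image = List[List[int]]  # grayscale image as rows of pixel intensities


def flip_horizontal(image: Image) -> Image:
    """Mirror the image left to right."""
    return [row[::-1] for row in image]


def flip_vertical(image: Image) -> Image:
    """Mirror the image top to bottom."""
    return [row[:] for row in image[::-1]]


def rotate_180(image: Image) -> Image:
    """Rotate by 180 degrees (a horizontal flip followed by a vertical flip)."""
    return flip_vertical(flip_horizontal(image))


def augment(image: Image) -> List[Image]:
    """Return a copy of the original plus three transformed variants."""
    original = [row[:] for row in image]
    return [original, flip_horizontal(image), flip_vertical(image), rotate_180(image)]


variants = augment([[1, 2], [3, 4]])
print(len(variants))  # one input image yields four training images
```

Each variant inherits the label of its source image, so the dataset grows without any additional welding or tensile testing.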

The image segmentation and augmentation techniques (ISAT) are used to decrease the noise that can occur in classification models, and applying ISAT efficiently improves the final classification result. As stated previously, the model using ISAT improves solution quality by 2.17% to 17.18% compared to the model that does not use ISAT. This occurs as a result of the reduction in noise in the image and data structures brought about by these two processes. The robust deblur generative adversarial network (DeblurGAN) was suggested by [67] to improve the quality of target images by eliminating image noise. Numerous types of noise, such as low resolution, Gaussian noise, low contrast, and blur, degrade identification performance, and the computational results of [67] demonstrated that the strategy may improve accuracy on the target problem. Reference [68] proposed ResNet50-LSTM for reducing the noise in food photographs, and its results are exceptional when compared to existing state-of-the-art methods. The results of these two studies corroborate our conclusion that ISAT, as a noise reduction strategy, can improve the quality of the classification model.

The computational results presented in Table 10 and Table 11 reveal that the heterogeneous ensemble structure of the various CNN designs provides a superior solution to the homogeneous ensemble structures. As demonstrated in [69], the classification of acoustic emission (AE) signals is improved by using a heterogeneous machine learning model. According to this study’s findings, the heterogeneous model provides a solution that is approximately 5.21% better than that supplied by homogeneous structures. This finding is supported by numerous studies, including [70,71,72]. Moreover, Gupta and Bhavsar [73] proposed a similar improvement technique for their human epithelial cell image classification work, which achieved an accuracy of 86.03% when tested on fresh large-scale data encompassing 63,000 samples. Heterogeneous structures outperform homogeneous structures because they combine the advantages of different CNN architectures to create more effective and robust ensemble models. For instance, the weaknesses of one CNN architecture can be compensated for by the strengths of another.
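How several CNNs can compensate for one another is easiest to see in the fusion step. The sketch below uses plain weighted averaging of class probabilities; this is a deliberately simple stand-in and is not the HyVaN-AMIS decision fusion used in this study. The class labels, weights, and probability values are hypothetical.

```python
from typing import List, Sequence

CLASSES = ("low", "moderate", "high")  # assumed labels for the three UTS classes


def fuse_predictions(
    probabilities: Sequence[Sequence[float]], weights: Sequence[float]
) -> List[float]:
    """Weighted average of per-model class probabilities."""
    if len(probabilities) != len(weights):
        raise ValueError("one weight is required per model")
    total = sum(weights)
    if total <= 0:
        raise ValueError("weights must sum to a positive value")
    fused = [0.0] * len(probabilities[0])
    for probs, weight in zip(probabilities, weights):
        for i, p in enumerate(probs):
            fused[i] += weight * p / total
    return fused


def predict_class(fused: Sequence[float]) -> str:
    """Return the label with the highest fused probability."""
    best = max(range(len(fused)), key=lambda i: fused[i])
    return CLASSES[best]


# Hypothetical outputs of three different CNN architectures for one image.
model_outputs = [[0.6, 0.3, 0.1], [0.2, 0.5, 0.3], [0.1, 0.6, 0.3]]
print(predict_class(fuse_predictions(model_outputs, [1.0, 1.0, 1.0])))
```

With equal weights, the two models that favor the second class outvote the first model; an optimized fusion scheme would instead learn the weights so that more reliable architectures count for more.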

Figure 11 shows the accuracy obtained from various types of CNN architecture, including the heterogeneous architectures. The data shown in Figure 11 are taken from the information given in Table 9. According to [74], ensemble learning is an essential procedure that provides robustness and greater precision than a single model. Their proposed model is a snapshot ensemble convolutional neural network (CNN). In addition, they suggested the dropCyclic learning rate schedule, which uses a step decay to lower the learning rate in each learning epoch. dropCyclic can reduce the learning rate and locate a new local minimum in the following cycle. Their ensemble model makes use of three CNN backbone architectures: MobileNet-V2, VGG16, and VGG19. The results indicate that the dropCyclic classification approach achieved greater classification precision than other existing methods. These findings support our research’s conclusion. Using a newly created decision fusion approach, which is one of the most essential procedures for improving classification quality [69], can improve the quality of the answer in comparison to typical fusion techniques.

This study presents the first classification model of UTS based on weld seam appearance. The model given in [38] for predicting the UTS of friction stir welding is based on supervised machine learning regression and classification. The method entails conducting friction stir welding experiments with varying levels of controlled parameters, including (1) tool traverse speed (mm/min), (2) tool rotational speed (RPM), and (3) axial force. The final result approximates the UTS that will occur when using different levels of (1), (2), and (3). However, although the results in the article demonstrate excellent accuracy, it is unknown whether the UTS result will be the same when performing FSW with more than three parameters, and whether the prediction model can then be relied upon. References [75,76] provide a comparable paradigm and methodology.

Figure 12 is a Grad-CAM image of a weld seam derived from an experiment with a three-class classification training dataset. Grad-CAM uses the gradients of the classification score with respect to the final convolutional feature map to identify the image regions that have the largest impact on the classification score. The regions where this gradient is strongest are those that influence the final score the most. The figure shows that the model first evaluates the welding joint area (welding line). From Figure 12, a low-quality weld seam is identified mostly from the middle of the weld seam in the photograph. If this region exhibits a poor joining appearance, the model classifies the image as “low-quality weld seam: low UTS”. For moderate- and high-quality weld seams (high UTS), the model examines the edge of the weld seam. If it finds an abnormality, “moderate quality of weld seam” is assigned to that image, and if it finds no sign of abnormality, “high quality of weld seam” is assigned. The classification of a weld seam image thus relies on several regions, which corresponds to the results discussed in [77,78].
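For reference, the gradient weighting described above can be written compactly in the standard Grad-CAM formulation. Here $y^{c}$ is the score for class $c$, $A^{k}$ is the $k$-th feature map of the final convolutional layer, and $Z$ is the number of spatial positions in that map; this notation is introduced here for illustration and does not appear elsewhere in the text.

```latex
\alpha_k^{c} = \frac{1}{Z} \sum_{i} \sum_{j} \frac{\partial y^{c}}{\partial A_{ij}^{k}},
\qquad
L^{c}_{\text{Grad-CAM}} = \operatorname{ReLU}\!\left( \sum_{k} \alpha_k^{c} A^{k} \right)
```

The ReLU keeps only the regions that push the class score upward, which is why the highlighted areas in a Grad-CAM image can be read as evidence for the assigned class.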

In contrast to the three studies discussed above, we are not interested in the input parameters, but rather in the end outcome of welding. The weld seam is used to determine whether the UTS is of low, moderate, or high grade. The number of parameters and the level of each parameter are no longer essential for classifying welding quality. Because the UTS class is defined relative to the UTS of the base material, the only thing that matters is which material is being welded. This method can be used in real-world welding when FSW has been completed and it is unknown whether the weld quality is satisfactory. The proposed model can provide an answer without destroying any samples.

Table 12 displays the outcome of an experiment conducted to validate the accuracy of the proposed model on new specimens. We conducted the experiment by randomly selecting 15 sets of parameters from Table 2. The UTS was then tested using the same procedure as described previously. The weld seam images of these 15 specimens were extracted, and the proposed classification model was applied to classify the UTS. All 15 specimens were categorized correctly. Six, five, and four samples were categorized as having UTS values between 194.45 and 236.10 MPa, less than 194.45 MPa, and greater than 236.10 MPa, respectively. The outcome of the actual UTS test matches the classification model result exactly. This indicates that the weld seam image alone can be used to estimate the UTS of the weld seam. Even though the current model cannot predict the exact UTS value (it classifies the UTS within a range), it serves as an excellent starting point for research that can predict UTS without destroying the specimen. In the actual working environment, it is generally not feasible to cut a specimen from the weld seam for UTS testing after welding is completed. This research can assist the welder in ensuring that the quality of the weld meets specifications without harming the weld seam.
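The two class limits quoted above can be cross-checked against the 70% and 85% class boundaries given in the Introduction. The short sketch below back-computes the base-material UTS implied by each limit; the function name is illustrative, and the base-material UTS itself is not stated in this section.

```python
def implied_base_uts(limit_mpa: float, fraction: float) -> float:
    """Base-material UTS implied by a class limit and its fraction of base UTS."""
    if not 0 < fraction <= 1:
        raise ValueError("fraction must be in (0, 1]")
    return limit_mpa / fraction


base_from_low = implied_base_uts(194.45, 0.70)   # limit of the low class
base_from_high = implied_base_uts(236.10, 0.85)  # limit of the high class
print(round(base_from_low, 2), round(base_from_high, 2))  # 277.79 277.76
```

Both limits imply a base-material UTS of roughly 277.8 MPa, so the MPa ranges reported here are consistent with the percentage-based class definitions.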

Table 12 provides evidence that the weld seam appearance can be used to determine the UTS of the FSW. This is consistent with [79,80]. According to [79], the typical surface appearance of FSW is a succession of partly circular ripples that point toward the beginning of the weld. During traversal, the final sweep of the trailing circumferential edge of the shoulder creates these essentially cycloidal ripples. The distance between ripples is governed by the tool’s rotational speed and the traverse rate of the workpiece: the spacing increases as the traverse rate increases or as the rotational speed decreases. Strictly, the combined relative motion is a superior trochoid, which is a cycloid with a high degree of overlap between successive revolutions. Under optimal conditions, the surface color of aluminum alloys is often silvery-white. Reference [80] carried out friction spot welding of A5052 aluminum with polyethylene terephthalate (PET). The investigation indicated that the molten PET evaporated to produce bubbles around the joining interface, which then cooled to form hollows. The bubbles have two opposing effects: their presence at the joining interface prevents PET from coming into touch with A5052, but bubbles or hollows are crack sources that generate crack paths, hence reducing the joining strength. It therefore follows that the appearance and surface characteristics of the weld seam can reflect the mechanical properties of the FSW, which agrees with our finding.
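The dependence of ripple spacing on the two tool speeds can be stated as a one-line relation, assuming one ripple is formed per tool revolution. The symbols $\lambda$ (ripple spacing), $v$ (traverse rate), and $\omega$ (rotational speed), as well as the numerical values, are introduced here only for illustration.

```latex
\lambda = \frac{v}{\omega},
\qquad
\text{e.g. } v = 100\ \text{mm/min},\ \omega = 1000\ \text{rev/min}
\;\Rightarrow\; \lambda = 0.1\ \text{mm}
```

Under this assumption, doubling the traverse rate or halving the rotational speed doubles the spacing, which is one way the surface texture of the seam carries information about the process conditions.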

In addition, a deep learning approach may be used to work backward from the UTS class obtained by the classification model to the FSW process parameters. The data obtained in Section 3.1 provide the parameter sets. The UTS is treated as the input, while the set of parameters is treated as the output, and the resulting model is used to estimate the set of parameters from the UTS obtained. This is one form of multi-label prediction model, comparable to the research proposed in [81], which predicts macroscopic traffic stream variables such as speed and flow for traffic operation and management in an Intelligent Transportation System (ITS), using as input parameters weather conditions such as fog, precipitation, and snowfall that affect driver visibility, vehicle mobility, and road capacity.

6. Conclusions and Outlook

In this study, we developed an ensemble deep learning model for classifying the UTS of FSW weld seams from weld seam images. We produced 1664 weld seam images by varying 11 types of input factors in order to obtain a diverse set of weld seam images. The optimal model consisted of four components: (1) image segmentation, (2) image augmentation, (3) the use of a heterogeneous CNN structure, and (4) the use of HyVaN-AMIS as the decision fusion technique.

The computational results revealed that the proposed model’s overall average accuracy for classifying the UTS of the weld seam was 96.23%. Under our controlled execution of FSW, the weld seam can therefore be used to classify UTS with an average accuracy of 96.23%. When examining the model in depth, we found that image segmentation, image augmentation, the use of a heterogeneous CNN structure, and the use of HyVaN-AMIS improved the quality of the solution by 17.18%, 2.17%, 5.21%, and 4.04%, respectively.

The proposed model was compared to state-of-the-art CNN architectures from the computer science literature, which are typically used to identify various problems in medical and agricultural images. We adapted these CNN architectures to classify the UTS of the weld seam images, and the computational results demonstrate that the proposed model provides a 0.85% to 7.88% more accurate classification than eight state-of-the-art methods, namely MobileNet-v2, DenseNet121, EfficientNetB1, ResNet101-v2, InceptionResnet-v2, NasNetMobile, EfficientNetV2B1, and EfficientNetV2S.

Future studies should aim to build a more accurate classification model. Improving image segmentation is one procedure that can significantly increase accuracy. Because real images from diverse sources, taken with different cameras, under different light and temperature conditions, and by photographers of differing skill, may vary in quality, robust image segmentation is necessary to future-proof the classification model. In addition, it cannot be assured that the proposed model will continue to perform well when the base material is changed; consequently, additional weld seam images from different base materials must be evaluated and incorporated into the model.