A Feedback Optimal Control Algorithm with Optimal Measurement Time Points

Abstract

1. Introduction

2. On the Estimation, Control, and Design Problems

2.1. Nonlinear Dynamic Systems

2.2. State and Parameter Estimation Problems

2.3. Optimal Control Problems

2.4. Optimal Experimental Design Problems

Algorithm 1 OED

Input: Fixed p and u, initial values, possible measurement times
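The sketch below makes the inputs of Algorithm 1 concrete. With p and u held fixed, it scores sets of candidate measurement times by the A-criterion, the trace of the inverse Fisher information matrix (FIM). The A-criterion is one common choice and not necessarily the criterion used in this paper. Several assumptions are not taken from the text above:

- a four-parameter Lotka-Volterra fishing model with constants 0.4 and 0.2 and x(0) = (0.5, 0.7), as in the usual benchmark formulation;
- both states measured with unit noise;
- finite-difference sensitivities instead of the variational equations;
- brute-force enumeration of subsets instead of a continuous NLP, which is viable only for tiny grids.

All function names are hypothetical.

```python
import itertools
import math

# Assumed (hypothetical) parametrization of the Lotka-Volterra fishing model:
#   x1' =  p1*x1 - p2*x1*x2 - C0*x1*u
#   x2' = -p3*x2 + p4*x1*x2 - C1*x2*u
C0, C1 = 0.4, 0.2


def rhs(x, p, u):
    x1, x2 = x
    return (p[0] * x1 - p[1] * x1 * x2 - C0 * x1 * u,
            -p[2] * x2 + p[3] * x1 * x2 - C1 * x2 * u)


def simulate(p, u, x0, times, h=0.01):
    """Fixed-step RK4 from t = 0; returns the state at each (sorted) time in `times`."""
    states, x, t = [], tuple(x0), 0.0
    for target in times:
        while t < target - 1e-12:
            dt = min(h, target - t)
            k1 = rhs(x, p, u(t))
            k2 = rhs([xi + dt / 2 * k for xi, k in zip(x, k1)], p, u(t + dt / 2))
            k3 = rhs([xi + dt / 2 * k for xi, k in zip(x, k2)], p, u(t + dt / 2))
            k4 = rhs([xi + dt * k for xi, k in zip(x, k3)], p, u(t + dt))
            x = tuple(xi + dt / 6 * (a + 2 * b + 2 * c + d)
                      for xi, a, b, c, d in zip(x, k1, k2, k3, k4))
            t += dt
        states.append(x)
    return states


def sensitivities(p, u, x0, times, eps=1e-6):
    """Central finite differences: S[i][k][j] = d x_k(t_i) / d p_j."""
    n = len(p)
    per_param = []
    for j in range(n):
        up = [pj + (eps if i == j else 0.0) for i, pj in enumerate(p)]
        dn = [pj - (eps if i == j else 0.0) for i, pj in enumerate(p)]
        xu, xd = simulate(up, u, x0, times), simulate(dn, u, x0, times)
        per_param.append([[(a - b) / (2 * eps) for a, b in zip(su, sd)]
                          for su, sd in zip(xu, xd)])
    return [[[per_param[j][i][k] for j in range(n)] for k in range(2)]
            for i in range(len(times))]


def fim(S, chosen):
    """FIM = sum over chosen time indices of S_i^T S_i (unit measurement noise assumed)."""
    n = len(S[0][0])
    F = [[0.0] * n for _ in range(n)]
    for i in chosen:
        for row in S[i]:  # one row per measured state
            for a in range(n):
                for b in range(n):
                    F[a][b] += row[a] * row[b]
    return F


def trace_inverse(F):
    """A-criterion trace(F^-1) via Gauss-Jordan elimination; inf if F is singular."""
    n = len(F)
    A = [row[:] + [float(i == j) for j in range(n)] for i, row in enumerate(F)]
    for c in range(n):
        piv = max(range(c, n), key=lambda r: abs(A[r][c]))
        if abs(A[piv][c]) < 1e-12:
            return math.inf
        A[c], A[piv] = A[piv], A[c]
        scale = A[c][c]
        A[c] = [v / scale for v in A[c]]
        for r in range(n):
            if r != c:
                f = A[r][c]
                A[r] = [vr - f * vc for vr, vc in zip(A[r], A[c])]
    return sum(A[i][n + i] for i in range(n))


def a_optimal_times(S, candidates, budget):
    """Brute force: choose `budget` candidate times minimizing trace(F^-1)."""
    best = min(itertools.combinations(range(len(candidates)), budget),
               key=lambda idx: trace_inverse(fim(S, idx)))
    return [candidates[i] for i in best], trace_inverse(fim(S, best))


# Usage: fixed parameter guess and fixed control (no fishing), 12 candidate times.
p_nom = [1.0, 1.0, 1.0, 1.0]
u = lambda t: 0.0
candidates = [float(k) for k in range(1, 13)]
S = sensitivities(p_nom, u, (0.5, 0.7), candidates)
times, crit = a_optimal_times(S, candidates, budget=4)
print(times, crit)
```

Because the equidistant subset is one of the enumerated candidates, the selected design can never score worse than it under this criterion.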

3. A Feedback Optimal Control Algorithm With Optimal Measurement Times

Algorithm 2 FOCoed

Input: Initial guess, initial values, possible measurement times

Initialize sampling counter, measurement grid counter, and “current time”

while stopping criterion not fulfilled do
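The loop structure of Algorithm 2 can be sketched as a driver that takes the subproblem solvers as callables. From the variant descriptions in Section 4.3, Step 1 solves the OC problem and Step 2 the OED problem. The remaining steps are an assumption about how the feedback is closed: apply the control until the first planned measurement time, measure, re-estimate (SPE), and repeat. The stopping criterion "end of horizon reached" is also assumed. The function name and its interface are hypothetical.

```python
from typing import Any, Callable, List, Sequence, Tuple


def focoed_loop(
    p_hat: Any,
    x0: Any,
    candidate_times: Sequence[float],
    t_end: float,
    solve_oc: Callable,   # Step 1: (p_hat, x, t) -> control u on [t, t_end]
    solve_oed: Callable,  # Step 2: (p_hat, u, x, t, cands) -> chosen times, sorted
    plant: Callable,      # (x, u, t, t_next) -> (state, measurement) at t_next
    estimate: Callable,   # SPE: (data, p_hat) -> updated parameter estimate
) -> Tuple[Any, List[Tuple[float, Any]]]:
    """Skeleton of a feedback loop with OED-chosen measurement times (hypothetical)."""
    t, x = 0.0, x0
    data: List[Tuple[float, Any]] = []
    while t < t_end:  # assumed stopping criterion: end of horizon reached
        remaining = [tc for tc in candidate_times if t < tc <= t_end]
        if not remaining:
            break
        u = solve_oc(p_hat, x, t)                       # re-plan control with current estimate
        planned = solve_oed(p_hat, u, x, t, remaining)  # measurement times for fixed p_hat, u
        if not planned:
            break
        t_next = planned[0]                             # only the first planned time is used
        x, y = plant(x, u, t, t_next)                   # apply u until t_next, then measure
        data.append((t_next, y))
        p_hat = estimate(data, p_hat)                   # update estimate with all data so far
        t = t_next
    return p_hat, data


# Usage with trivial stand-ins: OED keeps every remaining candidate,
# the "estimate" simply counts the measurements collected so far.
p_final, data = focoed_loop(
    p_hat=0, x0=None, candidate_times=[1.0, 2.0, 3.0], t_end=3.0,
    solve_oc=lambda p, x, t: "u",
    solve_oed=lambda p, u, x, t, cands: list(cands),
    plant=lambda x, u, t, t_next: (x, t_next),
    estimate=lambda d, p: len(d),
)
```

Re-solving both subproblems after every measurement is what makes the scheme a feedback method: later measurement times are planned with the updated estimate rather than the initial guess.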

3.1. Finite Support Designs

3.2. Robustification

4. Numerical Examples

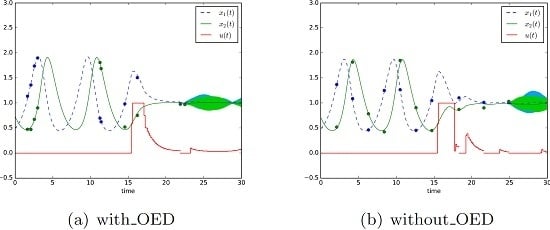

4.1. Lotka-Volterra Fishing Benchmark Problem

4.2. Software and Experimental Settings

4.3. Three Versions of Algorithm FOCoed Applied to the Lotka-Volterra Fishing Problem

- with_OED. This is Algorithm 2 as stated, with measurement time points taken from the non-robust OED problem.

- without_OED. The OED problem in Step 2 of Algorithm 2 is omitted, and an equidistant time grid is used for measurements.

- with_r_OED. The OC problem in Step 1 and the OED problem in Step 2 of Algorithm 2 are replaced with their robust counterparts as described in Section 3.2.
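The three variants differ only in which subproblems are solved in Steps 1 and 2, so they can be encoded as a small configuration. The names `Variant`, `VARIANTS`, and `equidistant_grid` are hypothetical. The grid convention (n points in (t0, tf], ending at tf) is an assumption, since the text does not state how the equidistant grid is placed.

```python
from dataclasses import dataclass
from typing import Dict, List


@dataclass(frozen=True)
class Variant:
    robust_oc: bool  # Step 1: solve the robust counterpart of the OC problem?
    oed: str         # Step 2: "nominal", "robust", or "none" (equidistant grid)


VARIANTS: Dict[str, Variant] = {
    "with_OED": Variant(robust_oc=False, oed="nominal"),
    "without_OED": Variant(robust_oc=False, oed="none"),
    "with_r_OED": Variant(robust_oc=True, oed="robust"),
}


def equidistant_grid(t0: float, tf: float, n: int) -> List[float]:
    """n equally spaced measurement times in (t0, tf]; the last one is tf (assumed)."""
    step = (tf - t0) / n
    return [t0 + step * (k + 1) for k in range(n)]


print(equidistant_grid(0.0, 12.0, 4))  # [3.0, 6.0, 9.0, 12.0]
```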

4.4. Analyzing Finite Support Designs of Optimal Experimental Design Problems

4.5. Discussion

5. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| FIM | Fisher Information Matrix |
| MPC | Model Predictive Control |
| NLP | Nonlinear Program |
| OC | Optimal Control |
| ODE | Ordinary Differential Equation |
| OED | Optimal Experimental Design |
| SPE | State and Parameter Estimation |


At t = 15

| OC | A (with_r_OED): value | A: variance | B (with_OED): value | B: variance | C (without_OED): value | C: variance | A vs. C (%) | B vs. C (%) | A vs. B (%) |
|---|---|---|---|---|---|---|---|---|---|
| 1.000 | 1.0074 | 0.0003377 | 0.9925 | 0.0003300 | 1.0293 | 0.0005090 | 33.65 | 35.17 | -2.33 |
| 1.000 | 1.0085 | 0.0005540 | 0.9954 | 0.0005404 | 1.0267 | 0.0005313 | -4.27 | -1.71 | -2.52 |
| 1.000 | 0.9935 | 0.0005861 | 1.0073 | 0.0006063 | 0.9758 | 0.0006139 | 4.53 | 1.24 | 3.33 |
| 1.000 | 0.9959 | 0.0006466 | 1.0053 | 0.0006635 | 0.9762 | 0.0008780 | 26.36 | 24.43 | 2.55 |

The percentage columns are relative variance reductions; for example, A vs. C = 100 · (variance of C − variance of A) / variance of C.

At t = 30

| OC | A (with_r_OED): value | A: variance | B (with_OED): value | B: variance | C (without_OED): value | C: variance | A vs. C (%) | B vs. C (%) | A vs. B (%) |
|---|---|---|---|---|---|---|---|---|---|
| 1.000 | 1.0066 | 0.0002414 | 0.9974 | 0.0002418 | 1.0082 | 0.0004214 | 42.71 | 42.62 | 0.17 |
| 1.000 | 1.0065 | 0.0003639 | 1.0004 | 0.0003706 | 1.0069 | 0.0004624 | 21.30 | 19.85 | 1.81 |
| 1.000 | 0.9936 | 0.0003472 | 1.0029 | 0.0003582 | 0.9924 | 0.0005068 | 31.49 | 29.32 | 3.07 |
| 1.000 | 0.9958 | 0.0003575 | 1.0014 | 0.0003764 | 0.9937 | 0.0006837 | 47.71 | 44.95 | 5.02 |

| OC | A (with_r_OED) | B (with_OED) | C (without_OED) | A vs. C (%) | B vs. C (%) | A vs. B (%) |
|---|---|---|---|---|---|---|
| 0.714 | 0.724 | 0.727 | 0.790 | 9.62 | 7.97 | 0.41 |
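The percentage columns of the parameter rows can be reproduced from the variance columns. Each percentage is a relative reduction, computed as 100 · (reference − value) / reference. The check below recomputes the t = 15 triples. It treats the second entry under each variant as the variance, which is an interpretation of the flattened headers. The helper names are hypothetical.

```python
def relative_reduction(reference: float, value: float) -> float:
    """Percent by which `value` is smaller than `reference` (negative if larger)."""
    return 100.0 * (reference - value) / reference


def comparison_columns(a: float, b: float, c: float):
    """(A vs. C, B vs. C, A vs. B) in percent, rounded to two decimals."""
    return (round(relative_reduction(c, a), 2),
            round(relative_reduction(c, b), 2),
            round(relative_reduction(b, a), 2))


# (A, B, C) variances at t = 15, transcribed from the table
T15 = [
    (0.0003377, 0.0003300, 0.0005090),
    (0.0005540, 0.0005404, 0.0005313),
    (0.0005861, 0.0006063, 0.0006139),
    (0.0006466, 0.0006635, 0.0008780),
]

for row in T15:
    print(comparison_columns(*row))  # first line: (33.65, 35.17, -2.33)
```

Negative entries, as in the second parameter row at t = 15, mean that the OED-based variant ended with a larger variance than the reference.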

© 2017 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license ( http://creativecommons.org/licenses/by/4.0/).


Jost, F.; Sager, S.; Le, T.T.-T. A Feedback Optimal Control Algorithm with Optimal Measurement Time Points. Processes 2017, 5, 10. https://doi.org/10.3390/pr5010010
