Assessment of Aircraft Engine Blade Inspection Performance Using Attribute Agreement Analysis

Abstract

1. Introduction

2. Literature Review

3. Materials and Methods

3.1. Research Objective and Methodology

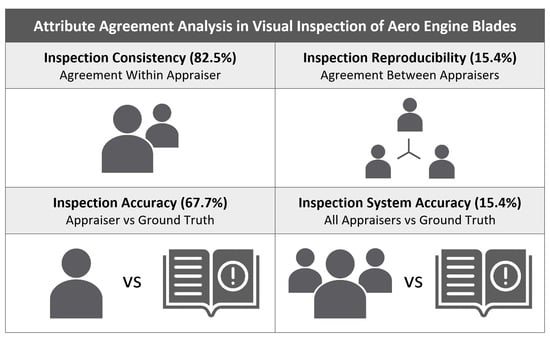

- How accurately is each operator making a serviceability decision, i.e., do they detect all defects, and do they know the difference between a defect and a condition?

- How consistently do operators inspect blades, i.e., do they come to the same serviceability decision when inspecting the same blade twice?

- How reproducible are the inspection results, i.e., do different operators make the same serviceability decision when inspecting the same blade?

- How accurate is the inspection system, i.e., do the decisions on which all operators agree match the ground truth?
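The four questions correspond to four percentage-agreement counts. They differ only in which appraisers' decisions are pooled and whether a ground truth is imposed. The sketch below illustrates that mapping. It is not the authors' software: the data layout (`ratings[a][t][b]`), the `"S"`/`"U"` labels and all function names are assumptions made for the example. A blade counts as matched only if every pooled decision is identical and, where a ground truth is given, equal to it.

```python
from typing import Optional, Sequence

# Hypothetical layout: ratings[a][t][b] is appraiser a's decision in trial t
# on blade b, coded "S" (serviceable) or "U" (unserviceable).
Ratings = Sequence[Sequence[Sequence[str]]]


def percent_matched(ratings: Ratings, appraisers: Sequence[int],
                    truth: Optional[Sequence[str]] = None) -> float:
    """Percentage of blades on which every decision by the listed appraisers,
    across all their trials, is identical (and equals truth[b] if truth is given)."""
    n_blades = len(ratings[appraisers[0]][0])
    matched = 0
    for b in range(n_blades):
        calls = {trial[b] for a in appraisers for trial in ratings[a]}
        if len(calls) == 1 and (truth is None or truth[b] in calls):
            matched += 1
    return 100.0 * matched / n_blades


def consistency(ratings: Ratings, a: int) -> float:
    """Q2: does appraiser a repeat the same decision on the same blade?"""
    return percent_matched(ratings, [a])


def accuracy(ratings: Ratings, a: int, truth: Sequence[str]) -> float:
    """Q1: are all of appraiser a's repeated decisions correct?"""
    return percent_matched(ratings, [a], truth)


def reproducibility(ratings: Ratings) -> float:
    """Q3: do all appraisers reach the same decision on each blade?"""
    return percent_matched(ratings, range(len(ratings)))


def system_accuracy(ratings: Ratings, truth: Sequence[str]) -> float:
    """Q4: do the decisions that all appraisers agree on match the ground truth?"""
    return percent_matched(ratings, range(len(ratings)), truth)


# Toy data: 2 appraisers x 2 trials x 4 blades (illustrative only).
truth = ["U", "S", "S", "U"]
ratings = [
    [["U", "S", "S", "S"], ["U", "S", "U", "S"]],  # appraiser 0, trials 1 and 2
    [["U", "S", "S", "U"], ["U", "S", "S", "U"]],  # appraiser 1, trials 1 and 2
]
print(consistency(ratings, 0), accuracy(ratings, 0, truth))       # 75.0 50.0
print(reproducibility(ratings), system_accuracy(ratings, truth))  # 50.0 50.0
```

A blade on which all appraisers are correct is necessarily a blade on which they all agree. Under this definition, system accuracy can therefore never exceed reproducibility.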

3.2. Research Sample

3.3. Research Population

3.4. Experimental Setup and Data Collection

3.5. Attribute Agreement Analysis

3.6. Kappa Analysis

4. Results

4.1. Appraiser Consistency and Reproducibility

4.2. Appraiser and Inspection System Accuracy

4.3. Assessment of the Expertise Factor

5. Discussion

5.1. Summary of Results and Comparison to Other Studies

5.1.1. Attribute Agreement Results

5.1.2. Kappa Results

5.1.3. False Negative Results

5.1.4. Effect of Appraiser Number on the AAA Results

5.2. Implications for Practitioners

5.3. Limitations

5.4. Future Work

6. Conclusions

Author Contributions

Funding

Institutional Review Board Statement

Informed Consent Statement

Data Availability Statement

Acknowledgments

Conflicts of Interest

Appendix A

| Appraiser | Number Inspected | Number Matched | Agreement Percentage | 95% CI |
|---|---|---|---|---|
| 1 | 26 | 26 | 100.00 | (89.12, 100.00) |
| 2 | 26 | 22 | 84.62 | (65.13, 95.64) |
| 3 | 26 | 20 | 76.92 | (56.35, 91.03) |
| 4 | 26 | 24 | 92.31 | (74.87, 99.05) |
| 5 | 26 | 22 | 84.62 | (65.13, 95.64) |
| 6 | 26 | 21 | 80.77 | (60.65, 93.45) |
| 7 | 26 | 19 | 73.08 | (52.21, 88.43) |
| 8 | 26 | 20 | 76.92 | (56.35, 91.03) |
| 9 | 26 | 24 | 92.31 | (74.87, 99.05) |
| 10 | 26 | 17 | 65.38 | (44.33, 82.79) |
| 11 | 26 | 18 | 69.23 | (48.21, 85.67) |
| 12 | 26 | 21 | 80.77 | (60.65, 93.45) |
| 13 | 26 | 22 | 84.62 | (65.13, 95.64) |
| 14 | 26 | 23 | 88.46 | (69.85, 97.55) |
| 15 | 26 | 25 | 96.15 | (80.36, 99.90) |
| 16 | 26 | 17 | 65.38 | (44.33, 82.79) |
| 17 | 26 | 18 | 69.23 | (48.21, 85.67) |
| 18 | 26 | 21 | 80.77 | (60.65, 93.45) |
| 19 | 26 | 20 | 76.92 | (56.35, 91.03) |
| 20 | 26 | 18 | 69.23 | (48.21, 85.67) |
| 21 | 26 | 21 | 80.77 | (60.65, 93.45) |
| 22 | 26 | 24 | 92.31 | (74.87, 99.05) |
| 23 | 26 | 23 | 88.46 | (69.85, 97.55) |
| 24 | 26 | 14 | 53.85 | (33.37, 73.41) |
| 25 | 26 | 24 | 92.31 | (74.87, 99.05) |
| 26 | 26 | 25 | 96.15 | (80.36, 99.90) |
| 27 | 26 | 18 | 69.23 | (48.21, 85.67) |
| 28 | 26 | 21 | 80.77 | (60.65, 93.45) |
| 29 | 26 | 23 | 88.46 | (69.85, 97.55) |
| 30 | 26 | 19 | 73.08 | (52.21, 88.43) |
| 31 | 26 | 24 | 92.31 | (74.87, 99.05) |
| 32 | 26 | 22 | 84.62 | (65.13, 95.64) |
| 33 | 26 | 20 | 76.92 | (56.35, 91.03) |
| 34 | 26 | 21 | 80.77 | (60.65, 93.45) |
| 35 | 26 | 26 | 100.00 | (89.12, 100.00) |
| 36 | 26 | 23 | 88.46 | (69.85, 97.55) |
| 37 | 26 | 21 | 80.77 | (60.65, 93.45) |
| 38 | 26 | 23 | 88.46 | (69.85, 97.55) |
| 39 | 26 | 25 | 96.15 | (80.36, 99.90) |
| 40 | 26 | 26 | 100.00 | (89.12, 100.00) |
| 41 | 26 | 25 | 96.15 | (80.36, 99.90) |
| 42 | 26 | 13 | 50.00 | (29.93, 70.07) |
| 43 | 26 | 23 | 88.46 | (69.85, 97.55) |
| 44 | 26 | 17 | 65.38 | (44.33, 82.79) |
| 45 | 26 | 26 | 100.00 | (89.12, 100.00) |
| 46 | 26 | 24 | 92.31 | (74.87, 99.05) |
| 47 | 26 | 22 | 84.62 | (65.13, 95.64) |
| 48 | 26 | 23 | 88.46 | (69.85, 97.55) |
| 49 | 26 | 19 | 73.08 | (52.21, 88.43) |
| 50 | 26 | 20 | 76.92 | (56.35, 91.03) |
| Average | 26 | 21.5 | 82.54 | (79.29, 85.79) |
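The per-appraiser intervals appear consistent with exact (Clopper-Pearson) binomial intervals on the matched count. The exception is the 100% rows, which use a one-sided 95% lower bound: 0.05^(1/26) ≈ 0.8912. The sketch below reproduces that behaviour using only the standard library. It is an interpretation of the table, not the authors' stated method. The function names are hypothetical, and the Average-row interval is obtained in some other way that the source does not describe.

```python
import math


def binom_cdf(k: int, n: int, p: float) -> float:
    """P(X <= k) for X ~ Binomial(n, p)."""
    return sum(math.comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(k + 1))


def _solve(g, target: float, increasing: bool, iters: int = 60) -> float:
    """Bisection on [0, 1] for the p where the monotone function g(p) equals target."""
    lo, hi = 0.0, 1.0
    for _ in range(iters):
        mid = (lo + hi) / 2
        if (g(mid) < target) == increasing:
            lo = mid  # root lies to the right of mid
        else:
            hi = mid  # root lies to the left of mid
    return (lo + hi) / 2


def clopper_pearson(x: int, n: int, conf: float = 0.95) -> tuple[float, float]:
    """Exact binomial CI for x matches out of n.
    Assumption: at 0% or 100% a one-sided bound is used, as the table's 100% rows suggest."""
    alpha = 1 - conf
    if x == n:
        return alpha ** (1 / n), 1.0
    if x == 0:
        return 0.0, 1 - alpha ** (1 / n)
    # Lower bound: P(X >= x | p) = alpha/2, which increases with p.
    lower = _solve(lambda p: 1 - binom_cdf(x - 1, n, p), alpha / 2, increasing=True)
    # Upper bound: P(X <= x | p) = alpha/2, which decreases with p.
    upper = _solve(lambda p: binom_cdf(x, n, p), alpha / 2, increasing=False)
    return lower, upper


for matched in (26, 13):
    lo, hi = clopper_pearson(matched, 26)
    print(f"{matched}: ({100 * lo:.2f}, {100 * hi:.2f})")
# 26: (89.12, 100.00)
# 13: (29.93, 70.07)
```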

| Appraiser | Number Inspected | Number Matched | Agreement Percentage | 95% CI |
|---|---|---|---|---|
| 1 | 26 | 14 | 53.85 | (33.37, 73.41) |
| 2 | 26 | 17 | 65.38 | (44.33, 82.79) |
| 3 | 26 | 18 | 69.23 | (48.21, 85.67) |
| 4 | 26 | 20 | 76.92 | (56.35, 91.03) |
| 5 | 26 | 18 | 69.23 | (48.21, 85.67) |
| 6 | 26 | 18 | 69.23 | (48.21, 85.67) |
| 7 | 26 | 16 | 61.54 | (40.57, 79.77) |
| 8 | 26 | 18 | 69.23 | (48.21, 85.67) |
| 9 | 26 | 22 | 84.62 | (65.13, 95.64) |
| 10 | 26 | 15 | 57.69 | (36.92, 76.65) |
| 11 | 26 | 16 | 61.54 | (40.57, 79.77) |
| 12 | 26 | 18 | 69.23 | (48.21, 85.67) |
| 13 | 26 | 18 | 69.23 | (48.21, 85.67) |
| 14 | 26 | 17 | 65.38 | (44.33, 82.79) |
| 15 | 26 | 18 | 69.23 | (48.21, 85.67) |
| 16 | 26 | 17 | 65.38 | (44.33, 82.79) |
| 17 | 26 | 15 | 57.69 | (36.92, 76.65) |
| 18 | 26 | 16 | 61.54 | (40.57, 79.77) |
| 19 | 26 | 14 | 53.85 | (33.37, 73.41) |
| 20 | 26 | 18 | 69.23 | (48.21, 85.67) |
| 21 | 26 | 19 | 73.08 | (52.21, 88.43) |
| 22 | 26 | 22 | 84.62 | (65.13, 95.64) |
| 23 | 26 | 18 | 69.23 | (48.21, 85.67) |
| 24 | 26 | 14 | 53.85 | (33.37, 73.41) |
| 25 | 26 | 19 | 73.08 | (52.21, 88.43) |
| 26 | 26 | 21 | 80.77 | (60.65, 93.45) |
| 27 | 26 | 16 | 61.54 | (40.57, 79.77) |
| 28 | 26 | 14 | 53.85 | (33.37, 73.41) |
| 29 | 26 | 18 | 69.23 | (48.21, 85.67) |
| 30 | 26 | 17 | 65.38 | (44.33, 82.79) |
| 31 | 26 | 16 | 61.54 | (40.57, 79.77) |
| 32 | 26 | 18 | 69.23 | (48.21, 85.67) |
| 33 | 26 | 20 | 76.92 | (56.35, 91.03) |
| 34 | 26 | 19 | 73.08 | (52.21, 88.43) |
| 35 | 26 | 22 | 84.62 | (65.13, 95.64) |
| 36 | 26 | 21 | 80.77 | (60.65, 93.45) |
| 37 | 26 | 16 | 61.54 | (40.57, 79.77) |
| 38 | 26 | 21 | 80.77 | (60.65, 93.45) |
| 39 | 26 | 23 | 88.46 | (69.85, 97.55) |
| 40 | 26 | 20 | 76.92 | (56.35, 91.03) |
| 41 | 26 | 20 | 76.92 | (56.35, 91.03) |
| 42 | 26 | 11 | 42.31 | (23.35, 63.08) |
| 43 | 26 | 18 | 69.23 | (48.21, 85.67) |
| 44 | 26 | 10 | 38.46 | (20.23, 59.43) |
| 45 | 26 | 20 | 76.92 | (56.35, 91.03) |
| 46 | 26 | 16 | 61.54 | (40.57, 79.77) |
| 47 | 26 | 19 | 73.08 | (52.21, 88.43) |
| 48 | 26 | 18 | 69.23 | (48.21, 85.67) |
| 49 | 26 | 15 | 57.69 | (36.92, 76.65) |
| 50 | 26 | 16 | 61.54 | (40.57, 79.77) |
| Average | 26 | 17.6 | 67.69 | (64.82, 70.56) |

| Appraiser | False Positives (FP) | FP Rate (%) | False Negatives (FN) | FN Rate (%) | Mixed | Imprecision (%) |
|---|---|---|---|---|---|---|
| 1 | 12 | 66.67 | 0 | 0.00 | 0 | 0.00 |
| 2 | 5 | 27.78 | 0 | 0.00 | 4 | 15.38 |
| 3 | 2 | 11.11 | 0 | 0.00 | 6 | 23.08 |
| 4 | 4 | 22.22 | 0 | 0.00 | 2 | 7.69 |
| 5 | 0 | 0.00 | 4 | 50.00 | 4 | 15.38 |
| 6 | 3 | 16.67 | 0 | 0.00 | 5 | 19.23 |
| 7 | 1 | 5.56 | 2 | 25.00 | 7 | 26.92 |
| 8 | 2 | 11.11 | 0 | 0.00 | 6 | 23.08 |
| 9 | 2 | 11.11 | 0 | 0.00 | 2 | 7.69 |
| 10 | 0 | 0.00 | 2 | 25.00 | 9 | 34.62 |
| 11 | 0 | 0.00 | 2 | 25.00 | 8 | 30.77 |
| 12 | 3 | 16.67 | 0 | 0.00 | 5 | 19.23 |
| 13 | 1 | 5.56 | 3 | 37.50 | 4 | 15.38 |
| 14 | 6 | 33.33 | 0 | 0.00 | 3 | 11.54 |
| 15 | 0 | 0.00 | 7 | 87.50 | 1 | 3.85 |
| 16 | 0 | 0.00 | 0 | 0.00 | 9 | 34.62 |
| 17 | 3 | 16.67 | 0 | 0.00 | 8 | 30.77 |
| 18 | 5 | 27.78 | 0 | 0.00 | 5 | 19.23 |
| 19 | 4 | 22.22 | 2 | 25.00 | 6 | 23.08 |
| 20 | 0 | 0.00 | 0 | 0.00 | 8 | 30.77 |
| 21 | 0 | 0.00 | 2 | 25.00 | 5 | 19.23 |
| 22 | 2 | 11.11 | 0 | 0.00 | 2 | 7.69 |
| 23 | 5 | 27.78 | 0 | 0.00 | 3 | 11.54 |
| 24 | 0 | 0.00 | 0 | 0.00 | 12 | 46.15 |
| 25 | 2 | 11.11 | 3 | 37.50 | 2 | 7.69 |
| 26 | 0 | 0.00 | 4 | 50.00 | 1 | 3.85 |
| 27 | 2 | 11.11 | 0 | 0.00 | 8 | 30.77 |
| 28 | 3 | 16.67 | 4 | 50.00 | 5 | 19.23 |
| 29 | 0 | 0.00 | 5 | 62.50 | 3 | 11.54 |
| 30 | 0 | 0.00 | 2 | 25.00 | 7 | 26.92 |
| 31 | 8 | 44.44 | 0 | 0.00 | 2 | 7.69 |
| 32 | 0 | 0.00 | 4 | 50.00 | 4 | 15.38 |
| 33 | 0 | 0.00 | 0 | 0.00 | 6 | 23.08 |
| 34 | 2 | 11.11 | 0 | 0.00 | 5 | 19.23 |
| 35 | 4 | 22.22 | 0 | 0.00 | 0 | 0.00 |
| 36 | 2 | 11.11 | 0 | 0.00 | 3 | 11.54 |
| 37 | 5 | 27.78 | 0 | 0.00 | 5 | 19.23 |
| 38 | 2 | 11.11 | 0 | 0.00 | 3 | 11.54 |
| 39 | 2 | 11.11 | 0 | 0.00 | 1 | 3.85 |
| 40 | 6 | 33.33 | 0 | 0.00 | 0 | 0.00 |
| 41 | 0 | 0.00 | 5 | 62.50 | 1 | 3.85 |
| 42 | 2 | 11.11 | 0 | 0.00 | 13 | 50.00 |
| 43 | 5 | 27.78 | 0 | 0.00 | 3 | 11.54 |
| 44 | 5 | 27.78 | 2 | 25.00 | 9 | 34.62 |
| 45 | 4 | 22.22 | 2 | 25.00 | 0 | 0.00 |
| 46 | 8 | 44.44 | 0 | 0.00 | 2 | 7.69 |
| 47 | 1 | 5.56 | 2 | 25.00 | 4 | 15.38 |
| 48 | 2 | 11.11 | 3 | 37.50 | 3 | 11.54 |
| 49 | 4 | 22.22 | 0 | 0.00 | 7 | 26.92 |
| 50 | 0 | 0.00 | 4 | 50.00 | 6 | 23.08 |
| Average | 2.58 | 14.33 | 1.28 | 16.00 | 4.54 | 17.46 |
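The rates in this table are consistent with 18 serviceable and 8 defective blades among the 26. For example, 12 false positives give 66.67% = 12/18, and 4 false negatives give 50.00% = 4/8. Imprecision is consistent with the share of the 26 blades on which an appraiser's own trials disagreed. Under that reading, each blade falls into exactly one of four outcomes, so each appraiser's Number Matched against ground truth equals 26 − FP − FN − Mixed. The sketch below assumes that a false positive means rejecting a serviceable blade and a false negative means accepting a defective one. The labels, function names and example decisions are hypothetical.

```python
from collections import Counter
from typing import Sequence


def classify_blade(calls: Sequence[str], truth: str) -> str:
    """Classify one appraiser's repeated decisions on one blade.
    Hypothetical labels: "S" = serviceable, "U" = unserviceable."""
    if len(set(calls)) > 1:
        return "mixed"  # the appraiser disagreed with themselves
    if calls[0] == truth:
        return "match"
    # Consistent but wrong: rejecting a serviceable blade is a false positive,
    # accepting a defective blade is a false negative (a missed defect).
    return "FP" if truth == "S" else "FN"


def error_summary(trials: Sequence[Sequence[str]], truth: Sequence[str]) -> dict:
    """trials[t][b] is the decision in trial t on blade b; rates are percentages."""
    n = len(truth)
    outcome = Counter(
        classify_blade([trial[b] for trial in trials], truth[b]) for b in range(n)
    )
    n_serviceable = sum(t == "S" for t in truth)  # FP-rate denominator
    n_defective = n - n_serviceable               # FN-rate denominator
    return {
        "FP": outcome["FP"], "FP rate": 100 * outcome["FP"] / n_serviceable,
        "FN": outcome["FN"], "FN rate": 100 * outcome["FN"] / n_defective,
        "Mixed": outcome["mixed"], "Imprecision": 100 * outcome["mixed"] / n,
        "Matched": outcome["match"],
    }


# Hypothetical decisions constructed to give the same counts as Appraiser 5's row.
truth = ["S"] * 18 + ["U"] * 8
trial_1 = ["S"] * 22 + ["U"] * 4
trial_2 = ["S"] * 14 + ["U"] * 4 + ["S"] * 4 + ["U"] * 4
summary = error_summary([trial_1, trial_2], truth)
print({k: round(v, 2) for k, v in summary.items()})
# {'FP': 0, 'FP rate': 0.0, 'FN': 4, 'FN rate': 50.0, 'Mixed': 4, 'Imprecision': 15.38, 'Matched': 18}
```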

| Appraiser | Number Inspected | Number Matched | Agreement Percentage | 95% CI |
|---|---|---|---|---|
| All | 26 | 4 | 15.38 | (4.36, 34.87) |

| Appraiser | Number Inspected | Number Matched | Agreement Percentage | 95% CI |
|---|---|---|---|---|
| All | 26 | 4 | 15.38 | (4.36, 34.87) |


| Attribute Agreement | Excellent | Acceptable | Unacceptable |
|---|---|---|---|
| Appraiser Consistency | >90% | 80–90% | <80% |
| Agreement between Appraisers | >90% | 80–90% | <80% |
| Appraiser Agreement with Ground Truth | >90% | 80–90% | <80% |
| All Appraisers with Ground Truth | >90% | 80–90% | <80% |
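Applying these bands is a simple threshold rule. For illustration, the sketch applies it to the averaged point estimates reported in the summary-of-results table. The criteria may be intended for individual appraisers rather than for averages. Reading the 80–90% band as inclusive at both ends is an assumption. The function name is hypothetical.

```python
def rate_agreement(percent: float) -> str:
    """Map a percent agreement onto the Excellent/Acceptable/Unacceptable bands.
    Assumption: exactly 80% and exactly 90% fall in the Acceptable band."""
    if percent > 90:
        return "Excellent"
    if percent >= 80:
        return "Acceptable"
    return "Unacceptable"


# Averaged point estimates taken from the summary-of-results table.
results = {
    "Appraiser Consistency": 82.5,
    "Appraiser Accuracy": 67.7,
    "Appraiser Reproducibility": 15.4,
    "All Appraisers Agreement with Ground Truth": 15.4,
}
for metric, pct in results.items():
    print(f"{metric}: {pct}% -> {rate_agreement(pct)}")
```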

| Attribute Metrics | False Positive | False Negative |
|---|---|---|
| Appraiser Agreement with Ground Truth | >75% | >95% |

| Kappa Values | Agreement Class |
|---|---|
| κ > 0.80 | Almost perfect agreement |
| 0.80 ≥ κ > 0.60 | Substantial agreement |
| 0.60 ≥ κ > 0.40 | Moderate agreement |
| 0.40 ≥ κ > 0.20 | Fair agreement |
| 0.20 ≥ κ > 0 | Slight agreement |
| κ ≤ 0 | Poor agreement |
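The kappa section does not state which kappa variant was used. Fleiss' kappa is a common choice in attribute agreement analysis because it handles any number of decisions per blade. The sketch below assumes it. It computes observed agreement from matching decision pairs and chance agreement from the pooled category proportions. It then maps the result onto the bands in the kappa table. The labels, function names and example data are hypothetical.

```python
from collections import Counter
from typing import Sequence


def fleiss_kappa(calls_per_blade: Sequence[Sequence[str]]) -> float:
    """Fleiss' kappa for blades that each received the same number m >= 2 of decisions."""
    n_blades = len(calls_per_blade)
    m = len(calls_per_blade[0])
    counts = [Counter(calls) for calls in calls_per_blade]
    # Observed agreement: per blade, the share of decision pairs that agree.
    p_obs = sum(
        (sum(c * c for c in cnt.values()) - m) / (m * (m - 1)) for cnt in counts
    ) / n_blades
    # Chance agreement: sum of squared pooled category proportions.
    categories = set().union(*counts)
    p_exp = sum(
        (sum(cnt[k] for cnt in counts) / (n_blades * m)) ** 2 for k in categories
    )
    if p_exp == 1.0:
        return float("nan")  # only one category was ever used: kappa is undefined
    return (p_obs - p_exp) / (1 - p_exp)


def landis_koch(kappa: float) -> str:
    """Agreement class using the bands of the kappa table."""
    for threshold, label in [(0.80, "Almost perfect"), (0.60, "Substantial"),
                             (0.40, "Moderate"), (0.20, "Fair"), (0.0, "Slight")]:
        if kappa > threshold:
            return f"{label} agreement"
    return "Poor agreement"


# Hypothetical: one appraiser's two trials on four blades ("S"/"U").
trials_by_blade = [("S", "S"), ("S", "U"), ("U", "U"), ("U", "U")]
k = fleiss_kappa(trials_by_blade)
print(round(k, 3), landis_koch(k))  # 0.467 Moderate agreement
```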

| Metric | Percent Agreement (95% CI) |
|---|---|
| Appraiser Consistency | 82.5 (79.3, 85.8) |
| Appraiser Accuracy | 67.7 (64.8, 70.6) |
| Appraiser Reproducibility | 15.4 (4.4, 34.9) |
| All Appraisers Agreement with Ground Truth | 15.4 (4.4, 34.9) |

| Kappa Value | Agreement Level | Appraisers (Agreement with Themselves), n (%) | Appraisers (Agreement with Ground Truth), n (%) |
|---|---|---|---|
| 1.00 ≥ κ > 0.80 | Almost perfect agreement | 12 (24%) | 0 (0%) |
| 0.80 ≥ κ > 0.60 | Substantial agreement | 14 (28%) | 11 (22%) |
| 0.60 ≥ κ > 0.40 | Moderate agreement | 11 (22%) | 22 (44%) |
| 0.40 ≥ κ > 0.20 | Fair agreement | 7 (14%) | 12 (24%) |
| 0.20 ≥ κ > 0.00 | Slight agreement | 0 (0%) | 4 (8%) |
| κ ≤ 0.00 | Poor agreement | 6 (12%) | 1 (2%) |

| Number of Appraisers | Appraiser Consistency | Appraiser Accuracy | Appraiser Reproducibility | All Appraisers vs. Ground Truth |
|---|---|---|---|---|
| 2 | 76.9% | 63.1% | 65.4% | 57.7% |
| 5 | 81.5% | 63.9% | 30.8% | 30.8% |
| 10 | 82.7% | 66.2% | 23.1% | 23.1% |
| 15 | 79.2% | 64.9% | 23.1% | 23.1% |
| 20 | 80.0% | 69.5% | 23.1% | 23.1% |
| 30 | 80.8% | 69.2% | 15.4% | 15.4% |
| 50 | 82.5% | 67.7% | 15.4% | 15.4% |
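In this table, the per-appraiser averages stay roughly level as appraisers are added, while reproducibility and all-appraiser agreement with ground truth fall. That pattern follows from how the pooled metrics are defined: a blade counts only if every decision by every appraiser matches. Assuming each larger group contains the smaller one, adding appraisers can therefore only remove agreeing blades, never add them. The simulation below illustrates this with made-up data. The 85% per-decision accuracy, the random seed and the function names are arbitrary assumptions, not values from the study.

```python
import random
from typing import Sequence

# ratings[a][t][b]: appraiser a, trial t, blade b (hypothetical layout, simulated data).


def all_agree_percent(ratings, appraisers: Sequence[int]) -> float:
    """Percentage of blades on which all listed appraisers gave one identical decision in all trials."""
    n_blades = len(ratings[0][0])
    hits = sum(
        len({trial[b] for a in appraisers for trial in ratings[a]}) == 1
        for b in range(n_blades)
    )
    return 100.0 * hits / n_blades


def flip(call: str) -> str:
    """Return the opposite serviceability decision."""
    return "U" if call == "S" else "S"


rng = random.Random(42)                          # fixed seed so the run is repeatable
truth = ["S"] * 18 + ["U"] * 8                   # hypothetical 26-blade ground truth
P_CORRECT, N_APPRAISERS, N_TRIALS = 0.85, 50, 2  # arbitrary assumptions

ratings = [
    [[t if rng.random() < P_CORRECT else flip(t) for t in truth] for _ in range(N_TRIALS)]
    for _ in range(N_APPRAISERS)
]
# Nested groups: the first m appraisers, for the group sizes used in the table.
curve = {m: all_agree_percent(ratings, range(m)) for m in (2, 5, 10, 15, 20, 30, 50)}
for m, pct in curve.items():
    print(f"{m:>2} appraisers: {pct:5.1f}% of blades with full agreement")
```

Even if every decision is independently correct 85% of the time, the chance that all 100 decisions on one blade coincide is very small. Pooled agreement for a large group therefore tends toward zero.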


© 2022 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (https://creativecommons.org/licenses/by/4.0/).


Aust, J.; Pons, D. Assessment of Aircraft Engine Blade Inspection Performance Using Attribute Agreement Analysis. Safety 2022, 8, 23. https://doi.org/10.3390/safety8020023
