Machine Learning and AI for Sensors

A topical collection in Sensors (ISSN 1424-8220). This collection belongs to the section "Sensor Networks".

Viewed by 54286


Editors

Prof. Dr. Panagiotis Pintelas



Collection Editor

Department of Mathematics, University of Patras, GR 265-00 Patras, Greece

Interests: software engineering; AI in education; intelligent systems; decision support systems; machine learning; data mining; knowledge discovery


Topical Collection Information

Dear Colleagues,

We invite you to submit your latest research in the area of “Machine Learning and Artificial Intelligence Techniques Employing All Kinds of Sensory Devices”. The application domain of this topic is vast, ranging from smart devices to smart cities and from geoscience and geospatial applications to medical prognosis and treatment.

Recent Machine Learning (ML) and Artificial Intelligence (AI) advances cover areas from zero-shot and single-shot algorithms to very deep and complex neural network algorithms for vast amounts of data.

On the other hand, advances in sensors and sensory technology (local and remote) have had a profound impact on our daily life and on all aspects of current industrial and technological developments. Combining the two areas creates an extremely interesting, challenging, and promising interdisciplinary area of research that opens new research and development opportunities.

The aim of this Topical Collection is to focus on methods for intelligent information acquisition and extraction from imaging sensors, present the most recent advances of the scientific community, and investigate the impact of their application in a diversity of real-world problems on topics such as:

- Sensing systems and intelligence;

- Sensing systems and data mining (DM);

- Machine Learning methods for sensing systems;

- Sensing systems and IoT;

- AI and DM for smart systems;

- Smart homes and smart cities;

- Sensor based geoscience systems and applications;

- Geo-spatial AI/DM applications;

- Remote sensing applications;

- Image sensor applications;

- AI/DM using sensing systems for medical and biomedical prognosis and treatment;

- Ethical issues in the application of AI/DM methods on sensory data;

- Interpretability and explainability issues vs. AI/DM methods on sensory data;

- Intelligent data routing techniques in ad hoc/wireless sensor networks;

- Machine learning applications in IoT and smart sensors;

- Sensors in machine vision of automated systems.

Prof. Dr. Panagiotis E. Pintelas

Ass. Prof. Dr. Sotiris Kotsiantis

Dr. Ioannis E. Livieris

Collection Editors

Manuscript Submission Information

Manuscripts should be submitted online at www.mdpi.com by registering and logging in to this website. Once you are registered, click here to go to the submission form. Manuscripts can be submitted until the deadline. All submissions that pass pre-check are peer-reviewed. Accepted papers will be published continuously in the journal (as soon as accepted) and will be listed together on the collection website. Research articles, review articles as well as short communications are invited. For planned papers, a title and short abstract (about 100 words) can be sent to the Editorial Office for announcement on this website.

Submitted manuscripts should not have been published previously, nor be under consideration for publication elsewhere (except conference proceedings papers). All manuscripts are thoroughly refereed through a single-blind peer-review process. A guide for authors and other relevant information for submission of manuscripts is available on the Instructions for Authors page. Sensors is an international peer-reviewed open access semimonthly journal published by MDPI.

Please visit the Instructions for Authors page before submitting a manuscript.

The Article Processing Charge (APC) for publication in this open access journal is 2600 CHF (Swiss Francs).

Submitted papers should be well formatted and use good English. Authors may use MDPI's English editing service prior to publication or during author revisions.

Published Papers (15 papers)

Open Access Article

Leveraging Deep Learning for Time-Series Extrinsic Regression in Predicting the Photometric Metallicity of Fundamental-Mode RR Lyrae Stars

by

Lorenzo Monti, Tatiana Muraveva, Gisella Clementini and Alessia Garofalo

Viewed by 1122

Abstract


Astronomy is entering an unprecedented era of big-data science, driven by missions like the ESA’s Gaia telescope, which aims to map the Milky Way in three dimensions. Gaia’s vast dataset presents a monumental challenge for traditional analysis methods. The sheer scale of this data exceeds the capabilities of manual exploration, necessitating the utilization of advanced computational techniques. In response to this challenge, we developed a novel approach leveraging deep learning to estimate the metallicity of fundamental mode (ab-type) RR Lyrae stars from their light curves in the Gaia optical G-band. Our study explores applying deep-learning techniques, particularly advanced neural-network architectures, in predicting photometric metallicity from time-series data. Our deep-learning models demonstrated notable predictive performance, with a low mean absolute error (MAE) of 0.0565, the root mean square error (RMSE) of 0.0765, and a high regression performance of 0.9401, measured by cross-validation. The weighted mean absolute error (wMAE) is 0.0563, while the weighted root mean square error (wRMSE) is 0.0763. These results showcase the effectiveness of our approach in accurately estimating metallicity values. Our work underscores the importance of deep learning in astronomical research, particularly with large datasets from missions like Gaia. By harnessing the power of deep-learning methods, we can provide precision in analyzing vast datasets, contributing to more precise and comprehensive insights into complex astronomical phenomena.
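For readers unfamiliar with the error measures quoted in this abstract, the following is a minimal sketch of the standard definitions of MAE, RMSE, and their weighted counterparts. The function names and the per-sample `weights` argument are illustrative assumptions; the abstract does not state how the authors chose their weights, so this is not their implementation.

```python
import math


def mae(y_true, y_pred):
    """Mean absolute error: average of |true - predicted|."""
    return sum(abs(t - p) for t, p in zip(y_true, y_pred)) / len(y_true)


def rmse(y_true, y_pred):
    """Root mean square error: square root of the mean squared residual."""
    return math.sqrt(sum((t - p) ** 2 for t, p in zip(y_true, y_pred)) / len(y_true))


def weighted_mae(y_true, y_pred, weights):
    """Weighted MAE: each absolute residual is scaled by its sample weight.

    The weights are a hypothetical input (for example, inverse measurement
    variance); the abstract does not say which weighting was used.
    """
    total = sum(weights)
    return sum(w * abs(t - p) for t, p, w in zip(y_true, y_pred, weights)) / total


def weighted_rmse(y_true, y_pred, weights):
    """Weighted RMSE: square root of the weighted mean squared residual."""
    total = sum(weights)
    return math.sqrt(
        sum(w * (t - p) ** 2 for t, p, w in zip(y_true, y_pred, weights)) / total
    )
```

With equal weights, the weighted variants reduce to the unweighted ones, which is a quick sanity check when comparing the two pairs of figures.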


Open Access Article

Deep Learning Technology to Recognize American Sign Language Alphabet

by

Bader Alsharif, Ali Salem Altaher, Ahmed Altaher, Mohammad Ilyas and Easa Alalwany

Cited by 11 | Viewed by 6081

Abstract


Historically, individuals with hearing impairments have faced neglect, lacking the necessary tools to facilitate effective communication. However, advancements in modern technology have paved the way for the development of various tools and software aimed at improving the quality of life for hearing-disabled individuals. This research paper presents a comprehensive study employing five distinct deep learning models to recognize hand gestures for the American Sign Language (ASL) alphabet. The primary objective of this study was to leverage contemporary technology to bridge the communication gap between hearing-impaired individuals and individuals with no hearing impairment. The models utilized in this research, namely AlexNet, ConvNeXt, EfficientNet, ResNet-50, and VisionTransformer, were trained and tested using an extensive dataset comprising over 87,000 images of the ASL alphabet hand gestures. Numerous experiments were conducted, involving modifications to the architectural design parameters of the models to obtain maximum recognition accuracy. The experimental results of our study revealed that ResNet-50 achieved an exceptional accuracy rate of 99.98%, the highest among all models. EfficientNet attained an accuracy rate of 99.95%, ConvNeXt achieved 99.51% accuracy, AlexNet attained 99.50% accuracy, while VisionTransformer yielded the lowest accuracy of 88.59%.


Open Access Editorial

Special Issue on Machine Learning and AI for Sensors

by

Panagiotis Pintelas, Sotiris Kotsiantis and Ioannis E. Livieris

Viewed by 1729

Abstract

This article summarizes the works published under the “Machine Learning and AI for Sensors” (https://www [...]


Open Access Article

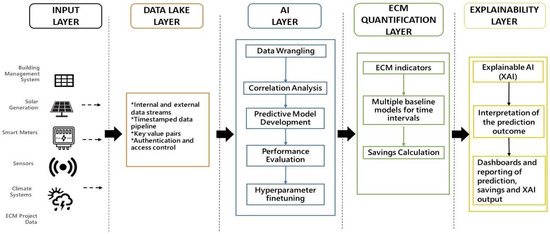

A Robust Artificial Intelligence Approach with Explainability for Measurement and Verification of Energy Efficient Infrastructure for Net Zero Carbon Emissions

by

Harsha Moraliyage, Sanoshi Dahanayake, Daswin De Silva, Nishan Mills, Prabod Rathnayaka, Su Nguyen, Damminda Alahakoon and Andrew Jennings

Cited by 13 | Viewed by 3359

Abstract


Rapid urbanization across the world has led to an exponential increase in demand for utilities, electricity, gas and water. The building infrastructure sector is one of the largest global consumers of electricity and thereby one of the largest emitters of greenhouse gas emissions. Reducing building energy consumption directly contributes to achieving energy sustainability, emissions reduction, and addressing the challenges of a warming planet, while also supporting the rapid urbanization of human society. Energy Conservation Measures (ECM) that are digitalized using advanced sensor technologies are a formal approach that is widely adopted to reduce the energy consumption of building infrastructure. Measurement and Verification (M&V) protocols are a repeatable and transparent methodology to evaluate and formally report on energy savings. As savings cannot be directly measured, they are determined by comparing pre-retrofit and post-retrofit usage of an ECM initiative. Given the computational nature of M&V, artificial intelligence (AI) algorithms can be leveraged to improve the accuracy, efficiency, and consistency of M&V protocols. However, AI has been limited to a singular performance metric based on default parameters in recent M&V research. In this paper, we address this gap by proposing a comprehensive AI approach for M&V protocols in energy-efficient infrastructure. The novelty of the framework lies in its use of all relevant data (pre and post-ECM) to build robust and explainable predictive AI models for energy savings estimation. The framework was implemented and evaluated in a multi-campus tertiary education institution setting, comprising 200 buildings of diverse sensor technologies and operational functions. The results of this empirical evaluation confirm the validity and contribution of the proposed framework for robust and explainable M&V for energy-efficient building infrastructure and net zero carbon emissions.
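The statement that savings "are determined by comparing pre-retrofit and post-retrofit usage" can be illustrated with a generic baseline-model sketch: fit a model on pre-retrofit data, predict what consumption would have been after the retrofit, and subtract the measured consumption. The single outdoor-temperature feature, the linear model, and all names below are assumptions chosen for illustration; this is not the framework proposed in the paper, which the abstract says uses both pre- and post-ECM data.

```python
def fit_line(x, y):
    """Ordinary least squares for y = slope * x + intercept (one feature)."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


def estimated_savings(pre_temp, pre_kwh, post_temp, post_kwh):
    """Savings = predicted baseline usage minus measured post-retrofit usage.

    The baseline is fitted only on pre-retrofit readings (hypothetical
    temperature and kWh series) and then evaluated at post-retrofit
    temperatures, so that weather differences are accounted for.
    """
    slope, intercept = fit_line(pre_temp, pre_kwh)
    baseline = [slope * t + intercept for t in post_temp]
    return sum(b - actual for b, actual in zip(baseline, post_kwh))
```

A positive result means the building used less energy than the baseline model predicts for the same conditions; the quality of that estimate depends entirely on how well the baseline model fits.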


Open Access Article

An IoT-Enabled Platform for the Assessment of Physical and Mental Activities Utilizing Augmented Reality Exergaming

by

Dionysios Koulouris, Andreas Menychtas and Ilias Maglogiannis

Cited by 15 | Viewed by 3274

Abstract


Augmented reality (AR) and Internet of Things (IoT) are among the core technological elements of modern information systems and applications in which advanced features for user interactivity and monitoring are required. These technologies are continuously improving and are available nowadays in all popular programming environments and platforms, allowing for their wide adoption in many different business and research applications. In the fields of healthcare and assisted living, AR is extensively applied in the development of exergames, facilitating the implementation of innovative gamification techniques, while IoT can effectively support the users’ health monitoring aspects. In this work, we present a prototype platform for exergames that combines AR and IoT on commodity mobile devices for the development of serious games in the healthcare domain. The main objective of the solution was to promote the utilization of gamification techniques to boost the users’ physical activities and to assist the regular assessment of their health and cognitive statuses through challenges and quests in the virtual and real world. With the integration of sensors and wearable devices by design, the platform has the capability of real-time monitoring the users’ biosignals and activities during the game, collecting data for each session, which can be analyzed afterwards by healthcare professionals. The solution was validated in real world scenarios and the results were analyzed in order to further improve the performance and usability of the prototype.


Open Access Article

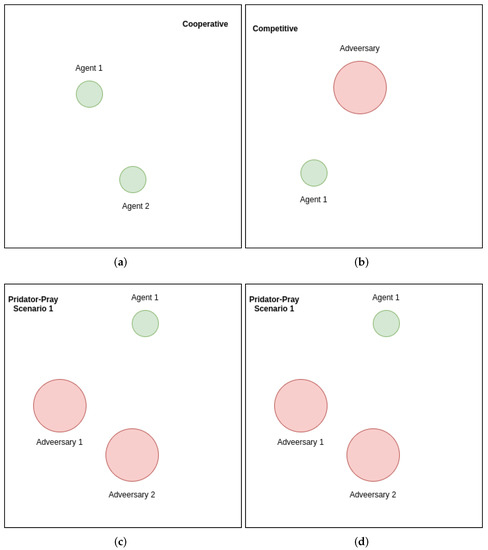

Multi-Agent Reinforcement Learning via Adaptive Kalman Temporal Difference and Successor Representation

by

Mohammad Salimibeni, Arash Mohammadi, Parvin Malekzadeh and Konstantinos N. Plataniotis

Cited by 3 | Viewed by 2351

Abstract


Development of distributed Multi-Agent Reinforcement Learning (MARL) algorithms has attracted an increasing surge of interest lately. Generally speaking, conventional Model-Based (MB) or Model-Free (MF) RL algorithms are not directly applicable to the MARL problems due to utilization of a fixed reward model for learning the underlying value function. While Deep Neural Network (DNN)-based solutions perform well, they are still prone to overfitting, high sensitivity to parameter selection, and sample inefficiency. In this paper, an adaptive Kalman Filter (KF)-based framework is introduced as an efficient alternative to address the aforementioned problems by capitalizing on unique characteristics of KF such as uncertainty modeling and online second order learning. More specifically, the paper proposes the Multi-Agent Adaptive Kalman Temporal Difference (MAK-TD) framework and its Successor Representation-based variant, referred to as the MAK-SR. The proposed MAK-TD/SR frameworks consider the continuous nature of the action-space that is associated with high dimensional multi-agent environments and exploit Kalman Temporal Difference (KTD) to address the parameter uncertainty. The proposed MAK-TD/SR frameworks are evaluated via several experiments, which are implemented through the OpenAI Gym MARL benchmarks. In these experiments, different number of agents in cooperative, competitive, and mixed (cooperative-competitive) scenarios are utilized. The experimental results illustrate superior performance of the proposed MAK-TD/SR frameworks compared to their state-of-the-art counterparts.


Open Access Article

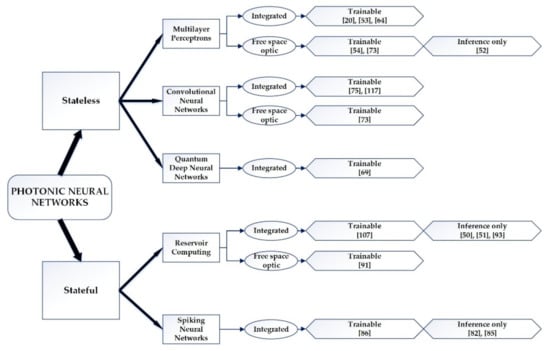

A Comprehensive Survey on Nanophotonic Neural Networks: Architectures, Training Methods, Optimization, and Activations Functions

by

Konstantinos Demertzis, Georgios D. Papadopoulos, Lazaros Iliadis and Lykourgos Magafas

Cited by 4 | Viewed by 3800

Abstract


In the last years, materializations of neuromorphic circuits based on nanophotonic arrangements have been proposed, which contain complete optical circuits, laser, photodetectors, photonic crystals, optical fibers, flat waveguides and other passive optical elements of nanostructured materials, which eliminate the time of simultaneous processing of big groups of data, taking advantage of the quantum perspective, and thus highly increasing the potentials of contemporary intelligent computational systems. This article is an effort to record and study the research that has been conducted concerning the methods of development and materialization of neuromorphic circuits of neural networks of nanophotonic arrangements. In particular, an investigative study of the methods of developing nanophotonic neuromorphic processors, their originality in neuronic architectural structure, their training methods and their optimization was realized along with the study of special issues such as optical activation functions and cost functions. The main contribution of this research work is that it is the first time in the literature that the most well-known architectures, training methods, optimization and activations functions of the nanophotonic networks are presented in a single paper. This study also includes an extensive detailed meta-review analysis of the advantages and disadvantages of nanophotonic networks.


Open Access Article

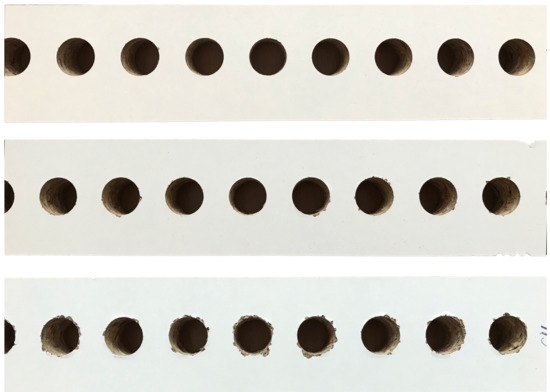

Multiclass Image Classification Using GANs and CNN Based on Holes Drilled in Laminated Chipboard

by

Grzegorz Wieczorek, Marcin Chlebus, Janusz Gajda, Katarzyna Chyrowicz, Kamila Kontna, Michał Korycki, Albina Jegorowa and Michał Kruk

Cited by 8 | Viewed by 2754

Abstract


A multiclass prediction approach to the problem of recognizing the state of the drill by classifying images of drilled holes into three classes is presented. Expert judgement was made on the basis of the quality of the hole, by dividing the collected photographs into the classes: “very fine,” “acceptable,” and “unacceptable.” The aim of the research was to create a model capable of identifying different levels of quality of the holes, where the reduced quality would serve as a warning that the drill is about to wear down. This could reduce the damage caused by a blunt tool. To perform this task, real-world data were gathered, normalized, and scaled down, and additional instances were created with the use of data-augmentation techniques, a self-developed transformation, and generative adversarial networks. This approach also allowed us to achieve a slight rebalance of the dataset, by creating higher numbers of images belonging to the less-represented classes. The datasets generated were then fed into a series of convolutional neural networks, with different numbers of convolution layers used, modelled to carry out the multiclass prediction. The performance of the so-designed model was compared to predictions generated by Microsoft’s Custom Vision service, trained on the same data, which was treated as the benchmark. Several trained models obtained by adjusting the structure and hyperparameters of the model were able to provide better recognition of less-represented classes than the benchmark.


Open Access Article

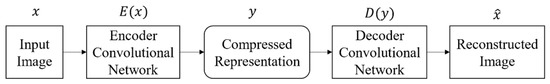

A Convolutional Autoencoder Topology for Classification in High-Dimensional Noisy Image Datasets

by

Emmanuel Pintelas, Ioannis E. Livieris and Panagiotis E. Pintelas

Cited by 26 | Viewed by 3359

Abstract


Deep convolutional neural networks have shown remarkable performance in the image classification domain. However, Deep Learning models are vulnerable to noise and redundant information encapsulated into the high-dimensional raw input images, leading to unstable and unreliable predictions. Autoencoders constitute an unsupervised dimensionality reduction technique, proven to filter out noise and redundant information and create robust and stable feature representations. In this work, in order to resolve the problem of DL models’ vulnerability, we propose a convolutional autoencoder topological model for compressing and filtering out noise and redundant information from initial high dimensionality input images and then feeding this compressed output into convolutional neural networks. Our results reveal the efficiency of the proposed approach, leading to a significant performance improvement compared to Deep Learning models trained with the initial raw images.


Open Access Article

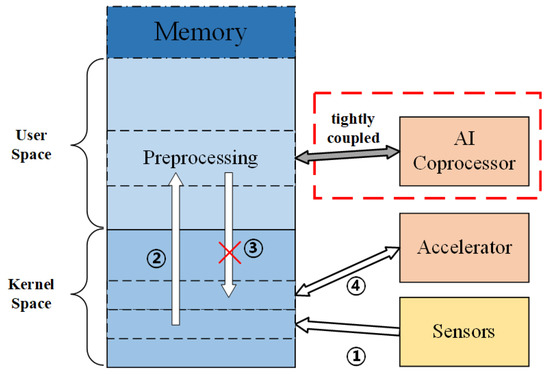

A Heterogeneous RISC-V Processor for Efficient DNN Application in Smart Sensing System

by

Haifeng Zhang, Xiaoti Wu, Yuyu Du, Hongqing Guo, Chuxi Li, Yidong Yuan, Meng Zhang and Shengbing Zhang

Cited by 5 | Viewed by 3680

Abstract


Extracting features from sensing data on edge devices is a challenging application for which deep neural networks (DNN) have shown promising results. Unfortunately, the general micro-controller-class processors that are widely used in sensing systems fail to achieve real-time inference. Accelerating the compute-intensive DNN inference is, therefore, of utmost importance. Owing to the physical limitations of sensing devices, the processor design needs to meet balanced performance metrics, including low power consumption, low latency, and flexible configuration. In this paper, we propose a lightweight pipeline-integrated deep learning architecture, which is compatible with open-source RISC-V instructions. The dataflow of the DNN is organized by the very long instruction word (VLIW) pipeline. It is combined with the proposed special intelligent enhanced instructions and the single instruction multiple data (SIMD) parallel processing unit. Experimental results show that total power consumption is about 411 mW and the power efficiency is about 320.7 GOPS/W.


Open Access Article

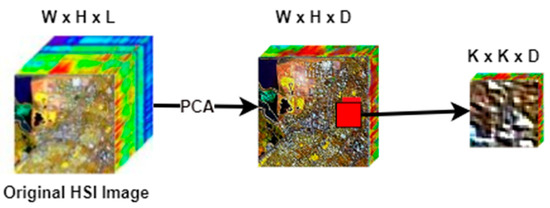

Hyperspectral Image Classification Using Deep Genome Graph-Based Approach

by

Haron Tinega, Enqing Chen, Long Ma, Richard M. Mariita and Divinah Nyasaka

Cited by 4 | Viewed by 4207

Abstract


Recently developed hybrid models that stack 3D with 2D CNN in their structure have enjoyed high popularity due to their appealing performance in hyperspectral image classification tasks. On the other hand, biological genome graphs have demonstrated their effectiveness in enhancing the scalability and accuracy of genomic analysis. We propose an innovative deep genome graph-based network (GGBN) for hyperspectral image classification to tap the potential of hybrid models and genome graphs. The GGBN model utilizes 3D-CNN at the bottom layers and 2D-CNNs at the top layers to process spectral–spatial features vital to enhancing the scalability and accuracy of hyperspectral image classification. To verify the effectiveness of the GGBN model, we conducted classification experiments on Indian Pines (IP), University of Pavia (UP), and Salinas Scene (SA) datasets. Using only 5% of the labeled data for training over the SA, IP, and UP datasets, the classification accuracy of GGBN is 99.97%, 96.85%, and 99.74%, respectively, which is better than the compared state-of-the-art methods.


Open Access Article

Boosting Intelligent Data Analysis in Smart Sensors by Integrating Knowledge and Machine Learning

by

Piotr Łuczak, Przemysław Kucharski, Tomasz Jaworski, Izabela Perenc, Krzysztof Ślot and Jacek Kucharski

Cited by 8 | Viewed by 2410

Abstract

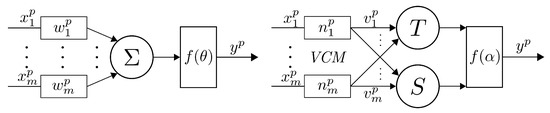


The presented paper proposes a hybrid neural architecture that enables intelligent data analysis efficacy to be boosted in smart sensor devices, which are typically resource-constrained and application-specific. The postulated concept integrates prior knowledge with learning from examples, thus allowing sensor devices to be used for the successful execution of machine learning even when the volume of training data is highly limited, using compact underlying hardware. The proposed architecture comprises two interacting functional modules arranged in a homogeneous, multiple-layer architecture. The first module, referred to as the knowledge sub-network, implements knowledge in the Conjunctive Normal Form through a three-layer structure composed of novel types of learnable units, called L-neurons. In contrast, the second module is a fully-connected conventional three-layer, feed-forward neural network, and it is referred to as a conventional neural sub-network. We show that the proposed hybrid structure successfully combines knowledge and learning, providing high recognition performance even for very limited training datasets, while also benefiting from an abundance of data, as it occurs for purely neural structures. In addition, since the proposed L-neurons can learn (through classical backpropagation), we show that the architecture is also capable of repairing its knowledge.


Open Access Communication

Decision Confidence Assessment in Multi-Class Classification

by

Michał Bukowski, Jarosław Kurek, Izabella Antoniuk and Albina Jegorowa

Cited by 8 | Viewed by 3599

Abstract


This paper presents a novel approach to the assessment of decision confidence when multi-class recognition is concerned. When many classification problems are considered, while eliminating human interaction with the system might be one goal, it is not the only possible option—lessening the workload of human experts can also bring huge improvement to the production process. The presented approach focuses on providing a tool that will significantly decrease the amount of work that the human expert needs to conduct while evaluating different samples. Instead of hard classification, which assigns a single label to each class, the described solution focuses on evaluating each case in terms of decision confidence—checking how sure the classifier is in the case of the currently processed example, and deciding if the final classification should be performed, or if the sample should instead be manually evaluated by a human expert. The method can be easily adjusted to any number of classes. It can also focus either on the classification accuracy or coverage of the used dataset, depending on user preferences. Different confidence functions are evaluated in that aspect. The results obtained during experiments meet the initial criteria, providing an acceptable quality for the final solution.
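The idea of deferring low-confidence samples to a human expert, and the trade-off between accuracy and coverage, can be sketched as follows. The confidence function used here (the largest class probability) and the fixed threshold are assumptions for illustration only; the abstract says several confidence functions were evaluated but does not name them.

```python
def decide(probabilities, threshold):
    """Return the predicted class index, or None to defer to a human expert.

    Confidence is taken to be the largest class probability, which is only
    one possible confidence function (an assumption, not the paper's choice).
    """
    best = max(range(len(probabilities)), key=probabilities.__getitem__)
    if probabilities[best] >= threshold:
        return best
    return None


def coverage_and_accuracy(prob_rows, labels, threshold):
    """Coverage: share of samples classified automatically.

    Accuracy is computed only over those automatically classified samples;
    it is None when every sample is deferred.
    """
    decisions = [decide(row, threshold) for row in prob_rows]
    accepted = [(d, y) for d, y in zip(decisions, labels) if d is not None]
    coverage = len(accepted) / len(labels)
    if not accepted:
        return coverage, None
    accuracy = sum(1 for d, y in accepted if d == y) / len(accepted)
    return coverage, accuracy
```

Raising the threshold typically lowers coverage (more samples go to the expert) while raising accuracy on the samples that remain, which is the user-selectable trade-off the abstract describes.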


Open Access Article

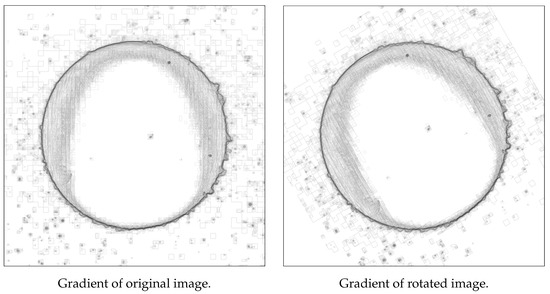

XCycles Backprojection Acoustic Super-Resolution

by

Feras Almasri, Jurgen Vandendriessche, Laurent Segers, Bruno da Silva, An Braeken, Kris Steenhaut, Abdellah Touhafi and Olivier Debeir

Cited by 2 | Viewed by 2400

Abstract


The computer vision community has paid much attention to the development of visible image super-resolution (SR) using deep neural networks (DNNs) and has achieved impressive results. The advancement of non-visible light sensors, such as acoustic imaging sensors, has attracted much attention, as they allow people to visualize the intensity of sound waves beyond the visible spectrum. However, because of the limitations imposed on acquiring acoustic data, new methods for improving the resolution of the acoustic images are necessary. At this time, there is no acoustic imaging dataset designed for the SR problem. This work proposed a novel backprojection model architecture for the acoustic image super-resolution problem, together with Acoustic Map Imaging VUB-ULB Dataset (AMIVU). The dataset provides large simulated and real captured images at different resolutions. The proposed XCycles BackProjection model (XCBP), in contrast to the feedforward model approach, fully uses the iterative correction procedure in each cycle to reconstruct the residual error correction for the encoded features in both low- and high-resolution space. The proposed approach was evaluated on the dataset and showed high outperformance compared to the classical interpolation operators and to the recent feedforward state-of-the-art models. It also contributed to a drastically reduced sub-sampling error produced during the data acquisition.


Open Access Article

A Novel Approach to Image Recoloring for Color Vision Deficiency

by

George E. Tsekouras, Anastasios Rigos, Stamatis Chatzistamatis, John Tsimikas, Konstantinos Kotis, George Caridakis and Christos-Nikolaos Anagnostopoulos

Cited by 11 | Viewed by 4059

Abstract

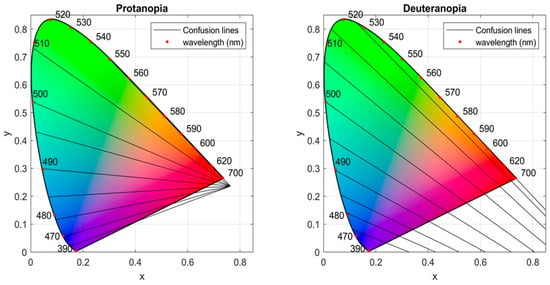


In this paper, a novel method to modify color images for the protanopia and deuteranopia color vision deficiencies is proposed. The method admits certain criteria, such as preserving image naturalness and color contrast enhancement. Four modules are employed in the process. First, fuzzy clustering-based color segmentation extracts key colors (which are the cluster centers) of the input image. Second, the key colors are mapped onto the CIE 1931 chromaticity diagram. Then, using the concept of confusion line (i.e., loci of colors confused by the color-blind), a sophisticated mechanism translates (i.e., removes) key colors lying on the same confusion line to different confusion lines so that they can be discriminated by the color-blind. In the third module, the key colors are further adapted by optimizing a regularized objective function that combines the aforementioned criteria. Fourth, the recolored image is obtained by color transfer that involves the adapted key colors and the associated fuzzy clusters. Three related methods are compared with the proposed one, using two performance indices, and evaluated by several experiments over 195 natural images and six digitized art paintings. The main outcomes of the comparative analysis are as follows. (a) Quantitative evaluation based on nonparametric statistical analysis is conducted by comparing the proposed method to each one of the other three methods for protanopia and deuteranopia, and for each index. In most of the comparisons, the Bonferroni adjusted p-values are <0.015, favoring the superiority of the proposed method. (b) Qualitative evaluation verifies the aesthetic appearance of the recolored images. (c) Subjective evaluation supports the above results.


Planned Papers

The list below represents only planned manuscripts. Some of these manuscripts have not been received by the Editorial Office yet. Papers submitted to MDPI journals are subject to peer-review.

Title: An IoT enabled platform for the assessment of physical and mental activity utilizing augmented reality exergaming

Authors: Dionysios Koulouris, Andreas Menychtas and Ilias Maglogiannis

Affiliation: Department of Digital Systems, University of Piraeus, 80, M. Karaoli & A. Dimitriou St., 18534 Piraeus, Greece; Bioassist SA

Title: Intelligent edge computing providing AI services with ease

Authors: Kwihoon Kim

Affiliation: Korea National University of Education (KNUE)