On Thermodynamic Interpretation of Transfer Entropy

Abstract

1. Introduction

2. Definitions

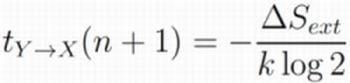

2.1. Transfer Entropy
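Schreiber's transfer entropy from a source Y to a target X measures how much the source's past reduces uncertainty about the target's next state, beyond what the target's own past already provides: T(Y→X) = Σ p(x_{n+1}, x_n, y_n) log2 [p(x_{n+1} | x_n, y_n) / p(x_{n+1} | x_n)]. The Python sketch below is a plug-in estimate of this quantity for discrete series. It makes two simplifying assumptions: history lengths k = l = 1, and probabilities replaced by empirical frequencies. The function name `transfer_entropy` is hypothetical, and plug-in estimates can be noticeably biased for short series.

```python
from collections import Counter
from math import log2


def transfer_entropy(source, target):
    """Plug-in estimate (bits) of T(Y->X) with history lengths k = l = 1.

    Assumes discrete-valued, stationary series of equal length; empirical
    frequencies stand in for the true probabilities.
    """
    if len(source) != len(target):
        raise ValueError("series must have equal length")
    # Joint observations (x_{n+1}, x_n, y_n) for n = 0 .. N-2
    triples = list(zip(target[1:], target[:-1], source[:-1]))
    n = len(triples)
    c_xxy = Counter(triples)                         # (x_{n+1}, x_n, y_n)
    c_xy = Counter((x, y) for _, x, y in triples)    # (x_n, y_n)
    c_xx = Counter((x1, x) for x1, x, _ in triples)  # (x_{n+1}, x_n)
    c_x = Counter(x for _, x, _ in triples)          # x_n
    te = 0.0
    for (x1, x, y), c in c_xxy.items():
        p_full = c / c_xy[(x, y)]           # p(x_{n+1} | x_n, y_n)
        p_past = c_xx[(x1, x)] / c_x[x]     # p(x_{n+1} | x_n)
        te += (c / n) * log2(p_full / p_past)
    return te
```

Consider a target that copies a random binary source with a one-step lag. Its value here should be close to 1 bit, because the source fully determines the next target state, which the target's own past cannot predict. A constant target gives exactly 0.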

2.2. Local Transfer Entropy
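Local transfer entropy (Lizier et al.) is the pointwise term inside Schreiber's average: t(n+1) = log2 [p(x_{n+1} | x_n, y_n) / p(x_{n+1} | x_n)]. Transfer entropy is its expectation over the process. The local value is negative when, at that time step, the source makes the observed next state of the target look less likely ("misinformative" transfer). The sketch below rests on the same stated assumptions as a plain plug-in estimator: discrete series, k = l = 1, and empirical frequencies. The name `local_transfer_entropy` is hypothetical. Because the probabilities are estimated from the whole series, the mean of the returned values equals the plug-in average.

```python
from collections import Counter
from math import log2


def local_transfer_entropy(source, target):
    """Local TE values t(n+1) in bits for n = 0 .. N-2, with k = l = 1.

    Plug-in probabilities are estimated from the whole series, so the mean
    of the returned list equals the plug-in (average) transfer entropy.
    """
    if len(source) != len(target):
        raise ValueError("series must have equal length")
    triples = list(zip(target[1:], target[:-1], source[:-1]))
    c_xxy = Counter(triples)
    c_xy = Counter((x, y) for _, x, y in triples)
    c_xx = Counter((x1, x) for x1, x, _ in triples)
    c_x = Counter(x for _, x, _ in triples)
    local = []
    for x1, x, y in triples:
        p_full = c_xxy[(x1, x, y)] / c_xy[(x, y)]  # p(x_{n+1} | x_n, y_n)
        p_past = c_xx[(x1, x)] / c_x[x]            # p(x_{n+1} | x_n)
        # Negative when the source value lowers the probability of the
        # observed next state ("misinformative" at this time step)
        local.append(log2(p_full / p_past))
    return local
```

Keeping the whole time series of local values, rather than only their average, is what lets local transfer entropy act as a spatiotemporal filter that highlights where and when information is transferred.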

2.3. Causal Effect as Information Flow

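(A path between two nodes is blocked by a set of nodes U if there is a node v on the path such that either:

- v ∈ U and the causal links through v on the path are not both into v, or

- the causal links through v on the path are both into v, and v and all its causal descendants are not in U.)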
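The blocking criterion can be checked mechanically. A node v on a path is a "collider" when both path links point into it. A non-collider blocks the path if it is in U. A collider blocks it only if neither v nor any of its causal descendants is in U.

The sketch below makes four assumptions:
- the causal network is given as a list of directed (parent, child) edges;
- the path is a list of adjacent nodes;
- only interior nodes of the path are tested;
- the names `descendants` and `is_blocked` are hypothetical.

```python
def descendants(edges, node):
    """All causal descendants of `node` (excluding itself) in a DAG of (parent, child) edges."""
    children = {}
    for parent, child in edges:
        children.setdefault(parent, set()).add(child)
    seen, stack = set(), [node]
    while stack:
        for c in children.get(stack.pop(), ()):
            if c not in seen:
                seen.add(c)
                stack.append(c)
    return seen


def is_blocked(edges, path, U):
    """True if the path (list of adjacent nodes) is blocked by the node set U."""
    edge_set = set(edges)
    for i in range(1, len(path) - 1):  # interior nodes only
        prev, v, nxt = path[i - 1], path[i], path[i + 1]
        # Collider: both causal links on the path point into v
        collider = (prev, v) in edge_set and (nxt, v) in edge_set
        if not collider and v in U:
            return True
        if collider and v not in U and not (descendants(edges, v) & U):
            return True
    return False
```

In a chain A → B → C, conditioning on B blocks the path. In a collider A → C ← B, the path is blocked by the empty set but unblocked by conditioning on C or on any descendant of C.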

2.4. Local Information Flow

3. Preliminaries

3.1. System Definition

3.2. Entropy Definitions

3.3. Transition Probabilities

3.4. Entropy Production

3.5. Range of Applicability

3.6. An Example: Random Fluctuation Near Equilibrium

4. Transfer Entropy: Thermodynamic Interpretation

4.1. Transitions Near Equilibrium

4.2. Transfer Entropy as Entropy Production

4.3. Transfer Entropy as a Measure of Equilibrium’s Stability

4.4. Heat Transfer

5. Causal Effect: Thermodynamic Interpretation?

6. Discussion and Conclusions

Acknowledgements


© 2013 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution license (http://creativecommons.org/licenses/by/3.0/).

Prokopenko, M.; Lizier, J.T.; Price, D.C. On Thermodynamic Interpretation of Transfer Entropy. Entropy 2013, 15, 524-543. https://doi.org/10.3390/e15020524