1. Introduction

Suppose that there is a party, say Alice, who prepares her system in a particular state. The state is chosen from a set of states that have been publicly declared. The system is then given to the other party, called Bob, who then applies a measurement to find which state has been prepared in among the possibilities. This scenario defines the problem of optimal state discrimination that seeks the guessing probability,

i.e., the maximum probability that Bob can correctly guess the state that has been prepared by Alice, as well as the optimal measurement that achieves the guessing probability. Optimal state discrimination shows that there is a fundamental limit in the distinguishability of systems. This problem constitutes one of the most fundamental measures in information theory with deep connection to applications in quantum information processing [

1,

2,

3].

Generalized probabilistic theories (GPTs) capture the formalism of the convex operational framework, in which operational significances of states, effects, and dynamics can be identified and characterized, respectively [

4,

5,

6], see also a recent review [

7]. States are elements of a convex set, effects are postulated to map states into probabilities and present probabilities measures, and dynamics constrains possible evolution of states. In quantum theory, states correspond to non-negative and unit-trace bounded operators on Hilbert spaces, effects are postulated such that product of positive-operator-valued-measures and states results to probabilities, and dynamics is generally described by positive and completely positive maps. GPTs are of fundamental interest, particularly within the foundations of quantum information theory and they also useful for identifying specific properties of states or effects that have operational significances. For instance, in quantum theory, the fact that quantum states cannot be perfectly cloned may be found as one of properties associated with the Hilbert spaces, e.g., non-orthogonality of state vectors. However, the no-cloning theorem does not necessarily rely on the structure of Hilbert spaces and in fact, GPTs which have violations of Bell inequalities can also incorporate the no-cloning theorem [

8].

Recently, optimal state discrimination in GPTs has been considered and it has been shown that it is tightly connected to ensemble steering of states and the no-signaling principle [

9]. Specifically, in a GPT where ensemble steering is possible, the no-signaling principle can determine optimal state discrimination. This also holds true in quantum theory, where the no-signaling principle elucidates the relation between optimal state discrimination and quantum cloning [

10]. Given that ensemble steering itself does not single out quantum theory [

11], the result is valid even beyond quantum theory as long as ensemble steering is allowed in a theory. That is, GPTs are a useful theoretical tool to find operational relations that may play a key role in quantum information applications [

7].

In this work, we investigate general properties of optimal state discrimination in GPTs and present a method of optimal state discrimination based on the convex geometry of a state space. After briefly introducing the framework of GPTs and optimal state discrimination, we formalize optimal state discrimination within the convex optimization framework. We show that primal and dual problems return the idential result, and thus formulate the problem in the form of the complementarity problem that gives a generalization of the optimization problems. This then allows us to derive a geometric method of state discrimination. We consider an example of GPTs, the polygon states, and apply the geometric formulation to optimal discrimination. We identify those properties that optimal quantum state discrimination shares with GPTs: (i) optimal measurement is not unique in general, and (ii) no measurement can sometimes give optimal state discrimination.

The present paper is structured as follows. We first review the framework of GPTs and optimal state discrimination, and then formulate optimal state discrimination in the convex optimization framework. We show that primal and dual problems result in the same solutions, due to the strong duality in the problem. We then apply the complementarity problem that generalizes the primal and the dual problems, and derive the method of optimal state discrimination. The polygon system is considered as examples of GPTs, and we apply the method to optimal discrimination of polygon states.

2. Optimal State Discrimination in GPTs

We briefly summarise GPTs [

4,

5,

6] and formulate optimal state discrimination as a convex optimization problem. In particular, we apply the complementarity problem and then present a method of optimal discrimination based on the convex geometry of states.

2.1. Generalized Probabilistic Theories

As it has been mentioned, a GPT contains states and effects such that they produce probabilities. Any convex set can be a state space. A set of states, denoted by Ω, consists of all possible states that a system can be prepared in. Any probabilistic mixture of states, i.e., for and probability p is also a state, and thus the set is convex. A general mapping from states to probabilities is described by effects, linear functionals . A measurement denoted by s is described by a set of effects, , with which the probability of getting outcome for measurement s when state w is given is given by . A unit effect u is introduced so that states are mapped to probabilities by effects: once a measurement occurs, we have for all . Thus, it holds that for any measurement s, we have . As effects are dual to the state space, they are also convex.

2.2. State Discrimination in Convex Optimization

Optimal state discrimination in GPTs can be described by a game of two parties, Alice and Bob, as follows. Suppose that they have agreed on a set of N states in advance, and then Alice prepares a system in one of the N states with some probability and gives it to Bob. Note also that the a priori probabilities are known publicly. Given that the set of states and a priori probabilities are known, Bob applies measurement and attempts to guess which one has been prepared by Alice. If he makes a correct guess, the score is given 1, and 0 otherwise. The goal is to maximize the average score by optimizing measurements.

Let us label the

N states by

and their prior probabilities by

, so that together they can be expressed as

. Bob seeks optimal measurement

that fulfills the condition

, in such a way that he makes guesses for each effect

. Let

denotes the probability that Bob makes a guess

from effect

corresponding to the state

given by Alice. Optimal state discrimination allows us to determine the

guessing probability, the maximum success probability that Bob makes correct guesses on average, with

where the maximization runs over all effects. Note that GPTs are generally not self-dual, that is, two spaces of states and effects are in general not isomorphic [

12].

A Convex Optimization Framework

We recall that the state space Ω is convex, and so is its dual, the space of effects, leading naturally to the following optimization problem:

where by

it is meant that

for all

. Note that the above problem is feasible as the set of parameters satisfying constraints is not empty. The trivial solution can be

for a single

and

for

. For convenience, we follow the notation in [

13], and rewrite the maximization problem in the above as minimization,

It is then straightforward to derive the dual problem to this. Let us write down the Lagrangian as follows,

where

and

K are dual parameters. Note that

are constants and

are normalized states. The dual problem can be obtained by solving the following,

The minimization in the above is given as

if

for each

, and

otherwise. Thus, we have

for each

. Since

is a (unnormalized) state, it is positive, that is

for all effects

e. We write this as,

for each

. The dual problem is thus as follows,

or, equivalently,

In the above, the inequality means an order relation in a convex cone, which is determined by effects, that is, for all effects e. Note also that the dual problem is also feasible: the trivial solution would be .

2.3. Constraint Qualification

Recall that, in general, the primal and the dual problems do not return an identical solution but there can be a finite gap between solutions of the two problems. In the case of state discrimination in the above, both problems in the above are feasible. This means that from Slater’s constraint qualification, the strong duality holds. Hence, no gap exists between the solutions, and in other words, one can get the optimal solution by solving either of the primal or the dual problems.

In addition, the strong duality also implies that the list of optimality conditions, the so-called Karush-Kuhn-Tucker (KKT) conditions, are also sufficient. That is, parameters satisfying KKT conditions provide optimal solutions in both primal and dual problems. For the optimization problems in the above, The KKT conditions are, together with constraints in both primal and dual problems, as follows,

The former one is called Lagrangian stability, and the latter one the complementary slackness. The fact that the strong duality holds also guarantees that there exist dual parameters

K and

that fulfill KKT conditions, and then those parameters give optimal solutions to the primal and the dual problems. Here, the optimal effects are also characterized by the complementary slackness in the above. This also shows existence of optimal effects or observables in a GPT. All these follow from the fact that the state space is convex. For comparison with quantum cases, the formulation for minimum-error discrimination has been shown in [

14], and see also its applications to various forms of figures of merit in [

15].

To summarize, the sole fact that state and effect spaces are convex allows us to formalize the discrimination problem in the convex optimization framework [

13]. This in fact provides a general approach of finding optimal discrimination in GPTs. For states

, we take the form in Equation (

1) as the primal problem denoted by

and derive its dual

, as follows,

where inequalities mean the order relation in the convex set: by

, it is meant that

for all

, and by

, that

for all effects

e.

For the primal and dual problems in Equations (

4) and (

5), the property called the strong duality holds true. This means that the two problems have an identical solution,

i.e.,

, and therefore one can obtain the guessing problem by solving either of the problems. The strong duality can be shown from the so-called Slater’s constraint quantification in convex optimization. A sufficient condition for the strong duality is the strict feasibility to either of primal or dual problems, that is, the existence of a strictly feasible point of parameters. For instance, primal parameters

are in the case, since

and

. From this, it is shown that the guessing probability can be obtained from either the primal or the dual problem.

2.4. The Complementarity Problem

In convex optimization, there is another approach called

the complementarity problem that generalizes primal and dual problems. It collects optimality conditions and analyzes them directly. Consequently, the complementarity problem deals with both primal and dual parameters in Equations (

4) and (

5) and find all of optimal parameters. In this sense, the approach is generally not considered more efficient than primal or dual problems. The advantage, actually, lies at the fact that generic structures existing in an optimization problem are found and exploited.

The optimality conditions for optimal state discrimination in Equations (

4) and (

5) can be summarized by the so-called Karush-Kuhn-Tucker (KKT) conditions, which are constraints listed in Equations (

4) and (

5), together with the followings,

where

for all

, and

are normalized states,

i.e.,

. We call

complementary states that construct the symmetry operator. Two conditions in the above are explained in terms of the convex geometry of given states, as follows.

The first condition, symmetry parameter, follows from the Lagrangian stability and shows that for any discrimination problem e.g.,

, there exists a single parameter

K which is decomposed into

N different ways with given states and complementary states

. Then, the second condition in Equation (

7) from the complementary slackness characterizes optimal effects by the orthogonality relation between complementary states and optimal effects. These generalize optimality conditions from quantum cases to all GPTs, see also various forms of optimality conditions in quantum cases [

14].

Primal and dual parameters satisfying the KKT conditions are automatically optimal parameters that provide solutions in the primal and the dual problems. Note also that, since the strong duality holds, both problems show the same solution. Conversely, the fact that the strong duality holds in Equations (

4) and (

5) implies the existence of optimal parameters which satisfy KKT conditions and give the guessing probability in Equation (

1).

Note that a similar approach has been made in [

16] in the form of the so-called Helstrom family, by generalising examples in quantum cases to GPTs. For quantum state discrimination, the approach based on the complementarity problem has been firstly applied in [

17,

18] for two qubit states. This has been generalised to a pair of arbitrary states in GPTs [

19]. When this result is generalized to arbitrary number of states in GPTs, however, the existence of the symmetry operator and the orhogonality conditions has been only assumed [

16]: in particular, those cases for which the optimal parameters exist are called Helstrom families. Here, we apply the complementarity problem that immediately proves the existence of optimal parameters.

2.5. The Geometric Method and the General Form of the Guessing Probability

We are now ready to present a geometric method of solving minimum-error state discrimination within the framework of GPTs for the complementarity problem. We first observe that, for optimality conditions in Equations (

6) and (

7), constraints for states and effects are separated. The symmetry parameter

K is characterized on a state space and gives the guessing probability, see Equation (

5), that is,

This means that one can find the guessing probability from a state space. To do this, one has to find the symmetry operator

K such that it is decomposed into a given state

and complementary states

in the state space. Or, equivalently, one has to search complementary states

fulfilling Equation (

6) on the state space.

Let us introduce a convex polytope denoted by

of given states in the state space: each vertex of the polytope corresponds to state

for

. Then, the polytope of complementary states,

, is immediately congruent to

in the state space: from Equation (

6) the following holds,

which shows that corresponding lines of two polytopes

and

are of equal lengths and anti-parallel. Then, from the convex geometry of the state space, one can find the polytope of complementary states as well as complementary states by putting two congruent polytopes such that the condition in Equation (

6) holds. Once complementary states are obtained, optimal effects can be found from the orthogonal relation in Equation (

7), accordingly.

Finally, let us provide a general form of the guessing probability in GPTs, when

a priori probabilities are equal,

i.e.,

for all

. In this case, the guessing probability is in a simpler form and show its meaning with the convex geometry. First, from Equation (

8) we have

for any

. Since

, we have

for all

. Denoted by

for all

, the guessing probability has the form in the following

where the expression of

r follows from the condition in Equation (

9) with a distance measure

that can be defined in the state space. The parameter

r has a meaning as the ratio between two polytopes,

of given states, and

of complementary states.

3. Examples: Polygon States

We illustrate the method of optimal state discrimination in GPTs, with an example called the polygon systems in [

12]. We consider cases of three and four states and apply the geometric method of optimal state discrimination. It is straightforward to apply to

N states. The polygon system is in general given by

N states

,

where

. Effects are given by

as follows,

where the unit effect

and the map to probabilities is given by the Euclidean inner product between states and effects.

3.1. A Case of

Let us first consider the case

, in which states and effects are given as

One can easily check that

. We consider optimal state discrimination for

. Applying the geometric method and also from the geometry of the polygon system for

, see also

Figure 1, one can find it holds that

where

and the addition is computed in modulo 3.

Figure 1.

The polygon states for

are shown, see also Equation (

12). The three states form a regular triangle on the plane

. The effects in Equation (

13) are identical to the states.

Figure 1.

The polygon states for

are shown, see also Equation (

12). The three states form a regular triangle on the plane

. The effects in Equation (

13) are identical to the states.

Optimal measurement is therefore

since

, from the orthogonality condition in Equation (

7). Thus, we have

for

, and the guessing probability is found as

which leads to the perfect discrimination.

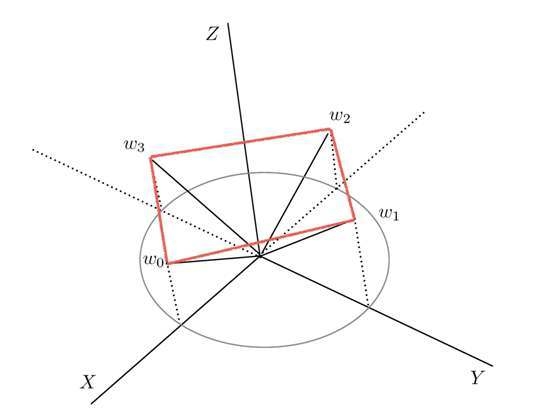

3.2. A Case of

We next consider the case

, in which states and effects are given as

For four states

, the goal is now to find the guessing probability and optimal measurement. Exploiting the convex geometry, see

Figure 2, the polytope

forms a rectangle, from which it follows that

from Equation (

10). To be precise, from the state space geometry, one can see that

where the complementary states are obtained as

. Thus, we have the guessing probability, from the primal problem in Equation (

6),

Figure 2.

The polygon states for

are shown, see also Equation (

14). The four states form a square on the plane

. The effects in Equation (

15) are located on the plane

.

Figure 2.

The polygon states for

are shown, see also Equation (

14). The four states form a square on the plane

. The effects in Equation (

15) are located on the plane

.

Note that these four states are analogous to cases in quantum theory, pairs of orthogonal states: for the four quantum states, the guessing probability is also given by

[

14].

Optimal measurement is obtained by using the orthogonality condition in Equation (

7). In fact, optimal measurement is not unique and the following effects give the guessing probability.

From optimal measurement shown in the above, we remark that properties of optimal quantum state discrimination also hold true in GPTs. First, optimal measurement of quantum state discrimination is generally not unique [

1], and the example in the above shows that this also holds true in GPTs. Moreover, optimal measurement for discriminating

N quantum states does not always contain output ports in the same number, that is,

N POVMs [

14,

20]. This also holds true in GPTs in general as shown above.

3.3. When No Measurement Is Optimal

We here show another property that quantum state discrimination shares with GPTs. Namely, no measurement is sometimes optimal in state discrimination. That is, applying no measurement but simply guessing the state from

a priori probabilities gives a guessing probability higher than any other strategies. This also holds true in GPTs. In the following, we provide an example from the result in the quantum case [

20].

Let us consider the four polygon states for in the above together with their mixture . Let denote a priori probabilities for states for , respectively, and for state . Hence, we consider optimal state discrimination for .

In particular, let us also assume that

. Then, one can find the optimal discrimination with the symmetry operator as follows,

with constants

. It is then straightforward to find

such that the equalities in Equation (

16) hold true. Note also that whenever

it holds that

. Then, the guessing probability is simply given as

, which can be made by guessing the state

with the

a priori probability, without measurement.

4. Conclusions

Optimal state discrimination is one of the most fundamental tasks in information theory, and also connected to information applications. For instance, in quantum cases it is the operational task that corresponds to the information-theoretic measure, the min-entropy [

21]. On the other hand, GPTs are of theoretical and fundamental interest such that states, effects, and dynamics are identified in a convex operational framework. Their operational significances can be found without detailed structures of a given theory, e.g., Hilbert spaces of quantum theory.

In the present work, we have considered optimal state discrimination in GPTs within the convex optimization framework. This generalizes the result in quantum cases where optimization runs over symmetric operators describing quantum states and measurements [

14]. Here, we have considered optimal state discrimination without such structures, and shown that the results in quantum cases, e.g., see [

14], are shared in GPTs in general. These include, firstly, the convex optimization and the complementariy problem, and then the method of optimal state discrimination with the convex geometry of state spaces. In particular, we has shown with the polygon systems how the method can be applied. We have shown that the followings hold true in GPTs in general: (i) optimal measurement is not unique, and (ii) no measurement can sometimes give optimal discrimination. The results may be useful in the operational characterization of quantum information processing, and we also envisage their usefulness in quantum information applications.