Vision-Based Pose Estimation for Robot-Mediated Hand Telerehabilitation

Abstract

1. Introduction

1.1. Telerehabilitation

1.2. Vision-Based Hand Pose Estimation

1.3. Vision-Based Hand Telerehabilitation

2. Experimental Section

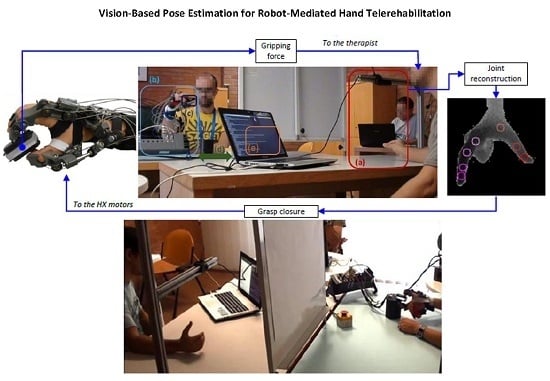

2.1. System Overview

2.1.1. Master Unit

Random Forests

Features

Training

Operator Hand Motion Estimation

2.1.2. Slave Unit

2.1.3. Communication

2.2. Master–Slave Control Strategy

| Grasp Type | MCP (deg) | P-DIP (deg) | MC-IP (deg) | CMC (deg) |
|---|---|---|---|---|
| Pinch | 0 | 0 | 0 | 0 |
| | 90 | 60 | 45 | 75 |
| Lateral | 0 | 0 | 0 | 0 |
| | 75 | 100 | 65 | 45 |

2.3. Experimental Design and Methods

3. Results and Discussion

| Grasp Type | Speed | | MCP (deg) | P-DIP (deg) | MC-IP (deg) | CMC (deg) |
|---|---|---|---|---|---|---|
| Pinch | Slow | Hz | 3.0 ± 0.5 | 4.2 ± 1.0 | 2.5 ± 0.4 | 2.4 ± 0.6 |
| | Medium | Hz | 4.8 ± 1.7 | 5.7 ± 1.5 | 3.2 ± 0.8 | 4.2 ± 2.3 |
| | Fast | Hz | 7.6 ± 3.7 | 8.2 ± 2.6 | 4.5 ± 1.8 | 7.1 ± 3.6 |
| Lateral | Slow | Hz | 5.1 ± 2.1 | 7.4 ± 3.7 | 4.4 ± 1.1 | 2.4 ± 0.6 |
| | Medium | Hz | 6.1 ± 4.4 | 9.0 ± 5.7 | 5.1 ± 2.0 | 2.5 ± 1.0 |
| | Fast | Hz | 8.1 | 12.1 | 6.7 | 2.8 |

4. Conclusions

Acknowledgments

Author Contributions

Conflicts of Interest

Abbreviations

| Abbreviation | Meaning |
|---|---|
| a/a | abduction/adduction |
| f/e | flexion/extension |
| DIP | Distal-Inter-Phalangeal |
| DoM | Degree of Mobility |
| GPU | Graphics Processing Unit |
| HRI | Human Robot Interaction |
| HX | Hand eXoskeleton |
| MCP | Meta-Carpo-Phalangeal |
| MC-IP | Meta-Carpo-Inter-Phalangeal |
| P-DIP | Proximal-Distal-Inter-Phalangeal |
| PIP | Proximal-Inter-Phalangeal |
| PSO | Particle Swarm Optimization |
| RGB-D | Red, Green, Blue, and Depth |
| RF | Random Forest |
| RMSE | Root-Mean-Squared Error |
| RT | Real Time |
| UDP/IP | User Datagram Protocol/Internet Protocol |
| VPE | Vision-Based Pose Estimation |
| VR | Virtual Reality |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Airò Farulla, G.; Pianu, D.; Cempini, M.; Cortese, M.; Russo, L.O.; Indaco, M.; Nerino, R.; Chimienti, A.; Oddo, C.M.; Vitiello, N. Vision-Based Pose Estimation for Robot-Mediated Hand Telerehabilitation. Sensors 2016, 16, 208. https://doi.org/10.3390/s16020208
