A Comprehensive Motion Estimation Technique for the Improvement of EIS Methods Based on the SURF Algorithm and Kalman Filter

Abstract

1. Introduction

2. Materials and Methods

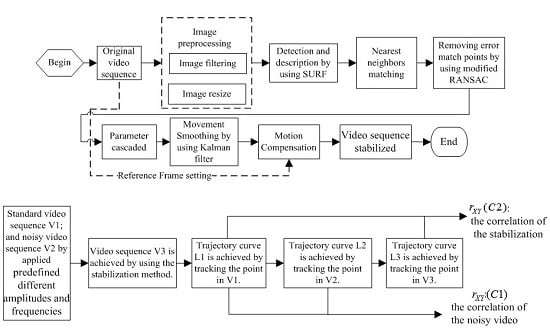

2.1. The Scheme of the EIS Method
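The title pairs SURF-based motion estimation with Kalman filtering. The sketch below shows only the Kalman stage, applied to one component (e.g. horizontal translation) of the cumulative global motion trajectory. It assumes a constant-velocity state model and uses hypothetical noise parameters `process_var` and `measurement_var`; neither the model nor these values is taken from the paper, and this excerpt does not say whether the paper filters the trajectory or the per-frame motion vectors. The filtered output approximates the intentional camera motion, and the difference between the filtered and raw trajectories gives the per-frame compensation shift.

```python
import numpy as np

def kalman_smooth_trajectory(raw_trajectory, process_var=1e-3, measurement_var=1e-1):
    """Filter a 1-D cumulative camera trajectory with a constant-velocity Kalman filter.

    Assumption: state = [position, velocity]; only position is measured.
    process_var and measurement_var are hypothetical tuning values.
    """
    z = np.asarray(raw_trajectory, dtype=float)
    F = np.array([[1.0, 1.0], [0.0, 1.0]])   # position += velocity each frame
    H = np.array([[1.0, 0.0]])               # measurement picks out position
    Q = process_var * np.eye(2)              # process noise covariance
    R = np.array([[measurement_var]])        # measurement noise covariance
    x = np.array([z[0], 0.0])                # start at first position, zero velocity
    P = np.eye(2)                            # initial state covariance
    out = np.empty_like(z)
    for k, zk in enumerate(z):
        # Predict step
        x = F @ x
        P = F @ P @ F.T + Q
        # Update step with the measured cumulative displacement zk
        y = zk - (H @ x)[0]                  # innovation
        S = (H @ P @ H.T + R)[0, 0]          # innovation variance
        K = (P @ H.T)[:, 0] / S              # Kalman gain, shape (2,)
        x = x + K * y
        P = (np.eye(2) - np.outer(K, H[0])) @ P
        out[k] = x[0]
    return out

# Usage: a steady pan of 0.5 px/frame corrupted by random jitter
rng = np.random.default_rng(0)
t = np.arange(200)
intended = 0.5 * t
raw = intended + rng.normal(0.0, 2.0, t.size)
smoothed = kalman_smooth_trajectory(raw)
correction = smoothed - raw                  # shift to apply to each frame
```

A larger `measurement_var` relative to `process_var` gives a smoother output at the cost of slower response to genuine changes in camera motion.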

2.2. Selection of Feature Point Detection Algorithms
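Whichever detector is selected, its matched feature-point pairs between consecutive frames are the input to global motion estimation. As an illustration only, the sketch below assumes a similarity inter-frame model (rotation, uniform scale, translation) fitted with Umeyama's closed-form least-squares method. `estimate_similarity` is a hypothetical helper, not the paper's code, and real SURF matches would first need outlier rejection (e.g. RANSAC), which is omitted here.

```python
import numpy as np

def estimate_similarity(src, dst):
    """Least-squares similarity transform with dst ~= s * R @ src + t (Umeyama).

    src, dst: (N, 2) matched point coordinates in the previous/current frame.
    Returns (scale, angle in radians, translation vector).
    """
    src = np.asarray(src, dtype=float)
    dst = np.asarray(dst, dtype=float)
    mu_s, mu_d = src.mean(axis=0), dst.mean(axis=0)
    sc, dc = src - mu_s, dst - mu_d
    cov = dc.T @ sc / len(src)                # 2x2 cross-covariance
    U, D, Vt = np.linalg.svd(cov)
    S = np.eye(2)
    if np.linalg.det(U) * np.linalg.det(Vt) < 0:
        S[1, 1] = -1.0                        # exclude reflections
    R = U @ S @ Vt
    scale = np.trace(np.diag(D) @ S) / np.mean(np.sum(sc ** 2, axis=1))
    t = mu_d - scale * R @ mu_s
    return scale, np.arctan2(R[1, 0], R[0, 0]), t

# Usage: synthetic matches under a known rotation, scale and shift
rng = np.random.default_rng(1)
src = rng.uniform(0.0, 640.0, size=(50, 2))
theta = 0.03
R_true = np.array([[np.cos(theta), -np.sin(theta)],
                   [np.sin(theta), np.cos(theta)]])
dst = 1.02 * src @ R_true.T + np.array([3.0, -2.0])
scale, angle, shift = estimate_similarity(src, dst)
```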

2.3. Quality Assessment by Using PSNR and Trajectory Tracking
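PSNR is the metric reported in dB in the results tables. A common convention in EIS evaluation, assumed here rather than taken from the paper, is to compute PSNR between consecutive frames, PSNR = 10 log10(255² / MSE), and average it over the sequence: a better-stabilized video has smaller inter-frame differences and therefore a higher value. A minimal NumPy sketch for 8-bit grayscale frames (the helper names are hypothetical):

```python
import numpy as np

def psnr(frame_a, frame_b, peak=255.0):
    """PSNR in dB between two equally sized frames; peak=255 assumes 8-bit data."""
    a = np.asarray(frame_a, dtype=np.float64)
    b = np.asarray(frame_b, dtype=np.float64)
    mse = np.mean((a - b) ** 2)
    if mse == 0.0:
        return float("inf")                   # identical frames
    return float(10.0 * np.log10(peak ** 2 / mse))

def mean_interframe_psnr(frames, peak=255.0):
    """Average PSNR over all consecutive frame pairs of a sequence."""
    values = [psnr(frames[k], frames[k + 1], peak) for k in range(len(frames) - 1)]
    return float(np.mean(values))

# Usage: a textured frame, with and without a simulated 3-pixel horizontal jitter
rng = np.random.default_rng(2)
base = rng.integers(0, 256, size=(120, 160)).astype(np.float64)
jittery = [base, np.roll(base, 3, axis=1), base]
steady = [base, base.copy(), base]
```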

3. Experimental Results and Discussion

3.1. Module Performance Testing

3.2. Accuracy Evaluation with Vibration Videos of Predefined Amplitudes

3.3. Performance Assessment Using a Vibration Video Sequence

3.4. Performance Assessment Using the Video Sequences with Increasing Vehicle Speed

4. Conclusions and Future Work

Acknowledgments

Author Contributions

Conflicts of Interest


| Segment No. | P | PSNR of Source Video/dB | PSNR of Stabilized Video/dB | | |
|---|---|---|---|---|---|
| 1 | 0.52% | 22.95 | 24.38 | 0.9962 | 0.9932 |
| 2 | 0.61% | 22.08 | 24.45 | 0.9900 | 0.9928 |
| 3 | 1.26% | 20.60 | 24.40 | 0.9734 | 0.9911 |
| 4 | 1.40% | 20.46 | 24.45 | 0.9615 | 0.9907 |
| 5 | 2.09% | 20.40 | 24.46 | 0.9626 | 0.9964 |

| No. | Vehicle Speed/(km/h) | Road Condition | PSNR of Source Video/dB | PSNR of Stabilized Video/dB |
|---|---|---|---|---|
| 1 | 20 | Stable concrete | 23.87 | 30.87 |
| | | Bumpy sand | 23.51 | 25.83 |
| | | Soft mud | 24.52 | 26.85 |
| 2 | 40 | Stable concrete | 23.46 | 27.28 |
| | | Bumpy sand | 22.45 | 24.77 |
| | | Soft mud | 24.34 | 27.32 |
| 3 | 60 | Stable concrete | 22.10 | 25.64 |

© 2016 by the authors; licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons by Attribution (CC-BY) license (http://creativecommons.org/licenses/by/4.0/).

Cheng, X.; Hao, Q.; Xie, M. A Comprehensive Motion Estimation Technique for the Improvement of EIS Methods Based on the SURF Algorithm and Kalman Filter. Sensors 2016, 16, 486. https://doi.org/10.3390/s16040486