Anti-Interference Aircraft-Tracking Method in Infrared Imagery

Abstract

1. Introduction

2. Distribution Field

3. Aircraft-Tracking Algorithm

3.1. Aircraft and Decoy Infrared Signatures

3.2. Region Proposal for Searching

3.3. Structural Feature Extraction

3.4. Model Matching

3.5. Occlusion Handling

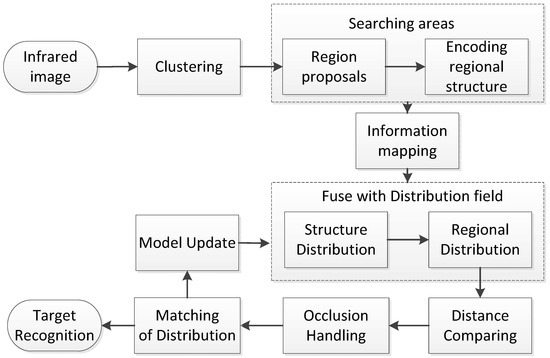

3.6. Our Aircraft-Tracking Algorithm

| Algorithm 1: Aircraft-tracking algorithm |
|---|
| Input: Image sequence. |
| Output: Aircraft location with bounding box. |
| 1: Generate region proposals by clustering. |
| 2: for n = 1 to m (m is the number of candidate regions) do |
| 3: Calculate gray distribution field. |
| 4: Compute structural distribution. |
| 5: Calculate similarity between target’s model and candidate region. |
| 6: end for |
| 7: Detect occlusion via measuring the variation of the model distance. |
| 8: Select a region with the minimal distance as the tracking region. |
| 9: Update the target’s model. |
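
The loop in Algorithm 1 can be illustrated with a minimal Python sketch. It is not the authors' implementation. It assumes the candidate regions are already available and resized to the model's shape (step 1 is not shown), it omits the structural distribution of step 4, and it uses an unsmoothed one-hot gray distribution field with an L1 distance. The names `distribution_field`, `field_distance`, `track_step`, and the parameters `bins`, `occlusion_jump`, and `rate` are all hypothetical.

```python
import numpy as np


def distribution_field(patch, bins=8):
    """Explode a gray patch (H, W), values in [0, 255], into a (bins, H, W) one-hot field."""
    idx = np.clip((patch.astype(np.int64) * bins) // 256, 0, bins - 1)
    field = np.zeros((bins,) + patch.shape, dtype=np.float64)
    rows, cols = np.indices(patch.shape)
    field[idx, rows, cols] = 1.0  # each pixel activates exactly one gray-level layer
    return field


def field_distance(a, b):
    """L1 distance summed over layers, averaged over pixels (range [0, 2] for one-hot fields)."""
    return float(np.abs(a - b).sum(axis=0).mean())


def track_step(candidates, model, prev_distance, occlusion_jump=0.5, rate=0.05):
    """Score every candidate against the model, pick the closest, update unless occluded.

    occlusion_jump and rate are assumed values chosen for illustration only.
    """
    fields = [distribution_field(c) for c in candidates]       # step 3
    distances = [field_distance(f, model) for f in fields]     # step 5
    best = int(np.argmin(distances))                           # step 8
    # Step 7 (assumed rule): a sudden rise of the best distance signals occlusion.
    occluded = prev_distance is not None and distances[best] - prev_distance > occlusion_jump
    if not occluded:
        model = (1.0 - rate) * model + rate * fields[best]     # step 9
    return best, distances[best], occluded, model


# Usage with synthetic data: the second candidate is an exact copy of the target.
rng = np.random.default_rng(0)
target = rng.integers(0, 256, size=(16, 16))
model = distribution_field(target)
candidates = [rng.integers(0, 256, size=(16, 16)), target.copy()]
best, dist, occluded, model = track_step(candidates, model, prev_distance=None)
```

Skipping the model update while the distance jumps is one simple way to keep a decoy or occluder from contaminating the target model; the threshold would need tuning on real sequences.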

4. Experiments and Discussions

4.1. Analyzing Regional-Distribution Tracker

4.1.1. Search Space and Feature Representations

4.1.2. Distance Normalization

4.2. Computational-Cost Analysis

4.3. Evaluating Tracking Benchmark

5. Conclusions

Author Contributions

Funding

Acknowledgments

Conflicts of Interest

| Trackers | Search Space | Feature Representations | Precision | Success |
|---|---|---|---|---|
| DFT | Local region with step size 1 | Gray-level-value distribution | 0.389 | 0.280 |
| DFT | Local region with step size 5 | Gray-level-value distribution | 0.446 | 0.302 |
| RDT-gd | Region proposal | Gray-level-value distribution | 0.937 | 0.770 |
| RDT | Region proposal | Regional distribution | 0.952 | 0.878 |

© 2019 by the authors. Licensee MDPI, Basel, Switzerland. This article is an open access article distributed under the terms and conditions of the Creative Commons Attribution (CC BY) license (http://creativecommons.org/licenses/by/4.0/).

Wu, S.; Zhang, K.; Niu, S.; Yan, J. Anti-Interference Aircraft-Tracking Method in Infrared Imagery. Sensors 2019, 19, 1289. https://doi.org/10.3390/s19061289
